If you’ve ever wondered how people are really feeling during a conversation, you’re not alone. By and large, we rely on a huge number of cues (body language, speech, eye contact, and a million others) to determine the feelings of others. It’s an inexact science, to say the least. Now, researchers at MIT have developed a wearable system to analyze the tone of a conversation.

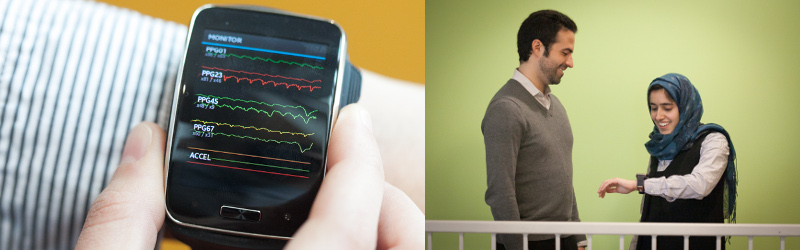

The system uses Samsung Simband wearables, which can measure several physiological markers (heart rate, blood pressure, blood flow, and skin temperature) as well as movement thanks to an on-board accelerometer. This data is fed into a neural network that was trained to classify a conversation as “happy” or “sad”. The training data consisted of 31 conversations, each several minutes long, in which participants were asked to tell a happy or sad story of their own choosing. The aim was to record more organic emotional states than those elicited by the “happy” or “sad” video clips typically used in similar studies.
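The write-up doesn’t say what kind of network MIT used or which features it pulled from the Simband, so what follows is only a toy sketch of the general idea: summarize each window of sensor readings as a feature vector, then train a binary happy/sad classifier on labeled windows. To keep it dependency-free, it swaps in the simplest possible “network”, a single logistic neuron (i.e. logistic regression) trained by gradient descent. The feature order, the function names (`standardize`, `train`, `predict`), and the numbers in the demo are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Assumed feature order for one window of sensor readings (hypothetical,
# not the MIT team's published feature set):
# [heart_rate, blood_pressure, blood_flow, skin_temp, accel_magnitude]
Row = Sequence[float]
Model = Tuple[List[float], float, List[float], List[float]]


def standardize(rows: List[Row]) -> Tuple[List[List[float]], List[float], List[float]]:
    """Rescale each feature to zero mean and unit variance so no single sensor dominates."""
    n_feat = len(rows[0])
    means = [sum(r[j] for r in rows) / len(rows) for j in range(n_feat)]
    stds = []
    for j in range(n_feat):
        var = sum((r[j] - means[j]) ** 2 for r in rows) / len(rows)
        stds.append(math.sqrt(var) or 1.0)  # guard against constant features
    scaled = [[(r[j] - means[j]) / stds[j] for j in range(n_feat)] for r in rows]
    return scaled, means, stds


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train(rows: List[Row], labels: List[int], lr: float = 0.1, epochs: int = 500) -> Model:
    """Fit a single logistic neuron (1 = "happy", 0 = "sad") with batch gradient descent."""
    x, means, stds = standardize(rows)
    w = [0.0] * len(x[0])
    b = 0.0
    n = len(x)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for xi, yi in zip(x, labels):
            # Difference between the predicted probability and the true label
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b, means, stds


def predict(model: Model, row: Row) -> str:
    """Classify one window, reusing the scaling learned from the training set."""
    w, b, means, stds = model
    xi = [(v - m) / s for v, m, s in zip(row, means, stds)]
    p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
    return "happy" if p >= 0.5 else "sad"


if __name__ == "__main__":
    # Toy, made-up windows purely to exercise the code; these are NOT study data.
    rows = [
        [70, 120, 1.0, 33.0, 0.2],
        [72, 118, 1.1, 33.1, 0.3],
        [95, 135, 0.8, 34.5, 1.2],
        [98, 138, 0.7, 34.8, 1.4],
    ]
    labels = [0, 0, 1, 1]
    model = train(rows, labels)
    print(predict(model, [96, 136, 0.75, 34.6, 1.3]))
```

With only 31 conversations to learn from, overfitting is a real concern, so anything along these lines would need held-out test data before its predictions meant much.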

The technology is at a very early stage of development; however, the team hopes that, down the road, the system will be advanced enough to act as an emotional coach in real-life social situations. There is a certain strangeness to the idea of asking a computer to tell you how a person is feeling, but if humans are nothing more than bags of wet chemicals, there might be merit in the idea yet. It’s a pretty big if.

Machine learning is becoming more powerful on a daily basis, particularly as we have ever greater amounts of computing power to throw behind it. Check out our primer on machine learning to get up to speed.

[Thanks to Adam for the tip!]

So, body language, speech, eye contact, and a million others are needed to determine the feelings of others.

But this uses a wearable, which the person you are trying to get information on has to be wearing, and it can only measure heart rate, blood pressure, blood flow, and skin temperature, as well as movement thanks to an on-board accelerometer.

How is that a valid substitute for body language, speech, eye contact, and a million other things? How is the accelerometer data even remotely valid or useful either? This is like using a desktop book scanner to listen to an opera and be able to translate the inner thoughts of what the singer wants to have for dinner.

Well, it might be used for patients in hospitals and care homes, or people with lifelong illnesses. It might be able to recognize the physical signs that a schizophrenic patient is building up to a psychotic episode, or that their meds have begun to wear off. An epileptic might have an oncoming fit detected. It could also be a first point of reference for medical treatment when presenting to a doctor or nurse, since it gives an immediate readout of basic functions. It might help diabetics manage their illness, and palliative care would also benefit from these. I can foresee anyone with a medical condition wearing something like this for constant monitoring of their bodily functions.

muA

Again, though, how are heart rate, blood pressure, blood flow, skin temperature, and accelerometer movement values likely to help with determining the feelings of others?

You can reliably detect a psychotic episode with those data points? What diseases are you able to determine with high specificity with those data points exactly? How does that help diabetics?

My point is not whether data points could be useful. My point is: how do the specific data points from these sensors actually help determine what people are feeling?

Could be useful for interactions with people on the autism spectrum, for both parties. This thing would probably require per-person training to be particularly useful anyway, so non-standard responses shouldn’t pose too much of an issue as long as it has enough data.

I can also see it being useful as an aid in interacting with AI systems, where it could wirelessly communicate the user’s mood without requiring complex computer vision or natural language processing.

The other obvious use of this device would be medical monitoring; conditions like Parkinson’s can be diagnosed or tracked through accelerometer readings.

While I see little application for this between humans, it might be useful for AIs to be able to read the emotional state of the people they are interacting with, augmenting natural language processing.

The accelerometer tells you whether the person was gesturing during a speech or (trying to) raise their hands, I suppose. For a neural network, every bit of information helps.

Portable polygraph.

But polygraphs are unreliable, and basically pseudoscience rubbish.

For detecting lies, not necessarily for emotional state.

You make bold claims. Please quote your sources.

http://www.apa.org/research/action/polygraph.aspx

Also, search online for:

lie detector results not permissible in court

http://www.legalmatch.com/law-library/article/admissability-of-polygraph-tests-in-court.html

If you’re wearing one, always wave your arms and flail around on the floor screeching like a sea lion.

This reminds me a lot of last week’s TBBT episode “The Emotion Detection Automation”…

Yet another sci-fi concept becoming reality: CASIE (Computer Assisted Social Interaction Enhancer) from Deus Ex: Human Revolution.

Just watch out for your creepy stalker husband who will use it to detect if you are attracted to other men, then beat the crap out of you for talking to them.

Filed under [What could possibly go wrong.]

It’s MIT, a university, so I’m sure it’s meant for the authorities to intrude into your privacy and not for your spouse.