They say that a picture is worth a thousand words. But what is a picture exactly? One definition would be a perfect reflection of what we see, like one taken with a basic camera. Our view of the natural world is constrained to a bandwidth of 400 to 700 nanometers within the electromagnetic spectrum, so our cameras produce images within this same bandwidth.

For example, if I take a picture of a yellow flower with my phone, the image will look just about how I saw it with my own eyes. But what if we could see the flower from a different part of the electromagnetic spectrum? What if we could see less than 400 nm or greater than 700 nm? A bee, like many other insects, can see in the ultraviolet part of the spectrum, which occupies the area below 400 nm. This “yellow” flower looks drastically different to a bee than it does to us.

In this article, we’re going to explore how images can be produced to show spectral information outside of our limited visual capacity, and take a look at the multi-spectral cameras used to make them. We’ll find that while it may be true that an image is worth a thousand words, it is also true that an image taken with a hyperspectral camera can be worth hundreds of thousands, if not millions, of useful data points.

The Data Cube

Spectroscopy is the study of how light interacts with materials. Generally, the light reflected or emitted from a material is passed through a prism in order to separate it into its spectral components. Then each component is analyzed for information. In high-end three-CCD digital cameras, each image is separated into its red, green and blue spectral components. Each RGB component is then given a value which is applied to a pixel. The resulting image is more or less a reflection of what you see.

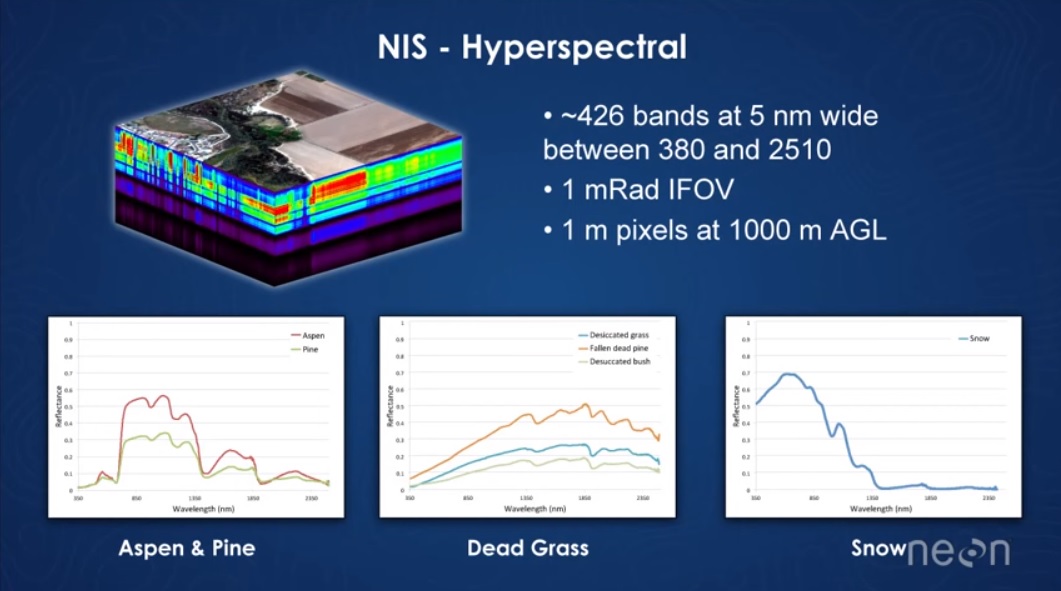

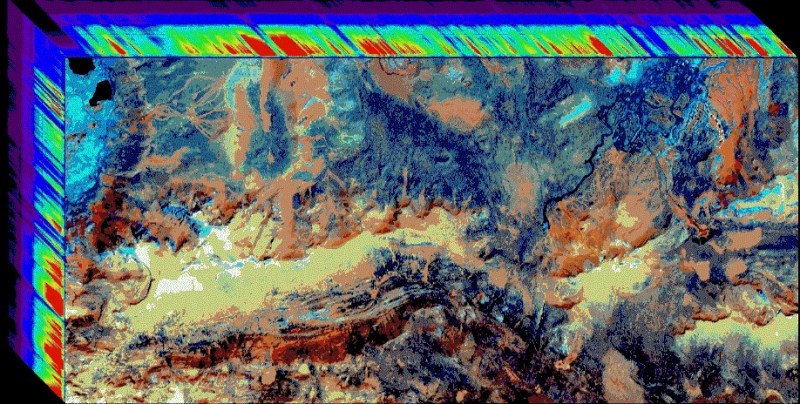

It should be possible to follow this same process but look at spectral components other than the visual RGB spectrum. And this is precisely what a hyperspectral camera does. Instead of acquiring 3 data points per pixel as with an RGB camera, a hyperspectral camera might have tens or hundreds of data points per pixel. The total hyperspectral image is three-dimensional and is represented in a Data Cube. The X and Y plane of the cube represents the spatial part of the image, and the spectral information is recorded along the Z axis of the cube: a full spectrum for every pixel.
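As a rough illustration of the data cube, the sketch below builds a small synthetic cube with NumPy and slices it both ways. The cube size, the band count and the random values are assumptions picked for the example, not the output of any real camera.

```python
import numpy as np

# Hypothetical cube: 4 rows (Y) x 5 columns (X) x 100 spectral bands (Z).
rows, cols, bands = 4, 5, 100
rng = np.random.default_rng(seed=0)
cube = rng.random((rows, cols, bands))

# The full spectrum recorded for a single pixel: one value per band.
pixel_spectrum = cube[2, 3, :]

# A single-band image: the whole scene as seen in one narrow band.
band_image = cube[:, :, 42]

print(cube.shape)            # (4, 5, 100)
print(pixel_spectrum.shape)  # (100,)
print(band_image.shape)      # (4, 5)
```

Slicing along Z gives a spectrum for one spot in the scene; slicing across X and Y gives an ordinary grayscale picture for one band.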

For a practical example, the National Ecological Observatory Network (NEON) uses a hyperspectral camera with a range between 380 and 2510 nm, with a spectral resolution of five nanometers. When the camera is 1,000 m off the ground, the spatial resolution is about one meter per pixel, and each pixel will contain about 426 data points. From all of this information, different types of vegetation, moisture content, and more can be gleaned.
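The number of data points per pixel follows from the spectral range and band spacing quoted for the NEON camera. Here is a quick check, assuming evenly spaced, non-overlapping bands (an idealization) and a made-up 100 x 100 pixel scene:

```python
# Spectral range and band spacing quoted for the NEON instrument.
range_start_nm = 380
range_end_nm = 2510
band_width_nm = 5

# Assuming evenly spaced, non-overlapping bands across the whole range.
bands_per_pixel = (range_end_nm - range_start_nm) // band_width_nm
print(bands_per_pixel)  # 426

# Hypothetical scene size, only to show how quickly the values add up.
scene_pixels = 100 * 100
print(scene_pixels * bands_per_pixel)  # 4260000
```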

Hyperspectral cameras are highly useful in ecological studies, geographic monitoring, and agriculture management. A farmer can use a hyperspectral camera to see which areas of her crops need fertilizer, pesticides and/or water. This allows her to apply what is needed to a specific area of the crop instead of the whole, saving her a lot of time and money.

Why Are You Telling Me This?

As you probably already know or have guessed, hyperspectral imaging has been around for a long time. The setups and software needed to create a hyperspectral image used to be prohibitively expensive but, as with many technologies, costs have plummeted. Now anyone with a few thousand dollars to play with can get in on the multispectral game. (Multispectral is similar to hyperspectral, but sees fewer bands.) And before you scoff at that price, consider the amount of valuable information you can acquire with a multispectral setup.

The cheapest multispectral camera we can find is the Parrot Sequoia, which comes in with a $3,500 price tag. This particular camera is geared to the agricultural industry.

Its sensors look at red, green and two “invisible” infrared bands. It has its own GPS and other guidance hardware built in, so you don’t have to worry about keeping your drone’s altitude and speed fixed. The camera will adjust accordingly, within limits, of course. It can operate from an altitude of 30 ft to 500 ft and weighs just under 80 g, so putting one on just about any well-made drone is doable.

The other, slightly more expensive camera is the RedEdge by Micasense, which comes in at just north of five grand. It’s a bit heavier at 180 g, and comes with the same sensors as the Parrot, but adds blue to the mix. At a height of 400 ft, it can resolve to 8 cm per pixel. The software is cloud-based, and they have a well-done online demonstration.
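To get a feel for what that resolution figure means at other heights, here is a small sketch. It assumes a fixed lens and sensor pointing straight down, so the ground covered by one pixel grows in proportion to altitude. The function name is made up for illustration and is not part of any vendor software.

```python
def ground_resolution_cm(altitude_ft, ref_altitude_ft=400.0, ref_resolution_cm=8.0):
    """Scale the quoted 8 cm/pixel at 400 ft linearly to another altitude.

    Assumes a fixed lens and sensor pointing straight down, so the ground
    footprint of a pixel grows in proportion to height.
    """
    return ref_resolution_cm * altitude_ft / ref_altitude_ft

for altitude in (100, 200, 400):
    print(altitude, "ft ->", ground_resolution_cm(altitude), "cm per pixel")
```

Flying lower buys finer detail at the cost of covering less ground per pass.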

Conclusion

Hyperspectral sensing is not new. But just as 3D printing has been around for ages and is now a booming industry thanks to the expiring patents, hyperspectral imaging could follow the same path thanks to dropping prices. The obvious market is small farms. But hackers have a knack for pushing tech well beyond its intended audience, and stretching out the boundaries of what’s possible. What could you do with a hyperspectral or multispectral camera? Or better yet, how are you going to make your own?

Sources

Header Image via Vespadrones.

Thumbnail Image via NIST.

Hyperspectral Imaging Technology in Food and Agriculture ISBN 978-1-4939-2836-1

You are confusing multispectral with hyperspectral imaging. The Parrot Sequoia and RedEdge are multispectral cameras, and are not capable of capturing more than red, green, blue, NIR and red-edge bands. Hyperspectral cameras capture many narrow bands by using some type of scanning and special light filtering (see pushbroom sensors used in satellite platforms).

Ha! This is very true. Thanks for the catch, fixed it best I could.

For an example of a hyperspectral camera, have a look at the HySpex Mjolnir. Found this at https://www.hyspex.no/products/mjolnir.php

I’m confused, isn’t hyperspectral a term that describes both IR and near UV bands as well as RGB? If so, this has been around for years. I’m about to hack an old digital camera to remove the lens filter and turn it into an amateur UV+IR hyperspectral imager by allowing all IR and UV through to the lens while blocking visible. This is done by removing the visible light notch filter in front of the lens and installing a specialty visible light blocker notch filter. Then, on the front of the main outside lens assembly, you can place additional filters if you only want IR or UV but not both.

No, hyperspectral is a clumsy catchall that is used to refer to sensors capturing a large number (50+) of narrow bands (c. 20 nm FWHM). These could be distributed entirely in the visible, NIR-SWIR or TIR regions of the spectrum, or across all of them. Your adapted camera is broadband RGB with sensitivity extended into the NIR / UV. The spectral sensitivity of each band depends on the properties of the Bayer filter in front of the sensor and the sensitivity of the sensor itself.

Any examples of displaying hyperspectral or multispectral image data in a useful way? I have the words “data cube” which sounds pretty cool but I still don’t know how this data is usually presented to users and what specific information it provides that normal cameras would miss.

Check this out

Part of the value of hyperspectral imagery is that it has high spectral resolution (<10 nm) and is continuous, meaning the bands overlap. It can reveal chemically and biologically important absorption features not visible with broadband multispectral sensors. Often some type of data dimensionality reduction like PCA or MNF is applied to the imagery to visualize the data. These transformations can also be used for classification problems like species mapping.
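A minimal sketch of the PCA step, using only NumPy on a synthetic cube. The function name `pca_reduce`, the cube size and the random data are assumptions for illustration; real workflows typically lean on a dedicated library and properly calibrated imagery.

```python
import numpy as np

def pca_reduce(cube, n_components=3):
    """Project each pixel spectrum onto the top principal components."""
    rows, cols, bands = cube.shape
    # Flatten the spatial axes: one row per pixel, one column per band.
    spectra = cube.reshape(rows * cols, bands)
    # Mean-center each band before computing the decomposition.
    centered = spectra - spectra.mean(axis=0)
    # SVD of the centered data; rows of vt are the principal axes,
    # ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    scores = centered @ vt[:n_components].T
    # Back to image layout: each component becomes one image plane.
    return scores.reshape(rows, cols, n_components)

rng = np.random.default_rng(seed=0)
cube = rng.random((8, 8, 50))   # synthetic stand-in for real data
reduced = pca_reduce(cube, n_components=3)
print(reduced.shape)  # (8, 8, 3)
```

With three components kept, the result can be shown as a false-color image by mapping the three planes to red, green and blue.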

Yeah, and not to forget that some progress is being made with neural networks and nearest neighbor methods for the follow-up classification of the data.

You can even use other (pre)processing methods like mean centering, Mahalanobis distance, k-means as well as (D)PLS to further quantify. Hierarchical cluster analysis for classification is extremely interesting once you have a standard data set and pre-processing and processing methods down.

Maybe think of an FTIR microscope as an example. For each pixel of the “data cube” you can think of the pixel as an IR spectrum that details chemical information, or even biochemical information if you have a more advanced IR database and methods of processing the data to compare against that database. See my and the last article for some basics.

An easy way to think of it is that the datacube usually has at least four dimensions of information: the x-coordinate, the y-coordinate, the wavenumbers and the intensity at each wavenumber. I think that is an easier way to explain it.

I forgot to add the link to the article in reference above: http://www.americanlaboratory.com/1413-Issues/37116-October-2008/

I built a hyperspectral camera a few years back, adapting a webcam to operate as a slot camera through a diffraction grating. I’d be happy to dig up my notes if someone is interested in continuing where I left off.

Getting enough light is a big challenge. At 2 m, a 500 W halogen work lamp was needed to adequately illuminate a subject. Like all slot cameras, mine took a long time to capture an image (minutes, one column at a time).

I never did make it portable enough to use outdoors, or bring plant life in to capture. (Most artificial objects aren’t very interesting to look at.)
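For anyone picturing how a slot camera's output becomes a cube, here is a rough sketch. It assumes each exposure yields a 2-D frame (position along the slit by wavelength) and that frames arrive in scan order. The sizes and random data are made up.

```python
import numpy as np

slit_pixels, bands, n_columns = 6, 40, 10   # hypothetical sizes
rng = np.random.default_rng(seed=1)

# Each exposure through the slit yields one 2-D frame:
# position along the slit (Y) by wavelength (Z).
frames = [rng.random((slit_pixels, bands)) for _ in range(n_columns)]

# Stacking the frames along a new X axis builds the (Y, X, Z) cube.
cube = np.stack(frames, axis=1)
print(cube.shape)  # (6, 10, 40)
```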

Naive question for those who know: can’t you just gang a bunch of cameras with appropriate filters together? I know that you can get IR pretty easy with a dark red filter, and (near?) UV should be possible pretty easily too, but for some crazy short wavelengths you need special lenses.

What are we talking about here? Could you do something useful with three cameras and some heavy filters?

Well yeah man, what does this look like to you: https://www.micasense.com/rededge/

To me it looks like 5 cameras shooting the same way!

That’s what I am trying to do (hackaday.io: https://goo.gl/XJb58f ), but I ran out of funds (those filters are expensive and the ones you see in the photo were damaged by moisture). The difficult part of the project is to identify reflectance values accurately, especially when the automatic settings of the camera are active and you don’t have a spectroradiometer lying around.

You got pretty far

Automatic settings are indeed nasty. But for prism-based systems you could just use the spectrum of a known light source and a white target. Fluorescent light sources have a few very sharp and specific bands which can be used for calibration.
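A rough sketch of that kind of wavelength calibration: match the sharp mercury lines of a fluorescent lamp to the pixel columns where they show up, then fit a pixel-to-wavelength mapping. The pixel positions below are invented, and the straight-line fit is an assumption that suits a grating better than a prism, whose dispersion is nonlinear and would need a higher-order fit.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Well-known mercury emission lines seen in fluorescent lamps (nm).
known_lines_nm = [404.7, 435.8, 546.1]
# Hypothetical pixel columns where those peaks landed on the sensor.
peak_pixels = [120, 182, 403]

slope, intercept = fit_line(peak_pixels, known_lines_nm)

def pixel_to_wavelength(pixel):
    """Map a sensor column to an estimated wavelength in nm."""
    return slope * pixel + intercept

print(round(slope, 3), "nm per pixel")
```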

Alternatively, use spectral calibration tiles.

The folks from the GOSH community are trying to produce a single pixel camera that would have many applications, hyperspectral imaging being one of them: https://forum.openhardware.science/t/welcome-to-the-forum-to-develop-an-open-source-single-pixel-camera/437

Problem: How to calibrate?

For calibration of hyperspectral cameras, you’d normally use a white spectral target, which has uniform reflection across the entire spectral range. It is not that different from a flatfield non-uniformity calibration in regular cameras, really. Call it a white balance if you will, but instead of getting the RGB values to level out, you get leveled-out spectral responses :)
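In code, the white-target idea might look something like the sketch below: divide each band of a pixel by the same band measured off the white target. The optional dark frame (lens capped) is an extra assumption on top of what is described above, and all the counts are made up.

```python
def to_reflectance(raw, white, dark=None):
    """Convert one pixel's raw spectrum to relative reflectance, band by band.

    raw, white and dark are equal-length sequences of sensor counts:
    the scene, the white target, and (optionally) a capped-lens dark frame.
    """
    if dark is None:
        dark = [0.0] * len(raw)
    return [(r - d) / (w - d) for r, w, d in zip(raw, white, dark)]

# Hypothetical counts for a four-band pixel.
raw = [120.0, 300.0, 450.0, 90.0]
white = [220.0, 520.0, 820.0, 170.0]
dark = [20.0, 20.0, 20.0, 20.0]

reflectance = to_reflectance(raw, white, dark)
print(reflectance)
```

A band where the white and dark readings are equal would divide by zero, so a real pipeline needs to mask or skip dead bands.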

It’s kind of a shame the article didn’t talk about how to actually acquire hyperspectral images, such as pushbroom scanning.

Also, hyperspectral imaging is used a LOT in industrial applications. For instance in food (detecting impurities), medicine (counterfeit, inconsistent mixtures), recycling (plastic sorting) and suchlike.

Wouldn’t you also want a wavelength calibration standard, such as an IR-Vis-UV standard like SRM 2035 or SRM 2036? I was just checking the latest status of the NIST glass standards that replaced SRM 1920a. I worked with NIST while making custom standards (glass rods, gold foil cylinders for scintillation bottles, and some other designs for testing materials with reflectance standards behind a sample in a custom slide/vial), and performed some DOEs, including a specific SRM 2035 DOE. Interestingly, the first certificate for SRM 2036 was certified the day before my birthday. I’m guessing SRM 2036 can be, or will be if clients demand it, certified like SRM 2035a for broader use into the Vis-UV range, since SRM 2036 was just the SRM 2035 glass with a 99% reflectance standard backing, assuming 2035a is the same glass material.

Interesting how lasers and even laser ablation (like LIBS too) are being used to perform this on inorganic materials as well, and not only on organic materials.

Great to read hyperspectral imaging is catching on in a LOT of industrial applications. I was really angered by the lack of commitment to process analytical technologies being implemented in the agriculture, food and drug industries.

It was great working with the FDA team at the time to move the technology forward, after working with the USDA and being amazed at the lack of advanced microwave and IR-Vis-UV methods implemented. It has been maybe 15 years since I encouraged those efforts.

Now, I am more concerned with like Cyber Crimes Enforcement, the need for Electromagnetic Spectrum Crime Enforcement… which is slowly moving forward albeit in the range of trying to get the powers that be to come out more into the open with the “potential” technologies that they like to deny even exist. To me the technology can be used like LIBS, ultrasonic blasters, MASERS, X-Ray Beams and worse technologies that are more a radiation broken arrow incident in the electric and magnetic field and charges range of incidents that require corrective and preventative actions once the root cause of the deviation (that needs to be identified validly and not false/fraudulently determined) is determined. More reason for lean sigma in government like the DOD, DOJ and really DHS now days.

Can I get a shout out to back up my thought that this sounds exactly like how the sensors on the Enterprise work? If you had to have a real-world equivalent to a sci-fi idea, then this would be it. And I’m not just talking about a tricorder, but full-on sensors aboard the ship that scan a whole planet for life signs and report back type, place and species.

Imagine if you will a fantastic computer tied to these types of cameras and more all over a ship and BOOM! You got Star Trek Sensors baby.

But the crux of this is we want to build our own systems. What is shared above is about preexisting systems, but frankly we don’t need their software and their prebuilt devices. I know personally I just want the sensors to go as low as they can go and as high as they can go in the wavelengths and then to be able to composite this data myself with my own software. Can you share some research in this area that could get us started at getting raw sensors to build our own?