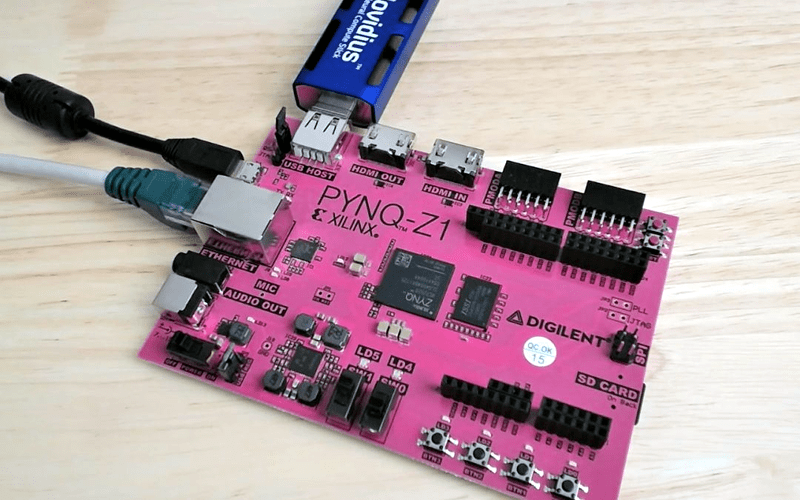

They probably weren’t inspired by [Jeff Dunham’s] jalapeno on a stick, but Intel has created the Movidius Neural Compute Stick, which is in effect a neural network in a USB stick form factor. The sticks don’t rely on the cloud, they require no fan, and you can get one for well under $100. We were interested in [Jeff Johnson’s] use of these sticks with a Pynq-Z1. He also notes that the stick is a great way to put neural net power on a Raspberry Pi or BeagleBone. He shows us YOLO, an image recognizer, and applies it to an HDMI signal with the processing done on the Movidius. You can see the result in the first video, below.

At first, we thought you might be better off using the Z1’s built-in FPGA to run neural networks. [Jeff] points out that while this is possible, the Z1 has a lower-end device on it, so there isn’t that much FPGA real estate to play with. The stick, then, is a great idea. You can learn more about the device in the second video, below.

There’s a lot of processing going on in these tasks. Just taking a slice of the video allowed processing to occur at 3 frames per second, but scaling the full-size frame in software dropped the rate to half that. However, [Jeff] thinks that if he did the scaling in the FPGA, he could easily get the rate back up to 3 frames per second.
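To put those frame rates in terms of time per frame, here is a rough back-of-the-envelope sketch. It assumes, purely for illustration, that capture, scaling, and inference run one after another, so any extra time per frame can be blamed on the software scaling step. The function and variable names are made up for this example and are not from [Jeff]'s code.

```python
def frame_time_ms(fps: float) -> float:
    """Time spent on one frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


# Rate reported when only a slice of the video is processed.
crop_fps = 3.0
# "Half that" when the full frame is scaled in software.
scaled_fps = crop_fps / 2

crop_ms = frame_time_ms(crop_fps)
scaled_ms = frame_time_ms(scaled_fps)

# Assumption: the stages run serially, so the difference is the scaling cost.
scaling_cost_ms = scaled_ms - crop_ms

print(f"slice only:       {crop_ms:.0f} ms per frame")
print(f"software scaling: {scaled_ms:.0f} ms per frame")
print(f"scaling overhead: {scaling_cost_ms:.0f} ms per frame")
```

Under that assumption, software scaling costs about as much time per frame as everything else combined, which is why moving it into the FPGA fabric looks like a plausible way to recover the original rate.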

We’ve looked at the Pynq before and have no doubt where it gets its name and its PCB color. We’d love to see some of the robots with vision we’ve covered refitted to use this technology.

Did anyone else notice the T-800 CPU resembles a USB stick?

Seems so!

http://retrotext.blogspot.com/2015/03/terminator-cpu-as-usb-memory-stick.html

I always wanted to build a small cluster of machines to run the surveillance software originally developed by BRS Systems (now Omni AI, Inc after much BS apparently). They were the first company to be able to provide full-color 648×480 machine vision systems for surveillance (with a lot of the features that we would take for granted from a similar system today). They were doing things similar to your average Deep Learning-style machine vision system back in 2009.

That cluster? T-800 CPU rendered like the prototype “SkyNet processor” featured in Terminator 2. Since this was 2009, we didn’t have the Raspberry Pi, so the most reasonable alternative would be to use several Mini-ITX boards, which required a large case.

These days, of course, I would want to see if a toy form of their software could be ported to either the MIT Micro Mote (a 2mmx2mm full computing platform) or the system that IBM describes as being “The World’s Smallest Computer” that’s a quarter of the size. Again, I would use the shape of the T800 CPU as a guide, but now I would be making it smaller than the props used in the movie.

You mean a USB stick resembles the T-800 CPU.

This is quite impressive, but I can’t get past the incredibly annoying music in the video! argh!

Too expensive.

Cheapest is Epiphany and normal Linux on board.

Epiphany is dead. Give up on it already.

Eh… Someone point me to where the HDL is in this project….

I want one!

By the time everyone starts using it intel will discontinue it anyway.

Like their glasses.

Exactly! just like the maker boards.

Hello marketing? We need some advice on a GPU.

Hello, yeah, no probs, we draw a huge grid to show off the cores put the memory in a monster typeface and we’re all sweet, how many cores?

Twelve.

WOW, twelve thousand cores? That will sell itself, or do you mean hundred? because that’s pedestrian soon but we can totally work with it.

Er, no, just twelve. They are good cores, VLIW, Like PRISM.

Ohhhh, kaaaay. I’ve checked the desperation list and the only two hypes we’ve not irreparably burned are crypto and neural networks.

Flip a coin and we’ll put that on a USB stick with a half gig of memory to use as a co-processor.

Sorted, website up by Friday.

You are comparing a very special type of vector processor to a standard core? Okay.

Did you know massive processing is done on FPGA chips? Most of them have 0 cores.