Getting bounced to a website by scanning a QR code is no longer an exciting feat of technology, but what if you scanned the ingredient list on your granola bar and it went to the company’s page for that specific flavor, sans the matrix code?

Bright minds at the Columbia University in the City of New York have “perturbed” ordinary font characters so the average human eye won’t pick up the changes. Even ordinary OCR won’t miss a beat when it looks at a passage with a hidden message. After all, these “perturbed” glyphs are like a perfectly legible character viewed through a drop of water. When a camera is looking for these secret messages, those minor tweaks speak volumes.

The system is diabolically simple. Each character can be distorted according to an algorithm and a second variable. Changing that second variable is like twisting a distorted lens, or a water drop but the afterimage can be decoded and the variable extracted. This kind of encoding can survive a trip to the printer, unlike a purely digital hidden message.

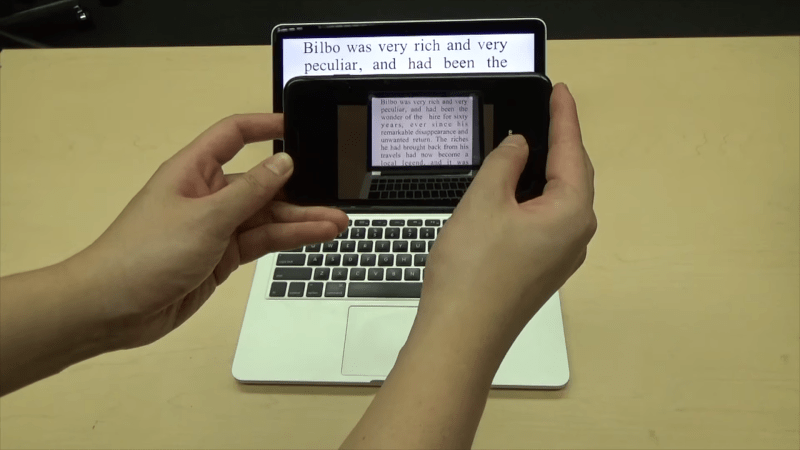

Hidden messages like these are not limited to passing notes, metadata can be attached to any text and extracted when necessary. Literature could include notes without taking up page space so teachers could include helpful notes and a cell phone could be like an x-ray machine to see what the teacher wants to show. For example, you could define what “crypto” actually means.

stekernography

Oooo, good word.

Stekernography Bacon cipher?

Excellent word – I’d love to hear what the printing industry has to say about the concept. Doing one copy is obviously useful, but getting 100,000 an hour exactly right for mass distribution might be something else. Also, what about other alphabets (Arabic, Chinese, Korean etc.)?

Had to look up Bacon. Smart guy, but bit rate on that method is awfully low.

The OP’s method (follow the link) looks very generic to any language that can be written in discrete symbols.

As for accuracy, here’s a nice paper (from 1995!) that analyzes performance of kerning-based methods in the presence of noise: http://web.cs.ucla.edu/~miodrag/cs259-security/low94document.pdf

Is there any softwares currently available or online things that can actually solve this yet?

New way to pass secret notes in classroom. If teacher confiscates the note, it’d look normal and harmless and pass it. But student who knows can get the hidden message.

Kids pass notes via text message these days.

I, for one, write all my secret notes on a computer, encoded with within ordinary characters, then print it and pass it across the classroom.

I manually draw the pixels but to each their own. :o)

Another way of achieving the same is how many AR viewers such as Arilyn work. They apparently run some kind of edge detection on the image, then hash the shape of the edges and use it online to lookup whether there is any matching information. Thus just the layout of text and graphics becomes the key.

So: watermarking /everything/, catching whistleblowers with canary traps, so on? And otherwise fairly useless – who will scan a text with no indication telling that it has ha code? And if it does, why not just replace that indication with (drumroll) a code? QR, url, whatever.

Oh, might be good for ARGs. Thanks :-|

When you don´t have to use a QR code, you have more space on the backside of a granola bar for human readable information. QR codes don´t look nice and perhaps do not fit the rest of the packaging´s appearance. I personally don´t like QR codes because I never know if it is a website, a .vcf file or plain text until I have scanned it.This approach could change that problem.

how??? now you don’t even know if it _exists_ until you scan it.

One solution could be to write in plain text that there is a hyperlink embedded in the text field. It is hard to give you an example, because the distorted text technology does not have a cachy namy yet, as QR-Code has. Another approach could be that in about 15 Years, AR contact lenses are an everyday thing and hyperlinks will pop up everywhere you look. In this scenario, I sincerely prefer hyperlinks hidden in plain text instead of qr codes, since QR codes are visual noise whereas this technology is hidden in plain sight.

Just put little logo there. The same way you put “@” to text to tell people that it has hidden meaning of being mail address.

what are “ARGs”?

alternate reality games. where items in the real world interact with websites and often involves the kind of strange, disjointed problem solving reminiscent of 90’s adventure games.

A friend and I devised a system several years ago that worked simply by altering the kerning. A normal kerning table was used with Times New Roman font at 12 pt (or any non-ornamental font at any reasonable size), and appropriate distances between each combo pair of letters. To represent a “0” the standard was used, and to represent a “1” the kerning was widened slightly by a 1/128th of an inch. Imperceptible to the human eye, but our modified OCR scanner picked it up easily and spat out a binary stream for the encoded information. We eventually cut out the OCR part and simply looked at the gaps between the letters. The only ‘complication’ is that you needed a copy of the kerning table it was rendered with, but that was easily extracted from the .ttf font file.

https://imgs.xkcd.com/comics/kerning.png

AAAAA!!!!

Very interesting idea!

Am I the only one bothered by the process diagram showing the wrong information? https://spectrum.ieee.org/image/MzA1ODA3Ng.jpeg

The first part in step 6 adds up to (10 + 46 + 8 + 2 + 3=) 69, not the indicated 71.

Moreover, the encoded message isn’t the one they indicate (“Hello world”), it’s “Greetings,myfrien!Youfinallyfoundme.”

Nitpick, maybe, but this is supposed to convey the idea and supplying the wrong information undermines that process.