As far as computer architectures go, ARM doesn’t have anything to be ashamed of. Since nearly every mobile device on the planet is powered by some member of the reduced instruction set computer (RISC) family, there’s an excellent chance these words are currently making their way to your eyes courtesy of an ARM chip. A userbase of several billion is certainly nothing to sneeze at, and that’s before we even take into account the myriad of other devices which ARM processors find their way into: from kids’ toys to smart TVs.

ARM is also the de facto architecture for the single-board computers which have dominated the hacking and making scene for the last several years. Raspberry Pi, BeagleBone, ODROID, Tinker Board, etc. If it’s a small computer that runs Linux or Android, it will almost certainly be powered by some ARM variant; another market all but completely dominated.

It would be fair to say that small devices, from set top boxes down to smartwatches, are today the domain of ARM processors. But if we’re talking about what one might consider “traditional” computers, such as desktops, laptops, or servers, ARM is essentially a non-starter. There are a handful of ARM Chromebooks on the market, but effectively everything else is running on x86 processors built by Intel or AMD. You can’t walk into a store and purchase an ARM desktop, and beyond the hackers who are using Raspberry Pis to host their personal sites, ARM servers are an exceptional rarity.

Or at least, they were until very recently. At the re:Invent 2018 conference, Amazon announced the immediate availability of their own internally developed ARM servers for their Amazon Web Services (AWS) customers. For many developers this will be the first time they’ve written code for a non-x86 processor, and while some growing pains are to be expected, the lower cost of the ARM instances compared to the standard x86 options seems likely to drive adoption. Will this be the push ARM needs to finally break into the server and potentially even desktop markets? Let’s take a look at what ARM is up against.

A Double Edged Sword

At the risk of oversimplifying the situation, ARM has become the go-to for small devices due to the inherent efficiency of the architecture. ARM chips consume much less energy, and in turn don’t get nearly as hot, as their x86 peers. This is a perfect combination for small, battery-powered devices as the increased energy efficiency not only allows for longer run times, but means the processor usually doesn’t need anything more than a passive heat spreader to keep cool (if even that).

But the efficiency of ARM processors isn’t as compelling in the performance driven world of desktop and server computing. In these applications, energy consumption has generally not been a deciding factor when selecting hardware. Modern desktop processors can consume nearly 100 watts at load, even higher for server chips. Nobody denies this is an enormous amount of power to consume, but it’s largely seen as a necessary evil.

That being said, one might wonder why ARM laptops haven’t become popular at this point. Unfortunately there’s another factor at work: software compiled for x86 won’t run on ARM hardware. Under Linux this isn’t much of a problem; there are several distributions which have been adapted and recompiled for ARM processors, thanks in no small part to the popularity of devices like the Raspberry Pi. But under Windows, the situation is very different. While Microsoft introduced ARM-compatible versions of Windows a few years back, it doesn’t change the fact that the decades of Windows software that’s already on the market can’t be used without resorting to emulation.

The AWS Opportunity

Issues with legacy software support may be keeping ARM out of the general purpose computing market, but it wasn’t a problem for mobile operating systems like Android or iOS which launched with ARM processors and had no back catalog of software to worry about. This software “clean slate” might not be possible within the Windows-dominated desktop and laptop markets, but that’s not the case for AWS customers.

For developers who have been using Linux AWS instances (which the vast majority are), the ARM environment will not be significantly different from what they’re used to. In fact, if their software is written in an interpreted language like Python they should be able to move their existing code over to the ARM servers as-is. If it’s written in C or another compiled language it will need to be rebuilt from source due to the architecture change, but in most cases will require little to no modification.
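As a rough illustration of how little an interpreted program needs to know about the processor underneath it, here is a minimal Python sketch that reports which architecture family it happens to be running on. The `normalize_arch` and `current_arch` helpers and the alias table are hypothetical names made up for this example; only `platform.machine()` comes from the standard library, and the strings it returns vary by operating system.

```python
import platform

# Hypothetical alias table: common values reported by platform.machine(),
# not an exhaustive list. Anything unrecognized falls through to "other".
_ARCH_ALIASES = {
    "x86_64": "x86-64",
    "amd64": "x86-64",
    "aarch64": "arm64",
    "arm64": "arm64",
}


def normalize_arch(machine):
    """Map a raw machine string to a coarse architecture family."""
    return _ARCH_ALIASES.get(machine.lower(), "other")


def current_arch():
    """Return the architecture family of the interpreter's host."""
    return normalize_arch(platform.machine())


if __name__ == "__main__":
    # The same script runs unmodified on an x86 or an ARM instance;
    # only the reported family changes.
    print("Running on:", current_arch())
```

The script itself is identical on both kinds of instance, which is the point: the interpreter absorbs the architecture change. Compiled extension modules that a project depends on are a different matter and would still need ARM builds.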

In short, moving AWS customers from x86 to ARM won’t cause the same “culture shock” as it would on other platforms. In exchange for lower operating costs, customers will likely be willing to make whatever minimal changes may be required when moving from x86 Linux to ARM Linux.

Of course, from Amazon’s perspective getting more customers onto ARM servers means reduced energy consumption in their data centers. It’s a win-win for everyone involved, and provides a fantastic opportunity to show ARM has earned its place in the server market.

Amazon’s Own Hardware

While there are already a few ARM servers on the market, Amazon decided to go with their own in-house custom silicon for their AWS implementation. Called Graviton, the system is the end result of Amazon’s purchase of Israeli chip manufacturer Annapurna Labs in 2015. It utilizes the now several year old Cortex-A72 microarchitecture, and features 16 cores clocked at 2.3GHz.

Early benchmarks for Graviton indicate that performance is about on par with Intel’s low-end server processors, and that it specifically struggles with single-threaded tasks. This isn’t terribly surprising considering the age of the Cortex-A72 and the fact that Amazon is careful not to promise there are actually any performance gains to be had when switching to ARM. Of course, benchmarks can be misleading and we’ll need to wait until more developers get experience with Graviton to see what its real-world performance is like.

It should also be said that the first generation of Graviton is really just to test the waters. Subsequent generations of the hardware, with a potential upgrade to a more modern ARM microarchitecture, would have a huge impact on performance. For example the Cortex-A76 is designed for laptop applications and offers over twice the performance of the older A72.

One Small Step

With Amazon’s introduction of ARM servers, a whole new generation of developers are going to get first hand experience with a non-x86 architecture in arguably the most seamless way possible. Nuances will be learned, limits will be pushed, and shortcomings will be overcome. In time these brand-new ARM developers may want to get a laptop or even desktop machine that has the same architecture as the platform they’re deploying their code on. With any luck, that’s when the free market will kick in and start offering them at competitive prices.

Or not. It could be that the price reduction isn’t enough to make up for the hit in performance when switching to ARM, and AWS customers don’t bite. No matter which way it goes, it’s going to be interesting to watch. The results of Amazon’s ARM experiment could end up determining if the ubiquitous chip that powers our myriad of gadgets stays firmly in our pockets or ascends into the cloud.

I guess I understand AWS’s desire for their own version of ARM, but I feel there are too many out there now…

You do know that ARM Holdings Limited already have lots of companies that have licensed their technology, e.g. Intel, TSMC, Samsung, TI, NEC, ST, ZTE, Broadcom, AMD, Infineon, Apple, Qualcomm, Fujitsu, UMC, Lenovo, Google, Alibaba Group, Gigabyte, NXP(Freescale), Marvell, HiSilicon, Trend Micro, Cypress, Green Hills, Renesas, Silicon Mobility, Xilinx ….. and many many more. Having a custom version just means that you pick which parts of their technology portfolio your company wants to license for your product. And by dealing directly with the silicon fabs, at scale, you remove at least two thirds of the cost of using some other company’s existing chips that do not quite meet your exact needs. There are probably about 100K+ custom ARM chip products since ARM Ltd. started, I don’t think that one more product line will make a big difference. And I can safely bet that the custom Amazon ARM chip will make its way into future Kindle and Echo products, replacing the NXP and TI ARM chips currently used and saving a big chunk of change.

As I understood, you can also add your own IP to the chip, so it’s ARM + something else in there, and these custom jigsaw puzzles are a nightmare to support because you cannot be sure that certain provisions even exist on the SoC, much less the special features.

Kinda like the problem with all the x86 extensions that were meant to make the CPUs just incompatible enough with the rest of them to grab market share for Intel – except 100 times worse.

My colleagues and I are knee-deep in developing a project on AWS right now, and I’m very confident they could change the underlying architecture from x86-64 to ARM, let alone from one version of ARM to another, and we would never notice.

Our project, like many these days, is written entirely in high-level languages, has modest performance requirements, and is designed to scale by adding more servers.

Right, it sounds like your project is precisely the kind of thing Amazon is targeting with the ARM roll out. You could potentially benefit from the lower cost of the ARM instances without having to change anything in your existing code.

When developing system software for Linux, there is no real difference if you are just swapping architecture X for architecture Y. As long as your compiler supports both, it’s merely a matter of recompiling. Likely the biggest change most developers are going to notice is that their devices have different file names, and that’s about it.

The more acute problem is that the user software has little to no support for ARM: nobody cares. You get the handful of most popular applications, or at least quasi-recent versions thereof, but if you’re looking at something more specific or more up-to-date, you’re up the creek without a paddle having to compile your own from source.

Nobody cares. And nobody needs to care. Virtually no user code gives a hoot what processor it runs on.

You would care a lot if you’d ever been through the exercise of porting from big endian to little endian, or perhaps porting from a 64 bit system to a 32 bit system.

A large system will have a wonderful variety of bugs that only show up when you do those things.
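To make the endianness point concrete, here is a small Python sketch of the kind of bug that only surfaces after such a port. The record format and function names are hypothetical; the idea is that a value written big-endian and then read back with a little-endian assumption silently turns into a different number instead of raising an error.

```python
import struct
import sys


def write_record(value):
    """Serialize a 32-bit unsigned integer in big-endian byte order."""
    return struct.pack(">I", value)


def read_record_buggy(raw):
    """Read the record assuming little-endian order (the porting bug)."""
    return struct.unpack("<I", raw)[0]


def read_record_portable(raw):
    """Read the record with the byte order stated explicitly."""
    return struct.unpack(">I", raw)[0]


if __name__ == "__main__":
    raw = write_record(0x01020304)
    print("host byte order:", sys.byteorder)
    print("buggy read:    0x%08X" % read_record_buggy(raw))
    print("portable read: 0x%08X" % read_record_portable(raw))
```

The buggy reader returns 0x04030201 rather than 0x01020304, and nothing crashes, which is why these bugs tend to hide in large systems. Spelling out the byte order at every serialization boundary, rather than relying on whatever the host uses natively, is one common way to sidestep them.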

Historical note:

Early ARM systems ran in BE mode, but these days most systems run the ARM little endian. I do not think it’s too much of a stretch to say that Linux didn’t become really popular on ARM until the LE port (armel).

As an aside, I note that even the PowerPC is now only supported in mainstream Linux (e.g. Debian) in LE.

“All the world’s a VAX” is still true, it seems.

So, that explains the multitude of “anti-vaxxers”?

B^)

>”Virtually no user code gives a hoot what processor it runs on.”

Okay, so let me just apt-get this package compiled for x86 into my ARM based OS…

Compiling from source is hardly “up a creek without a paddle”. It’s more like up a creek with a paddle, a canoe, a few helpful guides, but not enough bug repellent or sunscreen.

When you make your users compile code, you’ve basically failed as a software distribution system.

“As far as computer architectures go, ARM doesn’t have anything to be ashamed of.”

Ha. Ha ha ha.

ARM has a HUGE problem with venders and their support packages. From:

https://hackaday.com/2018/11/23/five-year-old-bug-spawns-router-botnet-monster/

“It’s not too hard to find a copy of Broadcom’s SDK that contained this vulnerable code. That SDK was dated 2007, but based on the Linux kernel from 2003, release 2.4.20. This is one of the outstanding problems in the industry. Rather than working to upstream support for a chipset into the kernel, manufacturers release an SDK based on an old fork of Linux. Because this “works”, there is very little rush to use more recent software, even when there are known vulnerabilities in the old code. This widespread sharing of low quality and dated code leads to situations like this one, where over 100 devices are vulnerable to an attack.”

This is a MASSIVE problem that affects not just ARM, but just about every architecture on every SoC from every vender.

The solution of course, is to get venders to open-source and upstream all their code…..but that’s not going to happen.

To what ends? Nobody’s going to bother to fix it anyhow – they’ll just grab it for free, hack it about a little, and then there’s two dozen slightly differently buggy versions out there and the problem just became worse.

Meanwhile, the bad guys just look at the code and find the bugs – just as freely.

Wrong. The difference with open source is everyone can see the code, despite the hackers. The reality is that hackers work harder on closed source code because they can find an exploit and keep it secret for a long time, using it strategically. Open source code has way too many eyes on it and any holes will surely be patched up before they are compromised. Coders scratch and claw at each other to get code changes made to the master. The bigger the project, the more secure it will be. Look at crypto, it’s not super mainstream but the source is open and very few exploits have been successfully carried out. Hackers have a pretty big bounty on it too, because really easy anonymous money is on the line. Open source is just way too much exposure than most product engineers are worth devoting time to. There are IP’s to hold secret for competitive reasons, too.

Then why do critical bugs remain for years and years in OSS?

Very few people are competent, willing, and interested enough, or paid enough, to hunt and expose bugs in all the Open Source software that exists out there. Often the black hats are paid by governments or criminal organizations, while the white hats are paid by nobody and just doing it in their free time. The more technical and esoteric the bugs become the fewer people are even able to understand what’s going on, so the “way too many eyes” argument is moot – those eyes are blind.

A lot more highly competent and highly motivated people are interested in poking holes in the software to steal something or break something. Having the source code readily available just makes their jobs easier.

Freescale are pretty good at mainlining their stuff. They also have “officially supported” kernels with paid for support. It seems they’re rarely touched after the initial release of the silicon and I’ve not come across any examples of backported security patches so far (though I haven’t really been looking, admittedly).

Can confirm. Over the last couple of years I’ve worked with recently released Layerscape parts and as of last spring only needed to create a small handful of patches (mostly for Ethernet PHY and other external hw) to go against mainline for kernel and U-Boot. Even their proprietary FM packet engine works fresh from upstream and has a BSD licensed firmware blob.

Only catch is that you really do need their pricey CodeWarrior tools ($5k/yr license with margining tools + $500 JTAG debugger) when designing a new board. That’s the only way currently to gin up the RCW headers for the bootloader and margin the DDR bus.

ARM != venders[sic] and their support packages

Indeed, however, you cannot buy chips from ARM.

I suppose if Amazon are having their own chips made, for their own servers, they’ll be on top of having the software work properly. Vertically integrated, all in-house.

Yeah, I saw that article too. So? That has literally nothing to do with the ARM architecture at all. You may as well blame it on the type of power grid it uses.

Tons and tons of those cheap routers don’t run on ARM but on MIPS. So the problem is maybe not the hardware…

I’d like to see how Amazon physically sets these up in the datacenter. Blade servers? My last job was DC tech and Atom machines were a nonstarter. Most customers wanted higher performance Xeons in order to host VMs, the smaller clients got VMs on said machines, and almost nobody got Atom dedis. Maybe Amazon can find a place for lower power but we couldn’t.

There is a great deal of call for plentiful, small, lightweight, low power systems that run microservices.

It really would be pretty cool if provisioning was done by literally turning on more systems. The potential savings for a bursty service could be quite large.

As ARM tech and fabrication have advanced, they’re making mobile phone chips with 8 or 12 cores now, where 4 are basic everyday-use, 2 or 4 are low-power, standby-use, and 2 or 4 more are high-power, faster cores for when the pedal is to the metal. These can switch on and off quickly and seamlessly. So if you want burst capability, and even low-power snooze mode, that’s all already been done. So ops per watt can be pretty near optimal if that’s what they want.

This looks like an ‘all in one’ type of solution that hopes user-land is abstracted far enough away to not be affected with what has prevented ARM from getting into the datacenter so far.

In the last round, the service provider had to try and make use of the hardware and software provided to them by the chip makers. The simple issue of not being able to run a particular Linux distro of choice was just one of the issues that prevented adoption. In this case, Amazon is both the chip maker and service provider so they have full control.

To be fair, this looks a bit like what is going on in the DL cloud where companies like Google and Nvidia are targeting frameworks like Tensorflow and building the rest of the stack down to the chips. It kind of reminds me of the old ‘supercomputer’ world.

But I don’t see this as affecting companies that are not interested in rolling their own hardware unless Amazon is feeding the necessary IP into Open Compute. Even then, my experience working with Intel and working at Cisco in servers is that providing customers with the choices they need requires a number of relatively open standards like those for storage interfaces, bus interfaces, firmware interfaces, management interfaces, etc.

Admittedly, I don’t know much about EC2 and how portable apps written for Amazon’s cloud are to other service providers such as Google or Microsoft. If things aren’t portable now, then discussions of vendor lock via this hardware are moot. But if current software is portable, then I wonder if this new server hardware makes it more difficult to take an EC2 application and move it to another company’s service.

Break into the desktop market? ARM started in the desktop market!

ARM is basically a continuation of the BBC Micro.

(Well, not really, but there’s a lineage there)

There are two more contenders. The first is good old MIPS, which can be found in many appliances which are too cheap to use ARM. The other is RISC-V. It’s new, and it will probably soon be as cheap as MIPS (no IP fees for the core). There is a chance that RISC-V may also be more secure, which in the case of servers can’t be overestimated.

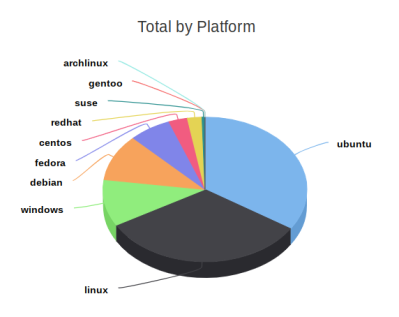

Naive question: in the “AWS Operating System Usage” what is the “Linux”-Platform? (Seeing that all but one of the platforms there are Linux). Is that Slackware? Or Amazon Linux?

Amazon linux is based on CentOS which is the open source version of RHEL

Oh, so about half of AWS Cloud uses Redhat derivatives? Wow.

General availability is key… When anyone (not just Amazon) can buy decent mid-range ARM servers with respectable amounts of memory I think it’ll have a fighting chance on the server end (the x86 monoculture is a new thing on the server side, unlike on the client side where it is super well-established).

Some folks I know have a few racks full of these at the office…

https://www.marvell.com/server-processors/thunderx-arm-processors/

I wonder if Tony Stark’s “JARVIS” runs on Marvell processors?

B^)

It’s not availability of the systems, it’s the ability to tailor the hardware. The arm world is very much an embedded systems ecosystem where you are expected at the very least to own the entire device from the board up to the OS. For many companies, there is ownership of some amount of IP in silicon as well which means getting silicon fabbed for your device.

In PCs, many different vendors own different parts of the system and they all interact with each other based on a set of standards.

ARM generally doesn’t make sense for most companies providing cloud services. Hardware design is not in their business model and it’s better to only claim responsibility for a limited amount of software on the device (just look at the home router situation to see why taking responsibility for everything might be a bad idea).

But what I see as the big takeaway here is that Amazon sees AWS as mature enough with a large enough customer base to ‘appify’ it. They are trying to take the developer model of the Apple App Store or the Google Play store to the cloud.

Interesting side note about ARM is the quiet entry into linux based ARM OS of Oracle.

https://www.oracle.com/technetwork/server-storage/linux/downloads/oracle-linux-arm-4072846.html

No-one in their right minds will touch anything provided by Oracle with a mile-long pole.

Cavium (now owned by Marvell) produces server processors, and there are a few vendors selling servers with these already in.

If power isn’t an issue, what about FPGA’s ? Specifically, what about improving the logic cells to the point you ALMOST have an Intel clone CPU?

If you have everything but the microkernel ready to roll, you improve the efficiency [compared to a standard FPGA]. If combining 4 to 6 “blocks” gives you a 68000 clone, or x86 clone, etc. then you can combine that with a few ARM cores for a reconfigurable main CPU.

“If power isn’t an issue”

Power is explicitly the issue here! They aren’t switching because it’s fun, they are switching because it uses less power which costs less money. Seriously… “If power isn’t an issue”… *sigh*

Indeed. Power consumption is the number one consideration in building data centers. Siting of a data center is largely determined by the availability of cheap electric power AND by climate, since every watt dissipated has to be pumped out of the building as well, with lower ambient temperature being a major advantage. But whether or not an ARM-based server can provide more practical computing per watt than x86 is something to be determined in the real world, not from benchmark tests.

Yeah, didn’t Google build a huge data center in Norway to take advantage of the cooler weather?

(Less air conditioning costs)

And isn’t cooling the reason Microsoft has explored underwater data centers?

Why not RISC-V instead.

Hah! Came here to say this.

Probably because this ARM shift was in the works years before the recent surge of interest in RISC-V, and possibly because the architecture is/was more mature at this/that point in time.

But you can bet there are some people back at Amazon right now discussing that. Whatever adaptations they had to make to their stack, to make it support ONE additional architecture besides x86, I’m sure it’s now a lot easier to further support MANY more architectures.

At some point, things start to segment up too much and it complicates things from a provisioning and availability perspective, but my gut says that’s around the half-dozen-architectures mark. Figure they already offer different sorts of x86 instances, with and without various types of GPUs and stuff, so this support is probably already baked in and well-understood too.

“Nobody denies this is an enormous amount of power to consume, but it’s largely seen as a necessary evil.”

Reversible computing might help

https://en.wikipedia.org/wiki/Reversible_computing

Or not using Silicon.

“Metal–Air Transistors: Semiconductor-Free Field-Emission Air-Channel Nanoelectronics”

https://pubs.acs.org/doi/10.1021/acs.nanolett.8b02849

And of course using Memristors.

Some have mentioned this — but the key driving factor in all of this is power consumption, not price of the hardware.

You can get more giga-porks per watt with ARM, and that apparently is the bottom line in a datacenter.

But I look in my crystal ball and see more ARM on the desktop.

Amazon Thinks A Farm is Bigger Than a Scone, and rightly so, they tend to be.

I mean… https://en.wikipedia.org/wiki/Apple_A12

“But if we’re talking about what one might consider “traditional” computers, such as desktops, laptops, or servers, ARM is essentially a non-starter. ”

If you’re only looking at the main (central) processor, then yes.

However, consider what is controlling the hard-discs, network adaptors, USB controllers and a whole gamut of supporting peripherals – a significant number of them will have a number of ARM cores in them, not just one. So it’s possible to say that there are more ARM cores than x86 ones in desktop computers!

I wonder what the breakdown is between ARM and 8051 chips; there are a lot of them as well.

‘RISC-V’ is becoming THE ‘go-to’ RISC platform for displacing x86 hardware.

Which is scaring the living bejabbers out of Arm Holdings.

Wonder when Arm Holdings’ next round of fake news regarding RISC-V is scheduled to be released.