The failed launch of Soyuz MS-10 on October 11th, 2018 was a notable event for a number of reasons: it was the first serious incident on a manned Soyuz rocket in 35 years, it was the first time that particular high-altitude abort had ever been attempted, and most importantly it ended with the rescue of both crew members. To say it was a historic event is something of an understatement. As a counterpoint to the Challenger disaster it will be looked back on for decades as proof that robust launch abort systems and rigorous training for all contingencies can save lives.

But even though the loss of MS-10 went as well as could possibly be expected, there are still far-reaching consequences for a missed flight to the International Space Station. The coming and going of visiting vehicles to the Station is a carefully orchestrated ballet, designed to fully utilize the up and down mass that each flight offers. Not only did the failure of MS-10 deprive the Station of two crew members and the experiments and supplies they were bringing with them, but also of a return trip which was to have brought various materials and hardware back to Earth.

But there’s been at least one positive side effect of the return cargo schedule being pushed back. The “Spaceborne Computer”, developed by Hewlett Packard Enterprise (HPE) and NASA to test high-performance computing hardware in space, is getting an unexpected extension to its time on the Station. Launched in 2017, the diminutive 32 core supercomputer was only meant to perform self-tests and be brought back down for a full examination. But now that its ticket back home has been delayed for the foreseeable future, NASA is opening up the machine for other researchers to utilize, proving there’s no such thing as a free ride on the International Space Station.

Off-World, Off-The-Shelf

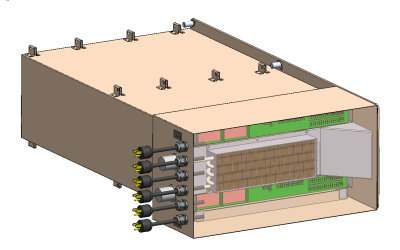

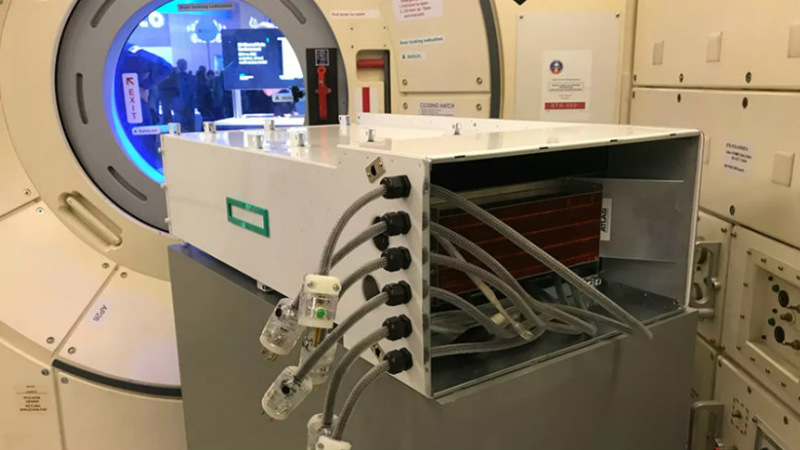

To be sure, the Spaceborne Computer is the most computationally powerful machine currently operating in space by a wide margin. But unlike most previous computers intended for extended service in space, it doesn’t rely on bulky shielding or specifically “rad hardened” components. It uses largely stock components from HPE’s line of high performance computers, specifically Apollo 4000 servers loaded with Intel Broadwell processors. The majority of the custom design and fabrication went into the water-cooled enclosure that can integrate with the Station’s standard experiment racks and power distribution system.

Instead of physically shielding the system, the Spaceborne Computer is an experiment to see if the job of defending against the effects of radiation could be done through software and redundant systems. Cosmic rays have been known to corrupt storage devices and flip bits in memory, which are situations the Spaceborne Computer’s Linux operating system was specifically modified to detect and compensate for. During its time in space the computer has also had to deal with fluctuating power levels and regular drops in network connectivity, all of which were handled gracefully.

Which is not to say the machine has gone unscathed: ground controllers have noted that nine of its twenty solid-state drives have failed so far. After only a little more than a year in space, that’s a fairly alarming failure rate for a technology that on Earth we consider to be a proven and reliable technology. A detailed analysis of the failed drives will be conducted whenever the Spaceborne Computer can hitch a ride back down to the planet’s surface, but as with most unexpected hardware failures in space, radiation is considered the most likely culprit. The findings of the analysis may prove invaluable for future deep-space computers which will almost certainly be using SSDs over traditional disk drives for their higher energy efficiency and lower weight.

Keeping it Local

Whether on the Station or a future mission to the Moon or Mars, there’s a very real need for high-performance computing in space which boils down to one simple fact: calling home is difficult. Not only is the bandwidth of space data links often anemic compared to even your home Internet connection, but they are notoriously unreliable. Plus, as you get farther from Earth, there’s also the time delay to consider. At the Moon it only takes a few seconds for signals travelling at the speed of light to make the trip, but on Mars the delay can stretch to nearly half an hour depending on planetary alignment.
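To put rough numbers on that delay, here is a minimal sketch that simply divides distance by the speed of light. The distances are approximate values assumed for illustration (the mean Earth-Moon distance, and a close and a far Earth-Mars separation), and the function names are hypothetical.

```python
# Light-time delay for a radio signal, assuming straight-line travel in vacuum.
SPEED_OF_LIGHT_KM_S = 299_792.458  # exact by definition

# Approximate distances in km (assumed for illustration only).
MOON_KM = 384_400        # mean Earth-Moon distance
MARS_NEAR_KM = 54.6e6    # Earth-Mars at a close approach
MARS_FAR_KM = 401e6      # Earth-Mars near maximum separation


def one_way_delay_s(distance_km: float) -> float:
    """Seconds for a signal to cover distance_km at the speed of light."""
    return distance_km / SPEED_OF_LIGHT_KM_S


def round_trip_delay_s(distance_km: float) -> float:
    """Seconds to send a request and get the reply, ignoring processing time."""
    return 2 * one_way_delay_s(distance_km)


if __name__ == "__main__":
    targets = [("Moon", MOON_KM), ("Mars (near)", MARS_NEAR_KM), ("Mars (far)", MARS_FAR_KM)]
    for name, km in targets:
        one_way = one_way_delay_s(km)
        round_trip_min = round_trip_delay_s(km) / 60
        print(f"{name}: one way {one_way:.1f} s, round trip {round_trip_min:.1f} min")
```

Under these assumptions the Moon is a little over a second away each way, while Mars ranges from roughly three minutes to a bit over twenty minutes each way.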

Even just one of these problems is enough of a reason to process as much data as possible onboard the vehicle itself rather than sending it back to Earth and waiting on a reply. Your Amazon Echo might be able to get away with offloading computationally heavy tasks to servers in the cloud while still providing you seemingly instantaneous results, but you won’t have that luxury while in orbit around the Red Planet. As astronauts get farther from Earth, they must become increasingly self-reliant. That’s as true for their data processing requirements as it is for their ability to repair their own equipment and tend to their own medical needs.

While astronauts on the International Space Station don’t have much of a delay to contend with, they don’t want to saturate their data links either. HPE imagines a future where the feeds from the Station’s Earth-facing 4K cameras could be processed onboard in real time, searching for things like weather patterns and vegetation growth. In perhaps the most mundane application, a high powered computer aboard a spacecraft could work in conjunction with the vehicle’s radio to provide rapid compression and decompression of data; offering a relatively cheap way to wring more throughput from existing communication networks.

First in Class

Space is a fantastically cruel place. From the stress of launching into orbit to operating in a micro-gravity environment, hardware is subjected to conditions which are difficult to simulate for Earth-bound engineers. Sometimes the easiest solution is to simply launch your hardware and observe how it functions. It’s what Made in Space did with their 3D printer, and now what Hewlett Packard Enterprise has done with their server hardware.

When the Spaceborne Computer finally hitches a ride back down to Earth sometime in 2019, researchers will have to make do with the Station’s normal complement of computers: various laptops and the occasional Raspberry Pi. But now that NASA and HPE have gone a long way towards proving that largely off-the-shelf servers can survive the ride into space and operate in orbit for extended periods of time, it seems inevitable that history will look back on the Spaceborne Computer as the first of many such machines that explored the Final Frontier.

Waiting to see how the first quantum computer would fare in such an environment.

It both worked perfectly and failed spectacularly.

B^)

Only when you look at it.

No, then it has to decide :-)

Are you certain?

I saw the display shown in the title picture at Supercomputing in Dallas. They borrowed the ISS model built for the TV Show “The Big Bang Theory” to give it a matching environment. HPE sure is proud of these two machines.

“Not only is the bandwidth of space data links often anemic compared to even your home Internet connection, but they are notoriously unreliable. ”

Kind of like real internet connections. :-D But seriously what they learn can be applied here as well.

Limited bandwidth and notoriously unreliable pretty much describes my home internet. They left “and more expensive than any other industrialized country in the world” out, though

You took the words right out of my mouth!

You must be from Germany

Of course it’s “limited” but with a limit of 75Mbit/s it’s quite OK. Although in the evening Youtube sometimes needs some time for buffering.

They just upgraded the ISS, the ground stations and the relay satellites (yes, there’s a whole network of relay satellites out there) to 300 Megabit/s. The ISS has a faster connection than most households on Earth.

They also have more demand than most households on earth, so that’s probably quite reasonable.

I wonder if it has a public IP address

I wonder if it has a networked printer… See if the youtube wars can extend into space.

I wonder if it has a speech synthesiser.

Ob: “I’m sorry, Dave…”

Space-Bitcoin anybody?

Sp-itcoin?

>” After only a little more than a year in space, that’s a fairly alarming failure rate for a technology that on Earth we consider to be a proven and reliable technology.”

Who does and who doesn’t. It’s well known that SSDs are sensitive to radiation, and they’re fragile as it is with up to 30% over-provisioning to deal with the inevitable wear and tear. How else are you going to get an MLC chip with a maximum of 5,000 erase cycles per block to last for the life of the device?

Since they are lifetime warranted, it’s not an issue, if only the seller is paying for the transport under RMA…

That’s why you have to go with larger structures- less sensitive to radiation… and controllers that are in triplicate? Triple triplicate? Wouldn’t that be a fascinating design.

1986 is on the line, and a mister Tandem NonStop would like a word with you, if you have a moment.

” It’s well known that SSDs are sensitive to radiation…”

Really?

I wondered about that a few years ago, and I had the means, so I tried it.

I put 1.2 GB of random data on a 2 GB SD card (I said it was a few years ago…)

I blasted it with 2000 rads of 80 kVp x-rays: several times lethal dose to humans, equivalent to a few centuries of normal (ground level) background radiation.

I compared that 1.2 GB of data with a copy I had made prior to exposure.

Not a single one of those ten billion bits flipped. Not ONE.

Now, these were x-rays, not cosmic rays, but it makes one seriously question the “sensitive to radiation” claim.

You are actually looking at the data after the faulty bits have been error corrected by the storage device. 8 bits of data are no longer stored as 8 bits any more; the data densities are so high that failed bits are a certainty on every single read. So Reed-Solomon codes are used to recover multiple failed bits (e.g. https://thessdguy.com/tag/reed-solomon/ ). You really need access to the CPU inside the SSD, which has access to the underlying storage, to see the true change in the error rate.

All modern electronics are extensively tested against X-Rays to prevent data loss at security checkpoints. You can put your phone into one of those X-Ray machines for a day. X-rays are also routinely used during production to verify correct manufacturing.

Are you sure X-Rays have enough energy to flip these bits? A single cell on a 2GB flash card is huge.

The issue with NAND is when the parts are biased in a rad environment. The charge pumps for erasing are sensitive to destructive SEU. We typically minimize power-on time on NAND parts when they are not actively in use.

If you want to “see” what happens to your RAM, SSD or any other digital device in a radiation field, next time you’re at your dentist: turn your smartphone camera on, put it in a dark bag or cover it with a coat, have them point the x-ray head at the camera lens and fire a few x-rays off on different settings. I got my local shop to crank the machine up to max and hit it several times. Each makes an interesting video of “snow” to watch.

What you should see if you’re using a CCD style camera is random noise (snow) that has interesting characteristics. The x-ray heads at work that I have “tested” have a unique signature. Just watching the video of each shot you can see an initial pulse of moderate intensity, a ramp to a peak of high intensity, a settling down to normal intensity and finally a taper to nothing, all in a 1-2 second exposure. That’s way more than is used for teeth, but it’s easy to get an interested tech to fiddle with the settings and let you get some cool shots.

Personally that told me all I need to know about what happens when radiation hits electronics. Any bit in any part can flip. In my case I could see different color pixels fire etc. Overall the phones all survive, but they show that even low intensity x-rays can and will do some amazing stuff. Compared to, say, a chest x-ray or a CT, dental radiography is a small amount of radiation, but that’s only part of the story.

Something to be aware of is the “photoelectric” effect. @ 80kVp the photons only have so much energy, and if the device is hardened to some point it’s unlikely you will see a reaction. However, if you change the “color” of the beam to, say, “120kVp” or even more, then you’re likely to start to impart more energy to the photons and more interactions can occur. It’s similar to how shining a brighter light (more rads) on the subject didn’t seem to cause more damage: it’s not the amount of radiation that matters. I.e.: “electrons are dislodged only by the impingement of photons when those photons reach or exceed a threshold frequency (energy). Below that threshold, no electrons are emitted from the material regardless of the light intensity or the length of time of exposure to the light.”

So you can kill your memory with a single cosmic ray, but after a year in a CT machine your memory might be fine.

At work I pay attention to solar flares because of this and it’s a reason getting ECC memory is important if you don’t want too many issues.

“Something to be aware of is the “photoelectric” effect. @ 80kVp the photons only have so much energy”

*snort!*

Silicon has a K edge of 1.8 keV. 80 kVp X rays have a mean energy in the ballpark of 50 keV, dozens of times more than required to strip even core electrons off silicon by the photoelectric effect.

The photoelectrons you’re talking about are the outer shell electrons, bound by about a single electron volt, about the energy of a 1100 nm photon. X rays are typically 50,000 times more than that.

A single X ray photon interacting in a camera pixel (or a memory cell, for that matter) will liberate hundreds of electrons, equivalent to hundreds of absorbed light photons. No wonder you see snow. It’s doing exactly what it is designed for!
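The ratios in this comment can be checked with the photon energy relation E = hc/λ. The sketch below is only a back-of-the-envelope check: the 1.8 keV K edge and the 50 keV mean energy are taken from the comment as inputs, the value of hc is rounded, and the names are hypothetical.

```python
# Rough check of the energy ratios quoted above, using E = h*c / wavelength.
HC_EV_NM = 1239.84  # Planck constant times speed of light, in eV*nm (rounded)

SILICON_K_EDGE_EV = 1_800   # figure quoted in the comment
MEAN_XRAY_EV = 50_000       # "ballpark" mean energy for 80 kVp, per the comment


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy in electron volts of a photon with the given wavelength."""
    return HC_EV_NM / wavelength_nm


near_ir_ev = photon_energy_ev(1100)              # about 1.13 eV
ratio_to_k_edge = MEAN_XRAY_EV / SILICON_K_EDGE_EV
ratio_to_near_ir = MEAN_XRAY_EV / near_ir_ev

if __name__ == "__main__":
    print(f"1100 nm photon: {near_ir_ev:.2f} eV")
    print(f"X ray vs. silicon K edge: {ratio_to_k_edge:.0f}x")
    print(f"X ray vs. 1100 nm photon: {ratio_to_near_ir:,.0f}x")
```

With these inputs the X-ray photon carries a few dozen times the K-edge energy and tens of thousands of times the energy of a 1100 nm photon, the same order of magnitude as the figures quoted.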

MLC NAND flash is a real hack job under the hood and a lot of things are done to give the illusion of reliability.

Technically it’s not even storing data in a traditional digital sense but as several analog voltages.

For example, to get 3 bits per cell you need eight voltage levels.
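The relationship is just a power of two: each extra bit per cell doubles the number of voltage levels the controller has to tell apart. A minimal sketch, with the usual industry labels (SLC, MLC, TLC, QLC) used here only as illustrative names:

```python
def voltage_levels(bits_per_cell: int) -> int:
    """Number of distinct charge levels needed to store bits_per_cell bits."""
    return 2 ** bits_per_cell


if __name__ == "__main__":
    # Labels are the common marketing names, listed for illustration.
    for label, bits in [("SLC", 1), ("MLC", 2), ("TLC", 3), ("QLC", 4)]:
        print(f"{label}: {bits} bit(s) per cell -> {voltage_levels(bits)} levels")
```

The more levels that are packed into the same voltage window, the smaller the margin between neighbouring levels, which is why small amounts of charge disturbance matter more at higher densities.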

“In perhaps the most mundane application, a high powered computer aboard a spacecraft could work in conjunction with the vehicle’s radio to provide rapid compression and decompression of data; offering a relatively cheap way to wring more throughput from existing communication networks.”

They aren’t already doing that?!?!?!

I know I was thinking the same thing.

I thought they were smart.

If they’re not, then I guess they would be having big data transfer problems.

They are smart but have to be very cautious or conservative in what they do. It’s not like they can send up a nerd to fix it.

Pick me, pick me,,

Certainly not with anything this powerful. Most of the computers in space are decades old tech.

They are. And even if they didn’t, one would never use such an inefficient and unreliable device to do it.

For about 10 or so years all HTML sent from and to web browsers gets (un)zipped by some intermediate layer. There are also ways to turn this off if needed and possibly also to control some of its features; compression algorithms might change.

Storing data compressed on HDDs has been regular technology since the 80’s or so. I forgot what those DOS programs were called. Sometimes this also speeds up disk throughput, if the computer is fast enough to (de)code the data stream in real time.

drvspace.exe

Ah, yes. I remember drvspace.exe. Using it almost guaranteed corrupt, or at the very least garbled, data. Perhaps it became more reliable in time, but it left me with a bad taste in my mouth toward data compression on hard drives, and to this day I never turn on drive compression. I haven’t needed it anyway. If my memory is correct, drvspace.exe came about in a time when computers were often limited to a single 20 MB HDD. That’s barely enough space to run DOS 6 and Windows 3, in my experience. Things have changed slightly since then…

Or folder compression which is still built into Windows (I just checked and it’s still there in Win10).

I’ve heard that these days CPUs are so fast that it’s quicker to compress and write a small amount of data than to write a larger amount uncompressed. I’ve not tested though.

To do compression you need RAM, and once you go about 700km above the Earth’s surface the Van Allen radiation belts make RAM fail.

The Apollo 11 in 1969 had 36K words of ROM (copper wire wound through magnets) and 2K words of magnetic core RAM (15-bit wordlength + 1-bit parity). So 72 KiB of ROM and 4 KiB of RAM with 6.25% used to find bit flips. And the space shuttle in 1983 had 424 kilobytes of (magnetic core ~ 400,000 instructions per second) RAM. The shuttle was upgraded in the 1990’s to battery backed up silicon based RAM just over 1060 KiB of RAM which was clocked 3x faster!
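The conversions in this comment are easy to verify. The sketch below assumes, as the comment does, a 16-bit stored word (15 data bits plus 1 parity bit) and 1K = 1024 words; the names are hypothetical.

```python
# Converting the Apollo word counts quoted above into KiB.
DATA_BITS = 15
PARITY_BITS = 1
WORD_BITS = DATA_BITS + PARITY_BITS  # 16 bits stored per word


def words_to_kib(words: int) -> float:
    """Storage in KiB for a given number of 16-bit words."""
    return words * WORD_BITS / 8 / 1024


rom_kib = words_to_kib(36 * 1024)                # 36K words of rope ROM
ram_kib = words_to_kib(2 * 1024)                 # 2K words of core RAM
parity_overhead_pct = 100 * PARITY_BITS / WORD_BITS

if __name__ == "__main__":
    print(f"ROM: {rom_kib:.0f} KiB, RAM: {ram_kib:.0f} KiB, parity: {parity_overhead_pct}%")
```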

The New Horizons spacecraft, launched in 2006 for a flyby study of the Pluto system and then other Kuiper belt objects, had 16 MiB of RAM (It transmits data back to earth at 0.5 bps!). Even the Parker Solar Probe launched this year has 16 MiB of RAM; to be fair, it is using the same RAD750 CPU with 16 MB SRAM, 4 MB EEPROM, and 64-KB Fuse Link boot PROM.

Compression removes redundancy and most of the time you actually want to add additional bits, not take them away e.g. https://en.wikipedia.org/wiki/Mariner_9#Error-Correction_Codes_achievements

Rope memory was used. Also known as LOL memory.

http://drhart.ucoz.com/index/core_memory/0-123

Twistor memory was a short-lived branch. Right up there with bubble.

https://en.wikipedia.org/wiki/Twistor_memory

Regarding redundancy: It is useless, if you do not know exactly how it is introduced. With printed text or acoustic speech you have quite some redundancy, but most important our brain is trained to use it.

For digital data the redundancy has to fit exactly to the ECC algorithm. So most often data is first compressed to remove unusable redundancy and then line coded and ECC coded and perhaps interleaved (against burst errors) before it gets transmitted over or stored on media with low reliability.
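The interleaving step mentioned here can be sketched with a simple block interleaver. This is a generic illustration, not any particular mission's scheme: the function names and the choice of `depth` codewords laid out row by row are assumptions made for the example.

```python
# Block interleaver: spreads a burst of errors across several codewords so that
# each codeword sees only a few bad symbols, which its ECC can then correct.

def interleave(data: bytes, depth: int) -> bytes:
    """Treat data as `depth` equal-length codewords (rows); send column by column."""
    assert len(data) % depth == 0, "data must split evenly into `depth` codewords"
    width = len(data) // depth
    return bytes(data[r * width + c] for c in range(width) for r in range(depth))


def deinterleave(data: bytes, depth: int) -> bytes:
    """Inverse of interleave(): rebuild the original row-by-row layout."""
    assert len(data) % depth == 0
    width = len(data) // depth
    return bytes(data[c * depth + r] for r in range(depth) for c in range(width))


def errors_per_codeword(sent: bytes, received: bytes, depth: int) -> list:
    """Count corrupted bytes in each of the `depth` codewords."""
    width = len(sent) // depth
    return [
        sum(sent[r * width + c] != received[r * width + c] for c in range(width))
        for r in range(depth)
    ]


if __name__ == "__main__":
    depth = 4
    original = bytes(range(32))             # four 8-byte "codewords"
    channel = bytearray(interleave(original, depth))
    for i in range(8, 8 + depth):           # a burst wipes out 4 consecutive bytes
        channel[i] ^= 0xFF
    recovered = deinterleave(bytes(channel), depth)
    print(errors_per_codeword(original, recovered, depth))
```

A burst no longer than `depth` symbols lands on each codeword at most once, so a code that can fix a single bad symbol per codeword would survive it.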

No wonder that NAND flash is failing fast; automotive manufacturers ban it from onboard electronics for good reason.

I wonder if MRAM is less susceptible than NAND flash to radiation and temperature changes (Wikipedia says so, but gives no numbers).

That’s an interesting claim (NAND banned from automotive electronics), do you have a reference to cite? I’ll have to doublecheck next time I have the opportunity, but I’m pretty sure most in-dash nav units have several gig of NAND for the maps.

Perhaps for safety-critical ECUs, but certainly not for the infotainment.

Infotainment is indeed using NAND sometimes, but it’s barely what we call automotive. All other CPUs use NOR flash, specified for automotive.

I know of driver assistance systems (for sure safety critical) which use eMMC, NAND, SPI-NOR and SLC NOR Flashes. But perhaps the requirements in the Video subsystem are less stringent. Some wrong pixels probably do not matter that much.

Counter-intuitively, the smaller flash structures are on silicon the more prone to failure they are. So in the ever present push to pack more and more bits in, the endurance goes down and the error rates go up. So, to make SSDs in space work, you would need to make the chips smaller in capacity, larger in size, and preferably in redundant pairs in different locations and orientations to reduce the possibility that a gamma ray can completely obliterate the bits.

How is that counter-intuitive?

One could argue that the smaller a chip is, the smaller is the likelihood of a particle hitting the chip…

… but if you have any clue of physics, this argument is very counter-counter-intuitive…

Seems like an ideal use case for Optane memory, which requires a significant amount of energy for writes to occur… then all you’d have to worry about is physical degradation over time and not bit flips.

Optane is Intel’s brand name for 3D XPoint which is a type of ReRAM or resistivity memory.

It should be more radiation tolerant than NAND flash.

This is not really a new idea. I worked on a triple redundant commercial CPU (Power PC) project for space applications 20 years ago. Main memory was commercial DDRAM with SECDED (single error correction, double error detection) and optional double redundancy, allowing for triple error correction and quad error detection. Only the voting and memory controller chips were radiation hardened, making it much cheaper than a completely rad hard design. I don’t know if any were actually sold and put into space, as I left the company before the design was fabricated.
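The voting step in a triple-redundant design can be sketched as a bitwise majority function. This is a generic illustration of the idea, not the design described in the comment; the function name is hypothetical.

```python
def majority_vote(a: int, b: int, c: int) -> int:
    """Bitwise 2-of-3 vote: each output bit takes the value held by at least two inputs."""
    return (a & b) | (a & c) | (b & c)


if __name__ == "__main__":
    good = 0b1011_0010
    upset = good ^ 0b0000_1000   # one copy suffers a single bit flip
    result = majority_vote(good, upset, good)
    print(f"{result:08b}")       # matches the two healthy copies
```

Because the vote is taken per bit, it tolerates different copies being hit in different bit positions, as long as no single bit position is wrong in two copies at once.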

So the Man-Plus program moves on…..the computers conspired to crash MS-10 to keep one of their own in space…..

Those particles are real.. Witness the cloud chamber video.

And yes, my grandson built one when he was 8. (Last year)

https://youtu.be/FS2pKyRKeYs

This sounds like an extension of the work IBM did in the 90s, using laptops with ECC memory and a “scrubbing” program to continually correct RAM errors when flown on the space shuttle.

ECC or “Registered” ram checks the data prior to most operations.

Correcting single bit errors..

https://en.wikipedia.org/wiki/ECC_memory
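As a toy illustration of single-error correction with double-error detection (SECDED), here is an extended Hamming code over 4 data bits. Real ECC memory typically protects 64 data bits with 8 check bits; the tiny word size and the function names here are assumptions chosen to keep the sketch readable.

```python
# Extended Hamming (8,4) code: corrects any single bit flip, detects any double flip.
# Codeword is a list of 8 bits: index 0 is the overall parity bit, indices 1, 2 and 4
# are Hamming parity bits, and indices 3, 5, 6, 7 carry the data.

def encode(nibble: int) -> list:
    """Encode a 4-bit value into an 8-bit SECDED codeword."""
    code = [0] * 8
    code[3], code[5], code[6], code[7] = [(nibble >> i) & 1 for i in range(4)]
    code[1] = code[3] ^ code[5] ^ code[7]   # covers positions 1, 3, 5, 7
    code[2] = code[3] ^ code[6] ^ code[7]   # covers positions 2, 3, 6, 7
    code[4] = code[5] ^ code[6] ^ code[7]   # covers positions 4, 5, 6, 7
    code[0] = sum(code[1:]) % 2             # makes the total number of 1s even
    return code


def decode(code: list) -> tuple:
    """Return (value, status); value is None when a double error is detected."""
    code = list(code)
    syndrome = 0
    for pos in range(1, 8):
        if code[pos]:
            syndrome ^= pos                 # XOR of the positions holding a 1
    odd_parity = sum(code) % 2 == 1
    if odd_parity:
        code[syndrome] ^= 1                 # single error; syndrome 0 means bit 0 itself
        status = "corrected"
    elif syndrome != 0:
        return None, "double error detected"
    else:
        status = "ok"
    value = code[3] | (code[5] << 1) | (code[6] << 2) | (code[7] << 3)
    return value, status


if __name__ == "__main__":
    word = encode(0b1011)
    word[6] ^= 1                            # simulate a cosmic-ray bit flip
    print(decode(word))
```

A memory "scrubber" would periodically read each word, run a check like `decode`, and write the corrected value back before a second flip can accumulate in the same word.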

What is currently used in extreme radiation environments for a Solid State Recorder (SSR) is stacked SDRAM with Reed-Solomon error correction. So if R-S (16, 12) was used that would be 16 code bytes for every 12 data bytes. Every SDRAM refresh cycle could detect up to 16 flipped bits and set the 96 data bits back to their correct value. The DRAM would typically be created with a 100nm or larger process and the detection and correction circuit would be manufactured using a larger process (150nm to 500nm). When it comes to reducing the effects of ion particles such as protons and electrons, bigger is better. Using continuous multi-bit Error Detection and Correction (EDAC) is an ingenious solution to a difficult problem.