The greatest hardware hacks of all time were simply the result of finding software keys in memory. The AACS encryption debacle, the 09 F9 key that allowed us to decrypt HD DVDs, was the result of encryption keys just sitting in main memory, where they could be read by any other program. DeCSS, the hack that gave us all access to DVDs, was again the result of encryption keys sitting out in the open.

Because encryption doesn’t work if your keys are just sitting out in the open, system designers have come up with ingenious solutions to prevent evil hackers from accessing these keys. One of the best solutions is the hardware enclave, a tiny bit of silicon that protects keys and other secrets. Apple has an entire line of chips, Intel has hardware extensions, and all of these are black box solutions. They do work, but we have no idea whether they contain vulnerabilities. If you can’t study it, it’s just an article of faith that these hardware enclaves will keep working.

Now, there might be another option. RISC-V researchers are busy creating an Open Source hardware enclave: a project to build secure hardware enclaves that store cryptographic keys and other secret information, done in a way that can be accessed and studied. Trust but verify, yes, and that’s why this is the most innovative hardware development in the last decade.

What is an enclave?

Although they may seem like a new technology, processor enclaves have been around for years. The first one to reach the public consciousness was the Secure Enclave Processor (SEP) found in the iPhone 5S. This generation of iPhone introduced several important technological advancements, including Touch ID, the innovative and revolutionary M7 motion coprocessor, and the SEP security coprocessor itself. The iPhone 5S was a technological milestone, and the then-new SEP stored fingerprint data and cryptographic keys beyond the reach of the main SOC in the iPhone.

The iPhone 5S SEP was designed to perform secure services for the rest of the SOC, primarily relating to the Touch ID functionality. Apple’s revolutionary use of a secure enclave processor was extended with the 2016 release of the Touch Bar MacBook Pro and its Apple T1 chip. The T1 chip was again used for Touch ID functionality, and demonstrates that Apple is the king of vertical integration.

But Apple isn’t the only company working on secure enclaves for its computing products. Intel has developed the SGX extensions, which allow for hardware-assisted security enclaves. These enclaves give developers the ability to hide cryptographic keys and the components for digital rights management inside a hardware-protected bit of silicon. AMD, too, has hardware enclaves with Secure Encrypted Virtualization (SEV), and ARM has TrustZone, its Trusted Execution Environment. While the Intel, AMD, and ARM enclaves are bits of silicon on other bits of silicon, distinct from Apple’s approach of putting a hardware enclave on an entirely separate chip, the idea remains the same: you want to put secure stuff in secure environments, and enclaves allow you to do that.

Unfortunately, these hardware enclaves are black boxes, and while they do provide a small attack surface, there are problems. AMD’s SEV is already known to have serious security weaknesses, and it is believed that SEV protects only against accidental hypervisor vulnerabilities, not against malicious hypervisors. Intel’s Management Engine, while not explicitly a hardware enclave, has been shown to be vulnerable to attack. The problem is that these hardware enclaves are black boxes, and security through obscurity does not work at all.

The Open Source Solution

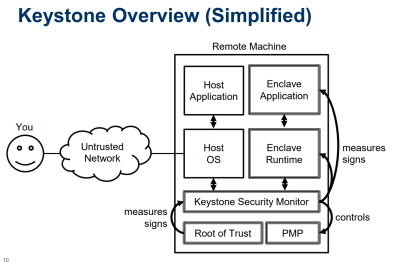

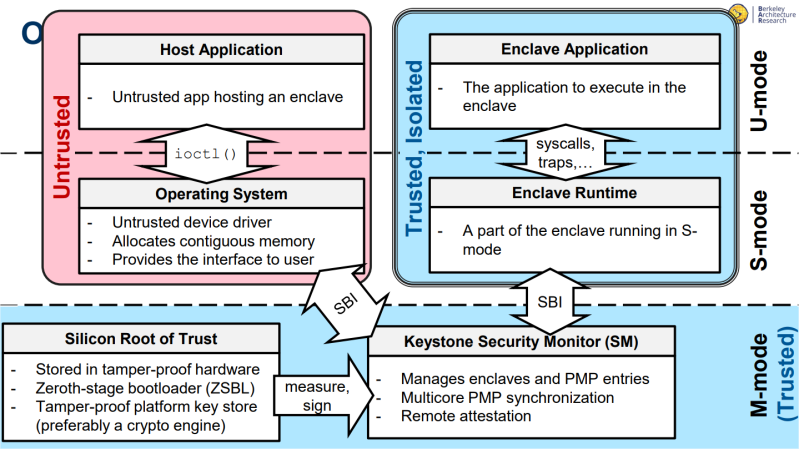

At last week’s RISC-V Summit, researchers at UC Berkeley released their plans for the Keystone Enclave, an Open Source secure enclave based on RISC-V (PDF). Keystone is a project to build a Trusted Execution Environment (TEE) with secure hardware enclaves based on the RISC-V architecture, the same architecture that’s going into completely Open Source microcontrollers and (soon) Systems on a Chip.

The goal of the Keystone project is to build a chain of trust, starting from a silicon Root of Trust stored in tamper-proof hardware. This leads to a zeroth-stage bootloader and a tamper-proof platform key store. Defining a hardware Root of Trust (RoT) is exceptionally difficult: you can always decapsulate silicon, you can always perform some sort of analysis on a chip to extract keys, and if your supply chain isn’t managed well, you have no idea whether the chip you’re basing your RoT on is actually the chip in your computer. However, by using RISC-V and its Open Source HDL, this RoT can at least be studied, unlike the black box solutions from Intel, AMD, and ARM vendors.
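To make the chain-of-trust idea concrete, here is a minimal Python sketch, assuming a simple hash-chain design: an immutable root hash (standing in for the silicon Root of Trust) vouches for the zeroth-stage bootloader, and each stage carries the expected hash of the next one. The `Stage` class, `measure()`, and `boot()` are hypothetical illustrations, not Keystone’s actual boot code.

```python
import hashlib
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Stage:
    name: str
    code: bytes
    next_hash: Optional[bytes] = None  # expected digest of the next stage, baked into this image


def measure(stage: Stage) -> bytes:
    # The embedded next_hash is part of the measured image, so it can't be swapped out
    return hashlib.sha256(stage.code + (stage.next_hash or b"")).digest()


def boot(root_hash: bytes, stages: List[Stage]) -> List[str]:
    """Verify each stage against the hash vouched for by its predecessor.

    root_hash plays the role of the immutable silicon Root of Trust.
    """
    expected = root_hash
    booted = []
    for stage in stages:
        if measure(stage) != expected:
            raise RuntimeError(f"verification failed at {stage.name}")
        booted.append(stage.name)
        expected = stage.next_hash
    return booted


# Build the chain backwards: the last stage first, then the stage that vouches for it
monitor = Stage("security monitor", b"monitor code")
zsbl = Stage("zeroth-stage bootloader", b"zsbl code", next_hash=measure(monitor))
ROOT_HASH = measure(zsbl)  # in real hardware this would be fixed in silicon

print(boot(ROOT_HASH, [zsbl, monitor]))  # ['zeroth-stage bootloader', 'security monitor']
```

Because each stage’s expected hash is part of the image its predecessor measures, tampering with either the code or the embedded hash breaks the chain at that stage.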

The current plans for Keystone include memory isolation, an open framework for building on top of this security enclave, and a minimal but Open Source solution for a security enclave.
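Keystone’s published design enforces memory isolation with RISC-V Physical Memory Protection (PMP), managed by a small security monitor; treat that detail as an assumption here. The toy model below (hypothetical `Region` and `ToyMonitor` classes, not PMP’s real register encoding) only shows the core rule: every physical access is checked against who owns that address range, and anything unlisted is denied.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Region:
    base: int
    size: int
    owner: str  # "os" or an enclave label; these labels are hypothetical

    def contains(self, addr: int) -> bool:
        return self.base <= addr < self.base + self.size


class ToyMonitor:
    """Toy security monitor: checks every access against region ownership."""

    def __init__(self, regions: Iterable[Region]):
        self.regions = list(regions)

    def can_access(self, context: str, addr: int) -> bool:
        for region in self.regions:
            if region.contains(addr):
                return region.owner == context
        return False  # default-deny: unlisted addresses are off-limits to everyone


monitor = ToyMonitor([
    Region(0x8000_0000, 0x1000, "os"),
    Region(0x8000_1000, 0x1000, "enclave-1"),
])
print(monitor.can_access("os", 0x8000_1010))         # False: the OS can't touch enclave memory
print(monitor.can_access("enclave-1", 0x8000_1010))  # True
```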

Right now, the Keystone Enclave is testable on various platforms, including QEMU, FireSim, and on real hardware with the SiFive Unleashed. There’s still much work to do, from formal verification to building out the software stack, libraries, and adding hardware extensions.

This is a game changer for security. Silicon vendors and designers have been shoehorning in hardware enclaves into processors for nearly a decade now, and Apple has gone so far as to create their own enclave chips. All of these solutions are black boxes, and there is no third-party verification that these designs are inherently correct. The RISC-V project is different, and the Keystone Enclave is the best chance we have for creating a truly Open hardware enclave that can be studied and verified independently.

A step above a TPM.

“..and security through obscurity does not work at all.”

Security can be a delaying tactic till the information is no longer useful.

“What is an enclave?”

I was still asking myself after the 3 paragraphs gushing about how Apple products have them.

“The iPhone 5S SEP was designed to perform secure services for the rest of the SOC,”

A 5 paragraph section titled “What is an enclave?” has one line about a specific implementation’s function and that’s sufficient?

One of the general definitions of this word is “Islet, domain, which has its unity, its own characteristics and which isolates itself from all that surrounds it”. I think it is a good summary.

Yes, but this is a technical article trying to define a technical term. I want to know the technical definition, not the general one, even though the general one offers a peek at its purpose.

I felt there was gushing too. “Revolutionary”? More like evolutionary, if even that. And this is coming from a devout Apple user.

But what is our progress on developing burbclaves?

I see “SEP”, and I think “Someone Else’s Problem”.

Thanks Douglas Adams!

My day job is all about HSMs and enclaves and such.

Enclaves and smart cards have a lot in common. Some enclaves, in fact, present interfaces that are compatible with smart cards (things like presenting APDUs over I2C). And JCOP smart cards are programmable in Java (it’s not really the same thing when you boil it down, but the syntax similarities lower the entry barrier for programming). So, yeah, it’s entirely possible to make little custom hardware HSMs with your own API that perform cryptographic operations only when they’ve assured themselves that the conditions are correct to do so.
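For readers who haven’t met APDUs: a command APDU, as defined in ISO/IEC 7816-4, is a four-byte header (CLA, INS, P1, P2) optionally followed by a length byte Lc, the command data, and an expected-response length Le. The sketch below encodes short-form APDUs only; the I2C transport mentioned above is not shown, `build_apdu` is a hypothetical helper, and the AID in the usage line is made up.

```python
from typing import Optional


def build_apdu(cla: int, ins: int, p1: int, p2: int,
               data: bytes = b"", le: Optional[int] = None) -> bytes:
    """Encode a short-form command APDU (ISO/IEC 7816-4 cases 1 to 4)."""
    for name, value in (("CLA", cla), ("INS", ins), ("P1", p1), ("P2", p2)):
        if not 0 <= value <= 0xFF:
            raise ValueError(f"{name} must fit in one byte")
    apdu = bytes([cla, ins, p1, p2])
    if data:
        if len(data) > 255:
            raise ValueError("short APDUs carry at most 255 data bytes")
        apdu += bytes([len(data)]) + data  # Lc, then the command data
    if le is not None:
        if not 1 <= le <= 256:
            raise ValueError("short-form Le must be 1..256")
        apdu += bytes([le % 256])  # Le = 256 is encoded as 0x00
    return apdu
```

For example, `build_apdu(0x00, 0xA4, 0x04, 0x00, bytes.fromhex("A000000001"), le=256).hex()` gives `'00a4040005a00000000100'`, a SELECT-by-AID command that asks for up to 256 response bytes.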

The downside is that they’re essentially Tommy – they’re deaf, dumb and blind except for the API you create, and there’s very little you can do to prevent the API from being used by a simulacrum. The best you can do is things like rate limiting or being intolerant of protocol variance or error or the like.
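A minimal sketch of such a device, assuming a hypothetical `ToyHSM` with a single MAC operation: the key is generated internally and never returned, requests must match one exact format, and repeated protocol errors lock the device. Python can’t truly hide `_key` (real hardware keeps it in silicon), and, as noted above, nothing here stops a simulacrum from sending perfectly well-formed requests.

```python
import hashlib
import hmac
import os


class ToyHSM:
    """Hypothetical device: the key never leaves, only MACs over caller data do."""

    PREFIX = b"MAC:"
    MAX_REQUEST = 64

    def __init__(self, max_errors: int = 3):
        self._key = os.urandom(32)  # generated on-device; no method ever returns it
        self._errors = 0
        self._max_errors = max_errors
        self.locked = False

    def mac(self, request: bytes) -> bytes:
        if self.locked:
            raise PermissionError("device locked")
        # Intolerance of protocol variance: exactly one request format is accepted
        if not request.startswith(self.PREFIX) or len(request) > self.MAX_REQUEST:
            self._errors += 1
            if self._errors >= self._max_errors:
                self.locked = True  # crude rate limiting: too many errors and it stops answering
            raise ValueError("malformed request")
        return hmac.new(self._key, request[len(self.PREFIX):], hashlib.sha256).digest()
```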

That said, such devices are helpful in that they generally don’t allow private or secret keys to leave. So in the context of DRM, it’s possible to prevent someone (or at least, make it supremely difficult) from making off with a decryption key so that they could make unlimited copies of a decryption tool, but it’s not nearly as hard to prevent someone from simulating “legitimate” playback and just capturing the output (that said, schemes like HDCP attempt to raise the bar some more).

The other issue with enclaves is that, although there are a bunch of them to choose from – even available from DigiKey – the actual *useful* documentation for them is only available under NDA from the manufacturer, and if you’re just a small potatoes Maker, the chances of even being given an opportunity to sign the NDA are slim.

we need this in silicon @ ghz speeds, without some “security” blackbox hypervisor on the die, then maybe people can feel secure about the cpu in their machine….

Of course tools like the chipwhisperer can still be used for side-channel attacks on these secure enclaves, so it’s questionable how much security they actually offer.

First make socketation of chips mandatory, so I can put in a chip that I own encryption keys for.

DRM and owning your stuff are absolutely incompatible, and I’m very disappointed that none of these nine comments include the obvious “f*ck DRM” that I would expect from hackaday readers

There are reasons for using secure enclaves other than DRM.

My first thought was that while this could technically be a useful invention, practically this would mostly be used in an anti-user manner. Working DRM would easily be the worst invention in the history of computing, so maybe it’s better if our CPUs don’t have secure enclaves?

too late

DRM is not digital “rights”, it’s digital restrictions, full stop. Its only purpose is to prevent the owner of hardware from fully using their own gear.

True enough, but realize that secure elements have more uses than DRM. For the most part, they’re used for secure boot systems or hardware disk encryption support.

That’s what the title says, to prevent hackers (“consumers”) from getting into your (“MPAA’s”) computer.

Indeed. I hate when discussions about infosec devolve into equating petty IP nannyware with security which is crucial to human safety. Security is about protecting people, not locking a product up so its owner can’t use certain features. Typical of our times that serious work is being done to secure the latter category, yet companies can violate an individual’s privacy with impunity and massive data breaches go totally unpunished because it was only a bunch of prole data, who cares.

From the HAD ABOUT page:

“We are taking back the term “Hacking” which has been soured in the public mind. Hacking is an art form that uses something in a way in which it was not originally intended. This highly creative activity can be highly technical, simply clever, or both. Hackers bask in the glory of building it instead of buying it, repairing it rather than trashing it, and raiding their junk bins for new projects every time they can steal a few moments away.”

I don’t like how this article’s title refers to hackers as big baddies.

Besides that, I’m glad that there is an FOSH enclave.

FWIW: I considered changing the title to “Bad Hackers” but then left it b/c it was long enough as-is, and it would have wrapped into another line. And yeah, I’m admitting that I let aesthetics win out over linguistic purity here.

But yeah. We hear you.

Always thought that was pretty damn snooty. Sometimes those “bad” hackers perform very important functions in society. Sometimes law and ethics are at odds, and the world needs people who know how to successfully flout those rules. Not usually the case of course, but I don’t like how the term is just being reappropriated to mean “literally any computer-toucher that does their job.” That isn’t really an interesting or useful term.

I think now there are too many professional Silicon Valley types who are just out to design and push viable products and consumer trinkets, or people who go around saying “why build that machine, just buy one, it’s only $15k” or “I don’t see any practical application for this”, and that’s frustrating to see.

And in which highly trusted country will this hardware be fabricated?

Not Australia or any other ‘5 eyes’ countries one would hope…

Until RISC-V implementations provide gate-level netlists, test architecture definitions, and LEF/DEF data, they are neither open nor secure.

Isn’t actually providing that the whole point of RISC-V?

Not really. The whole API/RTL is BSD licensed and that’s all. The hardware itself is not.

You can provide open-source, reviewed RTL to a third party that embeds something nasty in the sea of gates of your chip without you noticing, unless you do a full review of the tapeout data.

Ken Thompson, originator of Unix, upon receiving the Turing Award, gave a lecture on trusting the C compiler: https://www.win.tue.nl/~aeb/linux/hh/thompson/trust.html

Similar ideas apply to hardware “compilers”.

Even if they did provide what you asked for, you can’t be sure that’s what was actually taped out. You’d have to inspect the masks, or oversee their creation.

The idea that any hardware that can communicate with the outside in any way is “tamper-proof” is simply a fantasy. “tamper-resistant” sure.

That phrase is supposed to be shorthand.

The rating on safes is in time – the time it takes to break in. Fort Knox would take longer (and cost more) to break into than a crappy little fire-safe I can buy for $100 from Amazon. But I have no doubt that Fort Knox could be broken into given enough resources (say, an invading nation-state’s army).

No security is absolute. But when (mis)using absolute terms, one should take as implied that what is meant is that the cost of overcoming the security exceeds the value obtained by doing so.

I get the feeling that there’s some level of confusion around “enclaves”. Unless I’m missing something, the RISC-V architecture detailed here is more similar to ARM TrustZone or SGX: you run your security-sensitive code on the same CPU as the OS. This allows you to minimize the additional components (for example, you only need one bus fabric, you can share the memory controller, and you can potentially share cryptographic engines).

Apple’s SEP is very different, and works more like an fTPM, or let’s say AMD’s PSP – it’s an on-die security processor, with dedicated resources, potentially having an access path to main memory, but definitely not running on the same CPU. Isolation is the key here – not just architectural isolation (which we’ve seen with Spectre etc. isn’t necessarily all that solid), but true hardware isolation (meaning that “gates that touch insecure code will never touch secrets”).

Running on a separate CPU allows you to optimize for security goals, whereas mainstream CPUs are performance-optimized. Performance-optimized CPU cores are significantly easier to attack with side-channel attacks like DPA; a slow, security/area/power-optimized CPU will leak much less.

With a separate security CPU, the margin of operation can be much, much higher. For performance-optimized CPUs, you try to operate close to the limits because, well, you want the highest performance for user code. This makes it much easier to attack these CPUs with invasive techniques like glitching. Simple undervolting or clock glitching may be sufficient to manipulate results, which, for example when implementing asymmetric crypto, will very quickly be exploitable. A dedicated security CPU can be run much slower, giving more margin here. Some CPUs even have multiple execution engines that check each other and shut down in a controlled way when results don’t match.
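The claim that a glitched asymmetric operation is very quickly exploitable is well illustrated by the classic fault attack on RSA signatures computed with the Chinese Remainder Theorem (often called the Bellcore attack). In the toy sketch below, which uses two small Mersenne primes and a simulated single-bit fault in one half of the computation, a single faulty signature reveals a private prime factor through a gcd. `sign_checked()` shows the verify-before-release countermeasure, a software cousin of the self-checking execution engines the comment mentions. All names are illustrative, and the key is far too small for real use.

```python
from math import gcd

# Toy RSA key built from two Mersenne primes (insecure; for illustration only)
p, q = 2**31 - 1, 2**61 - 1
n, e = p * q, 65537
d = pow(e, -1, (p - 1) * (q - 1))  # modular inverse, Python 3.8+
dp, dq, q_inv = d % (p - 1), d % (q - 1), pow(q, -1, p)


def sign_crt(m: int, fault: bool = False) -> int:
    """RSA-CRT signature; fault=True simulates a glitch flipping one bit of s_q."""
    s_p = pow(m, dp, p)
    s_q = pow(m, dq, q)
    if fault:
        s_q ^= 1  # the "glitch"
    h = (q_inv * (s_p - s_q)) % p  # Garner recombination
    return s_q + h * q


def sign_checked(m: int, fault: bool = False) -> int:
    """Countermeasure: verify the signature and refuse to release it on mismatch."""
    s = sign_crt(m, fault)
    if pow(s, e, n) != m:
        raise RuntimeError("fault detected; signature withheld")
    return s


m = 42
good = sign_crt(m)
bad = sign_crt(m, fault=True)
# The faulty signature is still correct mod p but wrong mod q, so s^e - m shares exactly p with n
recovered = gcd((pow(bad, e, n) - m) % n, n)
print(recovered == p)  # True: the private factor p has leaked
```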

Dedicated crypto hardware helps, but once you share the crypto engines with regular, non-security code, that code could be used to leak loaded secrets (for example, if a crypto context isn’t cleared correctly). You could bank all local state so that “secure” state is unavailable to “insecure” code, but at that point the benefit of sharing the engine in the first place gets smaller. (A significant part of the area, for example, is the temporary memory, not the crypto algorithms themselves.)
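The leak-versus-banking trade-off can be sketched with two hypothetical engine models: one with a single register set that isn’t cleared on a world switch, and one that banks state per world. `key_register` stands in for any residual state, such as a key schedule or scratch memory; a real engine would not expose it this directly.

```python
from typing import Optional


class SingleContextEngine:
    """One register set shared by both worlds; nothing is cleared on a switch."""

    def __init__(self) -> None:
        self.world = "normal"
        self.key_register: Optional[bytes] = None

    def switch_world(self, world: str) -> None:
        self.world = world  # the flaw being illustrated: leftover state survives the switch

    def load_key(self, key: bytes) -> None:
        self.key_register = key

    def read_key_register(self) -> Optional[bytes]:
        return self.key_register


class BankedEngine:
    """Each world gets its own register bank; switching just selects a bank."""

    def __init__(self) -> None:
        self.world = "normal"
        self._banks = {"secure": {}, "normal": {}}

    def switch_world(self, world: str) -> None:
        if world not in self._banks:
            raise ValueError(f"unknown world: {world}")
        self.world = world

    def load_key(self, key: bytes) -> None:
        self._banks[self.world]["key"] = key

    def read_key_register(self) -> Optional[bytes]:
        return self._banks[self.world].get("key")


def leftover_after_switch(engine) -> Optional[bytes]:
    """Secure world loads a key, then normal-world code inspects the engine."""
    engine.switch_world("secure")
    engine.load_key(b"secret-key")
    engine.switch_world("normal")
    return engine.read_key_register()


print(leftover_after_switch(SingleContextEngine()))  # b'secret-key'
print(leftover_after_switch(BankedEngine()))         # None
```

The banked version closes the leak by keeping a full copy of the state for each world, which is exactly the extra area that erodes the benefit of sharing the engine.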

Traditionally, having an extra CPU costs money; these days not a lot in terms of silicon area, but it requires licensing one more (ARM) core. With RISC-V, this argument may go away. You can just take a royalty-free, off-the-shelf RISC-V core, add a bit of SRAM and a boot ROM, and be done.

All of this is of course totally orthogonal to what users perceive as “secure”; I myself would like to retain the ability to disassemble any piece of code ever running on the system, and ideally to have reasonable proof that the hardware isn’t backdoored. But that is equally possible with a separate security processor, with the secure world running on the same core, or with no secure world at all. A lot of the discussed techniques don’t require signed code (“secure boot”) but would work well with just “measured boot” (meaning you can touch all code, but your secrets will be gone if you touch any security-relevant code). That would give everyone equal ability to write the security code, whether proprietary or open source.
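A minimal sketch of the “measured boot” idea, assuming a TPM-style extend operation and a hypothetical per-device secret: nothing refuses to run modified code, but the sealing key is derived from the measurements, so changing any measured component produces a different key and previously sealed secrets become unreadable. `DEVICE_SECRET`, `extend()`, and `sealing_key()` are illustrative names only.

```python
import hashlib
import hmac
from typing import Iterable

DEVICE_SECRET = bytes(32)  # hypothetical per-device secret; a real one would live in silicon


def extend(measurement: bytes, component: bytes) -> bytes:
    """TPM-style extend: new = SHA-256(old || SHA-256(component))."""
    return hashlib.sha256(measurement + hashlib.sha256(component).digest()).digest()


def sealing_key(boot_chain: Iterable[bytes]) -> bytes:
    measurement = bytes(32)  # starts at all zeros, like a freshly reset register
    for component in boot_chain:
        measurement = extend(measurement, component)
    # The key depends on everything that was measured: any change yields a different key
    return hmac.new(DEVICE_SECRET, measurement, hashlib.sha256).digest()


original = sealing_key([b"bootloader v1", b"security monitor v1"])
rebooted = sealing_key([b"bootloader v1", b"security monitor v1"])
modified = sealing_key([b"bootloader v1", b"my patched monitor"])
print(original == rebooted)  # True: same code, same key
print(original == modified)  # False: patched code still boots, but old secrets stay sealed
```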

Kind of why an Amiga X5000 is years ahead of the curve on that issue; they already use U-Boot on a PPC CPU.