When your only tool is a hammer, everything starts to look like a nail. That’s an old saying and perhaps somewhat obvious, but our tools do color our solutions and sometimes in very subtle ways. For example, using a computer causes our solutions to take a certain shape, especially related to numbers. A digital computer deals with numbers as integers and anything that isn’t is actually some representation with some limit. Sure, an IEEE floating point number has a wide range, but there’s still some discrete step between one and the next nearest that you can’t reduce. Even if you treat numbers as arbitrary text strings or fractions, the digital nature of computers will color your solution. But there are other ways to do computing, and they affect your outcome differently. That’s why [Bill Schweber’s] analog computation series caught our eye.

One great example of analog vs digital methods is reading an arbitrary analog quantity, say a voltage, a temperature, or a shaft position. In the digital domain, there’s some converter that has a certain number of bits. You can get that number of bits to something ridiculous, of course, but it isn’t easy. The fewer bits, the less you can understand the real-world quantity.

For example, you could consider a single comparator to be a one-bit analog to digital converter, but all you can tell then is if the number is above or below a certain value. A two-bit converter would let you break a 0-3V signal into 1V steps. But a cheap and simple potentiometer can divide a 0-3V signal into a virtually infinite number of smaller voltages. Sure there’s some physical limit to the pot, and we suppose at some level many physical values are quantized due to the physics, but those are infinitesimal compared to a dozen or so bits of a converter. On top of that, sampled signals are measured at discrete time points which changes certain things and leads to effects like aliasing, for example.
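To make the bit-count argument concrete, here is a minimal sketch of an ideal converter. The function name `quantize` and the 0-3V default range are illustrative assumptions rather than anything from [Bill]'s series, and a real converter adds noise and nonlinearity on top of this.

```python
def quantize(voltage, bits, v_min=0.0, v_max=3.0):
    """Return (code, reconstructed_voltage) for an idealized ADC.

    Codes are spread so that code 0 maps to v_min and the top code maps
    to v_max, which matches the "0-3V in 1V steps" picture for two bits.
    """
    levels = 2 ** bits
    step = (v_max - v_min) / (levels - 1)
    clamped = min(max(voltage, v_min), v_max)  # saturate out-of-range inputs
    code = round((clamped - v_min) / step)
    return code, v_min + code * step


if __name__ == "__main__":
    for bits in (1, 2, 12):
        code, approx = quantize(1.234, bits)
        print(f"{bits:2d} bits: code {code:4d}, reads back as {approx:.4f} V")
```

With one bit the function behaves like a comparator with a 1.5V threshold; with two bits every input lands on 0, 1, 2, or 3V.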

There was a time when it wasn’t clear analog computers wouldn’t dominate. But although they failed to win the computing architecture war, they are still around. As [Bill] points out, analog processing still occurs where you need cheap, fast, or continuous computations.

We’ve only seen part one of the series so far, but it is a great read, laying out the basics and why analog computing is still important. For example, an AC power meter might use a few op-amps to replace a pretty significant digital computing capability.

If you want to be pedantic, yes physical quantities sometimes have quantization — you can’t have a fraction of an electron charge, for example. It is also true that analog computing may introduce some small delay as circuitry settles. But, in general, pushing a digital system to be more precise than the physical quantization or to work faster than an analog computer is very difficult. A few resistors and op-amps are cheap and simple. No real time operating system required. No special techniques for dealing with discrete time measurements.

[Bill] also points out that even a slide rule is an analog computer, of sorts. There was even a time when you could get an analog laptop computer. Sort of.

Nyquist’s theorem says that analog and digital circuits compute the same thing, and that both have the same notion of quantization and representational ability. So the only benefit of analog is if you can get your trade-offs more cheaply that way. But digital gives you much tighter control of your error bounds, and many more things you can compute, all in a convenient, reprogrammable, low-priced unit.

Regarding the AC power meter, I’d take a sampling version over an op amp version any day, as I get low drift and long integration times for free.

The Nyquist theorem defines limits for this to be true. Also, it’s only about frequency.

Fair point, I contemplated mentioning noisy coding theorem, thermal noise and non-linearity lower bounds too, but I figured others would make the point for me. I think Artenz, mike stone and Luke explain it rather well.

Old power meters use a ball and disk mechanical integrator and work very well. That is the thing that is turning on the inside.

Those things are amazing engineering and I own a small collection of them, gathered from various rounds of replacements at my properties. But I can build a functional IoT mains electric meter for ~$10 with plenty of precision, and it fits in a matchbox (apart from the CT). The same straightforward work can build me something else – you don’t get that flexibility with electromechanical or analog designs. That’s my point.

I know power meters with a two-coil meter movement. This works as the magnetic force is the product of the two currents in the coil.

And I know the “Ferraris” energy meter (the normal household electricity meter), which integrates the force as rotation of the disk and drives the counter wheels. But I do not know how a ball could come into use in this.

Well, I must have slipped a cog. I can’t find any that work that way. They were in inertial navigation of early missiles and the differential analyzers, process control, etc.

That’s the only kind I saw, in the U.S., before digital meters took over. They have a large aluminum disc (6″?), the edge of which protrudes from a slot in the front panel, which has a single mark on it to make its rotation more visible. The “mechanical” section of https://en.wikipedia.org/wiki/Electricity_meter describes this in some detail. It’s basically a 2-phase induction motor, with one phase being driven by a current transformer, and the other driven by the line voltage, so that the two are multiplied.

If your amplifier’s circuit noise floor is -30 dB then your precision is roughly the same as a 5 bit A/D converter.

If your amplifiers have only 30 dB of dynamic range, you’re doing it wrong.

Yes, but what is the noise floor of the whole system?

To get decent accuracy, you have to calibrate not only the A/D section but the whole thing! It’s a nightmare for lots of reasons…

Even if you have 96 dB of dynamic range, that’s only 16 bits. And common double precision float has 53 bits.
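The dB-to-bits figures traded above follow from the rule of thumb that each bit is worth 20·log10(2), or about 6.02 dB, of dynamic range. Here is a small sketch of that conversion; the function names are made up for illustration, and it ignores the extra 1.76 dB term that appears in the full sine-wave SNR formula for an ideal converter.

```python
import math

DB_PER_BIT = 20 * math.log10(2)  # about 6.02 dB of dynamic range per bit


def equivalent_bits(dynamic_range_db):
    """Bits of resolution that a given dynamic range (in dB) corresponds to."""
    return dynamic_range_db / DB_PER_BIT


def dynamic_range_db(bits):
    """Dynamic range in dB spanned by a given number of bits."""
    return bits * DB_PER_BIT


if __name__ == "__main__":
    for db in (30, 96):
        print(f"{db} dB is roughly {equivalent_bits(db):.1f} bits")
    print(f"53 bits is roughly {dynamic_range_db(53):.0f} dB")
```

By this measure a 53-bit significand would need on the order of 319 dB of dynamic range, which is why nobody expects an analog signal chain to match it.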

And arbitrary-precision math packages like `bc` are old news.

Being able to calculate lots of digits of resolution doesn’t mean those digits have any useful meaning though. If the numbers represent anything in the real world, you have to deal with characterization of uncertainty in the way the numbers are acquired, and the propagation of uncertainty as you do math with those numbers and others that have their own uncertainty.

In practice, few systems involving calculation need more than 0.1% resolution, and most people would be hard pressed to verify the accuracy of a system with 16-bit resolution (0.0015% or 15ppm).

It’s the same general family of problems as thinking 16-bit ADCs produce 16-bit measurements. Too often it’s more like 7 bits of measurement followed by 9 bits of semi-random noise, and that is assuming the voltage regulator’s combined error and ripple stays within 1% of the nominal voltage.

Mechanical calculation makes it easy to assume numbers mean something without actually being able to back up that assumption.

In many cases you’re dealing with highly non-linear effects where the interesting values are tightly packed in one end of the range and loose at the other. The signals you’re getting change a little, but over many orders of magnitude.

For example, a gypsum block for soil measurement changes from 500 to 1000 Ohms when the ground is moist, but from 1000 Ohms to 100 000 Ohms when bone dry (and up to Mega-Ohms when really properly dustbowl dry). The signal changes over three orders of magnitude, so if you design your meter to read at 0.1% precision over the full scale, your resolution at the wet end of the range will be terrible. You go from “plants are very happy” to “dear god we’re flooding the field!” in one bit.
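The gypsum block numbers can be checked with a quick count of how many meter steps fall inside the "moist" 500 to 1000 Ohm band. This is only a sketch: the function names, the 1000-step (0.1%) meter, and the choice of 1 MOhm as full scale are assumptions made for illustration.

```python
import math


def linear_codes_in_range(r_low, r_high, full_scale, steps=1000):
    """Count the linear meter steps crossed between r_low and r_high."""
    step = full_scale / steps
    return math.floor(r_high / step) - math.floor(r_low / step)


def log_codes_in_range(r_low, r_high, r_min, r_max, steps=1000):
    """Same count for a meter whose steps are uniform in log10(R)."""
    step = math.log10(r_max / r_min) / steps  # decades per step
    low = math.floor(math.log10(r_low / r_min) / step)
    high = math.floor(math.log10(r_high / r_min) / step)
    return high - low


if __name__ == "__main__":
    # Moist band of 500..1000 Ohm, read on a meter that spans up to 1 MOhm.
    print("linear steps:", linear_codes_in_range(500, 1000, 1e6))
    print("log steps:   ", log_codes_in_range(500, 1000, 500, 1e6))
```

On the linear scale the whole moist band fits in a single step, while the logarithmic scale spends about 91 of its 1000 steps there.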

Analog systems excel in that, because it’s relatively easy to make a logarithmic converter, but the problem is drift and temperature dependency. It’s a problem that turns really hairy really quickly, and the easiest solution is to just throw more bits into the A/D converter.

Analog’s day is likely coming back.

Neural nets run faster and at lower power on analog systems than they do on digital ones, so it’s very likely that we’ll see a rise in “analog composers”, if you will, running A.I. programs.

It never left.

Most of the hard problems in designing digital electronics are analog: how do you generate a gigahertz clock? How do you propagate that clock across a microprocessor-sized die without having it reach different sections at different times? How do you finesse setup and hold times to use a fast clock effectively? How do you make sure the gates hit those limits within a given power budget? How do you distribute power across a microprocessor without having one section create voltage spikes in another?

Making circuits digital is an analog problem. The faster and more powerful digital gets, the harder the analog design problems become.

Yup, the parts most people forget.

My dad had a story of an aerospace design that used an analog signal on the input voltage of a DAC as one term of a multiplication, with the digital signal coming in the other side. So in that case I guess it would be an analog computer with a digital input?

Actually, many D/A converters are called multiplying converters – they are designed to do that. They output a current that is proportional to the reference voltage * the digital input. The Analog Devices DAC-08, and the Motorola MC1408 are two examples.
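As a rough illustration of the multiplying behavior described above, an idealized model is just a product of the reference and the scaled digital code. The function below is a hypothetical sketch, not the transfer function from any datasheet: real parts such as the DAC-08 output a current, have offset and gain errors, and restrict the allowed reference range.

```python
def multiplying_dac_output(v_ref, code, bits=8):
    """Idealized multiplying DAC: output = v_ref * code / 2**bits.

    v_ref is the analog factor and code is the digital factor, so the
    converter itself performs the multiplication.
    """
    if not 0 <= code < 2 ** bits:
        raise ValueError("code out of range for this converter width")
    return v_ref * code / 2 ** bits


if __name__ == "__main__":
    # Sweep the analog input while holding the digital code at half scale.
    for v_ref in (0.5, 1.0, 2.0):
        print(v_ref, "->", multiplying_dac_output(v_ref, 128))
```

Holding the code at 128 of 256 makes the stage a fixed gain of 0.5; changing the code changes the gain digitally while the signal path stays analog.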

“Sure there’s some physical limit to the pot, and we suppose at some level many physical values are quantized due to the physics, but those are infinitesimal compared to a dozen or so bits of a converter”

Not true at all. You’d be very lucky to have a pot that can even do 12 bits. Even good ones have non-linearity errors of 0.5%, which only gets worse as they get dirty or worn.

Yes but we’re talking about resolution, not linearity – even a worn pot would have a huge number of values it could ‘settle’ at

Yes, it’s a huge number of values, but they aren’t useful if they are just random fluctuations superimposed on the actual signal of interest.

I can also perform all my digital calculations in single precision, and at the very end convert the result into double precision and add a small random offset. Nobody would consider that an improvement.

Think about a typical 25 turn trimpot – it would have many more than 2^12 / 25 = 163 ‘settings’ per turn. 2° of rotation isn’t exactly a ‘random fluctuation’.

No, the 2 degrees rotation isn’t random. The problem is the non-linearity between angle and resistance, and random noise because the finish of the resistive track is never perfectly smooth or even. As the wiper goes over microscopic bumps or specks of dirt, resistance increases a bit. If there’s a spot with 1% higher resistance, it doesn’t matter how slowly you turn the wiper. As long as it’s over that bad spot, you will have an error.

You can’t turn the pot at arbitrary resolution because of the stick-and-slip behavior of the wiper.

The wiper is stuck on the surface by static friction. You have to turn the pot a minimum distance so the wiper lever bends, gathers enough force to break the static friction and start moving. You may need to turn it 2 degrees to make it slip, but then you’ve already turned it two degrees and it settles down there.

You may randomly get the wiper to stop at any position, but you can’t deliberately turn it less than a certain minimum amount.

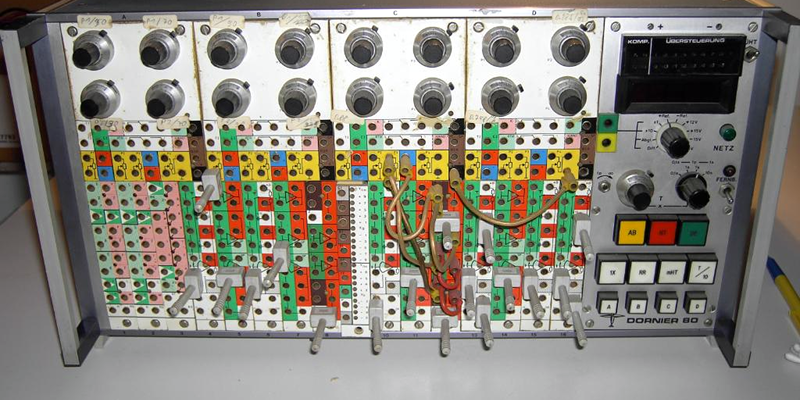

The Beckman pots (like the one in the photo, but bigger) have 10 or 20 turns in a spiral with very fine wire, like nichrome, wound on the spiral mandrel. They have an “analog” counting dial or a digital mechanical one with a 4 digit readout. Some styles were made for analog computers. Probably not more than 4K turns of the resistive wire. They were also used for position feedback in plotters with a cable that wound up on a drum. I had a block with 50 of them once. It weighed more than 200 pounds and was full of rods and clutches so that every one of them could be turned individually to set constants and initial conditions for the op-amp section of an analog computer. All the pots are in a bucket somewhere here. https://www.pinterest.com/pin/553309504199235552/ https://www.ebay.ca/itm/Vintage-Helipot-Precision-Potentiometer-30k-ohm-Model-SA1400A-with-knob/122759129376?hash=item1c9503a920:g:jV0AAOSwdIFXx3Wc:rk:9:pf:0

Pots in analog computers are servo controlled. The servo can be automatic, or a human in the loop. You turn the thing until the DVM reads the voltage you want. You can get 16-18 bits of resolution from a quality 10-turn potentiometer without even trying hard. With a fine-coarse setup with two pots, you are noise limited and it takes a 7-digit DVM to merely adjust it. So yeah, properly designed pots can go a very long way.

Analog computers that are 60 years old already had 4 digit precision and resolution on the servo potentiometers: 0.0000-0.9999 was the typical coefficient range, and the 4-digit DVM was made to have nonlinearities in the single counts at worst.

Where it gets interesting is the precision levels at which potentiometers become nonlinear due to self-heating. That affects their use as dynamic signal scalers. Interestingly enough, a simple analog computer sub-circuit can model the potentiometer thermal behavior and compensate, improving things by factors of 2-10, depending on how much money you have to spend.

Another thing that works well in precision analog computers is the use of differential currents instead of voltages as the physical representation of signals. This lets you operate over 5+ orders of magnitude. The most accurate multiplier implementations natively take ratios of current inputs as the factors in the product. Modern wideband audio op-amps are so linear in the 1-100kHz frequency range used in analog computing that with proper care you can get results that are much better than half-precision floating point for problems on the simpler side of things.

The major obstacle for experimentation today is the absurdly high cost of high performance analog ICs needed for analog computing. In qty 100, a good summer op-amp is $10+, same for JFET op-amps for integrators, and then the multiplier function blocks of similar precision are $30. Good integrator capacitors cost ~$50 since they have to be shielded air capacitors, and they only work for fast time constants. Even silly stuff like the virgin Teflon high impedance standoffs needed to put low leakage integrators together is not cheap. A modern top-of-the-line integrator would be like $100… It would blow the integrators from the 60s-70s vintage machines out of the water, of course.

A modern analog computer that fully utilizes technological advances would not be all that much cheaper than one from the 70s, for the same number of functional blocks (summers, integrators, multipliers/dividers, track/holds, resolvers etc). It could use digital techniques to trim analog components exceptionally well using digitally controlled potentiometers (whether integrated, or reed switched resistors, or rotary ones). It could leverage modern op-amps that would make the 70s engineers drool. It would perform well, and be much nicer to use, but cheap it wouldn’t be.

I’ve put together a resolver with 0.03deg FS error and 10kHz bandwidth… it took months of work, and it’s a couple hundred bucks’ worth of parts, including the custom shielding/enclosure and oven system needed for this sort of performance. Sure, you could drop a dozen of those into a modern analog computer. Or literally use an Arduino UNO with a 16-bit ADC and DAC, and have it done in an afternoon for like $25 in quantity. So for hobbyist applications, analog precision is affordable for some things, but still crazy expensive for others.

If you’re interested in analog computers, may I recommend this site, the Analog Museum: http://analogmuseum.org/

Text is in German and English.

The idea that analog computers have better “resolution” than digital computers may have once been true, but not today. That doesn’t mean that there is no place for analog circuits today, although I am increasingly finding it easier and more cost effective to digitize early and perform mainly digital processing, even when the output is analog. For example, I am building a device to change the response curve of a Mass Air Flow sensor on a car engine. Doing that with an analog circuit would be very difficult, as the mapping function needs to be easily tunable. The digital version is very simple and cheap, with one ADC channel, one DAC channel and a simple mapping table. Some care is needed to ensure fast enough conversion, but that is well within the capabilities of cheap microcontrollers.
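A mapping table of the kind described above might look something like the following sketch. Everything here is hypothetical: the breakpoint values, the 12-bit code range, and the use of linear interpolation between breakpoints are assumptions for illustration, not the commenter's actual design.

```python
from bisect import bisect_right

# Hypothetical breakpoints: (ADC code in, DAC code out), sorted by ADC code.
EXAMPLE_TABLE = [(0, 0), (1024, 900), (2048, 2200), (4095, 4095)]


def remap(adc_code, table=EXAMPLE_TABLE):
    """Map an ADC reading to a DAC code by interpolating between breakpoints."""
    xs = [x for x, _ in table]
    if adc_code <= xs[0]:
        return table[0][1]   # clamp below the first breakpoint
    if adc_code >= xs[-1]:
        return table[-1][1]  # clamp above the last breakpoint
    i = bisect_right(xs, adc_code)  # index of first breakpoint above the reading
    (x0, y0), (x1, y1) = table[i - 1], table[i]
    return round(y0 + (y1 - y0) * (adc_code - x0) / (x1 - x0))


if __name__ == "__main__":
    for reading in (512, 1536, 3000):
        print(reading, "->", remap(reading))
```

Retuning the response curve then means editing a handful of table entries, which is the tunability the analog version would struggle to offer.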

Having said all that, my first foray into ICs was to try to build an electronic slide rule using Plessey SL701 op amps. These were their version of the Fairchild uA701. Before I could finish it, the early digital ICs came along and I moved on to digital. It would be a great retro project to build an electronic analog pocket slide rule. No … I don’t need another project!

Well, log circuits are not very hard (sort of) and summing is natural for op-amps. Dividing, not so much. Cool project though, and hard in the days of uA701 where an integration slower than a millisecond will drift to a rail.

I think the comparisons people are making between analog and digital are leaving out the important, huge body of work on stability in numerical computing. If you don’t do it right, it goes very wrong no matter how many bits of precision. And an op-amp can integrate or differentiate any arbitrary waveform. For digital, if you don’t use sophisticated algorithms for adaptive step size or predictor-corrector or both, and account for various error terms that are inherent in the digital process, you might get small errors, but more often huge errors or instability. Just saying “But I can use 24 bits of ADC in digital.” doesn’t have much meaning. It is great for the precision of reading a sensor, but doesn’t mean much for computing of the sorts done with analog computers.

Well, if log circuits aren’t hard, and neither is subtraction (I assume you’ll agree that subtraction isn’t much harder than summing), then how can dividing be difficult? I know you know this, but just as a reminder, a/b = antilog(log(a) – log(b)), so it’s no harder than multiplication.
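The identity quoted above is easy to check numerically. This is a minimal sketch of the math only, with a made-up function name; it says nothing about the scaling, drift, or temperature behavior of a real log/antilog circuit, and like those circuits it only handles positive inputs.

```python
import math


def log_antilog_divide(a, b):
    """Compute a / b as antilog(log(a) - log(b)), for positive inputs only."""
    if a <= 0 or b <= 0:
        raise ValueError("log/antilog division needs strictly positive inputs")
    return math.exp(math.log(a) - math.log(b))


if __name__ == "__main__":
    print(log_antilog_divide(6.0, 3.0))
    print(log_antilog_divide(1.0, 8.0))
```

Swapping the subtraction for an addition gives multiplication by the same route, which is the point being made: once the log and antilog stages exist, dividing costs no more than multiplying.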

Also, the instability you mention in digital simulations is usually due to time quantization (or other quantization of the independent variable) rather than rounding errors in the dependent variables.
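A small experiment shows how time quantization, rather than rounding, can wreck a simulation. The sketch below applies the plain forward Euler method to dx/dt = -k·x, whose true solution decays smoothly to zero; the function name and parameter values are chosen for illustration. Forward Euler multiplies x by (1 - h·k) each step, so it is only stable when h·k < 2.

```python
def euler_decay(k, h, steps, x0=1.0):
    """Integrate dx/dt = -k * x with the forward Euler method."""
    x = x0
    for _ in range(steps):
        x += h * (-k * x)  # each step multiplies x by (1 - h * k)
    return x


if __name__ == "__main__":
    # Same equation, same 64-bit floats, only the step size differs.
    print("h = 0.01:", euler_decay(k=10.0, h=0.01, steps=500))
    print("h = 0.25:", euler_decay(k=10.0, h=0.25, steps=50))
```

With h = 0.25 the per-step factor is -1.5, so the result oscillates and grows without bound even though every individual operation is carried out in double precision.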

Analog computers made sense when doing real-time calculations reacting to real-world systems, such as gun aiming, bomb dropping, and autopilots. In cases like these, the error in the analog computer can be made smaller than the errors in the input measurements and output actuators. In the early days of computers, analog computers (mechanical or electrical/electronic) could be made cheaper/faster/smaller than digital computers, for a given task. But there was a dark side to this: I worked on an air defense radar system that used 8″ diameter potentiometers and fully analog display scopes to provide height information on aircraft to a digital computer that combined this with other radar systems to send 3D locations to NORAD. It worked just fine, most of the time, but those pots and scopes had to be calibrated DAILY (about a one-hour job for two technicians) to maintain their accuracy. And this was with chopper-stabilized operational amplifiers.

Yeah, you can divide with logs, but stringing log/anti-log circuits together gets pretty complicated and scale factors get out of control. I think I recall you have to put gain and offset stages in between to normalize values to ranges acceptable for the next circuit.

Yes, the instability I mention is all about discrete sampling and the surprising way some of the most simple-looking, straightforward methods go haywire. The methods used to analyze the algorithms (very neat math techniques) show where they go wrong. So instead of Newton, you might need Newton-Feynman, and instead of that you might need Runge-Kutta, and instead of that you might need to alternate steps with RK and predictor-corrector, or RK with adaptive step size (good for nearly everything), or add an error-fixer, since you can find the size of the error and its direction from the analysis.

Anyway, the mechanical analog will survive the mythical EMP – yeah, I think it is about as dangerous as chemtrails.

P.S. I have a set of tool boxes with all the AT&T tools and spares for teletype and network maintenance from the SAGE bunker at an air force base. I just need a teletype!

Back in 2001, I used an Analog Devices AD834 as a programmable attenuator for a 100 MHz square wave, to trim the squelch circuit in a fiber-optic receiver chip product. The AD834 is an analog four-quadrant multiplier that operates by the log-add-antilog method, utilizing the logarithmic current vs. voltage response of a bipolar transistor B-E junction to do the log and antilog parts. It can also be used as a multiplicative inverter, with an additional op-amp. Thus, it could be used for analog division. I don’t remember how linear it was, or its dynamic range, but it was good enough for my purpose, and was dead stable. I was working for one of AD’s competitors at the time, and I think my superiors were unhappy with my design choice. Two of my colleagues had used two other methods in similar cases, which I wasn’t happy with because, from what I could see, neither could really guarantee the accuracy of their methods. One was a discrete transistor approach, the other used a laser diode modulator (which I later found out was essentially a single-quadrant multiplier using a circuit very similar to the AD834). The AD834 was a very expensive chip – something like $50 each, as I recall.

I guess if you’ve held onto those Teletype tools and spares this long, you might as well hang onto them some more. It seems that Teletypes have reached the point where they are highly desirable as authentic “steampunk”. Were you at one of the Air Division HQs, then? I was at radar stations in AAC for a year, 25th AD for a couple years, and the 33rd AD for another couple. Good times.

A childhood friend’s father worked for the “telephone company”. We didn’t learn until decades later that it was the SAGE unit at McChord. He kept all the stuff when he retired, and I inherited it, along with a huge shed full of old tech, when my buddy passed away. I recall that at one time the entire old switch from Eatonville, WA was in their basement.

He probably DID work for the telephone company. At the radar sites, we always had phone company contractors taking care of our wired communications, and the phone wiring and switching required for a regional control center is similar to a small town.

IIRC, a rudimentary divider circuit is literally two BJTs with some buffers for nulling of a common factor. It doesn’t even have to be a matched pair, since the performance is based on gm vs Vbe and is not dependent on beta matching. Factor nullers were not a block typically used in analog computers. Yet it’s a very handy function to have, any time you have ax+b and cx+d and want to get rid of ax and cx automatically. If you want to extract the log of the a/b ratio, you need a matched pair, of course. Factor nullers find use in dual path optical measurements, where the light source has a lot of wideband junk from the resonator itself, the pumping switching supply, etc. Such a nuller is also inherently wideband and depends upon the transistor ft and not op-amp performance. It is extremely hard to implement something of matching performance if you just want to digitize the input signals. So there are niches for analog stuff where it beats digital :)

As a grad student at Auburn, I had the great pleasure to build a Heathkit analog computer H1:

https://www.google.com/url?sa=i&source=images&cd=&cad=rja&uact=8&ved=2ahUKEwj25MaT_5bgAhXtV98KHSaNCPAQjRx6BAgBEAU&url=https%3A%2F%2Fwww.pinterest.com%2Fpin%2F449023025329455605%2F&psig=AOvVaw3KW7y0pSmUbkuVVQVMowRq&ust=1548988817824813

It featured 15 vacuum-tube op amps, a plug-board with banana jacks, and enough knobs to satisfy even the most ardent knob-turner. It didn’t work all that well, though. Too much drift in the amps.

In 1968, I worked for a contractor to NASA-Marshall. They had a _HUGE_ Analog Computer Lab that was (I think) three stories tall, all dedicated to analog computing. Can we say “BIG computers”?

I recall one simulation, a simulation of the Apollo docking maneuver. I got to drive it a few times. Mostly, I crashed it by trying to close to the target too fast, then realizing I couldn’t stop in time.

But those computers truly had their day, and were very central to man-in-the-loop simulations.

Yes, well, most lunar landing simulations end up in crashes, due to either slowing down too late, or slowing down too soon and running out of fuel. Same goes for the digital simulations.

See this awesome PDF series “computing before computers” by Ed Thelen. A large part is about analog computers, especially mechanical ones (integration discs etc).

http://ed-thelen.org/comp-hist/CBC.html

Notch filters in analog are not possible. Digital notch filters are the basis of most research into infinite depths of noise.

Notch filters in analog are quite common — a passive twin-t is just a few resistors and capacitors.

https://duckduckgo.com/?q=twin+t+notch+filter&t=h_&ia=web

Match things well, use a good op-amp, and you can get surprisingly sharp Qs.
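For anyone wanting to try the twin-T, the textbook symmetric version uses two series resistors R with a shunt capacitor 2C, plus two series capacitors C with a shunt resistor R/2, and its notch sits at f = 1/(2πRC). The helper names and the example values below are assumptions for illustration; real notch depth depends heavily on component matching, as noted above.

```python
import math


def twin_t_notch_hz(r_ohms, c_farads):
    """Notch frequency of a symmetric twin-T network: 1 / (2 * pi * R * C)."""
    return 1.0 / (2 * math.pi * r_ohms * c_farads)


def twin_t_parts(r_ohms, c_farads):
    """Component values for the symmetric twin-T built around R and C."""
    return {
        "series_resistors": (r_ohms, r_ohms),
        "shunt_capacitor": 2 * c_farads,
        "series_capacitors": (c_farads, c_farads),
        "shunt_resistor": r_ohms / 2,
    }


if __name__ == "__main__":
    r, c = 26.5e3, 100e-9  # example values that land near 60 Hz mains hum
    print(f"notch at {twin_t_notch_hz(r, c):.2f} Hz")
    print(twin_t_parts(r, c))
```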

It depends on how analog you want to get. Switched capacitor multistage notches on ICs can go 100dB down routinely. So I wouldn’t be so hasty to pronounce the demise of analog.

There’s a reason why a lot of power regulator controllers are analog: they consume much less power that way. If you have a switching regulator that has to drive a 100mW load and can’t use more than 1mW for the controller circuit, then careful analog approach is often all you are left with. It’ll also be cheap, since those analog functions on an IC tend to use less space than comparable digital ones. And due to feedback, the open-loop accuracy often doesn’t need to be very good, although this depends on the topology and application.

Isn’t it all about hybrid in the end? Why is everybody making it a competition between analog and digital, mixing everything up and creating confusion around it? It is about creating high performance computers with hybrid approaches using both analogies and algorithms.