Eyes are windows into the soul, the old saying goes. They are also pathways into the mind, as much of our brain is involved in processing visual input. This dedication to vision is partly why much of AI research is likewise focused on machine vision. But do artificial neural networks (ANNs) actually work like the gray matter that inspired them? A recently published research paper (DOI: 10.1126/science.aav9436) builds a convincing argument for “yes”.

Neural nets got their name because their organization was inspired by biological neurons in the brain. But as we learned more about how biological neurons work, we also discovered that artificial neurons aren’t very faithful digital copies of the original. This cast doubt whether machine vision neural nets actually function like their natural inspiration, or if they worked in an entirely different way.

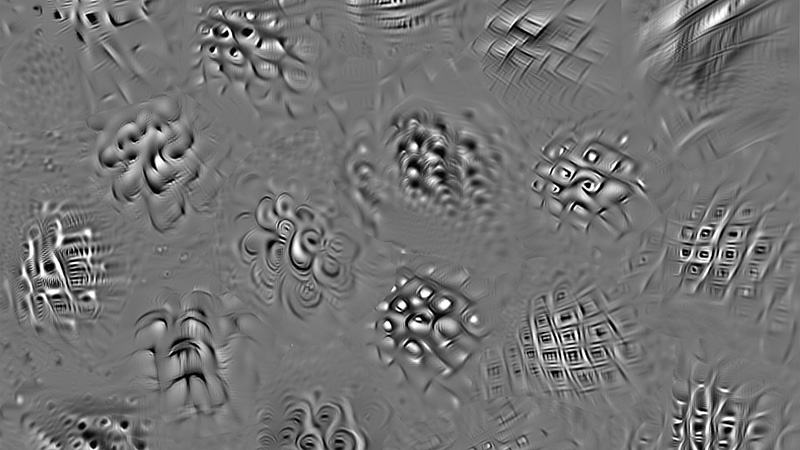

This experiment took a trained machine vision network and analyzed its internals. Armed with this knowledge, images were created and tailored for the purpose of triggering high activity in specific neurons. These responses were far stronger than what occurs when processing normal visual input. These tailored images were then shown to three macaque monkeys fitted with electrodes monitoring their neuron activity, which picked up similarly strong neural responses atypical of normal vision.
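The image-tailoring step can be illustrated with a toy version of activation maximization, which runs gradient ascent on the input instead of on the weights. This is only a sketch and not the paper's model or method: the two-layer network, its random weights, the sizes, and the names `W1`, `w2`, `unit_activation`, and `tailor_input` are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
N_IN, N_HID = 64, 16  # hypothetical sizes: 64 "pixels", 16 hidden units

# Stand-in for a trained network: fixed random weights (an assumption).
W1 = rng.normal(size=(N_HID, N_IN)) / np.sqrt(N_IN)
w2 = rng.normal(size=N_HID)

def unit_activation(x):
    """Activation of the single output unit we want to drive."""
    return float(w2 @ np.tanh(W1 @ x))

def tailor_input(steps=300, lr=0.05):
    """Gradient ascent on the input, keeping every pixel within [-1, 1]."""
    x = np.zeros(N_IN)
    for _ in range(steps):
        h = np.tanh(W1 @ x)
        # Chain rule: d(w2 . tanh(W1 x)) / dx = W1^T ((1 - h^2) * w2)
        grad = W1.T @ ((1.0 - h**2) * w2)
        x = np.clip(x + lr * grad, -1.0, 1.0)
    return x

tailored = tailor_input()

# Uniform random inputs as a crude stand-in for ordinary images.
ordinary = rng.uniform(-1.0, 1.0, size=(500, N_IN))
ordinary_mean = np.mean([unit_activation(x) for x in ordinary])

print("tailored input:", unit_activation(tailored))
print("mean over ordinary inputs:", ordinary_mean)
```

The weights never change here. Only the input is adjusted, which is why the resulting pattern says something about what the chosen unit responds to.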

Manipulating neural activity beyond its normal operating range via tailored imagery is the Hollywood portrayal of mind control, but we’re not at risk of input injection attacks on our brains. This data point gives machine learning researchers confidence their work still has relevance to its biological source material, and neuroscientists are excited about the possibility of exploring brain functions without invasive surgical implants. Artificial neural networks could end up helping us better understand what happens inside our brain, bringing the process full circle.

[via Science News]

“we’re not at risk of input injection attacks on our brains” – unless you count those with epilepsy.

Curiously, a lot of the repeating coloured patterns in ‘deep dream’ images look just like patterns you experience during a visual/ocular migraine. In particular the image in this article.

Yeah, as someone who frequently experiences migraines, I had to look away from the header image because it was very uncomfortable. Determining shapes uses a lot of edgelets, so I guess a combination of strong contrasting edges in various directions and meshes is not all that surprising.

Glad I’m not the only one! And many thanks to the image creator for not making them brighter. Would have looked exactly like the visual effects of my migraines.

I used to have visual migraines. After some years I’ve managed to learn how to mentally stop one when I feel it beginning. I guess we can try to do similar stuff in artificial neural networks. Maybe have a second neural network which will detect misbehavior of the first one and tune it if needed.

I get those occasionally, too. Any advice you could share on the technique? I’d love to be able to prevent them.

First of all, go out for a walk regularly. Get your spine happy. From time to time do a somersault on the ground (not too soft, carpet is OK), so your back feels nice and stretched. Maybe drink water, eat healthy, don’t overuse salt and sugar, sleep enough but not too much, keep yourself in good mental health: the usual stuff everybody knows but nobody does. That’s a good beginning for prevention.

When it started to happen to me, I was so obsessed with the process that I was very focused on the “blind spot” which started forming in my view (similar to what happens when I look directly into the sun, but without looking at the sun). It turns out that when you learn how to ignore it, it does not grow and disappears. At least for me. I know this might sound stupid and too obvious to work, but it works for me. However, it’s not always easy to ignore something scary going on in your head, that’s the catch. The dreaded “don’t think of a pink elephant” problem. Learn to focus on something that’s not directly in the middle of your sight. E.g. lay a newspaper on the table, look right next to it with your eyes and try to read a few letters by focusing your mind on it without actually moving your eyes. That’s a similar process to how you steer the focus away from the visual disturbance going on. After a while your brain “forgets” about the process. Keep your mind busy thinking about something else. When this relief happens, to make sure it does not come back, use the prevention steps described before (because a migraine attack means something is wrong in the first place).

Now, to be honest, I figured out this technique while high on psychedelics during my dumb high school years :-D I noticed that when I was smoking marijuana at least once every few weeks, I didn’t get migraines anymore. Then later, when experimenting with mushrooms and LSD, I learned how to stay cool even with weird and scary stuff going on in my head (I guess that’s one useful life skill psychedelics force you to learn). The brain kind of reveals its structure during such experiences, and it helped me to understand some of it. I even had experiences remotely resembling a migraine, but without the pain (and fear), which might have helped me a lot. As a result I learned the technique described in the previous paragraphs. I haven’t had fully developed symptoms since then, even though I don’t do drugs anymore. However, I’m not really saying you should do this, because it’s easy to mess up your mental health with such experiments if fear overtakes your reality. Especially when doing it uninformed or using dodgy sources.

I hope this helps. I don’t really know if I can pass this skill of stopping migraines on to other people, but one thing is sure as hell: it can be learned. The brain is neuroplastic.

The DeepDream images look like a lot of visual phenomena, such as the phosphenes that appear when we press on our eyes, the patterns that appear when we stare at LED flashes from “trip glasses”, or various “closed eye hallucination” phenomena …

Which has caused me to wonder whether our wetware has middle layers that are a bit like those in CNNs.

Sure. The photosensitive cells (cones and rods) are arranged in a way which does some kind of edge detection right in our eyes, even before the signal gets to the brain… So I guess in the brain it gets even more complex…
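One textbook simplification of this kind of early edge enhancement is lateral inhibition, where each cell is excited by its own input and inhibited by its neighbors. The snippet below is a toy sketch of that idea in one dimension, not a model of actual retinal wiring; the weights in `center_surround` and the test signal are made up for illustration.

```python
import numpy as np

# Brightness along a line of receptors: dark on the left, bright on the right.
brightness = np.array([0, 0, 0, 0, 1, 1, 1, 1], dtype=float)

# Hypothetical center-surround weights: excite the center, inhibit both neighbors.
center_surround = np.array([-0.5, 1.0, -0.5])

# Each output cell sums its weighted three-receptor neighborhood.
response = np.convolve(brightness, center_surround, mode="valid")
print(response)  # nonzero only next to the dark/bright boundary
```

Uniform regions cancel out because the weights sum to zero, so only the boundary between dark and bright produces a response.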

>”This cast doubt whether machine vision neural nets actually function like their natural inspiration, or if they worked in an entirely different way.”

That’s not sufficient to decide the matter. ANNs don’t necessarily need to replicate the exact behavior to replicate the high level functions of natural vision, and even if they do, it isn’t necessarily sufficient to replicate the function.

That is to say, you can make bricks out of clay or plastic – how you lay them decides whether you end up with a house or just a pile of bricks that looks like a house but doesn’t work as a building because you forgot to add doors and windows.

That’s what keeps happening with ANNs. They train the networks, but then when the training is complete they stop the learning function to stop the network from drifting away from the “correct” solution, and that reduces the network to a mechanistic simile that passes a set of synthetic tests, but doesn’t work like the real deal. The real thing is continuously learning, so it has evolved a second function that’s missing from the ANN: self-feedback and reinforcement to keep the learned solutions and improve/improvise on them to adjust for new situations and variations of the theme. In other words, they’ve reached the state of neural networks that resemble the hard-coded instincts found in animals, which are a function of the fixed structure of the network.

Just like a newly hatched chick already knows to run from the outline of a hawk in the sky, these networks are fragile in function because they react the same even if it’s just a cardboard cutout held on a stick. The chick cannot question and reason over the stick because it’s only reacting to the shape of the card.

That said, you can make a house out of plastic bricks:

https://www.youtube.com/watch?v=FrLkHn_RlgY

And, on the point of self-feedback: there are experimental ANNs which do implement this. The general problem is called “catastrophic forgetting”: when the network is trained on one task, setting it loose on another task overwrites the solution to the first and slows down learning on the second task, resulting in behavior that’s totally inappropriate for both until the network has totally forgotten the first solution.
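The overwriting part is easy to reproduce in miniature. In the sketch below a single linear layer stands in for the network, and the two tasks, their data, and the helper names `train` and `mse` are all made up for illustration. It only shows the first task being overwritten, not the slowdown on the second one.

```python
import numpy as np

rng = np.random.default_rng(0)

def train(w, X, y, steps=500, lr=0.1):
    """Plain gradient descent on mean squared error."""
    for _ in range(steps):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w = w - lr * grad
    return w

def mse(w, X, y):
    return float(np.mean((X @ w - y) ** 2))

# Two hypothetical tasks whose ideal weights conflict.
X_a = rng.normal(size=(100, 5))
X_b = rng.normal(size=(100, 5))
y_a = X_a @ np.ones(5)       # task A is solved by w = [1, 1, 1, 1, 1]
y_b = X_b @ (-np.ones(5))    # task B is solved by w = [-1, -1, -1, -1, -1]

w = train(np.zeros(5), X_a, y_a)
error_a_before = mse(w, X_a, y_a)   # near zero: task A is learned

w = train(w, X_b, y_b)              # keep training, but only on task B
error_a_after = mse(w, X_a, y_a)    # large: the task A solution is gone

print("task A error before / after task B:", error_a_before, error_a_after)
```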

Some solutions to the issue involve seeding the network with prior knowledge so that similar problems end up reinforcing and modifying different parts of the network without overlapping, but this isn’t a very robust solution because there’s always bleed-through and eventually the network forgets.

Other solutions loop the network back to itself, so you get a sort of echo chamber that feeds whatever is learned back into the network, which then repeats the same behavior. That forms a higher level of memory, but the difficulty becomes interpreting the result in terms of an external observer. The patterns make sense to the network itself, but for an outsider the pattern is gibberish and they have to interpret what the network is thinking about by correlation. When you show a picture to the network, it no longer gives you an unambiguous answer where particular neurons light up to say “Car” or “Dog”. You then need a second NN to learn what the first one is thinking about when it is repeating a particular pattern, and you’re back to square one.

Do you have the name of the technique used (or, better yet, links to the papers) for the second (echo chamber) solution to catastrophic forgetting? I would like to read more about that technique.

Can’t remember the name of it. Here’s a list of different approaches:

https://en.wikipedia.org/wiki/Catastrophic_interference

From what I recall, it was very much like this approach using two networks as a long and short term memory:

>”the final-storage area sends internally generated representation back to the early processing area. This creates a recurrent network. French proposed that this interleaving of old representations with new representations is the only way to reduce radical forgetting. Since the brain would most likely not have access to the original input patterns, the patterns that would be fed back to the neocortex would be internally generated representations called pseudopatterns. These pseudopatterns are approximations of previous inputs and they can be interleaved with the learning of new inputs.”
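The pseudopattern idea from that quote can be sketched with a toy model. This is not French's dual-network architecture: it is a single linear layer, and the tasks, sizes, and names (`train`, `mse`, `X_pseudo`) are assumptions made for illustration. The point is that the old network labels random probe inputs itself, so the original task A data is never shown again.

```python
import numpy as np

rng = np.random.default_rng(1)

def train(w, X, y, steps=500, lr=0.1):
    """Plain gradient descent on mean squared error."""
    for _ in range(steps):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w = w - lr * grad
    return w

def mse(w, X, y):
    return float(np.mean((X @ w - y) ** 2))

# Two hypothetical tasks with conflicting ideal weights.
X_a = rng.normal(size=(100, 5))
X_b = rng.normal(size=(100, 5))
y_a = X_a @ np.ones(5)
y_b = X_b @ (-np.ones(5))

w_old = train(np.zeros(5), X_a, y_a)  # network after learning task A

# Pseudopatterns: random inputs paired with the old network's own outputs.
X_pseudo = rng.normal(size=(100, 5))
y_pseudo = X_pseudo @ w_old

# Learn task B alone, or interleaved with the pseudopatterns.
w_plain = train(w_old, X_b, y_b)
w_rehearsed = train(
    w_old,
    np.vstack([X_b, X_pseudo]),
    np.concatenate([y_b, y_pseudo]),
)

print("task A error, no rehearsal:", mse(w_plain, X_a, y_a))
print("task A error, with pseudopatterns:", mse(w_rehearsed, X_a, y_a))
```

In this toy the two tasks cannot both be satisfied by one set of weights, so rehearsal only produces a compromise: forgetting of task A is reduced rather than eliminated, and accuracy on task B suffers in exchange.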

I agree. If ANNs worked correctly, they would produce different output for different input.

A real bird and a cardboard cutout are not the same, so the system is just not robust enough, or is poorly designed/unsuitable.

“we’re not at risk of input injection attacks on our brains” … not in this experiment, true, but turn the TV on and it is a certainty. It’s best to keep that obamanation off most of the time if not entirely. It’s enough having to stave off attacks from ads on web sites… Stay on target!… Stay on target!… Don’t follow that flashy light!!