Up until now, running any kind of computer vision system on the Raspberry Pi has been rather underwhelming, even with the addition of products such as the Movidius Neural Compute Stick. Looking to improve on the performance situation while still enjoying the benefits of the Raspberry Pi community, [Brandon] and his team have been working on Luxonis DepthAI. The project uses a carrier board to mate a Myriad X VPU and a suite of cameras to the Raspberry Pi Compute Module, and the performance gains so far have been very promising.

So how does it work? Twin grayscale cameras allow the system to perceive depth, or distance, which is used to produce a “heat map” that is ideal for tasks such as obstacle avoidance. At the same time, the high-resolution color camera can be used for object detection and tracking. According to [Brandon], bypassing the Pi’s CPU and sending all processed data via USB gives a roughly 5x performance boost, enabling the full potential of the main Intel Myriad X chip to be unleashed.
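
To make the depth idea concrete, here is a minimal sketch of the standard pinhole stereo relation, where depth equals focal length times camera spacing (baseline) divided by disparity. The function name, the focal length, and the baseline values are assumptions chosen for illustration; they are not DepthAI specifications and this is not the project's code.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Pinhole stereo relation: depth Z = f * B / d (f and d in pixels, B in metres)."""
    if disparity_px <= 0:
        return float("inf")  # zero disparity means the point is effectively at infinity
    return focal_length_px * baseline_m / disparity_px


# Hypothetical numbers for illustration only (not DepthAI specifications).
FOCAL_LENGTH_PX = 800.0

for baseline_m in (0.04, 0.08):
    for disparity_px in (40, 10, 2):
        z = depth_from_disparity(disparity_px, FOCAL_LENGTH_PX, baseline_m)
        print(f"baseline={baseline_m:.2f} m  disparity={disparity_px:>2} px  depth={z:.2f} m")
```

In this model, a wider baseline produces more pixels of disparity for an object at a given distance, which is why the spacing between the two grayscale cameras matters for depth resolution.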

For detecting standard objects like people or faces, it will be fairly easy to get up and running with software such as OpenVino, which is already quite mature on the Raspberry Pi. We’re curious about how the system will handle custom models, but no doubt [Brandon’s] team will help improve this situation for the future.

The project is very much in an active state of development, which is exactly what we’d expect for an entry into the 2019 Hackaday Prize. Right now the cameras aren’t necessarily ideal; for example, the depth sensors are a bit too close together to be very effective, but the team is still fine-tuning their hardware selection. Ultimately the goal is to make a device that helps bikers avoid dangerous collisions, and we’re very interested to watch the project evolve.

The video after the break shows the stereoscopic heat map in action. The hand is displayed as a warm yellow as it’s relatively close compared to the blue background. We’ve covered the combination of the Raspberry Pi and the Movidius USB stick in the past, but the stereo vision performance improvements of Luxonis DepthAI really take it to another level.
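
As a rough illustration of how a depth value might be turned into a heat-map color like the one described above, the sketch below linearly blends from a warm yellow for near objects to blue for far ones. The function name, the near/far range, and the exact RGB values are assumptions for illustration; the colormap DepthAI actually uses is not stated in the article.

```python
def depth_to_rgb(depth_m, near_m=0.3, far_m=5.0):
    """Blend linearly from warm yellow (near) to blue (far). All ranges are assumed."""
    # Normalise depth into [0, 1] and clamp anything outside the assumed range.
    t = (depth_m - near_m) / (far_m - near_m)
    t = min(1.0, max(0.0, t))
    near_rgb = (255, 220, 0)  # warm yellow for close objects (assumed value)
    far_rgb = (0, 0, 255)     # blue for the distant background (assumed value)
    return tuple(round(n + (f - n) * t) for n, f in zip(near_rgb, far_rgb))


for depth in (0.3, 1.0, 2.5, 5.0):
    print(depth, depth_to_rgb(depth))
```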

Somewhat of an odd choice for a startup to pick hackaday.io as their platform. Can you guys elaborate on your decision to do that over something more traditional?

Hi jrfl,

Thanks for the question! So most of the cool things we’ve seen with embedded machine learning/AI/etc. have been featured here. And also Hackaday projects and the folks building them tend to lead or at least be signals of what’s coming. So folks here often are trying things that will eventually be mainstream – and we want to help those engineers shape the future!

An example is the ESP8266, which is now in everything but was first seen here being implemented in prototypes.

But back to the Raspberry Pi ML projects, there are several interesting ones here, and a lot of interest in them. From killing hornets, to counting bees to help prevent hive collapse, to autonomously guiding robots or sorting parts. So we figured we should have our project here – as we think it’ll be useful for so many who are pushing the embedded AI envelope now.

That, and we’re huge fans of Hackaday.com/io.

That said, we’d love to know what routes you have in mind. As perhaps we should be doing those as well? Maybe we’re missing an opportunity to get awareness and get this in front of folks who would want to use it?

Anyways, would love your thoughts!

Best,

Luxonis-Brandon

Well, the $125K for winning the Hackaday Prize probably helped…

Hi Money Talks!

So my understanding from your comment is that you think that we won the Hackaday Prize and therefore got $125k in winnings already. Is that right?

So we haven’t. And actually the judging for the $125k prize doesn’t start until October 1st, and concludes November 1st. See ‘Dates’ here: https://prize.supplyframe.com/

And (embarrassingly) we didn’t even know about the 2019 Hackaday Prize until we applied to Crowd Supply a couple weeks ago and Darrell there kindly told us that we have a project that would be a great candidate for the prize. He also told us about MicroChip Get Launched, which we’re also going to enter, given that the Microchip LAN/USB part is the key of DepthAI communications.

So we’ve had our Hackaday.io project up since January, and only learned about the Hackaday Prize 10 days ago, and applied 9 days ago. Ooops! We even missed out on the potential to get some smaller prizes in the meantime, and importantly, the help getting awareness of what we’re doing.

We started the project, while already working on CommuteGuardian, when we saw so many articles on Hackaday.com about using neural networks on the Raspberry Pi. Here are some examples:

https://hackaday.com/2019/01/31/ai-on-raspberry-pi-with-the-intel-neural-compute-stick/

https://hackaday.com/2019/01/25/robot-cant-take-its-eyes-off-the-bottle/

And then this one came later (and there are a ton of others that I don’t immediately have links for), but is crazy impressive:

https://hackaday.com/2018/05/30/counting-bees-with-a-raspberry-pi/

So that made us realize that what we’re doing would probably be useful. As it allows ~5x the performance at lower power while also giving you depth information.

And on the Crowd Supply bit. So prototypes of this stuff are SUPER expensive and you need to order ~500 to maybe 1,000 units before the pricing gets reasonable. So we’re working now to make a Crowd Supply campaign, which we hope (if all goes well) to go live in September.

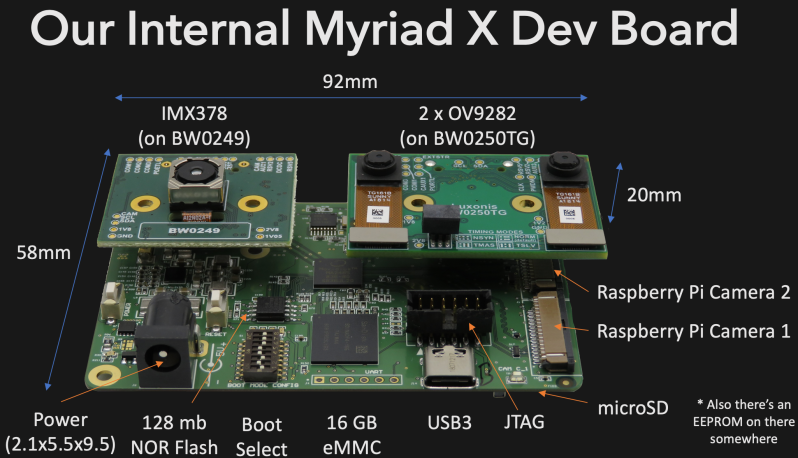

This will allow us to (1) see if people actually want DepthAI for Raspberry Pi and then if they do, (2) order in the ~500-unit to 1,000-unit range to get the price down to a reasonable level per unit. Our first dev. boards cost us $1,500 a unit for example (the dev. boards you see), and we’re HOPING to be able to sell a much-more-capable version (the DepthAI for Raspberry Pi) for $199, by leveraging the volume that Crowd Supply affords (thank-you, Crowd Supply!).

Thoughts?

Thanks,

Brandon

Hey @jfrl just wanted to mention that we have our initial Github up now, and we’re close to being able to launch our CrowdSupply.

https://github.com/Luxonis-Brandon/DepthAI

Also, we weren’t selected as a finalist in the Hackaday prize. Definitely still on Hackaday though, for the reasons I mentioned before.

Ever since the first Pi Noir camera came out, I have wanted to use one or two of them to make a set of night vision goggles for myself, based on an infrared illuminator. This project would enable me to do some of the image processing I have been interested in in real time, making a far more useful cheap night vision setup. I will be watching it with great interest.

Cool idea! And thanks for the interest! Always great to hear. So we haven’t tried yet but we -think- we’ll be able to get the Pi Noir cameras working directly with the Myriad X. We -think- the firmware won’t be too hard. We actually put the hardware provisions for this in our dev boards so that we could take a stab at writing the Myriad X driver/ISP support for these.

We have some major firmware hurdles to surmount first, but if we succeed there we’ll probably quickly circle back to try to make the Pi cameras work.

Oh and I’m not sure (I have to check the spec sheet) but I think the OV9282 we’re using now will work with IR illuminators. Will circle back.

I think they would work with IR floods since they’re near-IR capable already and not likely to be filtered super well. Neat. And thanks for the reply!

Yes, I think so too. And thank you!

Also – we’re currently investigating if our global-shutter gray-scale image sensors (the OV9282) will work w/ IR floods as well. The image sensor will, but we’re not sure how narrow the filter in the lens assembly is. It’s rated for “420nm to 630nm filter, w/ T(min)>=85%, T(ave)>=90%”, but that doesn’t really say whether near-IR will make it through or not, as it doesn’t specify the roll-off of the filter.

So we’re digging! We’ll probably also try some IR illuminators (e.g. Apple’s pattern projector and the D435 pattern projector) to simply see if our cameras see them.

Thoughts?

Thanks again,

Brandon

Interesting project. Especially the part about improving the quality.

I have been looking at the various efforts at using the Pi to create stereoscopic cameras. The main problem with most maker approaches has been that of getting and maintaining sync between the two cameras. Ideally this would be done with genlocking (external sync), so that the sync generators in both cameras would both be “looking at the same clock”, which usually is a crystal oscillator. Do you have any ideas about this?

Hi John –

I worked closely with Brandon on these boards, so I think I can help answer this…

We do use the same clock gen for the stereo pair, but use a separate clock gen for the RGB sensor. For shutter sync, the board comes with a number of jumpered options that allow for a master/slave config, no sync, or an external sync. So, yes… It’s possible to configure all three cameras so they capture an image on the same sync signal.

If you have any more technical questions, ask away!

Thanks,

Luxonis-Brian

Hey John!

Thanks for the kind words and great points!

Yes, so the Myriad X helps a ton on being able to synchronize like you mention. So actually the stereo pairs on the design are driven by the same clock, so that helps, and they also have the capability to have an external sync (or even drive that sync to another sensor or set of sensors). Having so many MIPI lanes and the whole ISP pipeline designed for this stuff makes it so much better and easier. And of course the Myriad X also has the dedicated stereo-disparity-depth hardware (which can actually handle 3 pairs of sensors, all at 720p 30fps, which is nuts). All of this is the root of why we wanted to free the Myriad X from the confines of the NCS2, where the majority of its capability isn’t accessible.

And yes, that’s exactly right: we have a programmable clock that drives both stereo camera modules. And then the second level above this is the strobe, which allows the stereo result to, say, be synchronized on a per-frame basis with the color image sensor (or other sensors, if we made a design with more than 3 sensors).

Happy to answer any/all more questions, here, at our hackaday.io project, discuss.luxonis.com, or my email at brandon at luxonis dot com if any of the stuff is better to talk offline.

Best,

Brandon

Interesting project.

Any idea of the latency (time from the start of the image capture until the data is available in memory for processing) ? (more important than fps for real-time application)

Are the cameras on the board synchronized?

Is there a timestamp/capture start signal available?

Any idea of the price?

Hey Arn!

Thanks for the questions and kind words. :-)

Agreed that latency is WAY more important than FPS for real-time application. Good point and definitely should have mentioned/stressed that in our project page (and will do from here on out).

So we haven’t measured latency yet, and definitely not optimized for it -yet-, but I think, based on looking at some reference stuff, that it’s in the ~1.3ms range – and that’s with feature tracking results included. And the note that went along with that was one of those ‘TO-DO this is really lazy code’, so I would guess from that, that it’s possible to get sub-millisecond latency pretty easily.

Synchronization: Yes, the stereo cameras are synchronized (run off the same clock) and have designed into them the capability to ‘strobe’ synchronize between other sensors and other pairs. So the existing stereo pair can be set as master or slave on this strobe. So our existing (and future) boards, I think (Brian can correct me if I’m wrong) can be set to frame-synchronize between the color sensor and the stereo pairs, at the hardware level.

Is there a timestamp/capture start signal available?

Not currently, but this is a great idea so we’ll plan on implementing it. Do you have any thoughts on desired format/etc.?

On pricing, we’re trying to get it as low as we can, as we think this thing really only has value if folks can easily buy it and start implementing their prototype that will eventually change their industry.

So for DepthAI for Raspberry Pi, we’re hoping to get to $199 for the base ‘Tinkerer’ edition and $249 for the ‘Quick Start’ version.

Tinkerer: $199

That would include 3 cameras (2 global shutter for stereo, 1 12MP for color and 4K video), our Myriad X module, and the carrier board for the Raspberry Pi Compute Module. You’d have to buy the Raspberry Pi Compute Module ($25) and an SD-card with this version.

Quick Start: $249

This includes everything, including the Raspberry Pi Compute Module, the SD-card, and we’ll have done the stereo calibration and stored it to the appropriate spot on the SD-card already. So the thing will boot up and already be running calibrated AI + depth.

So we’re doing a Crowd Supply hopefully in September to try to get a big enough order where we can get the prices this low. So as of now these are our goals and we don’t yet know for sure if we’ll be able to hit them.

Thoughts?

Also, if you have any more specifics on this, or really any other ideas on it, feel free to post here, on our hackaday.io project, discuss.luxonis.com or if there’s anything you need not public feel free to email at brandon at luxonis dot com.

Best,

Brandon

for the latency, some people tried to estimate it for the jetson tx2 board, it would be interesting to have similar experiment results (even if the latency of the screen has some effect too, it could probably be improved by using a uniform color screen (or led with high speed switch on/off) and measuring the time until the module decodes the color) : https://devtalk.nvidia.com/default/topic/1026587/jetson-tx2/csi-latency-is-over-80-milliseconds-/post/5222727/

for the timestamp/start pulse, something to know precisely when the frame capture starts. for info, the raspberry pi emits this signal : https://www.raspberrypi.org/forums/viewtopic.php?t=190314 (but I never tried it).

btw, there may be interest in controlling the camera sync individually. doing a half-frame delay can result in double fps (without 3d) that can be useful for real-time tracking.

Precise frame start sync can also be used to make this kind of device : http://www.cs.cmu.edu/~ILIM/episcan3d/html/index.html
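
The half-frame delay idea mentioned above can be sketched with a little timestamp arithmetic: if the second camera is triggered half a frame period after the first, merging the two streams yields samples at twice the per-camera rate (at the cost of stereo depth, since the frames no longer coincide). The function names and the 60 fps figure are assumptions for illustration, not measured DepthAI behavior.

```python
def capture_times(fps, n_frames, offset_s=0.0):
    """Start-of-capture timestamps for one camera running at a fixed frame rate."""
    period = 1.0 / fps
    return [offset_s + i * period for i in range(n_frames)]


def interleaved_times(fps, n_frames):
    """Merge two cameras where the second is delayed by half a frame period."""
    half_period = 0.5 / fps
    cam_a = capture_times(fps, n_frames)
    cam_b = capture_times(fps, n_frames, offset_s=half_period)
    return sorted(cam_a + cam_b)


# Hypothetical 60 fps sensors: the merged stream has a sample every 1/120 s.
times = interleaved_times(60, 4)
gaps = [later - earlier for earlier, later in zip(times, times[1:])]
print([f"{g * 1000:.2f} ms" for g in gaps])
```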

Wow, thanks, Arn! That CMU Episcan3D and EpiToF are super cool!

And also, you made us realize that latency is something we should be touting. As the Myriad X is specifically designed to allow low latency… in fact the whole chip is architected around that. So much appreciate you bringing that up and making us realize we’re not mentioning probably one of the most important things of this platform.

So we’ll try to replicate this display-to-display latency test, w/ the Myriad X outputting directly to a fast-response-time display.

We’ll also probably take a stab at an oscilloscope-measured variant, where we use the drive signal of a bright light, and then use firmware to drive a GPIO of the Myriad X when it perceives the light. So that one can also see what the latency is getting to code, rather than the latency getting all the way out the monitor.

Thoughts?

Anyways, thanks again!
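
For the oscilloscope-measured variant described above, the post-processing could look something like the following sketch: take the sampled trace of the light's drive signal and the sampled trace of the GPIO, find the first rising edge in each, and convert the sample gap into seconds. The function names, the 1.65 V threshold, the sample rate, and the traces themselves are all assumptions; the traces are synthetic and do not represent any measured result.

```python
def first_rising_edge(samples, threshold):
    """Index of the first sample that crosses the threshold from below, or None."""
    for i in range(1, len(samples)):
        if samples[i - 1] < threshold <= samples[i]:
            return i
    return None


def latency_seconds(light_trace, gpio_trace, sample_rate_hz, threshold=1.65):
    """Time from the light turning on to the GPIO going high, from two scope traces."""
    start = first_rising_edge(light_trace, threshold)
    end = first_rising_edge(gpio_trace, threshold)
    if start is None or end is None or end < start:
        return None  # no usable pair of edges in these traces
    return (end - start) / sample_rate_hz


# Synthetic traces sampled at 1 MHz (made-up data, not a measurement).
SAMPLE_RATE_HZ = 1_000_000
light = [0.0] * 100 + [3.3] * 900  # light drive goes high at sample 100
gpio = [0.0] * 600 + [3.3] * 400   # GPIO goes high at sample 600
print(latency_seconds(light, gpio, SAMPLE_RATE_HZ))
```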

Happy to see you consider the latency as important :)

Oscilloscope variant seems great, it removes the display latency.

Another feature that would be great (but not sure if it’s possible, it may depend on the camera sensor) : being able to keep a high framerate by cropping instead of reducing the resolution. it would be awesome for realtime tracking applications, especially if the camera is far away and covers a large surface.

Great idea. I do know there are region settings you can do with the sensors we’re using for stereo depth so what you describe might in fact be possible.

That said I know 720p is doable at 120FPS on these sensors, which is pretty good.

My understanding is that the max frame rate we can handle doing depth calculation is 90fps but with latency commensurate with 30fps, if I’m understanding the hardware correctly.

Anyways, I’ll look into both.

A very smart move by Intel buying Myriad.

A little bit more info about what is inside the VPU (Vision Processing Unit) chip can be found here:

https://en.wikichip.org/wiki/movidius/microarchitectures/shave_v2.0 and

https://en.wikichip.org/wiki/movidius/microarchitectures/shave_v3.0

Ya those are helpful links for sure! Thanks for sharing them. What’s described in shave_v3.0 I believe is the Myriad 2-era technology, FWIW.

This specific project is my dream come true- I have wanted functional stereoscopic thermal vision since I saw Predator at 13.

Laugh if you want, but it’s an entirely new way to see the world.

drew I love it! Ya and hooking thermal cameras to the Myriad X should be doable. Like 2x FLIR Lepton or their higher-res brother (Boson) would be killer. The whole world, in thermal, in 3D.

We’ve actually wanted to do this. Hopefully if this project is a success we’ll be able to attack making both an infrared-illuminated (and/or pattern-projected) version in addition to a thermal-camera version.

Best,

Brandon

I will shower you with money if this happens, especially if it’s high rez, over 30hz, and can be connected to realtime eyetaps.

I want to put it in a mask and actually experience this

Taking into account high price and low resolution of thermal cameras, it probably makes more sense to use two regular cameras (perhaps with IR illumination) for 3D and one lower resolution thermal camera to color the 3D point cloud or mesh. Two thermal vision cameras are probably not only going to be very expensive, but also not very good for stereoscopic vision for a number of reasons.

Forget costs. Explain why depth perception would be affected, in detail, for any use like this. I understand how thermal sensors work. Would it be an artifact of lensing issues, emitted scatter versus perception of reflected traditional light, causing some strange impossible to perceive 3d heat cloud?

I have been extremely curious about true stereoscopic thermal vision for many years, but almost no one has ever written about this, specifically. Would love more insight.

I could be wrong, but in general thermal images I’ve seen are much less detailed than normal videos of the same objects. Therefore, calculation of the depth map is going to be inaccurate and the results less than impressive. IMO that’s because closely located points on the same object have high chance of being the same temperature and therefore the same color in thermal image. However, it’s possible that it’s just a matter of camera quality and cost.

I believe if the cost is not an issue you can experience stereoscopic thermal vision by using two compact thermal cameras mechanically connected together like a pair of binoculars. It’s probably going to be bulky but still possible to use, and you won’t need to wait until somebody else’s project is done. I know I’m stating the obvious, but for stereoscopic vision (thermal or whatever else) you just need sensors, display and optics; image processing for depth perception can be done by your brain like with regular binoculars.
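
The point above about low-detail thermal images hurting depth calculation can be illustrated with a toy matcher. The sketch below uses a plain sum-of-absolute-differences (SAD) window search along one image row, which is a much simpler technique than the semi-global matching used in real stereo pipelines, and all names and pixel values are made up. On a textured row the matching cost has a single clear minimum at the true shift; on a uniform row every candidate shift costs the same, so the disparity is ambiguous.

```python
def sad_costs(left_row, right_row, x, window, max_disp):
    """SAD matching cost at pixel x for each candidate disparity 0..max_disp."""
    costs = []
    for d in range(max_disp + 1):
        cost = sum(abs(left_row[x + k] - right_row[x + k - d]) for k in range(window))
        costs.append(cost)
    return costs


SHIFT = 3  # true disparity built into the synthetic rows below

# Textured row: distinct pixel values, left view is the right view shifted by SHIFT.
right_textured = [(i * 37) % 101 for i in range(40)]
left_textured = [0] * SHIFT + right_textured[:-SHIFT]

# Flat row: every pixel has the same value, like a surface at uniform temperature.
right_flat = [50] * 40
left_flat = [50] * 40

textured_costs = sad_costs(left_textured, right_textured, x=10, window=5, max_disp=6)
flat_costs = sad_costs(left_flat, right_flat, x=10, window=5, max_disp=6)

print("textured:", textured_costs)  # one clear minimum at the true shift
print("flat:    ", flat_costs)      # all candidates tie, so depth is ambiguous
```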

Right. That approach makes sense. And actually might be able to do such a prototype w/ OpenMV (shoutout to Kwabena and Ibrahim!) as they have the FLIR Lepton working with their system as a module. Just use two of them and a display. Not sure what the display options are w/ OpenMV though.

Ya, I too have been super curious about this as well. And I’m unsure how say the semi-global matching would do on thermal images, say if you have equivalent-resolution 720p thermal image sensors.

Ya I really like that idea of using a single higher-res thermal sensor to colorize onto the point cloud in 3D. So it would be a combination of passive thermal and dual active IR + global-shutter grayscale for the depth map.

How did you guys go about acquiring the movidius chips?