In a recent study, a team of researchers at MIT programmed self-driving cars to identify the social personalities of other drivers, in an effort to predict their future actions and drive more safely on roads.

It’s already been made evident that autonomous vehicles lack social awareness. Drivers around a car are regarded as obstacles rather than human beings, which can hinder the automaton’s ability to identify motivations and intentions, potential signifiers of future actions. Because of this, self-driving cars often cause bottlenecks at four-way stops and other intersections, perhaps explaining why the majority of traffic accidents involve them getting rear-ended by impatient drivers.

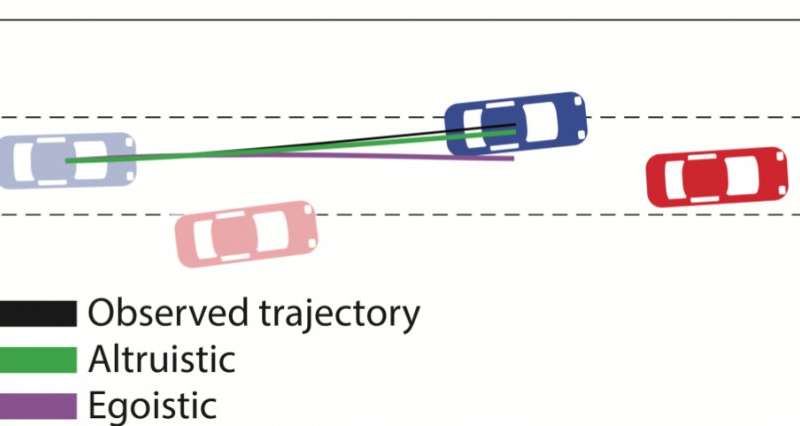

The research taps into social value orientation, a concept from social psychology that classifies a person on a scale from selfish (“egoistic”) to altruistic and cooperative (“prosocial”). The system uses this classification to predict real-time driving trajectories for other cars based on a small snippet of their motion. For instance, cars that merge more often are deemed more competitive than other cars.
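To make the idea concrete, here is a minimal sketch of how social value orientation is commonly written in the psychology literature: as an angle that trades off a driver's own reward against everyone else's. This is not the researchers' code. The function names, the reward numbers, and the cutoff angles between labels are all assumptions chosen for illustration.

```python
import math


def svo_utility(own_reward: float, other_reward: float, svo_angle: float) -> float:
    """Weigh own vs. others' reward by an SVO angle in radians.

    An angle of 0 counts only the driver's own reward (egoistic);
    an angle of pi/2 counts only the other party's reward (altruistic).
    """
    return math.cos(svo_angle) * own_reward + math.sin(svo_angle) * other_reward


def svo_label(svo_angle: float) -> str:
    """Map an SVO angle to a coarse label.

    The 22.5 and 67.5 degree cutoffs are hypothetical, picked only
    to split the 0..90 degree range into three bands.
    """
    degrees = math.degrees(svo_angle)
    if degrees < 22.5:
        return "egoistic"
    if degrees < 67.5:
        return "prosocial"
    return "altruistic"


# Hypothetical merge: forcing the gap gains this driver 1.0 and costs others 2.0.
for angle in (0.0, math.pi / 4, math.pi / 2):
    print(svo_label(angle), round(svo_utility(1.0, -2.0, angle), 3))
```

With these made-up numbers, only the egoistic driver sees cutting in as a net gain, which is the kind of difference a planner could use when guessing whether an approaching car will yield.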

When testing the algorithms on tasks involving merging lanes and making unprotected left turns, the behavioral predictions were shown to improve by 25%. In a left-turn simulation, the automaton was able to wait until the approaching car had a more prosocial driver.

Even outside of self-driving cars, the research could help human drivers predict the actions of other drivers around them.

Thanks [Qes] for the tip!

Sounds like they should track every vehicle by building 3D models of the drivers, passengers and capture images of the license plates along with the make and model of the vehicle and then build up their private database of social personalities … oh no sorry I was thinking of a police state (or that “Don’t be evil” company, or that social advertising company, or the telemetry company, or the …). {sarcasm}Remember that it is not an invasion of privacy (outside of Europe) if only machines look at and use the data {/sarcasm}

You joke, but I would love to be able to build a box that logs licence plates and gives me warnings about local dangerous drivers.

Same thing, plus being able to notify them about their behavior or to “send message” (which is a bad idea because of abuses). Like, hey, only one brake light is still working, and it isn’t the middle one!

However for sure it would be illegal/against privacy to log such data I guess…

It is not like implementing it is in any way complex these days (OpenCV, Python Tesseract, MySQL), but just because mass surveillance is easy does not make it legal. Arson is easy (oxidiser, accelerant, heat) and illegal.

Not exactly mass surveillance, more like personal surveillance. If I had photographic memory I would memorise the licence plate of people who cut me off and remember to keep my distance from that car in the future, but a box that does the same thing would be enough.

If you want your driving behaviour to be private you can achieve that by not driving. The way you drive on public roads is not private data.

“Because of this, self-driving cars often cause bottlenecks at four-way stops and other intersections, perhaps explaining why the majority of traffic accidents involve them getting rear-ended by impatient drivers.”

What? No. They’re getting rear-ended because the self-driving cars follow the traffic laws and dangerous drivers are conditioned to expect other drivers to do dangerous things, like run lights that just turned red.

The solution is not “fix self-driving cars.” The solution is “penalize selfish, dangerous drivers or remove them from the road entirely, temporarily or permanently.”

Citation Needed.

Indeed it is because self-drivers brake unexpectedly. Read up!

https://www.wired.com/story/self-driving-car-crashes-rear-endings-why-charts-statistics/

https://www.aitrends.com/ai-insider/rear-end-collisions-and-ai-self-driving-cars-plus-apple-lexus-incident/

The good news is that self-drivers very rarely rear-end others. But there’s a balance between false-detecting a hazard in front of you, and overlooking the hazard. With the exception of Uber, which had tuned their threshold way down before the car killed someone, the industry has been very conservative. And this means that self-drivers brake unexpectedly when the system mis-perceives an obstacle.
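The balance described here can be shown with a toy example. This is a sketch only: the confidence scores and labels below are made up, and `count_errors` is a hypothetical helper, not part of any real perception stack. A detector reports a confidence for each possible obstacle, and the car brakes when that confidence reaches a threshold.

```python
def count_errors(detections, threshold):
    """Return (false_alarms, misses) for a given braking threshold.

    detections: list of (confidence, obstacle_is_real) pairs.
    A false alarm is braking for something that is not there;
    a miss is failing to brake for a real obstacle.
    """
    false_alarms = sum(
        1 for confidence, is_real in detections
        if confidence >= threshold and not is_real
    )
    misses = sum(
        1 for confidence, is_real in detections
        if confidence < threshold and is_real
    )
    return false_alarms, misses


# Made-up detector outputs: (confidence, was there really an obstacle?)
detections = [
    (0.95, True), (0.80, True), (0.60, False),
    (0.55, True), (0.30, False), (0.10, False),
]

for threshold in (0.2, 0.5, 0.9):
    print(threshold, count_errors(detections, threshold))
```

Braking readily removes the misses at the cost of phantom stops, while braking reluctantly does the opposite. The conservative choice is the first one, which is why the cars behind end up surprised.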

Is it better than ignoring people and plowing through them? Oh yes!

Moreover, if the public _knew_ that self-drivers had a tendency to brake suddenly, and it were obvious which cars were driving autonomously, we could all work around them. “Oh boy, a Google car. Give it extra following distance.” But the companies have no incentive to fess up to their shortcomings, and uninformed people like yourself continue to think that the technology is somehow magically perfect already. I’m sorry to be tough on calling you out here, but it’s a real safety issue.

IMO, automatic cars should have a yellow spinny light on top when in auto mode. Like for wide loads and other vehicles that you have to take extra precautions around.

“The good news is that self-drivers very rarely rear-end others”

Unless it’s Tesla autopilot and it’s behind a firetruck :)

A frequent occurrence for ambulance chasing lawyers?

B^)

Sorry, but there is no excuse for rear-ending, no matter what. Sudden braking is always an option. Something unexpected may happen in front of the leading car, or inside it. We must always leave enough space between our car and the car in front of us that we can safely stop, at the speed we are moving and with the pavement conditions at the moment, before we cover that distance.
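For a rough feel of how much space that is, the textbook estimate adds the distance covered during the driver's reaction time to the braking distance v² / (2·μ·g). The sketch below assumes a 1.5 s reaction time and friction coefficients of about 0.7 for dry and 0.4 for wet pavement. These are illustrative values, not measurements, and the function name is made up.

```python
G = 9.81  # gravitational acceleration in m/s^2


def stopping_distance_m(speed_kmh: float,
                        reaction_time_s: float = 1.5,
                        friction: float = 0.7) -> float:
    """Estimate total stopping distance in metres.

    reaction_time_s and friction are assumed, illustrative defaults;
    real values depend on the driver, tyres, and road surface.
    """
    speed_ms = speed_kmh / 3.6                         # km/h -> m/s
    reaction_distance = speed_ms * reaction_time_s     # travelled before braking starts
    braking_distance = speed_ms ** 2 / (2 * friction * G)
    return reaction_distance + braking_distance


for speed in (50, 100):
    dry = stopping_distance_m(speed)
    wet = stopping_distance_m(speed, friction=0.4)
    print(f"{speed} km/h: dry {dry:.0f} m, wet {wet:.0f} m")
```

Because the braking term grows with the square of speed, doubling the speed more than doubles the gap needed, and a wet road stretches it further.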

If you do that, you’ll pretty much just cause gridlocks and other people are going to jam into the gap you leave.

While sudden braking is always an option, it is not very likely to happen. It’s overwhelmingly more likely that people fail to brake even though they should, than brake for no reason. Even when people do brake, they rarely do a full-on emergency stop.

Smoothly flowing traffic doesn’t cause gridlock. Allowing appropriate braking distance means you can slow down gently even if someone in front is braking hard, and that means the person behind you (assuming they’re also at an appropriate distance) can brake even more gently.

Thank you! Genuinely, thank you for being that guy. Yes, even humans have a tendency to slam on brakes suddenly and no matter the reason for the braking, the driver following too close is at fault for doing exactly that.

When you give space, yes, a bunch of people jump in front of you and it relieves the vehicle density in that lane, allowing traffic to flow. I hope you get a thank you wave from those folks, but maybe that’s just my city.

Totally agree – And people do know. We are not seeing this yet, but will soon – Kidnappings and carjackings – Because now, when the criminal wants to stop their target, whether it be for kidnapping or carjacking, they simply present an obstacle to the vehicle and it complies and stops. This is not true just for self driving cars – Many newer vehicles have this auto braking feature.

I would imagine that it will first become popular to exploit this feature in South America and Mexico where cartel kidnappings and road crimes are the norm, and then it will slowly trickle its way into the more developed countries.

There’s an even better angle to this. You can make the radar based sensors “see” an obstacle with the right type of RF interference. Will we see a handheld device someday, which can be pointed at these vehicles and cause them to stop so that the drivers can be properly robbed, carjacked or kidnapped?

Self-driving cars are not better or safer than human drivers, nor do they stringently stick to the rules at all times. Just because it’s a computer doesn’t mean it’s perfectly competent. Please stop falling for this myth.

Stated another way: until self driving cars make better and faster judgements than humans, the only way to be safe is for them to operate cautiously and to obey all the rules – speed limits, space between cars, stopping on yellows, etc. Like your Uncle Leonard on a Sunday. Except that instead of some warning light, Uncle Len has a hat.

I wonder how far they could get just by looking at the make and color of the cars around. Insurance companies are well aware of the correlations between accident likelihood and make & color, just from statistics, without going into the reasons. On the other hand, maybe that would mean if you drive a green Volvo you would always have autonomous vehicles turning left in front of your path, drive a red BMW and they would keep clear :-P

In the Netherlands:

locate brand of car.

if brand contains audi, bmw or car type is seat leon or vw golf: alertLevel += 42

if car has ridiculous spoiler: alertLevel += 42

if relative speed of cars around you >= 50 km/h: alertLevel += 42

Add a +68 for noisy defective exhaust system.

Also:

I have a green Golf, so I always get to drive straight through. [A pun: in Dutch, “groene golf” also means a “green wave” of traffic lights.]

Trouble is you’d need to get rid of most of the human race. It’s been said that the best piece of safety equipment is the human brain but that’s not who we really are. In the extremes, whether altruistic or egoistic, the vast majority of humanity is self-serving. It’s a rare treat indeed to find a prosocial driver.

This is just some of our psychological and emotional baggage. Now multiply that by our knowledge of the task (driving and the dynamics involved) times the skill level achieved: practically speaking, each of us is encountering an infinite number of variables every millisecond we are on the road. Unless we eliminate ALL of these variables, so-called autonomous driving is an entrepreneur’s wet dream. We would need to spend trillions of dollars on infrastructure alone. We’re being led to believe that we are in the Jet Age of autonomy when, in reality, we are somewhere around Kitty Hawk.

Well. Cynicism is part of the human brain, and so is the bias to weigh bad actors far more heavily than good actors. There are absolutely a great number of prosocial drivers, and humans are actually ridiculously safe when it comes to driving. The rate of accidents in an all-human transport grid is miraculously low. I don’t like it when people make out humans to be terrible and disastrously unsafe, begging the question that computers are by default better. It’s just not true.

Definitely agree with what you say in that self-driving will never work properly unless it’s an exclusively computerized system with no human operators involved, which I’m afraid will likely not happen in the US for a long, long, looooong time. Car companies ingratiated themselves and car culture into the American psyche for a century. It’s not going to be uprooted easily. And these systems, where a backup driver is required and must stay vigilant and ready to take the controls at a millisecond’s notice in order to prevent calamity, are just insane. It’s the least safe design for a system like this, where everyone’s understanding and arguably the entire purpose of autonomous vehicles is to free the driver’s attention, and thus it lulls people into this false sense of security until it wrenches that away at random intervals with very little notice.

Self-driving cars are a boondoggle. They might as well be flying, fusion-powered cars, too. They will take many decades longer than the average person thinks they will. The most capable actors are currently tempering their expectations and strategically pulling out, because it’s a level of investment and R&D that only a national research initiative can really tackle. Moonshots are state-level projects, not corporate. Silicon Valley proves once again that it can’t really innovate on its own; it has to steal research from state projects.

re: spinny light; I have had the EXACT SAME THOUGHT!

fwiw, I recently bought a tesla and I also work for a level-4 ‘hopeful’ driving company (not tesla, though). my experience with my own tesla has shown me that the mix of level-2 (there are no level 3 or 4 cars in the world yet, full stop (lol)) and fully manual cars is what is dangerous. all of one type is what we really want, not the dangerous mix of robots and humans.

so my thoughts were the same. alert the drivers that your car is ‘half human, half computer’ (you get what I mean) and drivers can then make extra allowance (they should!) knowing this. sort of a ‘I might brake suddenly, sorry, not always my fault’ indication.

I never mentioned this to my company and I have never heard this discussed; I don’t think it would fly (so to speak) but I have had the exact same thought. once you buy/own a level 2 car (eating our own dogfood), you really get an appreciation for how much work is STILL LEFT to get to level 4. personally, I don’t think it can happen on normal streets, but I’m happy to be proven wrong. in the meantime, level-2 is quite cool and useful as it is, and if that’s as far as we get, it’s still ok!

“so my thoughts were the same. alert the drivers that your car is ‘half human, half computer’”

As in a “motaur”?

B^)

https://www.facebook.com/progressive/videos/motaur-wishes/446253402815073/

Maybe a simpler task should be completed first before jumping to fully autonomous motorised vehicles. Heavy Duty Robotic Arms for the homes of the CEOs of all the companies. And if they can get the humans to work in the same space as machines autonomously with zero deaths or injuries after a number of years then they could be allowed to work on cheaper models for lobbyists. And then even cheaper models for senators, and then …. and eventually if there are zero deaths and injuries within a static man-machine interface, then they could be allowed to work on physically moving the devices.

The slowdown, and the company CEOs having skin in the game, would attenuate a lot of the release-now-and-patch-later mentality.

Brilliant. A hastily-developed terminator in every disruptor’s home. We need to implement this immediately.

“Even outside of self-driving cars, the research could help human drivers predict the actions of other drivers around them.”

Or, maybe humans should just take driving lessons from other humans who’ve already figured this out…

log plates, and faces…

https://www.youtube.com/watch?v=7p64EgUOqGk

And again, a post made as a reply under another comment doesn’t go where it was created…

Post was aimed for Truth, Henry and Draco.