One of the goals of programming languages back in the 1950s was to create a way to write assembly language concepts in an abstract, high-level manner. This would allow the same code to be used across the wildly different system architectures of that era and subsequent decades, requiring only a translator unit (compiler) that would transform the source code into the machine instructions for the target architecture.

Other languages, like BASIC, would use a runtime that provided an even more abstract view of the underlying hardware, yet at the cost of a lot of performance. Although the era of 8-bit home computers is long behind us, the topic of cross-platform development is still highly relevant today, whether one talks about desktop, embedded or server development. Or all of them at the same time.

Let’s take a look at the cross-platform landscape today, shall we?

Defining portable code

The basic definition of portable code is code that is not bound or limited to a particular hardware platform, or subset of platforms. This means that there shall be no code that addresses specific hardware addresses or registers, or which assumes specific behavior of the hardware. If unavoidable that such parameters are used, these parameters are to be provided as external configuration data, per target platform.

For example:

    #include <hal.h>

    int main() {
        while (1) {
            addr_write(0xa0, LEVEL_HIGH);
            wait_msec(1000);
            addr_write(0xa0, LEVEL_LOW);
            wait_msec(1000);
        }
    }

Here, the hal.h header file would be implemented for each target platform, providing the specialized commands for that particular hardware. The implementation for each platform provides the two functions used here. Depending on the platform, it might use a specific hardware timer for the wait_msec() function, and the addr_write() function would use a memory map containing the peripheral registers and everything else that should be accessible to the application.
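As a rough illustration, one target's implementation of these two functions could look like the sketch below. The `MemoryMap` struct, the `hal_set_memory_map()` helper, the `bool` return value and the numeric values of `LEVEL_LOW` and `LEVEL_HIGH` are assumptions made for this example, not part of any real HAL. On real hardware the base pointer would come from the target's configuration data; here it can point at an ordinary buffer so that the sketch also runs on a workstation.

```cpp
#include <chrono>
#include <cstddef>
#include <cstdint>
#include <thread>

// Hypothetical logic levels, named as in the blink example.
enum Level : std::uint8_t { LEVEL_LOW = 0, LEVEL_HIGH = 1 };

// Hypothetical per-target memory map: base address and size of the
// region the application is allowed to touch.
struct MemoryMap {
    volatile std::uint8_t* base;
    std::size_t size;
};

static MemoryMap g_map = { nullptr, 0 };

// Assumed to be called once by the target-specific startup code.
void hal_set_memory_map(volatile std::uint8_t* base, std::size_t size) {
    g_map.base = base;
    g_map.size = size;
}

// Writes a level to an offset inside the memory map.
// Returns false if no map has been set or the offset is out of range.
bool addr_write(std::uint32_t addr, Level level) {
    if (g_map.base == nullptr || addr >= g_map.size) { return false; }
    g_map.base[addr] = static_cast<std::uint8_t>(level);
    return true;
}

// A microcontroller target would typically use a hardware timer here;
// this hosted version simply puts the calling thread to sleep.
void wait_msec(std::uint32_t msec) {
    std::this_thread::sleep_for(std::chrono::milliseconds(msec));
}
```

The application code stays identical across targets; only this file and the address range handed to `hal_set_memory_map()` would change.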

When compiling the application for a specific target, one could require that a target parameter be provided in the Makefile, for example:

    ifndef TARGET
    $(error TARGET parameter not provided.)
    endif

    INCLUDES := -I targets/$(TARGET)/include/

This way the only thing needed to compile for a specific target is to write one specialized file for said target, and to provide the name of that target to the Makefile.

Bare Metal or HAL

The previous section is most useful for bare metal programming, or similar situations where one doesn’t have a fixed API to work with. However, software libraries defining a fixed API that abstracts away underlying hardware implementation details are now quite common. Known as a Hardware Abstraction Layer (HAL), this is a standard feature of operating systems, whether a small (RT)OS or a full-blown desktop or distributed server OS.

At its core, a HAL is essentially the same system as we defined in the previous section. The main difference is that it provides an API that can be used by other software, instead of the HAL being the sole application. Extending the basic HAL, we can add features such as the dynamic loading of hardware support, in the form of drivers. A driver is essentially its own little mini-HAL, which abstracts away the details of the hardware it provides support for, while implementing the required functions for it to be called by the higher-level HAL.
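The relationship between a HAL and its drivers can be sketched as follows. All names here (`Driver`, `DummyDriver`, `Hal`, `register_driver()`) are hypothetical and exist only for this illustration; a real HAL would define its own driver interface, and the dummy driver stores values in memory rather than touching hardware.

```cpp
#include <cstdint>
#include <map>
#include <memory>
#include <string>
#include <utility>

// Hypothetical driver interface: each driver hides the details of one
// piece of hardware behind the functions the HAL expects.
class Driver {
public:
    virtual ~Driver() = default;
    virtual bool write(std::uint32_t reg, std::uint8_t value) = 0;
    virtual bool read(std::uint32_t reg, std::uint8_t& value) = 0;
};

// Stand-in driver that keeps register values in memory instead of hardware.
class DummyDriver : public Driver {
public:
    bool write(std::uint32_t reg, std::uint8_t value) override {
        regs_[reg] = value;
        return true;
    }
    bool read(std::uint32_t reg, std::uint8_t& value) override {
        auto it = regs_.find(reg);
        if (it == regs_.end()) { return false; }
        value = it->second;
        return true;
    }
private:
    std::map<std::uint32_t, std::uint8_t> regs_;
};

// Hypothetical HAL: drivers are registered at runtime under a device name,
// and callers only ever use the HAL's own API.
class Hal {
public:
    void register_driver(const std::string& device, std::unique_ptr<Driver> drv) {
        drivers_[device] = std::move(drv);
    }
    bool write(const std::string& device, std::uint32_t reg, std::uint8_t value) {
        auto it = drivers_.find(device);
        return it != drivers_.end() && it->second->write(reg, value);
    }
    bool read(const std::string& device, std::uint32_t reg, std::uint8_t& value) {
        auto it = drivers_.find(device);
        return it != drivers_.end() && it->second->read(reg, value);
    }
private:
    std::map<std::string, std::unique_ptr<Driver>> drivers_;
};
```

Code written against `Hal` never sees which driver sits behind a device name, which is what allows hardware support to be swapped or loaded later.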

Generally speaking, using an existing or a custom HAL is highly advisable, even when targeting a single hardware platform. The thing is that even when one writes the code for just a single microcontroller, there is a lot to be said for being able to take the code and run it on a HAL for one’s workstation PC in order to use regular debugging and code analysis tools, such as the Valgrind suite.
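A workstation HAL for this purpose can be very small. The sketch below assumes the same two HAL functions as the blink example and replaces them with a version that records every write and advances a simulated clock instead of sleeping. The names `WriteEvent`, `g_events`, `g_now_msec` and `blink_once()` are made up for this illustration.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical logic levels, named as in the blink example.
enum Level : std::uint8_t { LEVEL_LOW = 0, LEVEL_HIGH = 1 };

// One recorded HAL call: what was written where, and at which simulated time.
struct WriteEvent {
    std::uint32_t time_msec;
    std::uint32_t addr;
    Level level;
};

// State of the workstation HAL, inspectable from a debugger or a test.
static std::vector<WriteEvent> g_events;
static std::uint32_t g_now_msec = 0;

// Workstation version of addr_write(): record the write, touch no hardware.
void addr_write(std::uint32_t addr, Level level) {
    g_events.push_back({ g_now_msec, addr, level });
}

// Workstation version of wait_msec(): advance simulated time, do not sleep.
void wait_msec(std::uint32_t msec) {
    g_now_msec += msec;
}

// Application logic under test: one on/off cycle of the blink loop.
void blink_once(std::uint32_t addr, std::uint32_t half_period_msec) {
    addr_write(addr, LEVEL_HIGH);
    wait_msec(half_period_msec);
    addr_write(addr, LEVEL_LOW);
    wait_msec(half_period_msec);
}
```

After one call to `blink_once(0xa0, 1000)`, `g_events` holds a HIGH write at 0 ms and a LOW write at 1000 ms, all without waiting two real seconds. A stand-in like this does not reproduce the timing behavior of the real hardware.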

Performance Scaling Isn’t Free

An important consideration along with the question of “will my code run?” is “how efficient will it be?”. This is especially relevant for code that involves a lot of input/output (IO) operations, or intense calculations or long-running loops. Theoretically there is no good reason why the same code should not run just as well on a 16 MHz AVR microcontroller as on a 1.5 GHz quad-core Cortex-A72 System-on-Chip system.

Practically, however, the implications go far beyond mere clock speed. Yes, the ARM system will obliterate the raw performance results of the AVR MCU, but unless one puts in the effort to use the additional three cores, they’ll just be sitting there, idly. Assuming the target platform has a compiler that supports the C++11 standard, one can use its built-in multithreading support (in the <thread> header), with a basic program that’d look like this:

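    #include <functional>   // std::ref
    #include <iostream>
    #include <thread>

    // Runs on the new thread and increments the caller's variable.
    void f0(int& n) {
        n += 1;
    }

    int main() {
        int n = 1;
        std::thread t0(f0, std::ref(n));   // pass n by reference
        t0.join();                         // wait for the thread to finish
        std::cout << "1 + 1 = " << n << std::endl;
    }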

As said, this code will work with any C++11-capable compiler that has the requisite STL functionality. Of course, the major gotcha here is that in order to have multithreading support like this, one needs to have a HAL and scheduler that implements such functionality. This is where the use of a real-time OS like FreeRTOS or ChibiOS can save you a lot of trouble, as they come with all of those features preinstalled.
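To actually put the extra cores to work, the load has to be divided explicitly. The following sketch splits a summation across as many threads as `std::thread::hardware_concurrency()` reports; the function names `sum_slice()` and `parallel_sum()` are invented for this example, and the same scheduler requirements apply as for the program above.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <numeric>
#include <thread>
#include <vector>

// Sums one slice of the data into its own result slot, so that no two
// threads ever write to the same variable.
void sum_slice(const std::vector<std::uint32_t>& data,
               std::size_t begin, std::size_t end, std::uint64_t& out) {
    out = std::accumulate(data.begin() + static_cast<std::ptrdiff_t>(begin),
                          data.begin() + static_cast<std::ptrdiff_t>(end),
                          std::uint64_t{0});
}

std::uint64_t parallel_sum(const std::vector<std::uint32_t>& data) {
    // hardware_concurrency() may return 0 when the core count is unknown.
    unsigned int workers = std::max(1u, std::thread::hardware_concurrency());
    std::vector<std::uint64_t> partial(workers, 0);
    std::vector<std::thread> threads;
    std::size_t chunk = (data.size() + workers - 1) / workers;

    for (unsigned int i = 0; i < workers; ++i) {
        std::size_t begin = std::min(data.size(), i * chunk);
        std::size_t end = std::min(data.size(), begin + chunk);
        threads.emplace_back(sum_slice, std::cref(data), begin, end,
                             std::ref(partial[i]));
    }
    for (std::thread& t : threads) { t.join(); }

    // Combine the per-thread results on the calling thread.
    return std::accumulate(partial.begin(), partial.end(), std::uint64_t{0});
}
```

On a single-core target this degrades to one worker thread, so the same source still builds and runs there, just without any speed-up.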

If you do not wish to cram an RTOS on an 8-bit MCU, then there’s always the option to use the RTOS API or the bare metal (custom) HAL mentioned before, depending on the target. It all depends on just how portable and scalable the code has to be.

Mind the Shuffle

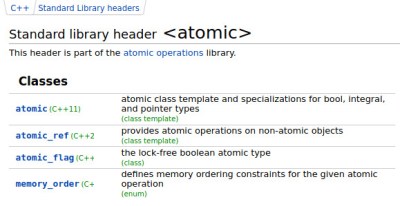

The aforementioned Cortex-A72 is an out-of-order design, which means that the instructions generated by the compiler get reshuffled on the fly by the processor. Within a single thread the result is the same as with in-order execution, but the order in which memory accesses become visible to other cores can differ from the order in the source code. The faster and more advanced the processor on which one executes the code, the greater the number of potential issues. Depending on the target platform’s support, one should use atomics and memory fences to constrain this reordering. For C++11 these can be found in the <atomic> header of the standard library.

This means adding instructions before and after the critical instructions so that, as seen from other threads, these instructions belong together and take effect as a single unit. This ensures that for example a 64-bit integer calculation on a 32-bit processor will work as well on a 64-bit processor, even though the former needs to do it in multiple steps. Without this protection, another thread might modify the original 64-bit integer partway through the operation, corrupting the result.

Though mutexes, spinlocks, rw-locks and kin were more commonly used in the past to handle such critical operations, the move over the past decades has been towards lock-free designs, which tend to be more efficient as they work directly with the processor and have very little overhead, unlike mutexes which require an additional state and set of operations to maintain.
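A minimal sketch of the lock-free approach, using only `std::atomic` from C++11, is shown below. The function name `count_in_parallel()` is invented for this example. Whether `std::atomic<std::uint64_t>` is truly lock-free depends on the target: on some 32-bit platforms the library may fall back to an internal lock, which can be checked with `is_lock_free()`.

```cpp
#include <atomic>
#include <cstdint>
#include <thread>
#include <vector>

// Increments a shared 64-bit counter from several threads without a mutex.
// fetch_add() is an atomic read-modify-write, so no increment is lost, even
// on targets where a 64-bit value does not fit in a single register.
std::uint64_t count_in_parallel(unsigned int thread_count,
                                std::uint64_t increments_per_thread) {
    std::atomic<std::uint64_t> counter(0);
    std::vector<std::thread> threads;

    for (unsigned int i = 0; i < thread_count; ++i) {
        threads.emplace_back([&counter, increments_per_thread]() {
            for (std::uint64_t n = 0; n < increments_per_thread; ++n) {
                // Relaxed ordering is enough here: only the final total
                // matters, not the order relative to other memory accesses.
                counter.fetch_add(1, std::memory_order_relaxed);
            }
        });
    }
    for (std::thread& t : threads) { t.join(); }

    // join() synchronizes with each thread, so every increment is visible.
    return counter.load();
}
```

With a plain `std::uint64_t` in place of the atomic, the same loop would be a data race and could lose increments.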

After HAL, the OS Abstraction Layer

One of the awesome things about standards is that one can have so many of them. This is also what happened with operating systems (OSes) over the decades, with each of them developing its own HAL, kernel ABI (for drivers) and application-facing API. For writing drivers, one can create a so-called glue layer that maps the driver’s business logic to the kernel’s ABI calls and vice versa. This is essentially what NDISwrapper does on Linux, when it allows WiFi chipset drivers that were originally written for the Windows NT kernel to be used under Linux.

For userland applications, the situation is similar. As each OS offers its own, unique API to program against, it means that one has to somehow wrap this in a glue layer to make it work across OSes. You can, of course, do this work yourself, creating a library that includes specific header files for the requested target OS when compiling the library or application, much as we saw at the beginning of this article.
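A do-it-yourself glue layer might start out like the sketch below, which offers one portable API and selects the OS-specific body at compile time. The function names `os_process_id()` and `os_sleep_msec()` are hypothetical; the calls underneath (`GetCurrentProcessId()` and `Sleep()` on Windows, `getpid()` and `nanosleep()` on POSIX systems) are the regular OS APIs. In a larger project each branch would more likely live in its own per-OS header or source file.

```cpp
#include <cstdint>

#if defined(_WIN32)
#include <windows.h>
#else
#include <time.h>
#include <unistd.h>
#endif

// Returns the ID of the current process through the native OS call.
std::uint32_t os_process_id() {
#if defined(_WIN32)
    return static_cast<std::uint32_t>(GetCurrentProcessId());
#else
    return static_cast<std::uint32_t>(getpid());
#endif
}

// Suspends the calling thread for roughly the given number of milliseconds.
void os_sleep_msec(std::uint32_t msec) {
#if defined(_WIN32)
    Sleep(msec);
#else
    struct timespec ts;
    ts.tv_sec = static_cast<time_t>(msec / 1000);
    ts.tv_nsec = static_cast<long>(msec % 1000) * 1000000L;
    nanosleep(&ts, nullptr);
#endif
}
```

Application code calls only the `os_` functions, so supporting another OS means adding one more branch rather than touching the callers.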

It is, however, easier to use one of the myriad existing libraries that provide such functionality. Here GTK+, wxWidgets and Qt have been long-time favorites, with POCO being quite popular for when no graphical user interface is needed.

Demonstrating Extreme Portability

Depending on the project, one could get away with running the exact same code on everything from an ESP8266 or STM32 MCU, all the way up to a high-end AMD PC, with nothing more than a recompile required. This is a strong focus of some of my own projects, with NymphCast being the most prominent in that regard. Here the client-side controller uses a remote procedure call (RPC) library: NymphRPC.

Since NymphRPC is written to use the POCO libraries, the former can run on any platform that POCO supports, which is just about any embedded, desktop, or server OS. Unfortunately, POCO doesn’t as of yet support FreeRTOS. However, FreeRTOS supports all the usual multithreading and networking APIs that NymphRPC needs to work, allowing for a FreeRTOS port to be written which maps those APIs using a FreeRTOS-specific glue layer in POCO.

After this, a simple recompile is all that’s needed to make the NymphCast client software (and NymphRPC) run on any platform that is supported by FreeRTOS, allowing one to control a NymphCast server from anything from a PC to a smartphone to an ESP8266 or similar network-enabled MCU, without changes to the core logic.

The Future is Bright (on the CLI)

Though the C++ standard unfortunately missed out on adding networking support in the C++20 standard, we may still see it in C++23. With such functionality directly in the language’s standard library, having C++23 supporting compilers for the target platforms would mean that one can take the same code and compile it for FreeRTOS, Windows, Linux, BSD, MacOS, VxWorks, QNX and so on. All without requiring an additional library.

Of course, the one big exception here is with GUI programming. Networking for example has always stuck fairly close to Berkeley-style sockets, and multithreading is fairly consistent across implementations as well. But the graphical user interfaces across OSes and even between individual window managers for the X Server and Wayland on Linux and BSD are very different and very complicated.

Most cross-platform GUI libraries do not bother to even use those APIs for that reason, but instead just create a simple window and render their own widgets on top of that, sometimes approximating the native UI’s look and feel. Also popular is to abuse something like HTML and use that to approximate a GUI, with or without adding one’s own HTML rendering engine and JavaScript runtime.

Clearly, GUIs are still the Final Frontier when it comes to cross-platform development. For everything else, we have come a long way.

java and python is supposed to be universal

Windows drive letters have ruined many crossplatfirm apps. The stupid crap in windows has cost more human life than some wars

Blame it on CP/M. Its drive letters were implemented in QDOS, which Microsoft bought and gave a makeover for MS-DOS, and drive letters have stayed forever a part of the PC landscape. CP/M had the ability to reassign drive letters, which Microsoft didn’t have in MS-DOS until version 3.1 added the SUBST command in 1985.

And it doesn’t even work on Windows. If windows detects your card-reader during installation you end up with H:\windows\. Especially software in the $1k..$10k range and MS gold partners, will assume C:\ instead of looking up the windows drive/folder name from the registry, messing up transferring files or settings between computers.

“Crossplatfirm” on windows means: if the current version is less than 1 year old, it only works on the previous version of the os, else it works on the current version, as long as you don’t install any OS updates or other applications, and having it working on the next version of the OS as well is like winning the lottery.

We all love histrionics on Hackaday!

You can’t take for granted that you will always be able to use Java & Python for every case. Sometimes you need access to underlying hardware, sometimes performance, some libraries are C/C++ only and sometimes your current data structure is a RAM hog & you need pointers to improve it.

That aside, Java & Python assume that you have their respective runtime preinstalled in target environment. Alternatively, you bundle multiple OS & CPU-Arch respective versions of Java or Python runtime which defeats the purpose of being truly portable.

Moreover, as of today, many Python applications contain a lot of OS specific code or use OS limited modules.

Languages themselves are good if you feel comfortable with them but IMO portability is no longer a selling point.

I really enjoyed this article!

Makes me want to Play around with an nice ARM devboard

For your information https://www.crowdsupply.com/piconomix/px-her0-board/

And make it run on Android as well … which is currently horrendous to program for. Java cretinism is strong there.

Partly that is due to Android being stuck on a Java 6 implementation. Java 14 is due out soon and is much nicer to deal with.

There’s also the stubborn “Not gonna, don’t wanna!” attitude of everyone who has owned JAVA since the launch of Android about producing a JAVA Runtime Environment for Android. There was one outfit that said they had, and were going to make it available to purchase, but AFAIK they never released anything.

Is Oracle still being an ass?

Is today a day ending in “y”?

ALL of my days end in “Why?”

On the bright side, they are 5.03% less ass today.

Kotlin is the way forward on Android. Not sure if that helps make it not horrendous to program.

I hear folks talking up dart/flutter as the next big thing in write once run everywhere. I have yet to take the plunge and try it, but it’ll eventually happen.

Audacity is a great example of a multi platform program as is the Eclipse IDE.

I used eclipse all day everyday. The one issue I have with java apps is the resource requirements. Often my PC slows as eclipse or a common MQTT app will merrily eat a half or a whole gigabyte of memory. By comparison the C based MQTT development tool I made out of frustration uses 80KB. Java is good for a tool here or there, but any application designed to scale, really should be compiled.

You know, that SIM card in your phone runs Java? It’s a stripped down, embedded version, but it works with very limited resources and offers some apps that were useful before the smartphones were invented…

The problem is caused by bloat. Java used to be light and even fast. Now it’s heavy, bloated like old corpse left to rot, and slow because bad programmers work on it and no one removes the spaghetti code…

Also every interpreted language will be slower than compiled language – it’s the nature of them. C became such a success because in theory it should be able to be compiled for any platform. Often it isn’t…

I will not tolerate such misinformation from someone who stopped looking at Java 15 years ago. The algorithms are some of the fastest I’ve ever seen, because they actually do their research. The algorithms also get upgraded often, which gives your programs a free boost when you update Java, without having to recompile your code. For example, the sorting algorithm kept changing until they settled on one that uses Insertion-sort for small numbers and Tim-sort for larger numbers (also used by Python). Look at the lock-free concurrent collection implementations if you want to see state of the art algorithms straight from modern research.

The only thing that’s rotting and still somehow indispensable, is the GUI library SWING. It’s built with the proper design patterns (a long shot from your claimed spaghetti) but just a behemoth to maintain because of architectural design choices that can’t be abandoned, because ‘backwards compatibility’. sigh.

I don’t know why you mention interpreted languages, because Java hasn’t been that way since 1.5, or maybe 1.4. Yes, the JIT compiler can interpret code, but it keeps tally of how many times per second a method is executed, and soon switches to a higher level of compilation. Inner loops will soon end up compiled and even inlined inside calling methods, even if in other physical classes. The JIT compiler is so efficient that it outperformed on a bit-vector implementation, compared to C compiled on the same machine.

Thank you

So why are all the web browsers either completely ending support for JAVA or making it a PITA to enable? Why won’t anyone write a JRE for Android so sysadmins can use a tablet or phone to remotely manage equipment that has a JAVA interface over HTTP?

Mozilla, Google, and friends say there are “security issues” with JAVA. Why is Oracle NOT aggressively addressing those concerns in order to keep JAVA in widespread use on the web?

Same story with Flash. When Apple said “We don’t like Flash. Kill it.” Adobe kowtowed so fast it got a skull fracture. Had I been in charge at Adobe I would’ve replied with a “Screw you guys! We’re making Flash better and better! More Flash content online than ever before, if you don’t allow Flash on the iPhone, you’ll lose customers. We’re not going to allow you, Mozilla, Google or anyone else tell us to kill one of our babies!”

Emacs is the original cross platform app. In 1980 there were ports to ITS, Multics, and TOPS20. The Unix Port didn’t happen until the late 80s, then there was the MSDOS Port called epsilon, then there was a Mac OS9 port, then the Unix Port picked up gnome support, then there was a native OSX port… Still actively maintained today.

And is a pretty good example of a write-once, run-anywhere cross platform language (Emacs lisp). I still use Emacs as my primary editor for most things, unless forced to Eclipse (I use several platforms where the tool chain is tied to Eclipse), and still use some of the code I wrote in the 1980’s for special purposes, like arbitrary shape cut-and-paste on text– really useful when working with state tables for embedded applications. Tools that are not practical in Eclipse are easy in Emacs.

Note that I would NEVER push a new programmer to Emacs (or to vi– never much did like vi), though some will find it on their own.

“This would allow the same code to be used across the wildly different system architectures of that era and subsequent decades, requiring only a translator unit (compiler) that would transform the source code into the machine instructions for the target architecture.”

The adventures of gaming and VMs.

Also the adventures of IBM mainframes, the original virtual machines. Run those old COBOL punch card programs on MVS, and a Linux kernel, at the same time, even migrate VMs across hardware without pausing. IBM has been doing this stuff for a long time.

“Clearly, GUIs are still the Final Frontier when it comes to cross-platform development. For everything else, we have come a long way.”

Underlying hardware still evolving.

My mouse and keyboard are 20 years old. The GUI hardware on today’s computers is totally stagnant, its everything else that is evolving. Power supplies are new super efficient designs, CPUs have lots more cores, RAM keeps getting faster and denser, but the GUI gets older and older, just like Matthew McConaughey.

Far as many are concerned, Microsoft pretty much nailed it on the GUI right around Windows 95 OSR 2 with the IE6 additions. Most of the stuff added since has been annoying, in the way, ugly, pointless etc. especially the scrolling menu they’ve been pushing since Windows Me. Someone at Microsoft is effing in love with the concept. Out in userland it’s despised because it’s *slow*.

Anything on the “classic” Start Menu that debuted in 1995 can be accessed with just two clicks, as long as you don’t spazz all over the place with your pointing device.

The problem with cross platform is that the GUI isn’t native to any platforms and the implementation sucks. Toolkit programmers aren’t GUI designers.

For any user friendly app, the GUI should be consistent with the OS environment. The GUI should be written first from the user point of view and the rest of the code is built around it. It should not be written as an after thought as a front end as the user is now forced by the flow of the backend code not the other way around. This is why such programs are painful to use.

This is 100% backwards, write the back end first and put the GUI onto it. This is the only way to make a portable app. You don’t even begin to understand how software development really happens in the real world. If you start coding before you work out all of the workflow then you are REALLY doing it wrong.

> If you start coding before you work out all of the workflow then you are REALLY doing it wrong.

It looks like you are trying to run a project using the waterfall model! Would you like some assistance?

Clippy? Is that you? ;)

If you want to wait until version 57 to have a reliable program, that’s up to you. Where I work, we get it right the first time.

You call it “waterfall,” I call it “business planning” and predicting the outcome of the resources consumed.

The context where “agile” is useful is when you’re doing web consulting for low-information clients. That does not mean that it can replace traditional engineering practices for software that is intended to perform a specific task.

Since we’re talking about microcontrollers and C++, I’ll drop a link to Miosix http://miosix.org

It’s an OS for 32bit (ARM Cortex M, mostly) microcontrollers that comes with C++ standard library integration out of the box. Right now C++11 threads are being added, but posix threads have been supported for quite a long time as a fallback.

The ultimate portage gui (and as a bonus, portable) is a remote desktop. Straight into a desktop inside a microcontroller. Somewhere on the internet someone has done this years ago, I just can’t find it now.

On a simpler note, I like to have my microcontrollers talk via a serial terminal as if it is a ssh session in a Linux machine. Complete with username and password, to unlock access to a virtual file structure. Instructions etc. are called by running the virtual files. Logs are cleared by deleting the log file. The files can have parameters, eg – help, just like real applications. To view the “menu” of options, just use ls. Example, cd to the calibration folder to set system calibrations if you logged in as root. Using this scheme it is easy to advise someone over the phone on a system you last touched a decade ago. An added bonus is that the setting up of a system can all be done in a text file which is sent to the system as a serial stream. This is because CLI input is simple and repeatable.

Portage GUI? There’s an app for carrying boats past non-navigable parts of rivers? ;) I used to be a DOS power user. I knew all the command line stuff. When Windows came along I was calling it the “commando line”. (Not a reference to going without undershorts.) I put MenuWorks on the PC for my parents but when I used the computer the first thing I did was quit that so I could directly launch DOS games.

Just use Java!

Java takes care of the hard stuff, like memory allocation, pointers, etc, so you can concentrate on code!

There is no performance penalty, java once properly profiled, gets FASTER on each iteration of a function, can’t say that for C or ASSembly.

All you need to program java is some basic hardware, just a quad core [min], at least 16gb of ram, and just a few GB of disk space for the helper libraries.

“Hello World” can be compiled and run in as little as a few minutes! try doing that with C or ASSembly. And it will run FASTER on each iteration!

Why bother with the hard stuff, simply let Java do it for you!

Just believe the ad copy “Over 16 million devices use java… the rest of the world figured out how to uninstall it.”

Good grief cut it out. Java runs the planet whether you like it or not. It is calculating your utility bill and your paycheck , keeping track of your groceries, filling your online orders, and deciding your credit score. All you are doing is demonstrating your ignorance for all to see.

Meanwhile Apple and the web browser gang are trying to kill JAVA by either completely not supporting it anymore or by disabling it by default and requiring the user to give it permission to run every time.

Web browsers are killing applets not java. That was a specific use of Java back in the day and that icky hot mess called javascript has replaced most of the tasks that Java applets and even Flash were used for. Yes it was really dumb when people used java to make hover buttons way back when but there were some very handy applets also.

Apple used to include Java standard on Mac OS/X in the hope that they would gain applications. You can still get Java for OS/X today and many people install it.

You are living in a tiny little web browser world. Java is used all over the place in the enterprise. I gave the example of the Eclipse IDE as a multi-platform application written in Java; the game Minecraft is also written in Java, or was. Oh and Facebook and Twitter use it as well on the server side.

And you really do not know what you are talking about.

Again you have no clue, Apple is 100% behind running java as a server process. I can tell you for a fact that java applications run just fine on macOS, just like they do on linux and Windows. Running java in the browser was always a bad idea, and you’re arguing with a straw man.

JAVA in web browsers is essential because of all the network equipment (especially print servers) that use JAVA with an HTTP interface, through a web browser. No JAVA in the browser? No configuring the thing remotely, unless you want to Telnet or TFTP to it.

Java is indeed great but not on STM32M0, if you want to run “everywhere” you must support these crude ARM CPUs. They are super cheap and super popular.

MCU’s: why nobody said platformio ? i use mostly same code on Arduino AVR, ESP8266 and ESP32 , creating common libraries and only leave specific hardware instructions (wifi, ble, …) using #IFDEF ESP32 … with a HAL layer library , mixing C, C++ even RTos on same project

> The thing is that even when one writes the code for just a single microcontroller, there is a lot to be said for being able to take the code and run it on a HAL for one’s workstation PC in order to use regular debugging and code analysis tools, such as the Valgrind suite.

I’m slightly bothered by the fact that no-one mentioned unit tests. By providing a mock HAL target one can test every bit flip, every interrupt, every timeout etc.

Until the interrupts show up in a different order on the real hardware, then you will have hidden race conditions in your code that the tests will not catch. There is no substitute for real hardware because you really can’t characterize it well enough to simulate it.

Um.. what was the “done right” part, did I miss it? Usually the words done right imply there’s going to be some reason put forth why one particular approach is better than the others. All this was, was a survey of attempts at doing abstraction across different levels of architectural sophistication.

Also not addressed is the fundamental coding question: do you put all the system dependent stuff in one file and make all the other source platform-independent, or do you use #ifdefs in your mainline code and put the system dependent stuff in each source file?

There are pros and cons to both approaches depending on many factors including the maturity of the software and the tastes of the folks on your team.

And look at the AS400 (iSeries, IBM i). Dual abstraction AKA horizontal and vertical microcode, once you compiled your source, that binary could be used on *any* of the AS400 range, from desktop baby models all the way up to multi-rack almost-mainframes.

on Intel we have the exact opposite situation where you must carefully choose your compiler options to extract maximum performance for the given target processor. If you want your program to run optimally on both a resource-constrained laptop and a big NUMA server then you must provide two different executables.

“This ensures that for example a 64-bit integer calculation on a 32-bit processor will work as well on a 64-bit processor, even though the former needs to do it in multiple steps. ”

Is it really like this on ARM? Isn’t it that out of order execution should never change the outcome of the code? That’s why there is register renaming for example. Multi-threading on the other hand is different, you need to make some operations “atomic” sometimes. But as soon as you have just single thread running, then even during out of order execution you should not need fencing. Or do you?

To an extent, HAL concepts are a fallacy. Hardware isn’t abstract – it’s concrete by definition and ‘abstracting’ it usually leaves you with dumbed-down implementations that do everything you need apart from the specific functionality you need in any given project.

What’s worse is that they replace direct I/O access with function calls that achieve less than the direct I/O did. For example, let’s say we implement a HAL based originally on an 8-bit PIC. Here, you get a port register which doubles as a read and write port. Traditional PIC code would be able to read or write to the port in a deterministic 4 clock cycles (because most instructions take 4 cycles), but you can also test and compare based on a single I/O bit. On an early PIC, some bits were dedicated to output while others could function as both input and output via TRIS registers (which in earlier implementations was an instruction); and similarly pull-ups only applied to a limited group of I/O bits (which means that Pullups aren’t normally seen as part of the GPIOs, but a separate concept). Later PICs had LAT registers to separate input from output ports and eliminate Read-Modify-Write issues.

Trying to abstract this without knowledge of later PIC MCUs, still less other embedded architectures would lead to either a very restricted abstraction or a loss of performance. So, for example if we move to 8-bit AVRs we find we have support for separate input and output ports; support for pull-ups on any GPIOs (by setting the output port when the data direction is set to input) and support for toggling an output port (by writing to an input port when it’s set as an output).

ARM processors have a substantially different architecture whereby each GPIO port is mapped to a large number of registers over a significant address range in a 32-bit address space. The GPIOs are usually packed into groups of 16; they support up to 16 different modes per port (where disconnect, output, strong output, input, pull-up, pull-down); usually every port can support interrupt on pin change again with multiple modes (fall, rise, toggle), but these interrupts are often mapped per pin across all GPIO ports. This would contrast with an AVR which might have one or two dedicated interrupt pins that support rise, fall or toggle and on later AVRs interrupt pins mapped to a given GPIO port; and whereby on a PIC, there would be only a couple of dedicated interrupt pins.

Architecture affects the usage of GPIOs at every level. Thus, ARM architectures are suited to software where a GPIO port becomes a pointer to a structure whose member offsets address its registers, while other architectures are suited to software where ports are accessed by absolute address.
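To make the two styles concrete, here is a minimal sketch that runs on a PC. Everything in it is an assumption for illustration: the `gpio_regs_t` layout, the `GPIOA`/`GPIOB` and `PORTB_REG` names, and the index 0x25 are hypothetical, and ordinary memory stands in for the real registers.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical register block for one 16-pin GPIO port (ARM style).
   Field names and offsets are illustrative, not from any datasheet. */
typedef struct {
    volatile uint32_t mode;  /* offset 0x00: pin mode bits */
    volatile uint32_t in;    /* offset 0x04: input data    */
    volatile uint32_t out;   /* offset 0x08: output data   */
} gpio_regs_t;

/* On real hardware these would be fixed addresses; ordinary memory
   stands in for the registers so the sketch can run on a PC. */
static gpio_regs_t fake_ports[2];
static volatile uint8_t fake_io_space[64];

#define GPIOA (&fake_ports[0])
#define GPIOB (&fake_ports[1])

/* 8-bit style: each register is a name for one absolute location.
   The index 0x25 is an arbitrary stand-in for a real I/O address. */
#define PORTB_REG (fake_io_space[0x25])

/* Struct-pointer style: one function serves every port. */
void gpio_set_pin(gpio_regs_t *port, unsigned pin)
{
    assert(pin < 16);
    port->out |= (uint32_t)1u << pin;
}

/* Absolute style: the port is baked into the code at compile time. */
void portb_set_pin(unsigned pin)
{
    assert(pin < 8);
    PORTB_REG |= (uint8_t)(1u << pin);
}
```

With the struct-pointer style, `gpio_set_pin(GPIOA, 3)` and `gpio_set_pin(GPIOB, 15)` share one function body. The absolute style needs a separate function (or macro) per port, but the compiler knows the address up front, which is what lets small 8-bit parts reduce such accesses to single instructions.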

And here we are only considering GPIOs. When it comes to implementations of higher-level functionality there is often even greater variation. For example, how do you properly abstract a timer when some platforms don’t support free-running timers or don’t support drift-free compare timing? Or where some expect to have to synchronise registers due to low-power considerations? Or some support event-driven relationships between the timer, its related GPIOs and DMA?

What this all points to (and I could go on) is that hardware abstraction, the HAL, is the wrong kind of abstraction. What we should be doing is what software engineering was advocating decades ago: abstract only the higher-level functional modules you need, and port those properly to another architecture if that changes. Then the abstractions you truly need for a given project are fit for the job and as efficient as the hardware will allow.

But HAL, it’s an oxymoron.

That’s an interesting abstraction, but it is missing a layer to abstract away the GPIOs to their actual function. Something like lookup_gpio("blink_led_pin")?
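One way such a lookup layer might look is sketched below. This is only an assumption about what the commenter has in mind: the table, its entries, and the two-argument signature of `lookup_gpio()` are hypothetical. The address 0xa0 is taken from the article's blink example; the second entry is made up.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical per-board table mapping a functional name to a GPIO
   address. Each target platform would supply its own table. */
typedef struct {
    const char *name;
    unsigned    addr;
} gpio_entry_t;

static const gpio_entry_t board_gpio_map[] = {
    { "blink_led_pin",  0xa0 },  /* address from the article's example */
    { "status_led_pin", 0xa1 },  /* made-up second entry */
};

/* Returns 0 and stores the address on success, -1 if the name is unknown. */
int lookup_gpio(const char *name, unsigned *addr_out)
{
    assert(name != NULL && addr_out != NULL);
    size_t n = sizeof board_gpio_map / sizeof board_gpio_map[0];
    for (size_t i = 0; i < n; i++) {
        if (strcmp(board_gpio_map[i].name, name) == 0) {
            *addr_out = board_gpio_map[i].addr;
            return 0;
        }
    }
    return -1;
}
```

The application would resolve the name once at startup and then pass the result to the article's `addr_write()`, so the string comparison stays out of the blink loop.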

I mostly program in C and Python, for their solid cross platform support. Yeah, I know, some out there are now gawking and saying, “But C isn’t cross platform.” Wrong. Many C libraries drop down into platform specific stuff, but the standard libraries are specifically designed to be cross platform. If you use platform specific libraries, Java and Python aren’t cross platform either, but that doesn’t make the languages themselves platform specific. I’ve been writing cross platform C for over a decade. It does help that I use a sane cross platform tool chain (GCC and MinGW), so that even most of the non-standard GCC libraries are cross platform.

Writing cross platform code for embedded systems, however, is another beast entirely. Recently, I’ve been sticking to platforms that have CircuitPython support. Adafruit has done an awesome job of producing a HAL for CircuitPython that makes writing cross platform code trivially easy, but even then, it’s literally impossible to write 100% cross platform code for more complex applications, because different hardware has different capabilities. How do you abstract the RP2040’s PIO state machines? The answer is you don’t. Maybe for applications like driving a VGA monitor or composite TV you can, but most applications require more application specific use.

For mass market products though, CircuitPython isn’t even feasible, due to hardware costs. A device with a HAL (say the RP2040) might cost $1+ for a microcontroller that can handle the space and CPU power the HAL takes (the RP2040 doesn’t have built-in flash like the MSP430, so don’t forget to add that cost), in an application that could use a 50¢ MSP430 chip with optimized C. Over several million sales, you would lose over a million dollars to the costs associated with the HAL, when under a month of work from an embedded systems developer porting the code would have cost you less than $10,000 in labor. Unless you end up needing to port the code to more than 100 different chips, the HAL is a huge waste of money, and that’s not even counting the development cost of the HAL itself for every new architecture.
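The arithmetic behind those figures can be sketched as below. The inputs are assumptions: "several million" is taken as 2 million units, and the per-unit premium as 50¢ (the gap between a $1 part and a 50¢ part, ignoring the external flash). The function names are made up for this example.

```c
#include <assert.h>

/* Extra hardware spend caused by the HAL, in cents, over a production run. */
long long hal_hardware_cost_cents(long long units, long long extra_cents_per_unit)
{
    assert(units >= 0 && extra_cents_per_unit >= 0);
    return units * extra_cents_per_unit;
}

/* Number of manual ports (at a fixed cost each) the same money would buy. */
long long break_even_ports(long long hal_cost_cents, long long port_cost_cents)
{
    assert(port_cost_cents > 0);
    return hal_cost_cents / port_cost_cents;
}
```

Under these assumptions, `hal_hardware_cost_cents(2000000, 50)` gives 100,000,000 cents, or $1,000,000, and `break_even_ports(100000000, 1000000)` gives 100 ports at $10,000 each. Those results match the "over a million dollars" and "more than 100 different chips" figures above.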

So for something like PC or mobile apps, running under an OS that already provides a HAL, the performance cost of a little more abstraction to make cross platform programming trivial is completely acceptable. I’ve been doing it in C for over a decade without any problems. For mass market embedded devices though, cross platform code is just too expensive. The cost of paying devs to port platform specific code is typically significantly lower than the cost of faster hardware with more memory necessary to accommodate the HAL.

All of this reveals a serious problem with modern developers: premature time optimization. Reusable software sounds great on the surface (though it rarely turns out as good as it sounds, in my experience), and to software developers who aren’t as familiar with the business side of things, like the cost of parts, profit margins, and economies of scale, it’s easy to see reusable software as a win-win. The fact, however, is that reusable software abstracts away useful features of devices, and it generally comes with a huge performance cost. On systems without very limited resources, this doesn’t seem like a problem (but it generally is, because your software is never the only thing running on systems like that), but on embedded systems, where CPU power is measured in mere MHz and memory is often in the KB, reusable software becomes incredibly expensive. In mass market embedded applications, the cost of dev time for porting code to a new platform is trivial compared to the enormous cost of using more expensive hardware that has enough resources to handle an elaborate HAL (that itself requires significant dev hours to build).