Microsoft’s new Xbox Series X, formerly known as Project Scarlett, is slated for release in the holiday period of 2020. Like any new console release, it promises better graphics, more immersive gameplay, and all manner of other superlatives in the press releases. In a sharp change from previous generations, however, suddenly everybody is talking about FLOPS. Let’s dive in and explore what this means, and what bearing it has on performance.

What is a FLOP anyway?

Typically, when we talk about “flops” with regard to a new console launch, we’re referring to something like the Sega Saturn, whose sales fell drastically below expectations. In this case, we’re instead talking about FLOPS, or “floating point operations per second”. This measures the number of calculations a CPU or GPU can perform per second with floating point numbers. These days, it’s typical to quote FP32 performance, meaning calculations with 32-bit (“single precision”) floats. Scientific and other applications may be more concerned with FP64, or “double precision” performance, which on most consumer hardware yields a much lower figure.
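
To make the FP32/FP64 distinction concrete, here is a small Python sketch. Python’s own floats are 64-bit doubles, and the standard `struct` module can squeeze a value into 32 bits and back, which shows how much precision single precision gives up. The helper name `to_fp32` is just an illustrative choice.

```python
import struct

def to_fp32(x: float) -> float:
    """Round a Python float (an FP64 double) to the nearest FP32 value and back."""
    return struct.unpack("f", struct.pack("f", x))[0]

for value in (0.1, 16_777_217.0):   # 16,777,217 = 2**24 + 1
    single = to_fp32(value)
    # Print both representations and the rounding error introduced by FP32.
    print(f"FP64: {value:.17g}   FP32: {single:.17g}   error: {abs(value - single):.3g}")
```

The second value is the classic example: 2^24 + 1 is the first integer a 32-bit float cannot hold exactly, so it rounds to 16,777,216.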

The number of FLOPS a given processor can achieve is a way of comparing performance between different hardware. While software optimisations and different workloads mean that it’s not a perfect guide to how real-world applications perform, it’s a useful back-of-the-envelope number for comparison’s sake.

How Does The New Rig Stack Up?

Microsoft states that the GPU in the new Xbox Series X, based on AMD’s new RDNA 2 architecture, is capable of delivering 12 teraflops. Notably, the announcement quotes only the GPU’s performance. Over the last few decades, GPUs have come to far exceed CPUs in raw floating point throughput, which is why the comparison focuses on them.

For a consumer device that lives under a television, this is a monumental figure. In its time, the PlayStation 3 represented a huge advance in the amount of processing power tucked inside an affordable game console. Back then, the raw grunt of the PS3, combined with its ability to run Linux, led to a number of supercomputing clusters being built around the world. The biggest of these, the Condor Cluster, used 1,760 consoles and was operated by the US Air Force for surveillance tasks. It was capable of 500 teraflops; similar performance today could likely be had from well under 100 Series X consoles. Unfortunately, Microsoft have announced no plans to allow users to run custom operating systems on the platform.
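
The “well under 100” estimate is simple division. The sketch below takes both headline numbers at face value, which makes it only a rough comparison, since peak figures for different machines are rarely measured on the same basis.

```python
import math

CONDOR_TFLOPS = 500     # Condor Cluster figure quoted above
SERIES_X_TFLOPS = 12    # Microsoft's headline GPU figure

# Round up: a fraction of a console is no use.
consoles_needed = math.ceil(CONDOR_TFLOPS / SERIES_X_TFLOPS)
print(f"Series X consoles needed to match Condor: {consoles_needed}")
```

This comes out at 42 consoles, against the 1,760 PS3s in the original cluster.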

Comparing the Series X to contemporary computer hardware, its performance is on par with NVIDIA’s current flagship GPU, the GeForce RTX 2080 Ti, which delivers 13.45 teraflops of 32-bit floating point math. Given that PCs can run dual-GPU systems, and that the new Xbox won’t be released until later this year, PCs will retain the performance lead, as expected.

Looking into the supercomputer realm is a fun exercise. Way back in June 2000, ASCI Red held the #1 spot on the TOP500 list of the world’s most powerful supercomputers. On paper, the Xbox Series X should easily best it, and if you traveled back in time with one, you’d steal the crown. By the next edition of the list, in November 2000, ASCI Red had been beaten by its newer counterpart, ASCI White, with a real performance of 4.938 teraflops and a theoretical peak of 12.288 teraflops. The Xbox Series X would still have a chance at holding the title until June 2002, when NEC’s Earth Simulator came online, boasting over 35 teraflops in real-world tests. ASCI Red itself drew 850 kW in operation, while Earth Simulator used a monumental 3200 kW. It’s nice to know the Series X will sip only around 300 W in use. In twenty years, we’ve brought the performance of the world’s fastest supercomputer into the average living room, for the most performant game of Fortnite possible.
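
Dividing throughput by power draw makes the efficiency gap explicit. This sketch uses only the figures in the paragraph above (about 35 teraflops at 3200 kW for Earth Simulator, 12 teraflops at roughly 300 W for the Series X). Treat the result as an upper bound on the real gain: the Earth Simulator number is a measured double-precision result, while the Series X number is a single-precision theoretical peak.

```python
def gflops_per_watt(tflops: float, watts: float) -> float:
    """Convert a teraflops figure and a power draw in watts into GFLOPS per watt."""
    return tflops * 1_000 / watts

earth_simulator = gflops_per_watt(35, 3_200_000)  # ~35 TFLOPS at 3200 kW
series_x = gflops_per_watt(12, 300)               # 12 TFLOPS at ~300 W

print(f"Earth Simulator: {earth_simulator:.4f} GFLOPS/W")
print(f"Xbox Series X:   {series_x:.1f} GFLOPS/W")
print(f"Ratio:           ~{series_x / earth_simulator:,.0f}x")
```

On these numbers the console does a little over 3,600 times more floating point work per watt.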

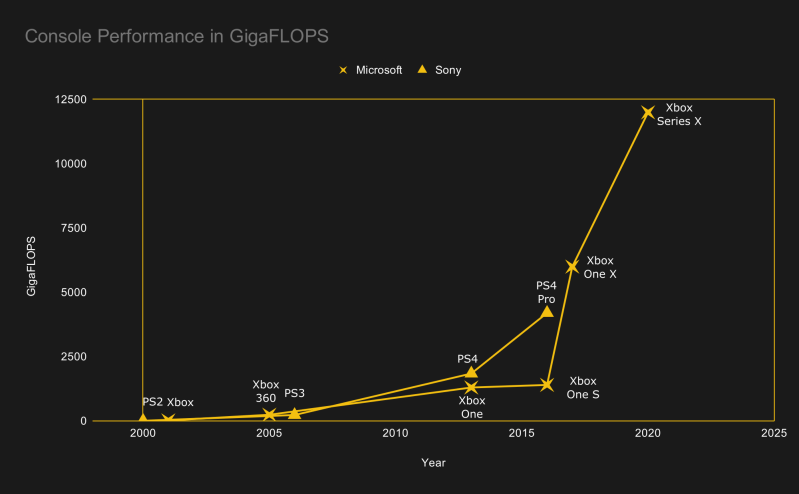

If we look at older consoles, the gap is vast. The Xbox 360 put out just 240 gigaflops, while the original Xbox made do with 20 gigaflops. Going back a further generation, the PlayStation 1 and Nintendo 64 managed somewhere on the order of just 100 megaflops. Advances in hardware have come thick and fast, and the roughly half-decade lifespan of each console means there’s a big jump between generations.
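
Put as ratios, the jumps look like this. The figures are the approximate ones quoted above; note that the list skips the Xbox One generation between the Xbox 360 and the Series X, so the last step spans two generations. `step_ratios` is a hypothetical helper name.

```python
# Approximate peak figures quoted in the article, in FLOPS.
CONSOLES = [
    ("PlayStation 1 / Nintendo 64", 100e6),
    ("Original Xbox", 20e9),
    ("Xbox 360", 240e9),
    ("Xbox Series X", 12e12),
]

def step_ratios(machines):
    """Return (from, to, speed-up) for each consecutive pair in the list."""
    return [
        (prev_name, name, flops / prev_flops)
        for (prev_name, prev_flops), (name, flops) in zip(machines, machines[1:])
    ]

for prev_name, name, ratio in step_ratios(CONSOLES):
    print(f"{prev_name} -> {name}: ~{ratio:,.0f}x")

overall = CONSOLES[-1][1] / CONSOLES[0][1]
print(f"Overall: ~{overall:,.0f}x")
```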

Going back further is hardly worth considering, as the performance is so glacial. Prior to the PlayStation 1, floating point units were a luxury, and 32-bit architectures were only just getting started. However, some tests indicate a Commodore 64 is capable of somewhere in the realm of 400 FLOPS per second (in BASIC, with no FPU). Somewhere between 12.5 and 17 million C64s were sold, making it the best-selling computer in history. Add them all up, using the higher figure, and you get 6.8 gigaflops: over an order of magnitude less than a single Series X. Obviously, there’s no real way to achieve this in practice, but it gives a rough idea of just how far we’ve come!
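
The C64 back-of-the-envelope sum is easy to reproduce. The sketch below uses the article’s 400 FLOPS per machine and both ends of the sales estimate. Worked through, the gap is actually a little over three orders of magnitude (roughly 1,760x at 17 million units and 2,400x at 12.5 million), so “over an order of magnitude” is, if anything, an understatement. `c64_fleet` is a hypothetical helper name.

```python
import math

C64_FLOPS = 400                 # rough BASIC figure quoted in the article
SERIES_X_FLOPS = 12e12          # Microsoft's 12 teraflop GPU figure

def c64_fleet(units_sold: float) -> tuple[float, float]:
    """Return (combined FLOPS of every C64 sold, Series X speed-up over that)."""
    total = C64_FLOPS * units_sold
    return total, SERIES_X_FLOPS / total

for units in (12.5e6, 17e6):    # low and high sales estimates
    total, ratio = c64_fleet(units)
    print(f"{units / 1e6:.1f}M C64s: {total / 1e9:.1f} GFLOPS; "
          f"Series X ~{ratio:,.0f}x faster "
          f"(~{math.log10(ratio):.2f} orders of magnitude)")
```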

What It All Means

Fundamentally, the Xbox Series X continues the roughly exponential growth in computing power in the console market. This will enable ever prettier gaming experiences, with real-time ray tracing becoming a possibility, along with higher framerates and resolutions.

With the availability of purpose-built, high-end hardware in the PC space, it’s unlikely we’ll see the Series X used in other realms, which is a shame because Linux on PlayStation 3 was pretty excellent. Despite the similarities to PC architectures, both Microsoft and Sony have been resolute in keeping their platforms locked down, stopping users from running their own code or operating systems. Regardless, it’s impressive to see the computing power that’s now brought to bear in our very own living rooms. Game on!

In Dutch the word ‘flop’ means failure… I suppose you can’t have enough flops…

Same in German…

Microsoft’s modus operandi has always been to claim bigger/better as soon as a competitor’s specifications come out. They always try to get consumers to put off buying a competitor’s product that is about to reach production or the market, while they are still finalizing their own design.

Microsoft has created vaporware production as a business model. If they could only monetize that!!

They learned this from IBM! It’s called sowing “FUD” or Fear, Uncertainty and Doubt.

Same in English

Also in English ;-)

I wonder how many seconds it will last before it completely flops over.

Lol right

yeah, 2 raised to the power of 32

In English too, but this isn’t “flops”, it is “FLOPS”, which is an acronym, in case you didn’t know that. It stands for FLoating point OPerations per Second.

Now you know.

“Regardless, it’s impressive to see the computing power that’s now brought to bear in our very own living rooms. ”

Unintentional irony that indie pixel games are very popular.

Looks at friend’s Intel Core i9 PC. Watches him play Solitaire…

And aren’t the card-turning animations just about seamless?

Absolutely seamless, except he has an IPS panel, so 4ms lag.

‘Except’? 4ms is amazingly fast, that’s 250fps.
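
The conversion between an interval and a rate is just a reciprocal: 4 ms between frames is 1000 / 4 = 250 frames per second, while a 240 Hz display refreshes about every 4.17 ms. Strictly, a panel’s quoted response time measures how quickly pixels change colour, not how often frames are delivered, so treating it as a frame rate is only a loose analogy. The helper names below are hypothetical.

```python
def fps_from_interval_ms(interval_ms: float) -> float:
    """Frames per second for a given time between frames, in milliseconds."""
    return 1000.0 / interval_ms

def interval_ms_from_fps(fps: float) -> float:
    """Time between frames, in milliseconds, for a given frame rate."""
    return 1000.0 / fps

print(f"4 ms per frame -> {fps_from_interval_ms(4):.0f} fps")
print(f"240 fps        -> {interval_ms_from_fps(240):.2f} ms per frame")
```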

unfortunately graphics alone cannot make good games. put mechanics first and you get an ugly game that plays well. if you do both, you get the Doom reboot.

Makes sense, but Doom is a 3rd-party game, so this would basically play Doom as well as a 2080 Ti, for the cost of a console. Certainly better than “maybe fast loading times” with a 1440p target resolution, upscaled to 2160p.

Yep. Software is in fact a gas; it expands to fill the container available to it. This won’t make for better games, but it’ll make people have to buy a new console. Which brings me to the fact that they really oughta put a baseline consumer gaming PC on that graph up there. Guaranteed this machine will be exceeded by a huge margin within a month, at an embarrassingly lower price point. As always.

Side note: what kind of krokodil mega-crack is the xbox marketing team smoking? What is up with that name progression? xbox – xbox 360 – xbox one – xbox one s – xbox one x – xbox series x?! These aren’t product names, these are the scribblings of an imbecile. I know this is an old dead horse to beat, but jeez. I can’t leave it alone.

Well, if you game on PC then Microsoft already has your money, so I’m not sure why you PC enthusiasts have to make fun of a company for doing what it does best.

Buy your overly expensive PC and I’ll buy my Xbox.

having the best possible tools is no guarantee for success.

ah, the Doom reboot is 64-bit, not 32-bit.

Certainly shows how exponential improvements add up over the years (like COVID-19 cases add up over the days).

PS5 is up to 10.28 TFLOPS (variable clock, but Sony/Cerny claims it will run at that speed most of the time).

I wish Sony were honest about the true power. It’s closer to 9 TFLOPS. But if Microsoft used a variable clock too, they could claim 14.6 TFLOPS.

It’d be really nice if we could run a Jupyter notebook (or the like) and be able to train ML models on it. Oh well, one can always dream…

The console price is amortized with the content available digitally so the company can turn a profit.

OTOH they can release without a controller/controller ports, possibly just sell the app for doing computations on a monthly subscription for whatever makes sense to them, $100 a month or something, to get back their investment.

It might even make them money by creating a market for old consoles that stays relatively high, while still pushing gamers onto newer platforms.

> Add them all up, and you get 6.8 gigaflops – over an order of magnitude less than a single Series X.

That’s over three orders of magnitude. In a single video game console.

I love this industry.

“Sip only 300 W in use” in a small plastic console?

Presumably its fans will wail like banshees.

Well that’s just what happens when you let kids have microphones… oh you mean the air handling.

It runs only one fan, mounted on top with very large port holes. I believe it is a 133mm one.

Think Apple Mac Pro “trash can”, but not round.

Now that I think about it, my small waffle maker is around 300 watts.

While it’s fun to compare the Series X to ASCI Red, it’s an apples and oranges comparison. ASCI Red, White, and all the other TOP500 machines in the DOE labs are benchmarked running Linpack; they are not measured by their theoretical peak throughput (i.e., number of GPU cores x instructions per second). In addition, they are benchmarked on double-precision arithmetic. Finally, because these machines were designed for scientific computation, not entertainment, they utilize error correction throughout.
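
For reference, a GPU’s theoretical peak is usually computed roughly as described here: shader lanes x clock speed x floating point operations per lane per clock. The sketch below assumes the publicly announced configurations (52 compute units at 1.825 GHz for the Series X, 36 compute units at up to 2.23 GHz for the PS5), 64 FP32 lanes per RDNA compute unit, and one fused multiply-add per lane per clock, counted as two operations. None of these inputs come from the article itself, and `peak_fp32_tflops` is a hypothetical helper.

```python
def peak_fp32_tflops(compute_units: int, clock_ghz: float,
                     lanes_per_cu: int = 64, ops_per_lane: int = 2) -> float:
    """Theoretical FP32 peak in TFLOPS.

    Assumes every lane retires one fused multiply-add (two FLOPs) per clock,
    every clock, which real workloads never sustain.
    """
    gflops = compute_units * lanes_per_cu * ops_per_lane * clock_ghz
    return gflops / 1_000

# Assumed, publicly announced configurations (not taken from the article).
series_x = peak_fp32_tflops(compute_units=52, clock_ghz=1.825)
ps5 = peak_fp32_tflops(compute_units=36, clock_ghz=2.23)

print(f"Xbox Series X: {series_x:.2f} TFLOPS peak")
print(f"PS5:           {ps5:.2f} TFLOPS peak")
```

These land on roughly 12.15 and 10.28 teraflops, matching the headline numbers. A Linpack run measures something quite different: sustained double-precision throughput on a real solver, which always comes in below peak.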

Not only are things redundant, they have fancy InfiniBand networking that does RDMA between nodes, and super fast parallel storage like Lustre and GPFS. If your dataset is measured in PB, an actual parallel computing platform with purpose-built software is hard to compare against.

But it is a nice thought experiment. If one could gang Xboxes (xboxi?) together and do all the fancy InfiniBand stuff and SAN stuff cheaply, that’d be a game changer. If you’re the tinfoil hat type (like me), you could easily envision similar devices without the MS label built by contract manufacturers in China for this same type of parallel computing at a similar price tag. Maybe even specifically built for brute forcing encryption keys or the like.

The C64 was released in 1982, so 38 years ago, and if 12.5-17 million C64s could in theory process 6.8 gigaflops (on a CPU without any floating point unit), that would be about 400 flops per second each, which is impressive for a roughly 1MHz machine. Sounds like a totally valid, fair, and totally unbiased comparison.

But this does raise the very serious question of how many 16-bit floating point operations per second the 3.8 GHz 8-core AMD processor in the Xbox Series X can process using integer processing only. Is it more than 12 160 000 flops/seconds (400x3800x8), and how much more?
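
The 12,160,000 figure is a straight clock-and-core scaling of the C64 number. The sketch below reproduces it under the comment’s own premise that each modern core does exactly as much work per clock as the C64’s ~1 MHz 6510. That premise is very pessimistic: a modern core works on 64-bit registers rather than 8-bit ones and issues several instructions per clock, and the C64 figure is dominated by BASIC interpreter overhead, so a native software floating point routine would likely do far better than this estimate. `scaled_estimate` is a hypothetical helper.

```python
C64_FLOPS = 400          # rough BASIC figure from the article
C64_CLOCK_MHZ = 1        # the commenter's round figure for the C64's ~1 MHz 6510
XSX_CLOCK_MHZ = 3800     # 3.8 GHz, as stated in the comment
XSX_CORES = 8

def scaled_estimate(base_flops, base_mhz, target_mhz, cores):
    """Scale a FLOPS figure linearly by clock speed and core count.

    Assumes identical work per clock on both machines, which is the
    (pessimistic) premise of the question, not a realistic model.
    """
    return base_flops * (target_mhz / base_mhz) * cores

estimate = scaled_estimate(C64_FLOPS, C64_CLOCK_MHZ, XSX_CLOCK_MHZ, XSX_CORES)
print(f"Naive clock-and-core scaling: {estimate:,.0f} FLOPS")
```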

ignore the “/seconds” – brain fart.

Well, they did it first :)

“However, some tests have indicate a Commodore 64 is capable of somewhere in the realm of 400 FLOPS per second — in BASIC, with no FPU”

In BASIC it’s probably more like 10 if you’re lucky, for any of the old 8-bitters. Some paid more attention to their BASICs than others. Also, some machines had separate integer variables (A% to Z%), so BASIC could skip all the floating-point nonsense if you specified you didn’t want it, so there’s plenty of speed and no need to avoid ever dividing anything.

Not FLOP-related, but the C64 had built-in sprites and arcade-like tile mapping. Its BASIC had NOTHING, 0, to access these features. Or its famous SID chip: no way of getting a squeak out of it short of reading the technical manual, which you’d have to buy from somewhere, and sending a load of POKEs at it.

If you could understand sending bytes to chips like that, and how to work a tile-mapped display, then essentially you know machine code already and don’t need BASIC. Useless!

Mind, the Atari 8-bits were worse. Really beautiful graphics hardware, sound a bit of a letdown. Except when it’s playing “GHOSTBUSTERS!!!” as a sample. But again, no BASIC support. Its BASIC didn’t even support strings properly! Same CPU as the Commodore, except 1.79MHz instead of 0.98MHz, nice!

An FPU would be a weird thing to add, since there weren’t any for the 6502 AFAIK. I suppose you could somehow strap in an 8087 or whatever the 8-bit version was, and patch BASIC to use that instead of software routines for floating-point. The 8087 worked on a weird interrupt system though, where you’d give it a job and then carry on doing something else, getting an interrupt when it was complete. The 8086 hardware coped with all this patching cos it was designed that way. You could do it on a Commodore but… you just wouldn’t! The C64 had enough stupid extra pointless chips for everything as it is. Not like the lovely Sinclairs!

All that said… most people only ever used one BASIC command and that was LOAD.

two, load and run

>no way of getting a squeak out of it short of reading the technical manual, which you’d have to buy from somewhere

FALSE. The technical manual was included with every C64 – it had instructions for how to program in BASIC, as well as assembly language, and all the custom chip registers, as well as the schematics. I have several of these books from owning several C64s. Commodore made it extremely easy to learn about the entire C64, all of it, everything.

>The C64 had enough stupid extra pointless chips for everything as it is. Not like the lovely Sinclairs!

Now I KNOW that you are a troll. You are ridiculous. Stop spreading disinformation.

Thank you for bringing me back to 1999, to the annual “The C64 was crap!!!” flamewar between comp.os.cbm and comp.sys.sinclair

Fozzey: Nah, commercial games didn’t usually need “run”. Either the OS could recognise machine code and load and run it automatically (the addresses to load and run being in a header), or else they’d have a little BASIC loader that was also set, in the header, to run from line 10, say. So just LOAD!

Neimado: I didn’t have a C64 but my friends did. We tried the little balloon demo in the manual. A lot of poking of mysterious numbers. Are you saying the technical manual could teach machine code to a beginner? I never saw that manual.

The C64 had chips for serial, chips for the tape player, and 2 CIA PIO chips for stuff like RS232 and the user port, which almost nobody actually used. Wiki tells me the C64 used 32 chips. The ZX81 used 4! CPU, RAM, ROM, ULA. Admittedly the ZX81 didn’t compare with the C64’s graphics and sound.

The Speccy used more, most of them were RAM though, which you could only get in 1 bit x 16K or 1 x 64K. Or the 32K cost-saving ones which were 6164s where one or more of the bits didn’t work, so they just sold them as 32K and told you not to use the half of the chip with the errors in it. Speccy peripherals just connected to the CPU bus. You’d need maybe a single logic chip to check for the right address bit being low, and connect, say, the joystick straight to the data bus. Genius! Exactly what’s needed, and coming from a background of someone who made cost-saving his life’s work. Rather than the American machines coming from a mainframe background who thought you had to have a chip for each function, even though the CPU was often doing nothing while it waited for them to do their job. The Spectrum did it all in software, free software!

It’s brilliance: looking at the machine for what it is. What functions do you want, and how might you achieve them simply? Rather than just reimplementing, say, a VAX with an 8-bit CPU. Not literally Vaxen, but resembling one in philosophy. As a hacker you should admire Sinclair’s minimalism and the ways of extracting the most function from the least hardware, by bending the rules a little. Instead of a bus multiplexor, it used sets of 8 resistors!

One of Sinclair’s pocket tellies used a single transistor as RF amplifier, audio amplifier, and part of the voltage regulator. And I bet he was running it out of spec!

ytmytm: Oh, the fun started before 1999. Them were the days! The CSSCGC was my idea though the Sinclair nerds think it was somebody else. I might admit to it being an idea that arose organically from the community, but we were talking about the Cascade 50 and I posted about doing our own first. Some gobshite from back then claims it was his idea cos he used to post a lot more.

This is not really a fair comparison. If you had 17 million Commodore 64s co-ordinating with each other then the co-ordination would consume much of their resources, leaving few for computation.

OTOH, if you had 17 million Commodore 64s and half of them had working SID chips then you’d be rich indeed.

Nah, you’d give them “work units” like SETI@HOME. Something suitable for the RAM and CPU. You could have a hierarchical star network, a dozen or so breadbins chugging away and sending finished units to their coordinator in the middle, and getting a fresh one back. The coordinator does the same up-line with its own coordinator. And that’s like 100-odd machines so far in just 2 levels.

Admittedly 17 million is starting to look large now!

I suppose when you get to the higher levels, the wall you’d hit would be the coordinating C64s not being able to send work units out fast enough. I suppose then the solution might be to balance how big each work unit is, and how long it takes each machine to complete it. An 8K unit takes twice as long to send as a 4K unit, and twice as long to process. But that processing time is measured in perhaps hours, where transmission time is only seconds.

I bet with some really thoughtful network design you could get the throughput optimal within the hardware limits. So bear that in mind if someone knocks on your door with 17,000,000 C64s and miles of wire.
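
The hierarchical-star idea can be sized with a little arithmetic. Assuming a fan-out of 12 (the “dozen or so” above) at every level, the sketch below finds how many levels it takes to reach a given number of worker machines. It counts only the workers at the bottom of the tree; the coordinators themselves would be extra machines. `tree_levels` is a hypothetical helper name.

```python
def tree_levels(workers: int, fan_out: int = 12) -> int:
    """Number of fan-out levels needed so that fan_out ** levels >= workers.

    Uses integer arithmetic to avoid floating point rounding in log().
    """
    levels, reach = 0, 1
    while reach < workers:
        reach *= fan_out
        levels += 1
    return levels

for workers in (144, 17_000_000):
    print(f"{workers:,} workers -> {tree_levels(workers)} levels at fan-out 12")
```

Two levels give 144 workers, and 17 million C64s would need seven levels, since 12^6 is only about 3 million.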

Personally I’d tell him to piss off and come back with Ataris. 1.79MHz CPU you know! Nearly twice the C64’s.

I’m just gonna wait for the emulator in retropie on my Pi 12+ in 15 years.

Looking forward to having one in my living room!

Would a million of these things on the lets-crack-the-CV19-network help?

if so, why aren’t we doing it already?

We are doing it. Google “Folding@home”, then download the client, and you can use the CPU and GPU in your PC to help “decode” the protein molecular structure of COVID-19.

Right now there are enough people helping that it equals more power than multiple supercomputers combined.

“Playstation 3 represented a huge advance in the amount of processing power tucked inside an affordable game console.”

The PS3 was hardly affordable. You PAID for it.

It was cheaper than typical plain Blu-ray players at the time.

That makes it cheap by most measures.

Unlikely you’ll see it used in other realms!? It’s a PC! It’s used in nearly every realm!

As I half remember, I think one of the consoles has a slightly tweaked graphics chipset, and the whole PCB is that little bit tighter cos it’s all identical hardware per machine. But that’s about it. The current-gen (PS4 / Xbox whatever) machines are PCs and so are the ones you mention here. You’re not missing out. It’s peak teraflops! There’s that much tweaking and ingenuity in CPUs now, that only the biggest, or maybe 2 biggest, companies can afford to develop them.

It’s why Apple went with x86, and now consoles have. For your money there’s nothing better, and developing something better would cost you a ton more. Even allowing for Intel being greedy bastards. The rest of the CPU market is cheap ARMs, and that’s about it.

It’s a shame cos the PS3 had that weird 8-core thing on a ring bus with 1 main core that was a bit more powerful. That was a nice smart wacky idea. Bet it was a bugger to program though. Before that, the PS2 had its own early-ish vector thing. The PS1 had a MIPS that was just one of a range of similar performing CPUs made by all sorts of manufacturers.

For high-end CPUs now (and I think this even includes supercomputers) there’s x86 and nothing. It’s nice at least MS and Sony are propping up AMD so there’s still some competition for Intel, otherwise they’d be a monopoly. Maybe that’d be a good thing and they’d get broken up. Not sure how well that’d work though.

“Bet it was a bugger to program though.”

No kidding. Systolic arrays like the PS3 are great for certain types of data-oriented algorithms but don’t work very well for general purpose algorithms that don’t pipeline very well. Frequently you have to mangle your algorithm to fit the array, which leads to lots of subtle bugs and sleepless nights. For that reason one project I worked on had a systolic array for processing data streams and a set of general-purpose cores for everything else.

The PS3 didn’t have 8 cores. It was a single-core PPC CPU with 8 SPEs, but 1 was disabled. The SPEs could handle small tasks, but they were nowhere near as powerful as a CPU core.

It actually wasn’t that difficult to use them, although Sony’s documentation was terrible. The sad thing is that with scale the Cell could have been amazing in, say… a home PC. Having a proper 4+ core CPU and 40+ SPEs would be amazing, and another benefit is that the SPEs could network with another device containing a Cell and use that device’s SPEs to add power to the master device.

So say you had a PC powered by a Cell CPU and your fridge, microwave, TV, DVD player etc. all had Cell CPUs in them; you could cluster all the SPEs from all the devices (easily) over a network to make the PC more powerful.

Sadly nobody cares about Cell and billions in R&D went down the drain.

Yeah theoretically any CPU can augment any other CPU over a network, but the latency is enormous and only gets bigger. With the possible exception of supercomputers (which still try to minimise it), but they aren’t really “networks”. That sounds more like a Sony marketing claim than anything you’d ever actually do. Games are another thing where latency is important, you could never remotely render graphics from your fridge. Did Sony actually claim that as something they were going to use in future?

Everyone is always saying we need to get rid of X86 but there’s nothing remotely able to replace it in any practical way.

All the other good chips seem to be ARM, and people complain about those too.

This is why we can’t have anything that lasts more than five years. Everyone is constantly arguing for yet another clean break upgrade.

It’s just as bad in software, where they do completely unnecessary things like rename a function, then remove the old one after short deprecation times instead of waiting till the next major release like people used to.

Seems like if you rename a function you could just keep the old name hanging around and alias it. The C preprocessor can do that at no cost. But programmers aren’t in charge of programming any more and projects get enormous, so little things like that go out the window.
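
In C the alias is a one-liner such as `#define old_name new_name`. The same idea in Python, sketched below with hypothetical function names, is to keep the old name bound to a thin wrapper that forwards to the new function and emits a `DeprecationWarning`, so existing callers keep working until the next major release. (A plain `checksum = compute_checksum` would also work, just without the warning.)

```python
import functools
import warnings

def compute_checksum(data: bytes) -> int:
    """New, preferred name (hypothetical example function)."""
    return sum(data) & 0xFFFF

def deprecated_alias(new_func, old_name: str):
    """Return a wrapper that warns, then forwards every call to new_func."""
    @functools.wraps(new_func)
    def wrapper(*args, **kwargs):
        warnings.warn(
            f"{old_name}() is deprecated; use {new_func.__name__}() instead",
            DeprecationWarning,
            stacklevel=2,
        )
        return new_func(*args, **kwargs)
    wrapper.__name__ = old_name
    return wrapper

# The old name stays available, so existing callers keep working.
checksum = deprecated_alias(compute_checksum, "checksum")
```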

X86 is horrible, though not as bad as it used to be. Might be better to just replace Intel! It’s odd cos a few years ago most CPUs were actually a RISC with an instruction translator in front. So they weren’t really X86 in a meaningful way. I believe VIA let their “native mode” escape the factory, so people could activate and use it. Not much use in writing native software for VIA chips though.

AIUI now Intel and AMD are back to running X86 on raw silicon. Makes sense, shrinks pipelines, and emulation, or sort-of emulation, is always gonna cost.

Most of the advances Intel has invested their billions in are in silicon manufacturing, so would apply to any CPU architecture. So they could launch something better. But they already tried that, and still, after 40 years, people still want that awful CPU to run Visicalc^H^H^HWindows. Then again Windows has an ARM release, but that’s not meant for desktops, just another tendril for Microsoft to take over the world with. Maybe China or India can hack up a Windows 10 ARM desktop, would save a ton of money. Linux isn’t going to do it.

Also China kind of owes the world something, that would count towards an apology. Of course nobody would trust it!

Ramble ramble…

Threadripper owners laugh in 64 cores

2080Ti owners laugh at 3328 CUs.

People who find microchips humourous need their sense of humour upgrading.

not linear with game experience

Stop treating teraflops like they used to treat bits. Different gpu architectures mean a teraflop can mean different performance.

For example 2 teraflops from the Xbox one S GPU are not the same as 2 teraflops from the series X GPU.

It’s a marketing term that means almost nothing. You could have a 24 teraflop GPU that gets smashed by a 5 teraflop GPU. It’s all about the architecture.

It’s like a CPU, GHz means something but doesn’t indicate actual performance because of IPC, instruction set, even distance. A 1ghz CPU could potentially outperform a 3ghz CPU.
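
A rough way to see the point: throughput is approximately clock speed multiplied by instructions per clock (IPC). The IPC values below are made-up illustrative numbers, not measurements of any real CPU, and real performance also depends on memory, caches, and the workload. `instructions_per_second` is a hypothetical helper.

```python
def instructions_per_second(clock_ghz: float, ipc: float) -> float:
    """Approximate throughput: clock cycles per second x instructions per cycle."""
    return clock_ghz * 1e9 * ipc

# Hypothetical chips: the IPC figures are invented for illustration only.
wide_1ghz = instructions_per_second(clock_ghz=1.0, ipc=4.0)
narrow_3ghz = instructions_per_second(clock_ghz=3.0, ipc=1.0)

print(f"1 GHz, IPC 4: {wide_1ghz / 1e9:.0f} billion instructions/s")
print(f"3 GHz, IPC 1: {narrow_3ghz / 1e9:.0f} billion instructions/s")
```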

While I’m at it lets also talk megapixels. They mean almost nothing. A 1 megapixel image can look significantly better and have more detail than a 20 megapixel image. It’s all about the quality of the sensor taking the image.

Stop using marketing terms as a measurement of performance.

The simple fact is the Xbox series X is going to be the most powerful console for multiple reasons but TFlops isn’t the main one.

None of the things you mention are “marketing terms that mean almost nothing”. Looks like this article attracted a lot of people whose primary education on this is video game magazines.

They’re not the whole story, but they’re not “almost nothing”. A 24 TFLOP GPU had better produce some better graphics than a 5 TFLOP one or something is seriously wrong. All the things you mention are roughly analogous to “performance”. But a lot depends on software too.

All in all the two new machines are almost identical. Which is the “best” is a question for St Augustine. Or kids in playgrounds. It doesn’t matter. Your consumer choices don’t define you as a person. Your moral choices do. There is no “tribe” you join by purchasing this over that. Companies don’t appreciate your “loyalty” except in how it affects their share prices.

Besides that, everyone knows it’s the size of your dick that matters.

I find it funny how people are commenting with what a flop means… Did you… did you not read the article? FLOP in this instance is not an actual word, but 4 different words…🤷♂️🤦♂️😂🤣

I so much want to say:

I find it funny that you appear to have missed the entire sarcasm joke in the comments up above, so much so, that you then actually need to make a comment about it.

But I’m not that kind of person, so I shall not.

Just saying. Anyhoo, I’ve got much coffee to drink.

Sad that so many people appear ignorant; anti-intellectualism isn’t a good look, especially if they know better.

An interesting article.

If the C64 can perform ~400 floating point operations per second, then I do applaud it, with its processor running at 1MHz.

I have just run the following on the VZ200 (Laser 210 in other countries). This is another 8-bit computer from 1983, with a Z80 processor running at 3.5MHz and Microsoft/TRS-80 Level II interpreted BASIC built into 16k of ROM.

```basic
10 A=1.2 : B=2.3 : D%=0
20 C=A+B : D%=D%+1 : GOTO 20
```

Ran it for 60 seconds, hit Ctrl-Break, and then ran “PRINT D% / 60” to give a very rough average of 61 floating point operations per second. It will of course actually be slightly faster if you remove the D% addition and GOTO overheads. It will still be less than 100.
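
For comparison, the same style of measurement is easy to repeat in a modern interpreted language. The sketch below is a rough Python port of the BASIC loop above, with a hypothetical `count_additions` helper: it adds two floats in a loop for a fixed time and reports additions per second. As with the BASIC version, loop, counter, and timer overhead dominate, so the result says far more about the interpreter than about the hardware’s peak FLOPS.

```python
import time

def count_additions(duration_s: float = 1.0) -> float:
    """Add two floats repeatedly for duration_s seconds; return additions per second.

    Like the BASIC original, the count includes loop, counter and timer
    overhead, so it understates the raw speed of a single addition.
    """
    a, b = 1.2, 2.3
    count = 0
    deadline = time.perf_counter() + duration_s
    while time.perf_counter() < deadline:
        c = a + b          # the floating point addition being "benchmarked"
        count += 1         # equivalent of D%=D%+1
    return count / duration_s

print(f"~{count_additions(1.0):,.0f} additions per second")
```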

A far cry from the 12 trillion floating point operations per second that this new Xbox can perform.

…further testing reveals :

173 lines of 10x addition floating point operations (over ten seconds) gives a final figure of 176 FLOPs!

184 lines of 10x division floating point operations (over 16 seconds) gives a final figure of 115 FLOPs.

Averaging these two gives 145 FLOPs for the VZ.

Unfortunately I am a little skeptical about the Commodore 64’s figure of 400 without seeing it in front of me.

Xbox Series X? Will it be rated X?

am I the only one to consider that FLOPS on a console is by far one of the most useless values to judge a console by? what about games?

“Commodore 64 is capable of somewhere in the realm of 400 FLOPS per second”

One per second too much?

“no plans to allow users”

If you buy it, you are the owner, not the user. Do what thou wilt with it. They might have designed the product to be difficult to run user code on, but there is always a way.

With continued convergence of xbox with windows and windows with linux, I would imagine it’s getting easier rather than harder, as long as you don’t care what it’s running on top of.

I just wish I could put Windows on my XBOX S, would be a great little machine!