Google and Apple have joined forces to issue a common API that will run on their mobile phone operating systems, enabling applications to track people who you come “into contact” with in order to slow the spread of the COVID-19 pandemic. It’s an extremely tall order to do so in a way that is voluntary, respects personal privacy as much as possible, doesn’t rely on potentially vulnerable centralized services, and doesn’t produce so many false positives that the results are either ignored or create a mass panic. And perhaps much more importantly, it’s got to work.

Slowing the Spread

As I write this, the COVID-19 pandemic seems to be just turning the corner from uncontrolled exponential growth to something that’s potentially more manageable, but it’s not clear that we yet see an end in sight. So far, this has required hundreds of millions of people to go into essentially voluntary quarantine. But that’s a blunt tool. In an ideal world, you could stop the disease globally in a couple of weeks if you could somehow test everyone and isolate those who have been exposed to the virus. In the real world, truly comprehensive testing is impossible, and figuring out whom to isolate is extraordinarily difficult due to two factors: COVID-19 has a long incubation period during which it is nonetheless transmissible, and some or even most people don’t know they have it. How can you stop what you can’t see, especially when, by the time you can detect it, it’s a week too late?

One promising approach is to isolate those people who’ve been in contact with known cases during the stealth contagion period. To do this is essentially to keep a diary of everyone you’ve been in contact with for the last week or two, and then if you eventually test positive for COVID-19, alert them all so that they can keep from infecting others even before they test positive: track and trace. Doctors can do this by interviewing patients who test positive (this is the “contact tracing” we’ve been hearing so much about), but memory is imperfect. Enter a technological solution.

Proximity Tracing By Bluetooth LE

The system that Apple and Google are rolling out aims to allow people to see if they’ve come into contact with others who carry COVID-19, while respecting their privacy as much as possible. It’s broadly similar to what was suggested by a team at MIT led by Ron Rivest, a group of European academics, and Singapore’s open-source BlueTrace system. The core idea is that each phone emits a pseudo-random number using Bluetooth LE, which has a short range. Other nearby phones hear the beacons and remember them. When a cellphone’s owner is diagnosed with COVID-19, the numbers that person has beaconed out are then released, and your phone can see if it has them stored in its database, suggesting that you’ve had potential exposure and should maybe get tested.

Notably, and in contrast to how tracking was handled in South Korea or Israel, no geolocation data is necessary to tell who has been close to whom. And the random numbers change frequently enough that they can’t be used to track your phone throughout the day, but they’re generated by a single daily key that can be used to link them together once you test positive.

(In Singapore, the government’s health ministry also received a copy of everyone’s received beacons, and could do follow-up testing in person. While this is doubtless very effective, due to HIPAA privacy rules in the US, and similar patient privacy laws in Europe, this centralized approach is probably not legal. We’re not lawyers.)

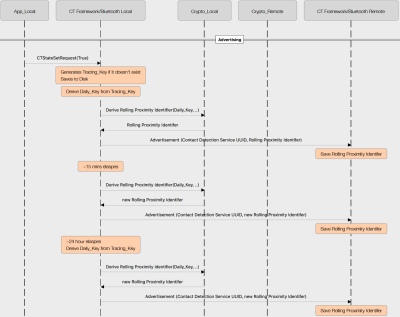

The Crypto

In the Apple/Google crypto scheme (PDF), your phone generates a secret “tracing key” that’s specific to your device, just once. It then uses that secret key to generate a “daily tracing key” every day, and from the daily key it generates a “rolling proximity identifier” every 15 minutes. Each more frequent key is generated from its parent by hashing with a timestamp, which makes it nearly impossible to go up the chain (deducing your daily key from the proximity identifiers that are beaconed out, for instance), while everything can easily be verified in the downstream direction. The 32-byte tracing key is big enough that collisions are extremely unlikely, and the individual-specific tracing key need never be revealed.
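
To make the key chain concrete, here is a minimal Python sketch of the three levels. It is not the Apple/Google reference code: it assumes HMAC-SHA256 truncated to 16 bytes as the one-way “hash with a timestamp”, the byte labels `b"daily"` and `b"rpi"` are made up for illustration, and the 96 intervals per day simply follow the article’s 15-minute rotation.

```python
import hashlib
import hmac
import secrets

# The article describes a new identifier every 15 minutes: 24 * 60 / 15 = 96 per day.
INTERVALS_PER_DAY = 96


def new_tracing_key() -> bytes:
    """Generate the device's 32-byte secret tracing key; done once, never revealed."""
    return secrets.token_bytes(32)


def daily_tracing_key(tracing_key: bytes, day_number: int) -> bytes:
    """Derive one day's key from the tracing key with a one-way keyed hash."""
    # Assumption: HMAC-SHA256 and the label b"daily" stand in for the spec's exact function.
    msg = b"daily" + day_number.to_bytes(4, "little")
    return hmac.new(tracing_key, msg, hashlib.sha256).digest()[:16]


def rolling_proximity_id(daily_key: bytes, interval: int) -> bytes:
    """Derive the identifier broadcast during one interval of the day."""
    if not 0 <= interval < INTERVALS_PER_DAY:
        raise ValueError("interval must be in [0, INTERVALS_PER_DAY)")
    msg = b"rpi" + interval.to_bytes(1, "little")
    return hmac.new(daily_key, msg, hashlib.sha256).digest()[:16]


# Downstream direction: tracing key -> one daily key -> that day's 96 identifiers.
tk = new_tracing_key()
dk = daily_tracing_key(tk, day_number=18365)
ids = [rolling_proximity_id(dk, i) for i in range(INTERVALS_PER_DAY)]
```

Going downstream costs a couple of HMAC calls; going upstream from a broadcast identifier back to `dk` would mean inverting HMAC-SHA256, which is the property the scheme leans on.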

If you test positive, your daily keys for the time window that you were contagious are uploaded to a “Diagnosis Server”, which then sends these daily keys around to all participating phones, allowing each device to check the beacons that it received against the list of contagious people-days that it gets from the server. None of your exposure data needs to leave your phone, ever.
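
A minimal sketch of that on-device check, assuming identifiers are derived with HMAC-SHA256 (a stand-in, not necessarily the spec’s function) and that the phone keeps a plain set of every identifier it has heard. `find_exposures` is a hypothetical name; the point is that the phone regenerates each published daily key’s identifiers locally and compares them against its own log, so nothing it heard is ever sent out.

```python
import hashlib
import hmac


def rolling_proximity_id(daily_key: bytes, interval: int) -> bytes:
    """Stand-in derivation: HMAC-SHA256 of the interval number, truncated to 16 bytes."""
    return hmac.new(daily_key, b"rpi" + interval.to_bytes(1, "little"), hashlib.sha256).digest()[:16]


def find_exposures(heard: set[bytes], diagnosis_keys: list[bytes], intervals_per_day: int = 96) -> list[bytes]:
    """Return the published daily keys that regenerate an identifier this phone heard.

    Runs entirely on the phone: only the diagnosis keys are downloaded, and the
    set of heard identifiers is never uploaded anywhere.
    """
    return [
        dk for dk in diagnosis_keys
        if any(rolling_proximity_id(dk, i) in heard for i in range(intervals_per_day))
    ]


# Toy data: one infected person's daily key, one stranger's key, and our local log.
infected_key = bytes(16)
stranger_key = b"\xff" * 16
heard_log = {rolling_proximity_id(infected_key, 40), b"\x01" * 16}
exposures = find_exposures(heard_log, [infected_key, stranger_key])
```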

The diagnosis server uses the daily keys of contagious individuals so that your phone will be able to distinguish between a single short contact, where only one identifier from a certain daily key was seen, and a longer, more dangerous contact, where multiple 15-minute identifiers were seen from the same infected daily key, even though your phone can’t otherwise link the rolling proximity identifiers together over time. And because the daily keys are derived from your secret tracing key in a one-way manner, the server doesn’t need to know anything about your identity if you’re infected.
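
Because a published daily key regenerates all of that day’s identifiers, the phone can count how many distinct intervals it heard from each infected key. A hedged sketch, again using HMAC-SHA256 as a stand-in derivation; the `min_intervals=2` cutoff in the hypothetical `classify` helper is an illustrative policy for treating a single short contact as low risk, not a value taken from the spec.

```python
import hashlib
import hmac
from collections import Counter


def rolling_proximity_id(daily_key: bytes, interval: int) -> bytes:
    """Stand-in derivation: HMAC-SHA256 of the interval number, truncated to 16 bytes."""
    return hmac.new(daily_key, b"rpi" + interval.to_bytes(1, "little"), hashlib.sha256).digest()[:16]


def matched_intervals(heard: set[bytes], diagnosis_keys: list[bytes], intervals_per_day: int = 96) -> Counter:
    """Count, per infected daily key, how many of its interval identifiers were heard."""
    counts: Counter = Counter()
    for dk in diagnosis_keys:
        for i in range(intervals_per_day):
            if rolling_proximity_id(dk, i) in heard:
                counts[dk] += 1
    return counts


def classify(counts: Counter, min_intervals: int = 2) -> dict[bytes, str]:
    """Hypothetical policy: a single matched interval counts as a brief, low-risk contact."""
    return {dk: ("longer contact" if n >= min_intervals else "brief contact") for dk, n in counts.items()}


commuter = bytes(16)        # heard once, e.g. passing in a corridor
coworker = b"\x07" * 16     # heard for three consecutive intervals
heard_log = {rolling_proximity_id(commuter, 30)}
heard_log |= {rolling_proximity_id(coworker, i) for i in (36, 37, 38)}
counts = matched_intervals(heard_log, [commuter, coworker])
verdicts = classify(counts)
```

Where to put `min_intervals` is exactly the false-positive versus missed-case tradeoff: raising it quiets the elevator-shaft alarms but also drops the sneeze in the toilet paper aisle.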

The ACLU produced a whitepaper that predates the proposed plan, but covers what they’d like to see addressed on the privacy and security fronts, and viewed in that light, the current proposition scores quite well. That it lines up with other privacy preserving “contact tracing” proposals is also reassuring.

What Could Possibly Go Wrong?

The most obvious worry about this system is that it will generate tons of false positives. A Bluetooth signal can penetrate walls that viruses can’t. We imagine an elevator trip up a high-rise apartment building “infecting” everyone who lives within radio range of the shaft. At our local grocery store, the cashiers are fairly safely situated behind a large acrylic shield, but Bluetooth will go right through it. The good news is that these chance encounters are probably short, and a system could treat these single episodes as a low-exposure risk in order to avoid overwhelming users with false alarms. And if people are required to test positive before their daily keys are uploaded, rather than the phones doing something automatically, it prevents chain reactions.

But if the system throws out all of the short contacts, it also misses that guy with a fever who has just sneezed while walking past you in the toilet paper aisle. In addition to false positives, there will be non-detection of true positives. Bluetooth signal range is probably a decent proxy for actual exposure, but it’s imperfect. Bluetooth doesn’t know if you’ve washed your hands.

The cascaded hash of secret keys makes it pretty hard to fake the system out. Imagine that you wanted to fool us into thinking that we had been exposed. You might be able to overhear some of the same beacons that we do, but you’ll be hard-pressed to derive a daily key that will, at that exact time, produce the rolling identifier that we received, thanks to the one-way hashing.
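
The same one-way property is what a receiving phone relies on when checking a match. This sketch assumes (a design choice for illustration, not something the article specifies) that the phone records the interval in which each identifier was heard, so a daily key only counts as a match if it produces that identifier for that interval. The derivation is the same HMAC-SHA256 stand-in as elsewhere; `matches` is a hypothetical helper.

```python
import hashlib
import hmac
import secrets


def rolling_proximity_id(daily_key: bytes, interval: int) -> bytes:
    """Stand-in derivation: HMAC-SHA256 of the interval number, truncated to 16 bytes."""
    return hmac.new(daily_key, b"rpi" + interval.to_bytes(1, "little"), hashlib.sha256).digest()[:16]


def matches(daily_key: bytes, observation: tuple[int, bytes]) -> bool:
    """True only if the key produces the observed identifier for the interval it was heard in."""
    interval, observed_id = observation
    return hmac.compare_digest(rolling_proximity_id(daily_key, interval), observed_id)


real_key = secrets.token_bytes(16)
genuine = (20, rolling_proximity_id(real_key, 20))   # heard at interval 20
replayed = (50, genuine[1])                           # same identifier rebroadcast later
guessed_key = secrets.token_bytes(16)                 # attacker's guess without real_key
```

A guessed key would have to hit one specific 128-bit output, and even the genuine identifier fails when replayed in a different interval, which is the “at that exact time” part of the argument.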

Mayhem, Key Loss, and It’s Only an API

What we can’t see, however, is what prevents a malicious individual from claiming they have tested positive for COVID-19 when they haven’t. If reporting to the diagnosis servers is not somehow audited, it might not take all that many antisocial individuals to create an illusory outbreak by walking a phone around in public and then reporting it. Or doing the same with a programmable Bluetooth LE device. Will access to the diagnosis server be restricted to doctors? Who? How? What happens when a rogue gets the credentials?
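
One possible answer to the auditing question, sketched under loud assumptions: a testing lab issues a one-time code to a patient who has tested positive, and the diagnosis server publishes keys only when that code verifies. Everything here is hypothetical: `issue_code`, `DiagnosisServer`, and the shared `authority_secret` (an HMAC key standing in for a real signature scheme) are illustrations, not part of the Apple/Google proposal.

```python
import hashlib
import hmac
import secrets


def issue_code(authority_secret: bytes) -> tuple[bytes, bytes]:
    """Hypothetical: a testing lab hands a confirmed-positive patient a one-time code."""
    nonce = secrets.token_bytes(16)
    tag = hmac.new(authority_secret, nonce, hashlib.sha256).digest()
    return nonce, tag


class DiagnosisServer:
    """Toy server that publishes daily keys only with a valid, unused authority code."""

    def __init__(self, authority_secret: bytes) -> None:
        self._secret = authority_secret
        self._used: set[bytes] = set()
        self.published: list[bytes] = []

    def submit(self, daily_keys: list[bytes], nonce: bytes, tag: bytes) -> bool:
        expected = hmac.new(self._secret, nonce, hashlib.sha256).digest()
        if nonce in self._used or not hmac.compare_digest(expected, tag):
            return False  # forged or reused code: nothing is published
        self._used.add(nonce)
        self.published.extend(daily_keys)
        return True


secret = secrets.token_bytes(32)
server = DiagnosisServer(secret)
nonce, tag = issue_code(secret)
keys = [secrets.token_bytes(16) for _ in range(3)]
first = server.submit(keys, nonce, tag)
again = server.submit(keys, nonce, tag)
forged = server.submit(keys, secrets.token_bytes(16), secrets.token_bytes(32))
```

This only moves the trust problem: whoever holds `authority_secret` can mint codes, which is exactly the rogue-credentials scenario.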

The presence of a secret tracing key, even if it is supposed to reside only on your phone, is a huge security and privacy risk. It is the keys to the castle. A cellphone is probably the least secure computing device that you own — apps that leak data about you abound, whether intentionally malicious or created by corporations that simply want to know you better. If we’re lucky, this tracing key is stored encrypted on the phone, at least making it harder to get at. But it can’t be stored safely (read: hashed) because it needs to be directly available to the system to generate the daily keys.

Will diagnosis keys be revocable? What happens when a COVID test yields a false positive? You’d certainly want to be able to un-warn all of the people in the system. Or do we require two subsequent positive tests before registering with the server? And how long is a person contagious after being tested? We hope that a diagnosed individual would stay confined at home, but we’re not naive enough to believe that it always happens. When does reporting of daily keys stop? Who stops it?

Which brings us to the apps. What Apple and Google are proposing is only an application programming interface (API), to be replaced eventually with an OS-level service. This infrastructure only enables third parties to create their Bluetooth-tracker applications, the diagnosis servers, and all the rest of the infrastructure. The work there remains TBD. These will naturally have to be written by trusted parties, probably national health agencies. Even if this is a firm foundation, trust will have to be placed higher up along the chain as well. For instance, it says in the spec that “the (diagnosis) server must not retain metadata from clients”, and we’ll have to trust them to be doing that right, or else the promise of anonymity is in danger.

We’re nearly certain that malign COVID-19 apps will also be written to take advantage of naive users — see the current rash of coronavirus-related phishing for proof.

Will it Work?

This is uncharted territory, and we’re not really sure that this large-scale tracing effort will even work. Something like this did work in Singapore, but there are many confounding factors. For one, the Singapore system reported contacts directly to the Health Ministry, and an actual person would follow up on your case thereafter. The raw man- and woman-power mobilized in the containment effort should not be underestimated. Secondly, Singapore also suffered through SARS and mobilized amazingly effectively against the avian flu in 2005. They had practiced for pandemics, caught the COVID-19 outbreak early, and got ahead of the disease in a way that’s no longer an option in Europe or the US. All of this is independent of Bluetooth tracking.

But as the disease lingers on, and the acute danger of overwhelmed hospitals wanes, there will be a time when precautions that are less drastic than self-imposed quarantine become reasonable again. Perhaps then a phone app will be just the ticket. If so, it might be good to be prepared.

Will it work? Firstly, and this is entirely independent of servers or hashing algorithms, if COVID-19 tests aren’t widely and easily available, it’s all moot. If nobody gets tested, the system won’t be able to warn people, and they in turn won’t get tested. The system absolutely hangs on the ability of at-risk participants to get tested, and ironically proper testing and medical care may render the system irrelevant.

Human nature will play against us here, too. If someone gets a warning for a few days in a row, but doesn’t feel sick, they’ll disregard the flashing red warning signal, walking around for a week in disease-spreading denial. Singapore’s system, where a human caseworker comes and gets you, is worlds apart in this respect.

Finally, adoption is the elephant in the room. The apps have yet to be written, but they will be voluntary, and can’t help if nobody uses them. But on the other side of the coin, if contact tracing does get widely used, it will become more effective, which will draw more participants in a virtuous cycle.
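
Adoption matters more than it might seem, because a contact is only recorded if both people are running the app. In a deliberately crude model, assuming each person uses the app independently with probability p, and that an infected user’s keys reach the diagnosis server with probability t (tested, positive, uploaded), the chance that a given contact gets flagged is roughly:

$$P(\text{contact flagged}) \approx p^{2}\, t$$

With t = 1, 50% adoption flags only 0.5² = 25% of contacts, while 60% adoption flags 0.6² = 36%. That quadratic growth is one way to see the virtuous cycle: each new participant makes the app more useful to everyone already using it. The model ignores who actually meets whom, so real numbers will differ.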

The Devil is in the Details

The framework of the Apple/Google system seems fairly solid, and most of the remaining caveats look like they’re buried in the implementation. The tradeoff between false positives and missed cases is determined by how many and what kind of Bluetooth contacts the system reads as “contagious”. App and server security are TBD, but can in principle be handled properly. Assuming that all of the collateral resources, like easily available COVID-19 tests, can be brought into play, all that’s left is the hard part. How can we make sure it goes as well as possible?

The Electronic Frontier Foundation came out with a report just after the Apple/Google framework was released, and while it gives a seal of approval to the basics, it also has a laundry list of safeguards for any applications that are developed on that framework. Consent, minimization of data collection, information security, transparency, absence of bias, and the ability to expire the data once the crisis is over are their guidelines. These line up with the recommendations of the German Chaos Computer Club, which additionally pushes for open-source code so that it can be audited by those who have concerns about how their data is being handled.

It’s heartening to see many of the privacy concerns addressed by Google and Apple as well, but we’d like to hear some discussion about what their criteria are for shutting the whole thing down when it’s all over. A global pandemic is perhaps a good time to doff your tinfoil hat in service of the greater good, but we do not want ubiquitous contact tracing to become the new normal.

While some might dismiss privacy concerns and software details as secondary to getting us through the COVID-19 pandemic, the success or failure of this project lies in whether or not people opt in, get tested when they should, and behave appropriately when they show up positive. People will only opt in if they trust the system, and they will only get tested if that’s made as easy as possible. Only one of these two can be solved with software.

Great article!

More info on the subject by

Bruce Schneier https://www.schneier.com/blog/archives/2020/04/contact_tracing.html

and Ross Anderson https://www.lightbluetouchpaper.org/2020/04/12/contact-tracing-in-the-real-world/

Cheers

Great article! But it seems there is earlier work called PocketCare (from circa 2015?) which used Bluetooth to do contact tracing; this was done by a group of people from SUNY Buffalo. I found a paper, and there is also an .apk: https://www.researchgate.net/publication/332932532_PocketCare_Tracking_the_Flu_with_Mobile_Phones_Using_Partial_Observations_of_Proximity_and_Symptoms

Bravo! Excellent article Elliot. I would much appreciate similar attention being paid to the many tracker / self-diagnosis apps springing up (eg: Corotrac, Covid Symptom Tracker, etc.) that seem to be jumping the gun with less thought on privacy and security..

The “tests-available” part (and also the delay caused by waiting for the result) could be mitigated (maybe even selectively by time or region) by allowing users to check off potential symptoms, interpreting them according to, e.g., health authority rules, and already starting to warn the “proximity contacts” before there is a test result. That makes it more uncertain, but also quicker.

Yeah. That’s where it can really go off the rails, though. Imagine a high-rise building where each inhabitant can see a couple of neighbors’ beacons. Without testing, it would “spread” through the whole building as fast as the update rate on the apps.

With testing, only the actually contagious person is in the daily reports.

Well, you could also wait for the recipients to show or acknowledge symptoms. But selectively, even the automatic approach would make sense: if the region is relatively hard hit, a lockdown of the building is probably a good idea, instead of a complete lockdown like the ones now in place in some regions.

A tracing app doesn’t make everything normal. It’s just one possible tool for keeping lockdowns confined to particular areas and timespans for individual people (or groups thereof, e.g. a building), as opposed to complete lockdowns.

The problem is you’ve involved worried people whose minds can conjure up all number of symptoms and side effects. Better to rely on a scientific process that doesn’t care if someone nearby has been licking door handles or disinfecting them.

True in the general case. It’s then a tradeoff between “false/overcautious” lockdowns and the time until a verified result.

Am I the only one that doesn’t keep BT turned on except when I’m using it?

No. It saves battery.

Bluetooth LE draws virtually nothing. That is why fitness trackers can run on a speck-sized battery.

Exactly, all of the advice up until now has been DO NOT TRUST BLUETOOTH and the regular stream of security flaws found in the technology seems to support this assertion. Location tracing at the cell tower level is already possible and in my country (Australia) that metadata is already recorded so that should be enough. This BT thing is a great idea except for the fact that people have multiple good reasons why they will not comply with it, and there are also technical reasons why it cannot even be enforced, e.g. on rooted phones with certain mods.

You shouldn’t trust Bluetooth for secure communication. But for sending out a beacon the security shouldn’t matter I guess?

I rarely use BT except in my car; maybe I should eliminate that too.

BT is always turned off on all my devices; I can’t stand it, as it gives me strong headaches. For instance, 10 minutes of using a BT speaker and I’m good for a 1- or 2-hour headache.

This is strange because I don’t have this kind of effect with wifi, which uses the same kind of frequencies as BT, around 2.4 GHz (and I’ve played with lots of wifi equipment).

I’m really interested to know more about why I get headaches with BT and not with WIFI, if anyone has a clue.

You may need to do some testing. For example, do you get a headache if bluetooth is merely enabled (sending a few advertising packets, very low emissions) or only if it’s sending a continual stream of many packets (eg: for your speaker)? Does bluetooth classic (such as used for your speaker) versus bluetooth LE (eg: as used for fitness equipment) matter? Does enabling a bluetooth speaker (without playing anything, or even without pairing it to a nearby device) still have the same effect? All bluetooth speakers? Do you get a headache if you are around other people with their bluetooth phones or devices enabled?

One key test would be to have a friend enable or disable bluetooth on their phone randomly, without telling you which is which. Is it possible that knowing that bluetooth is on is required for you to get the headaches? (I’m not doubting that you feel the headaches, just trying to help you understand the sources.) As you note, Bluetooth uses the same RF frequencies as WiFi, and at substantially lower power, so it’s odd that it would affect you while WiFi does not; one factor to weigh is that you know which is enabled, so see if eliminating that knowledge has any effect.

Well, in some countries, various scenarios like this are already happening. For example, here in the Czech Republic there is a group of companies, half of which are heavily invested in big data processing and internet tracking, who really tried and still try to convince the government that they can really help, if only they get the right data: mobile operator position data and credit/debit card transaction logs are what they are looking for. Of course they are ready to help for “free” – you know, like charity. And of course it is all like “we value everybody’s privacy”, “we will delete the data after this all ends (this one is especially laughable)”, “we just want to help”. The situation is now a bit chaotic, but it may be that they will succeed.

One of major internet companies here added Bluetooth + GPS based tracking feature into their maps app. It is opt-in and they now claim 750000 users opted in. When asked about privacy, their spokesperson said “The fear of hurting someone else is far greater than the fear of sharing your data.”

Looks like Poland is even worse. Looks like they made privately developed and operated tracking app mandatory. Ouch.

To my knowledge, the app is mandatory for people who are under quarantine. At least nobody I know (including myself) was forced to install any app.

In China they track dissidents using similar technology, and assign negative social points to people associating with them or even being close to them.

Yeah, China was always a test bed for tracking tech before bringing it to the west. Tech companies designed it over there to keep tabs on their manufacturing slave labor force (and a lot of the farms of people they use to fraudulently prop up what they call “AI”). But they will take any excuse, exploit any disaster–to bring it home. Anyone who thinks that’s a China problem and not something on the cusp of becoming worldwide is a rube. We should dismantle these companies ASAP.

Ahhh, privileged Apple and Google techies, assuming that everyone has a smartphone and is glued to it like they are…

There is no privilege in having a smart phone. Adjusted for inflation the modern phones are less expensive than the dial phones of the 70s, the monthly rate is also much less and there are no long distance charges.

Lots of homeless people have smart phones because their utility is so high and the cost fairly low. I think some places even give them out for free to impoverished people.

Mobile ownership is extremely high even in the majority world. People are more likely to have a mobile than clean water.

Anyway, use your privilege of owning a phone to reduce your risk of infecting others.

I don’t think you understand the situation. In the end, the majority have to be either infected or treated with a vaccine for this to be over, and last I heard, the vaccine is expected to take at least one year.

and the vaccine will be 14% (or less..) effective much like the seasonal flu vaccine… so people will still have to get the virus to become immune, but you can bet all this “track humanity’s every move” insanity will continue. Hilarious if anyone thinks this is actually achievable without infringing upon the natural right to privacy we all have, or by creating an atmosphere where everyone loses their mind and starts denying that their natural rights, or anyone else’s, exist. We’re well on our way. The vaccine won’t “save us all” and neither will a bluetooth connection.

“14% (or less) effective” is both wrong and misleading.

It is wrong because the current data from CDC shows that influenza vaccines from 2004 to 2019 have been on average approximately 40% effective *at preventing infection*.

It is misleading because the 40% effectiveness data doesn’t mean that the other 60% got a full blown infection. When someone is infected after vaccination, their infection is significantly weaker and almost never deadly.

So, when a vaccine is produced, it will save most of us. Even those who still get infected will most likely have a weaker infection that will not kill.

Also, scientists have not found the novel coronavirus to mutate quickly enough to thwart a vaccine effort.

I don’t like the idea of “tracking humanity’s every move” either. Notice how Apple and Google are making a voluntary opt-in system. I think if anything, that’s how it should be.

I hope everyone becomes exposed sooner rather than later. At some point 100% of people will be exposed, so why not speed that up? Yes, some will die, but those remaining will then be mostly immune, preventing a re-outbreak.

A vaccine, if any ever comes around, will not be needed as the virus will be defeated. Also, how many will die from the vaccine?

I’m glad I don’t have a phone this will work on.

But many that would have lived (with hospital treatment) will also die because there is not enough hospital treatment to go round if everybody gets infected at the same time.

Read your history: having people exposed sooner is something we haven’t seen in generations. It is not nice. (People die not just from the disease, but from otherwise preventable causes, lack of services, people being afraid to go out, etc.)

Look at yellow fever. Bubonic plague.

The 1918 flu epidemic was a comparatively mild example.

Death is also an alternative you should consider.

Why did you buy it? Seems like a waste.

National health agencies are NOT trusted parties

In some places, that’s true. That complicates the best-case scenario significantly.

This protocol doesn’t necessarily pass any info about your identity on to the agencies, though, so they can only really tie it to you through your IP address / etc. If you’re truly paranoid, yet altruistic, you could presumably pass on your daily keys through Tor if you test positive.

I still don’t know who gets to post to the “diagnosis server”, or who runs them, and those are pretty big details. Anyone in the loop on this?

Sure, let’s see to it that 20+% of the population suffers long term kidney problems, that’s the ticket.

In the end, they will. But we want a bed for this person in a hospital. Hence the intelligent lockdown we have now in the Netherlands: slow down the spread so health organisations can cope. That is all we need. But I will not use my phone for this. An API is not what we need. That means EVERY app has a possibility to get to the info, even after this is finally over. If you keep this in one app only, uninstalling the app stops it. This functionality should not be part of an OS.

Sometimes these things aren’t a choice. If you think an app will solve that, you’re too gullible. Too often I see people framing this as if every illness is the result of intentional human decision, as if we have perfect power and foresight. We don’t. You overestimate our agency. Disasters will happen, and forking over all our rights won’t actually prevent them. People who try to sell that to you are taking advantage of your fear and naivety.

This is absolutely terrifying. Between DIY medical equipment and tech solutions that give away far too much to corporations this site is turning into Goop for nerds.

Goop?

As in the hand washing cream?

That’s good stuff. Somehow it combines the best of soap and kerosene in one product! My mind boggles at the chemistry that must be involved.

I think that’s exactly what’s in the cheap versions… turn black oily hands into a uniform grey pallor with one application..

It also works to mitigate poison ivy and pine sap. I have gotten into P.I. and washed with it, which resulted in no irritation. In my case it was the orange stuff…GoJo?…but they all work about as well.

Marvelous stuff

Just turn it off and put it in a cookie/biscuit tin.

You’ll have it if you need it.

I don’t suggest our governments do this (though I’m sure some already have). But Apple, Google, and their network carriers already know where most of us have been pretty precisely. A simple SQL comparison of time and location could generate a “Crossing map” for each of us. We’ve already signed off on letting them track us. Perhaps the app could just ask for what they already know. 20 feet is good enough for me.

They know only coarsely; so for example they may know that you were probably in or near a given store (or shopping center), but not which aisle you were in at 2:43pm, when somebody else passed you.

The point of the bluetooth based tracking is to do the matching ON YOUR OWN PHONE, rather than at a central location.

If/when testing capacity increases to a level that can manage the active number of infections, then hopefully social media influencers (e.g. Kylie Jenner, etc.) will be able to popularize the use of this app, and we can break the COVID-19 transmission chains the way they are doing in some other countries (e.g. Taiwan, etc.).

The first part of that statement is a real, workable solution that makes sense. The second part is tech kleptocrat vapor. We don’t have any reason to believe in contact tracing. It’s a scam. Serious testing programs do all the work, the app just exists to capitalize on the moment. You know, like a vulture.

This is just the tip of the iceberg, and if you really can’t see this coming, then I am not sure what to do to warn you. What is to stop them from saying, well, we see an uptick in crime in this area, and to ensure the safety of residents we need the data from all phones? Or, whoops, new virus strain, now we need to know everywhere you have been, and oh yeah, to pay for it we are gonna sell the data associated with your ID to advertisers to see if you are stopping at their product to look or not.

Remember Google/Apple are not charities and one of them has made a literal fortune selling your data, the other swears it charges you so much so they never have to *wink* *wink*

How long till we are dealing with a social currency that dictates when we can and cannot come out based on past actions from this tracking. This screams terrible idea! I really hope you all see that. We have given enough, when do we say enough is enough. Remember you have nothing to fear but fear itself. I for one do not need a device to warn me about possible exposure. I am fine being an adult and limiting my excursions out of the house and if I do leave I make sure to wear gloves and cover my face.

“And most of the remaining caveats look like they’re buried in the implementation.” – so great on paper, unworkable in reality.

I think your mistake is that you think the purpose is to fix the covid crisis. This is gonna find permanent, spooky uses forever after, much like the legislation after 9/11. Both for adtech (really, we’re letting Google handle this? Yikes) and for ever-expanding state surveillance, which for some reason we’ve collectively grown apathetic about. We seem to just let it grow unhindered.

Every emergency is a lucrative opportunity for these types. They know everyone is terrified and distracted and will absolutely not let it go to waste. It’s not even radical to suggest; the usual suspects immediately get to work on these kinds of projects after nearly every single dramatic event. It is known.

Certainly a concern, though I’m seeing benefits of forced Work From Home and Shelter in Place too. Wider adoption of communication technologies leading to reduction of unnecessary business travel. Could there be positive uses for this too after the COVID-19 crisis? Like contacting people who may have unknowingly witnessed a crime or Amber Alerts (missing children notifications in the US). There may be many positive uses if enough people opted-into private and distributed contact tracing.

Here in Italy they’re working on a similar thing; fortunately there are laws that prevent it from being mandatory. The thing is that this is setting a precedent. Imagine if the US had had these tools during the Cold War era, or when 9/11 happened: you’d all be living under 24/7 government surveillance. I don’t even have to cite Orwell; just look at China and its social credit system. If you can convince people it’s for their own good, then you can do whatever you want.

If Italy makes the app mandatory (which they won’t), I’ll consider going back to my Nokia or making a Faraday cage phone case.

Seems like a relatively simple solution to the bad actor reporting a fake positive would be for “cryptographically trusted” testing centers to have a corresponding tool that could sign the pseudo random positive ID and report it from there.
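A minimal sketch of that idea, assuming the testing center and the diagnosis server share a secret key. HMAC-SHA256 from Python’s standard library stands in here for the public-key signature a real deployment would more likely use; `sign_positive_key`, `accept_upload`, and `CENTER_SECRET` are hypothetical names, not part of any published API.

```python
import hashlib
import hmac
import secrets

# Hypothetical secret shared by an accredited testing center and the
# diagnosis server. HMAC is a symmetric stand-in for a real signature scheme.
CENTER_SECRET = secrets.token_bytes(32)


def sign_positive_key(diagnosis_key: bytes, secret: bytes = CENTER_SECRET) -> bytes:
    """Testing center: tag a patient's key after a confirmed positive test."""
    return hmac.new(secret, diagnosis_key, hashlib.sha256).digest()


def accept_upload(diagnosis_key: bytes, tag: bytes, secret: bytes = CENTER_SECRET) -> bool:
    """Diagnosis server: publish the key only if its tag verifies."""
    expected = hmac.new(secret, diagnosis_key, hashlib.sha256).digest()
    # Constant-time comparison avoids leaking tag bytes through timing.
    return hmac.compare_digest(expected, tag)


key = secrets.token_bytes(16)  # stand-in for one uploaded daily key
tag = sign_positive_key(key)
print(accept_upload(key, tag))  # True
```

A self-reported “positive” without a valid tag from a testing center would simply never be published, which addresses the fake-positive abuse without the server learning anything new about the user.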

“In Singapore, the government’s health ministry also received a copy of everyone’s received beacons, and could do follow-up testing in person. While this is doubtless very effective, due to HIPAA privacy rules in the US, and similar patient privacy laws in Europe, this centralized approach is probably not legal.”

Yeah except the Hippies and Snowflakes in California just suspended HIPAA enforcement for Telemedicine. On April 3, 2020, California Governor Gavin Newsom issued Executive Order N-43-20:

https://www.gov.ca.gov/wp-content/uploads/2020/04/4.3.20-EO-N-43-20-text.pdf

Excerpting from Page-1, Paragraph-6: “…in the exercise of its enforcement discretion, will not impose penalties for noncompliance with regulatory requirements imposed under the HIPAA Rules…”

If they can get away with this criminal suspension of your Constitutional rights for Telemedicine, you can be sure they won’t stop there. Just like the Chinese Communist Party, these Goons in California are drooling at the thought of tracking EVERYONE – except themselves of course.

I thought that HIPAA was federal law and not subject to state law (albeit also not a Constitutional right).

I suppose that police agencies under state authorization (which include local police) could be told that enforcing federal HIPAA law was a low priority (“using their discretion”).

HIPAA is federal law, and that means it takes precedence when local laws conflict with it.

Will this work on my Nokia 3310?

Ghost phones will be a commodity. Here in Chile they forced everyone to register their IMEI number with the local FCC-like office. Last year, when some Russian hams visited me, they were incredibly mad that they weren’t able to just buy a SIM card and use it in their cellphones, because the phones weren’t registered on the local network whitelist. So I ended up buying a phone for them and registering it in my name so I could call them during the expedition! I think I know where we are heading... I know that there may be some plans to force everyone to register their MAC addresses on telco whitelists here.

I’ve seen this first hand: some apps actively scan the MACs around your cellphone. They know who you sleep with, who you work with, and where you live, and they are aware of your own device’s MAC address, so if they cross-reference social media profiles with MACs, they know who you spend time with. I’m a very lazy social media user and I never use the built-in chat features on platforms, yet one of the platforms seems to know who I spend the most time with, showing me them first when I open the app. Another great example was a probable pregnancy last year: we were talking all day about a probable baby coming, and suddenly, bang, one of our online shopping apps was showing us baby stuff (it’s hearing us). Another thing: I ran an experiment on Google, playing with searches. Google thinks I’m a 50-something male with a PhD and probably 3 kids, married to someone younger, and that I like boating and off-roading. Pretty creepy, huh? Check your ad settings. It’s only a matter of time before the government compiles profiles based on your searches, or corporations or individuals target you for malicious stuff. We live in a world of resentment, hate and envy, and sometimes those things are part of the bureaucracy. Be aware that if you are wealthy, you will be targeted; if you are from a minority, you will be targeted too; if you are poor, you will be targeted...

What are some specific examples of apps which scan for/record the MACs around your phone? Are any of them open source?

You are not alone, rabbit. I just got a burner phone and a stack of prepaid SIMs. I’m going to leave my main phone at home and use this flip phone when I’m out, along with my ghost Glock and my modified Baofeng UV-5R. This is starting to worry me enough to get a burner phone; it might be that some of our secrets aren’t as secret as we thought.

I turned off BT and NFC on my phone and deleted all games and media accounts from it.

Getting rid of gacha games completely was a good decision.

Might pick up a cheap Nokia slider and some burner sims.

I would be curious to see the privacy implications of this protocol evaluated in the context of actual cell phones.

Cell phones typically have WiFi, other bluetooth services, may have other BT devices, and cellular radios.

What privacy problems does all the rest of this hardware add to the contact tracing context?

E.g., how do you prevent WiFi MACs, other BT service advertisements, paired BT devices, etc., from leaking identification data?

Yes, you can. But then you won’t know when you may have been infected, and so you’ll risk taking the virus to your at-risk friends and relatives. This is a tool for our benefit.

The app will not tell you that you were infected. It will only tell you that you might have been near an infected person, and it cannot tell you for sure that you haven’t been near such a person. By the time you are warned that 3 (or 7) days ago you passed near an infected person, you have already kissed your relatives goodnight 3 (or 7) times, not to mention wherever you have spread the virus around (staircases, shops, etc.).

Hogwash; it’s totally unproven. They know the solution, it involves more government spending on testing and healthcare. The contact tracing apps aren’t meant to be a viable solution, they’re a cop-out and have ulterior motives.

This has potential for abuse. It needs to have a plan to be retired and mothballed or scrapped as soon as the pandemic is over, rather than be allowed to become something permanent.

How will you convince yourself the plan is actually implemented and the infrastructure is actually decommissioned?

True. I guess it’s another reason to consider BT and NFC the security risks they are.

A lot of federal government agency shills all over the comments, I thought people in this community were capable of free thought.

While contact tracing is a very useful task, it is only one, and probably not even the most important one. The cell phone providers need to implement some other capabilities.

Another thing cell phones could do is identify LOCATIONS that are hotspots (by cross-correlating the travels of people and seeing what LOCATIONS they have in common), and encourage refinement of, say, some businesses’ local distancing and cleaning protocols. The improvement of these locations would be visible over time, and, by looking at the different measures implemented by different locations, the cost-benefit of those changes could be measured.
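A minimal sketch of the simplest form of that cross-correlation: count how many positive cases visited each location and flag the locations shared by several of them. The `visits` data, the location IDs, and the `min_cases` threshold are hypothetical. Note that this needs location histories, which the Bluetooth protocol discussed here deliberately does not collect.

```python
from collections import Counter

# Hypothetical visit logs: positive case -> set of location IDs visited
# during the infectious window.
visits = {
    "case_a": {"grocery_1", "gym_2", "cafe_3"},
    "case_b": {"grocery_1", "office_4"},
    "case_c": {"grocery_1", "cafe_3"},
    "case_d": {"park_5"},
}


def hotspots(visits: dict[str, set[str]], min_cases: int = 2) -> list[tuple[str, int]]:
    """Return locations visited by at least `min_cases` cases, most common first."""
    counts = Counter(loc for locs in visits.values() for loc in locs)
    return [(loc, n) for loc, n in counts.most_common() if n >= min_cases]


print(hotspots(visits))  # [('grocery_1', 3), ('cafe_3', 2)]
```

Tracking these counts week over week is one way the “improvement over time” the comment mentions could be measured, though raw counts would need normalizing by how busy each location is.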

Phones could also identify, real time, when a person is close to someone who has themselves had many contacts (and is potentially a super-spreader). This measure would encourage everyone to use better distancing, and avoid “spreaders”.

Using Bayesian inference, cell phones could PREDICT who is likely to become positive. Predictions would enable focused testing, greatly reducing the need for the billions of tests that would otherwise be needed. The more data types available to this analysis tool, the greater its “power” in teasing out the highest correlations. For example, if some of the cell phone owners’ temperatures were available, this proxy information would dramatically increase the selectivity of a prediction, even if only a small fraction of cell phone users actually made the data available. Similarly, if the occupation of the cell phone owner were available, its correlations would be found automatically, increasing the specificity of testing recommendations for the entire database. [It may well be that cell phone owners who were near a subway driver, who himself was near persons who had a temperature, would have significantly higher susceptibility to COVID.]
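A minimal sketch of the kind of Bayesian update the comment has in mind, using Bayes’ rule in odds form and assuming the signals are independent (the naive-Bayes assumption). The function name, the 1% prior, and the likelihood ratios (5 for an exposure alert, 4 for a fever) are made up for illustration; they are not estimates from any study.

```python
def posterior_probability(prior: float, likelihood_ratios: list[float]) -> float:
    """Update a prior probability with independent evidence via Bayes' rule in odds form.

    Each likelihood ratio is P(evidence | infected) / P(evidence | not infected).
    """
    odds = prior / (1.0 - prior)
    for lr in likelihood_ratios:
        odds *= lr  # independent evidence multiplies the odds
    return odds / (1.0 + odds)


# Hypothetical inputs: 1% baseline, exposure alert (LR 5), fever (LR 4).
p = posterior_probability(0.01, [5.0, 4.0])
print(round(p, 3))  # 0.168
```

Ranking people by this posterior and testing the top of the list is the “focused testing” idea in its simplest form; real signals are rarely independent, so the multiplied odds would overstate confidence.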

Contact tracing is essentially after the fact. Because the incubation period is 12 days, a newly diagnosed patient may have been near 100 persons (8 per day). And each of those persons may have been near 100 people, all of which makes a huge list to contact. And by then, some of those people may have been infected. This simplified contact method won’t work, especially in the case of COVID, where many aren’t diagnosed; in any case, the delay from infection to diagnosis is long enough that the infection numbers will diverge.
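A quick check of the fan-out arithmetic in this comment, using the commenter’s inputs of 12 days and 8 contacts per day (the function name is ours). It ignores overlap between contact lists, so it is an upper bound:

```python
def contacts_to_trace(days: int, contacts_per_day: int, hops: int) -> list[int]:
    """People to notify at each hop, ignoring overlap between contact lists."""
    per_person = days * contacts_per_day
    return [per_person ** h for h in range(1, hops + 1)]


print(contacts_to_trace(12, 8, 2))  # [96, 9216]
```

That is roughly 100 people at the first hop and 10,000 at the second, matching the comment’s rounded figures.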

More sophisticated means are necessary.

Today, merely doing a test tells us only whether the patient actually has COVID at that moment. It gives us no information on how to focus our resources, since there is no automatic way to incrementally gather predictive information.

Data mining (using anonymized cell phone data) could solve the biggest problem we have in the post-shelter-in-place world… figuring out the cost-benefit relationships between taking selective preventative measures and limiting the spread of the disease. The more information available about each (anonymized) user, the greater predictive power we can use to limit the number of tests required, and the faster we can learn what levers make the most difference in curbing the disease at the lowest economic cost.

“The more information available about each (anonymized) user, the greater predictive power we can use to limit the number of tests required, and the faster we can learn what levers make the most difference in curbing the disease at the lowest economic cost.”

The faster they become DE-anonymized…

Lol the concept of data that is both useful and reasonably anonymized is a total oxymoron. The word itself is a PR lie. This will be a very predictable nightmare and we should all finally fucking know better than to fall for this by now. I can’t believe any disaster will immediately cause idiots everywhere to fall for this trash.

I love the way A&G can keep a completely straight face while they pretend they aren’t doing this all the time anyway. Luckily I exfiltrated some code:

```
if (!Pandemic) {
    track everyone, everywhere; Share Data with all the scumbags; lie that you can't do it;
} else if (GovtPayment < 1e9) {
    track everyone, everywhere; Share Data with all the scumbags; lie but pretend you might be able to with some incentives;
} else {
    track everyone, everywhere; Share Data with all the scumbags, and the health department; lie about the money;
}
```

As usual, big tech proposes complicated solutions that the general public can not understand.

This is *terrifying*.

The people (especially in this thread) that look at this as an innocuous “tech demo” are utterly hopeless. WHY do people trust the worst offenders in our modern age with something so Orwellian and *creepy*?

Nineteen Eighty-Four was a *warning*… not an instruction manual.

Rolling keys but broadcasting them from the same MAC address seems contradictory.

For sure all of this is going to give a boost to alternative mobile OSes like Ubuntu Touch. Already ordered my PinePhone and planning to join the dev team asap.

the elephant in the room is people showing just how well they’ve already infected each other with the idea that smartphone apps are solutions …but that’s my obsolete opinion, feel free to ignore me

Amen, apps never solved jack shit. It’s just a big pile of overpriced tulip bulbs.

Hmm…an app that can track you and figure out everyone you contacted. No room for misuse there right? I am not comfortable with this.

It’s perfectly understandable not to be comfortable with this and it *IS* important to challenge the idea all along the way and raise questions about it, as there certainly is room for misuse.

Nevertheless, we need to stay minimally rational and clear headed.

Unfortunately you don’t really seem to have understood much of what is described here.

Or did you simply not read it at all?

The idea is sending around a bunch of unique numbers to other phones around, and receiving numbers from them in return, which will be stored on your phone.

Your phone will also regularly receive lists of keys related to persons who are known to have tested positive for the virus.

Your phone will then use this to check its stored list of the numbers it received over the last relevant period (whatever it is), and alert you if there’s a match.

How is this ‘tracking you’ or ‘figuring out everyone you contacted’?
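The exchange-and-match flow described in this comment can be sketched in a few lines. This is a simplified model, not the Apple/Google implementation: the per-interval identifier is derived with HMAC-SHA256 over a `CT-RPI` label and a 10-minute interval number, roughly following our reading of the April 2020 v1.0 cryptography draft, and `rolling_id`, `exposed`, and the key sizes here are assumptions. What it illustrates is that the comparison runs on the receiving phone; the server only ever sees the keys that positive users choose to upload.

```python
import hashlib
import hmac
import secrets

INTERVALS_PER_DAY = 144  # 10-minute slots in a day


def rolling_id(daily_key: bytes, interval: int) -> bytes:
    """Derive the 16-byte identifier broadcast during one interval (simplified)."""
    msg = b"CT-RPI" + bytes([interval])
    return hmac.new(daily_key, msg, hashlib.sha256).digest()[:16]


def exposed(heard: set[bytes], published_daily_keys: list[bytes]) -> bool:
    """Runs on the phone: re-derive each published key's identifiers and look for a match."""
    for dk in published_daily_keys:
        for interval in range(INTERVALS_PER_DAY):
            if rolling_id(dk, interval) in heard:
                return True
    return False


# Alice's phone broadcasts; Bob's phone stores what it hears nearby.
alice_key = secrets.token_bytes(16)
bob_heard = {rolling_id(alice_key, 57), secrets.token_bytes(16)}

# Later Alice tests positive and uploads only her daily key.
print(exposed(bob_heard, [alice_key]))  # True
```

Because the identifiers change every interval and look random without the daily key, a passive listener cannot link them to each other until (and unless) that key is published.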

You’re incredibly naive to believe that a.) this explanation of implementation is completely honest and b.) that there are no holes or exploits possible, perhaps already known by the creators themselves and they intend to use them immediately. There is no such thing as anonymized data at this scale, and these big data companies have everything they need to connect the dots and break their own security. You should know better.

You say we should stay rational and clear headed. I bet you really like the term “FUD.” but sometimes fear and doubt are invaluable and shouldn’t be excused and justified away like you do. None of this passes any kind of sniff test. It’s so radioactive it glows in the fucking dark.

Great article! The news tells us that the coronavirus epidemic is still going strong and we need help to alleviate the problem. I believe that the Contact Tracing (CT) system could help contain the spread of the virus.

I have a design, using the phone area code, that could improve CT performance. If using the phone area code in the CT system has already been considered and rejected, please ignore what follows.

Consider the following scenario (it assumes you are familiar with the CT).

There are around 200 million cell phones in the USA; assume that 10% are part of the CT program. A few days ago, there were around 36,000 new cases in a single day; assume that 10% of those cases reported their “Diagnosis Keys” (DK) to the Centralized Server (CS).

That day, the CS was receiving, on average, 150 DKs per hour. Due to privacy concerns, the CS has “almost” no information about the phones in the CT system: to inform the “phones” that a new DK has been received and needs “checking”, either the CS “sends” the info to all phones or each phone “asks” for (downloads) the info.
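The scenario’s rates, checked with integer arithmetic (the 200 million phones, 36,000 daily cases, and both 10% participation rates are the commenter’s assumptions):

```python
phones = 200_000_000
participating = phones // 10        # 10% in the CT program: 20 million phones
daily_cases = 36_000
keys_per_day = daily_cases // 10    # 10% of cases upload: 3,600 DKs per day

per_hour = keys_per_day / 24        # 150.0 DKs per hour
per_minute = per_hour / 60          # 2.5 DKs per minute
print(participating, keys_per_day, per_hour, per_minute)  # 20000000 3600 150.0 2.5
```

Without any partitioning, every one of the 20 million participating phones would have to check all 3,600 of those keys each day.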

It seems that in the current CT design, each user must download the DKs for checking. There is a performance problem: a phone will be checking DKs that are not even remotely close to its physical area. A phone in Florida will be checking DKs created by phones all over the country. This would be OK for people who have many contacts with people who “travel” outside their physical areas, such as at airports, on trains, etc., but for most people the contacts are “mostly” local.

In the above scenario, each of the 20 million phones would be checking 3,600 DKs a day, or 150 every hour, or 2.5 DKs per minute. I believe there is an easy way to improve the performance: let’s use the phone area codes as follows:

After the RPI has been created and encrypted with the Daily Tracing Key (DTK), the app will get the phone area code and concatenate it (2 bytes) to the encrypted RPI; let’s call the result the ERPI. The phone now “beacons” the ERPI, and nearby phones will “capture” it and keep it on their ERPI list for 14 days.

When an “infected phone” decides to share its info with the CS, it creates an “Area Code” list with the unique area codes obtained from its ERPI list (the first 2 bytes of each entry) and uploads the Diagnosis Keys list and this new Area Code list to the CS.

The CS upon receiving the info, assigns an identifier to the uploaded Diagnosis Keys list and “SENDS” a “signal” to all the phones in the Area Code list and deletes the Area Code list.

The “signal” could be a message with the Diagnosis Keys list identifier or the Diagnosis Keys list itself (very short: only 14 entries).

The phones, upon receiving the signal from the CS, get the Diagnosis Keys list and process it in a similar way to the current design (ignoring the area code prefix in the ERPI list when checking the encryption).
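A minimal sketch of the area-code steps above, assuming a 2-byte big-endian prefix (enough for any 3-digit US area code) and assuming the CS can reach phones through per-area-code subscription lists. `make_erpi`, `split_erpi`, `area_code_list`, `route_signal`, and the sample phone names are all hypothetical:

```python
import struct


def make_erpi(area_code: int, rpi: bytes) -> bytes:
    """Prefix the 16-byte RPI with a 2-byte big-endian area code."""
    return struct.pack(">H", area_code) + rpi


def split_erpi(erpi: bytes) -> tuple[int, bytes]:
    """Recover (area_code, rpi); matching uses only the RPI part."""
    (area_code,) = struct.unpack(">H", erpi[:2])
    return area_code, erpi[2:]


def area_code_list(erpi_list: list[bytes]) -> set[int]:
    """Infected phone: unique area codes it heard, uploaded with its Diagnosis Keys."""
    return {split_erpi(e)[0] for e in erpi_list}


def route_signal(area_codes: set[int], subscribers: dict[int, list[str]]) -> list[str]:
    """CS: phones to signal about a new Diagnosis Keys list."""
    return sorted(p for code in area_codes for p in subscribers.get(code, []))


heard = [make_erpi(305, b"\x00" * 16), make_erpi(305, b"\x01" * 16), make_erpi(212, b"\x02" * 16)]
codes = area_code_list(heard)
subscribers = {305: ["phone_miami"], 212: ["phone_nyc"], 415: ["phone_sf"]}
print(route_signal(codes, subscribers))  # ['phone_miami', 'phone_nyc']
```

As the cons below note, the prefix is broadcast in the clear, so anyone listening can read a phone’s area code.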

Pros: Each phone will process far fewer Diagnosis Keys, and the CS will serve fewer Diagnosis Key downloads, improving performance for both the phones and the CS.

Easy implementation

Cons: The Area Code is made public.

Again, if this concept of using the area code has already been studied and rejected, I apologize for wasting your time; if not, please let me know whether this area code idea could improve the CT. This is a quick draft, so please ignore typos.

Thanks.

I haven’t seen any of the details about how the diagnosis servers are going to work, so I have no clue. This is the kind of nitty-gritty that, to the best of my knowledge, is still TBD.

In the US, there are nearly a million known cases. If 10% of them use the app, that’s 100k. If I remember right, the daily keys are 16 B each, so that’s 1.6 MB of data per day. Is that a lot or a little? I’ve seen animated GIFs that weigh 2 MB easily, and this is obviously a few orders of magnitude more important.

I don’t know that any location info has to be more granular than country, and I also know a ton of people who kept their old area codes as they moved around the country for work, etc.

But the diagnosis servers could ask you which state you’re in, on a voluntary basis, if this ends up being an issue. That might be easier?

The Daily Tracing keys are 32 bytes long, so the Diagnosis List is 448 bytes long (for 14 days). In your example, 100,000 infected persons would upload 44.8 MB of daily data!

Now, the worst part: we have about 200 million phones in the US. If only 10% of them are in the Contact Tracing program, 20 million phones will be downloading the Diagnosis Keys lists (448 bytes each).
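The two comments disagree on key size (16 vs. 32 bytes) and on whether one key or 14 are uploaded per person, so this sketch of the bandwidth arithmetic takes both as parameters; the function name is hypothetical:

```python
def daily_upload_bytes(uploaders: int, key_bytes: int, days: int = 14) -> int:
    """Bytes of Diagnosis Keys published per day if each uploader sends `days` keys."""
    return uploaders * key_bytes * days


for key_bytes in (16, 32):
    total = daily_upload_bytes(100_000, key_bytes)
    print(f"{key_bytes}-byte keys: {total / 1e6:.1f} MB per day")
# 16-byte keys: 22.4 MB per day
# 32-byte keys: 44.8 MB per day
```

With one 16-byte key per person (`days=1`), the same function gives the 1.6 MB figure from the earlier comment. Under the current design, each participating phone would download the whole daily list, which is the cost the area-code proposal tries to cut.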

By using the area code as I suggested, each phone (person) would download only the Diagnosis Keys lists from “known areas” (area codes). By the way, it does not matter if people move around and keep their old area codes.

Also, if we include the phone country code too, it would make life easier for parts of the world where countries are close together, like Europe.

To summarize, implementing area codes in the CT program is doable at very little cost, and it would improve performance. If needed, I could explain it in more detail.