Look, I’ve been there too. First the project just prints debug information for a human in nice descriptive strings that are easy to understand. Then some tool needs to log a sensor value so the simple debug messages gain structure. Now your debug messages {{look like : this}}. This is great until a second sensor is added that uses floats instead of ints. Now there are sprinklings of even more magic characters between the curly braces. A couple days later and things are starting to look Turing complete. At some point you look up and realize, “I need a messaging serialization strategy”. Well you’ve come to the right place!

Message Serialization?

Message serialization goes by a variety of names like “marshalling” or “packing” but all fall under the umbrella of declaring the structure by which messages are assembled. Message serialization is the way that data in a computer’s memory gets converted to a form in which it can be communicated, in the same way that language translates human thoughts into a form which can be shared. A thought is serialized into a message which can be sent, then deserialized when received to turn it back into a thought. Very philosophically pleasing.


Serialization is useful in many places. It’s possible to write a raw data structure onto disk to save it, but if the programmer wants that data to be easily readable by other languages and operating systems in the future, they may want to serialize it in some way to give it a consistent formal structure. Passing messages between threads or processes probably involves some manner of serialization, though perhaps a more limited one. Certainly an obvious application is sending messages between computers over a network or any other medium.

Let’s back out of the philosophical rabbit hole and define some constraints. (This is Hackaday after all, not Treatise-a-Day.) For the purposes of this post we’re interested in a specific use case: exchanging messages with a microcontroller. Given how easy it is to connect a microcontroller to anything from a serial bus to the Internet, the next step is giving the messages to be sent some shape.

What to Look For in a Serialization Scheme

The endless supply of “best!”, “fastest!”, “smallest!”, “cheapest!” options can lead to a bit of decision paralysis. There are many parameters which might affect your selection of one serialization format versus another, but a few are consistently important.

The first question to answer is: schemaful or schemaless? Both strategies specify the format of your data; that’s the point of message serialization after all. But what goes inside each message can be structured differently. A schemaful serialization scheme specifies the structure – and in some ways the content – of your data as part of the protocol. In a schemaful protocol a SensorReading message always has the same set of fields, say, SensorID (uint32_t), Timestamp (uint32_t), and Reading (float). The protocol specifies exactly how these fields are encoded into bytes, as well as their existence and type. If your firmware receives a SensorReading, it knows exactly what shape and size the contents will be.
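To make that concrete, here is a minimal sketch of what a hand-rolled schemaful encoding of that SensorReading could look like in C. The 12-byte little-endian layout, the function names, and the assumption that float is a 32-bit IEEE 754 value are all mine, chosen for illustration rather than taken from any particular protocol.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical schema: every SensorReading has exactly these three fields. */
typedef struct {
    uint32_t sensor_id;
    uint32_t timestamp;
    float    reading;
} SensorReading;

/* Assumed wire layout: three 32-bit little-endian words, 12 bytes total. */
#define SENSOR_READING_WIRE_SIZE 12u

/* Write a 32-bit value least-significant byte first. */
static void put_u32_le(uint8_t *out, uint32_t v)
{
    out[0] = (uint8_t)(v);
    out[1] = (uint8_t)(v >> 8);
    out[2] = (uint8_t)(v >> 16);
    out[3] = (uint8_t)(v >> 24);
}

/* Read a 32-bit value stored least-significant byte first. */
static uint32_t get_u32_le(const uint8_t *in)
{
    return (uint32_t)in[0] | ((uint32_t)in[1] << 8) |
           ((uint32_t)in[2] << 16) | ((uint32_t)in[3] << 24);
}

/* Returns the number of bytes written, or 0 if the buffer is too small. */
size_t sensor_reading_encode(const SensorReading *msg, uint8_t *buf, size_t cap)
{
    uint32_t bits;

    if (cap < SENSOR_READING_WIRE_SIZE) {
        return 0;
    }
    /* Copy the float's bit pattern; assumes a 32-bit IEEE 754 float. */
    memcpy(&bits, &msg->reading, sizeof bits);
    put_u32_le(buf, msg->sensor_id);
    put_u32_le(buf + 4, msg->timestamp);
    put_u32_le(buf + 8, bits);
    return SENSOR_READING_WIRE_SIZE;
}

/* Returns 1 on success, 0 if the input is not exactly one message long. */
int sensor_reading_decode(const uint8_t *buf, size_t len, SensorReading *msg)
{
    uint32_t bits;

    if (len != SENSOR_READING_WIRE_SIZE) {
        return 0;
    }
    msg->sensor_id = get_u32_le(buf);
    msg->timestamp = get_u32_le(buf + 4);
    bits = get_u32_le(buf + 8);
    memcpy(&msg->reading, &bits, sizeof bits);
    return 1;
}
```

Because the schema fixes the size and order of every field, the decoder needs only a single length check before reading each value from a known offset. Writing the bytes out explicitly, instead of casting the struct, keeps the result independent of the host’s endianness and struct padding.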

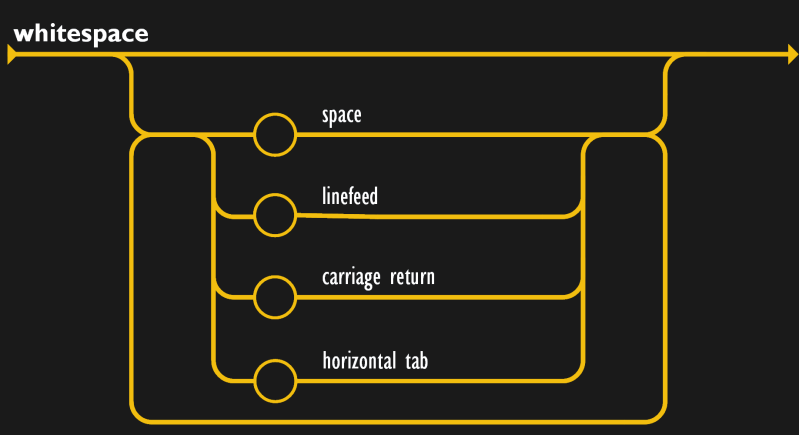

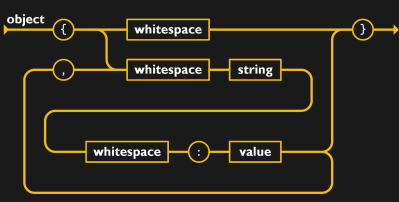

Given that, describing a schemaless protocol is easy: it places no constraints on a message’s contents at all! JSON is perhaps the most prevalent example but there are certainly many others. The data is still encoded in a predefined way, but the exact contents of each message are not specified ahead of time. In such a scheme there are no predefined contents for our SensorReading message. Maybe it has a single float? Maybe ten integers? The receiver needs to check if each field is present and attempt to decode it to get at the contents.

Byproducts of Schema and Non Schema

“But wait!” you ask, “don’t those two methods work the same way?”. Well, yes, sort of. In some ways it’s more of a formalization of the contractual agreement between participants. Obviously to decode a schemaful message you must attempt to decode each field individually, just like in a schemaless system. But if you assume the format is specified ahead of time then other decode strategies like casting directly into a data structure, or code generating an encoder and decoder, may become available.


There are other byproducts as well. Often a schemaful solution will allow the developer to specify the protocol separately from the implementation, then use a code generator to produce the serialization and deserialization functions in whatever language is needed. This may produce more efficient purpose-built code, but more importantly it makes it clear to the developer what is being communicated and how.

If structure isn’t your thing, then the flexibility of a schemaless protocol might be in order. If incoming data is to be treated as a whitelist, with an application only accepting exactly the fields it’s looking for, then the overhead of authoring and maintaining a schema may be more work than is warranted. Just make sure everyone is on the same page about what is sent and how.

When choosing libraries for microcontrollers, the other constraint to be aware of is what type of memory allocation is required. Sometimes there is a preference to avoid the potential hassles of dynamic allocation altogether, which can constrain choices significantly. Requiring static allocation may force the developer to contort their code to deal with variable length messages, or impose constraints like a fixed maximum size. But more than forcing a particular serialization scheme, the memory management requirements constrain which libraries are available to use.

Let’s take a moment for an aside about libraries. It’s obviously possible to hand craft both the protocol and the library to decode it, avoiding most of the concerns mentioned above and producing exactly the code needed for any constraints. Sometimes this makes sense! But if you expect to extend the schema more than once or twice, and need it to work with multiple languages or systems, then a standard serialization protocol and off the shelf libraries are the way to go. A common schema will give many options for languages and tooling across operating systems and platforms, all of which can literally speak the same language. So you can choose the library with a small memory footprint and static allocation for the tiny microcontroller, and the big dynamic fully featured one for the backend.

Favorite Serialization Schemes and Libraries

Here are some serialization schemes I’ve happily used in the past which I think are worth considering. Note that these recommendations are based on work I’ve done with microcontrollers, so the libraries mentioned are written in pure C for maximum compatibility and support static allocation.

JSON

JSON has become a sort of lingua franca of the API-driven Internet. Originally used as the serialization format for communicating the contents of JavaScript objects it now appears more or less everywhere, just like JavaScript. JSON is encoded to human readable and writable text, making it easy to inspect or edit in a pinch. It is schemaless and fundamentally allows storage of objects and arrays of objects which consist of typed key value pairs. If you can speak JSON, you can communicate with just about anything.

A side effect of JSON’s human-friendliness is a reliance on strings, which can make it a bit of a pain to deal with in C, especially when avoiding the use of dynamic allocation. One strategy to cope with this is a heavy reliance on flat buffers to hold the strings, and pointers to point in at various objects and fields without allocating memory for them. I’ve happily used jsmn to handle this when parsing JSON.

Jsmn is simple enough to be completely contained in a single header file and is a very, very thin wrapper around the pointer-based technique mentioned above. It validates that JSON objects are properly formatted, and allows the developer to get pointers to the various keys and values contained within. It’s up to the developer to figure out how to interpret the strings therein, though it gives you their type. It’s a little like strtok on steroids.
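To illustrate the flat-buffer-and-pointers idea itself, here is a small self-contained sketch. This is not jsmn’s API: the json_slice type and json_find function are hypothetical names invented for this example, and the code only handles a flat object whose values are strings or bare primitives like numbers. The point is simply that a “parsed” value can be nothing more than a pointer and a length into the original text.

```c
#include <stddef.h>
#include <string.h>

/* A view into the original JSON text: no copy, no allocation. */
typedef struct {
    const char *start;
    size_t      len;
} json_slice;

static int is_ws(char c)
{
    return c == ' ' || c == '\t' || c == '\n' || c == '\r';
}

static const char *skip_ws(const char *p, const char *end)
{
    while (p < end && is_ws(*p)) {
        p++;
    }
    return p;
}

/* p points at an opening quote; returns the closing quote or NULL. */
static const char *scan_string(const char *p, const char *end)
{
    p++; /* step past the opening quote */
    while (p < end) {
        if (*p == '\\' && p + 1 < end) {
            p += 2; /* skip the escaped character */
            continue;
        }
        if (*p == '"') {
            return p;
        }
        p++;
    }
    return NULL;
}

/* Look up `key` in a flat object such as {"id": 7, "name": "temp"}.
 * Returns 1 and points *out at the value text inside `js` on success,
 * or 0 if the key is missing or the text is not in the expected form. */
int json_find(const char *js, size_t len, const char *key, json_slice *out)
{
    const char *end = js + len;
    const char *p = skip_ws(js, end);
    size_t key_len = strlen(key);

    if (p >= end || *p != '{') {
        return 0;
    }
    p++;

    for (;;) {
        const char *k_start, *k_end, *v_start;
        size_t v_len;

        p = skip_ws(p, end);
        if (p >= end || *p != '"') {
            return 0;
        }
        k_start = p + 1;
        k_end = scan_string(p, end);
        if (k_end == NULL) {
            return 0;
        }
        p = skip_ws(k_end + 1, end);
        if (p >= end || *p != ':') {
            return 0;
        }
        p = skip_ws(p + 1, end);
        if (p >= end) {
            return 0;
        }

        if (*p == '"') {
            /* String value: the slice excludes the surrounding quotes. */
            const char *q = scan_string(p, end);
            if (q == NULL) {
                return 0;
            }
            v_start = p + 1;
            v_len = (size_t)(q - v_start);
            p = q + 1;
        } else {
            /* Primitive value: runs until a delimiter or whitespace. */
            v_start = p;
            while (p < end && *p != ',' && *p != '}' && !is_ws(*p)) {
                p++;
            }
            v_len = (size_t)(p - v_start);
            if (v_len == 0) {
                return 0;
            }
        }

        if ((size_t)(k_end - k_start) == key_len &&
            memcmp(k_start, key, key_len) == 0) {
            out->start = v_start;
            out->len = v_len;
            return 1;
        }

        p = skip_ws(p, end);
        if (p >= end || *p != ',') {
            return 0; /* reached '}' (key not found) or malformed input */
        }
        p++;
    }
}
```

The caller still has to interpret the slice, for instance by converting the digits to a number. A real tokenizer such as jsmn additionally handles nesting and reports each token’s type, which this sketch leaves out.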

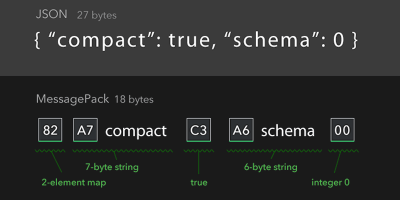

MessagePack/msgpack

MessagePack is an interesting middle ground between human friendly JSON and a maximally efficient binary packed protocol. The homepage literally bills it as “It’s like JSON. But fast and small.” (Ouch, sorry JSON.) Like JSON, it’s schemaless, and is fundamentally composed of key/value pairs. Unlike JSON, it isn’t designed to be human readable, and the typing feels a little more explicit. See the figure below for a comparison of one message in JSON and MessagePack. It pretty much sells itself. MessagePack is fairly common, so finding libraries to read and write it is easy. I’ve used it in Rust and C, but there are at least one or two options for most major and many minor languages and platforms: the project claims support for at least 50. If you’re interested in a little more detail, [Al Williams] wrote about it recently.

When speaking MessagePack from a microcontroller, I’ve used CWPack. Documentation can be a little thin but the code is easy to trace through and is broken down very nicely into small functional blocks. In fact, that’s my favorite feature. CWPack is very easy to understand and use. Serializing and deserializing a message is a breeze with small, self contained functions. The biggest thing to watch out for is that it can sometimes be more manual than expected. For instance when sending a map, you must encode the start of the map and the number of elements, then for each element separately encode the key name and then the value. Once you get used to the CWPack way of doing things it produces some of the easiest to understand code possible.
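The map-then-key-then-value sequence described above can be sketched without any library at all. The code below is not CWPack; the mp_writer type and the mp_* function names are hypothetical, and it only covers the small “fixmap” and “fixstr” cases plus 32-bit unsigned integers and floats. The format bytes themselves (0x80 for fixmap, 0xA0 for fixstr, 0xCE for uint 32, 0xCA for float 32, all multi-byte values big-endian) come from the MessagePack specification.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Fixed-capacity output buffer: the caller owns the storage. */
typedef struct {
    uint8_t *buf;
    size_t   cap;
    size_t   len;
    int      overflow; /* set once something does not fit */
} mp_writer;

static void mp_put(mp_writer *w, const uint8_t *src, size_t n)
{
    if (w->overflow || n > w->cap - w->len) {
        w->overflow = 1;
        return;
    }
    memcpy(w->buf + w->len, src, n);
    w->len += n;
}

/* fixmap header: 0x80 | pair count, valid for up to 15 pairs. */
void mp_map_header(mp_writer *w, uint8_t pairs)
{
    uint8_t b;
    if (pairs > 15) {
        w->overflow = 1;
        return;
    }
    b = (uint8_t)(0x80u | pairs);
    mp_put(w, &b, 1);
}

/* fixstr: 0xA0 | length, valid for strings up to 31 bytes. */
void mp_str(mp_writer *w, const char *s)
{
    size_t n = strlen(s);
    uint8_t b;
    if (n > 31) {
        w->overflow = 1;
        return;
    }
    b = (uint8_t)(0xA0u | n);
    mp_put(w, &b, 1);
    mp_put(w, (const uint8_t *)s, n);
}

/* uint 32: 0xCE followed by four big-endian bytes. */
void mp_u32(mp_writer *w, uint32_t v)
{
    uint8_t b[5];
    b[0] = 0xCE;
    b[1] = (uint8_t)(v >> 24);
    b[2] = (uint8_t)(v >> 16);
    b[3] = (uint8_t)(v >> 8);
    b[4] = (uint8_t)v;
    mp_put(w, b, sizeof b);
}

/* float 32: 0xCA followed by the big-endian IEEE 754 bit pattern. */
void mp_f32(mp_writer *w, float f)
{
    uint32_t bits;
    uint8_t b[5];
    memcpy(&bits, &f, sizeof bits); /* assumes a 32-bit IEEE 754 float */
    b[0] = 0xCA;
    b[1] = (uint8_t)(bits >> 24);
    b[2] = (uint8_t)(bits >> 16);
    b[3] = (uint8_t)(bits >> 8);
    b[4] = (uint8_t)bits;
    mp_put(w, b, sizeof b);
}

/* Encode {"id": <id>, "value": <value>}; returns bytes used, 0 on overflow. */
size_t encode_reading(uint8_t *buf, size_t cap, uint32_t id, float value)
{
    mp_writer w = { buf, cap, 0, 0 };

    mp_map_header(&w, 2); /* start of map, two key/value pairs follow */
    mp_str(&w, "id");
    mp_u32(&w, id);
    mp_str(&w, "value");
    mp_f32(&w, value);
    return w.overflow ? 0 : w.len;
}
```

For simplicity this always writes integers in the five-byte uint 32 form. That is valid MessagePack, but a full encoder would normally pick the smallest representation that fits the value.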

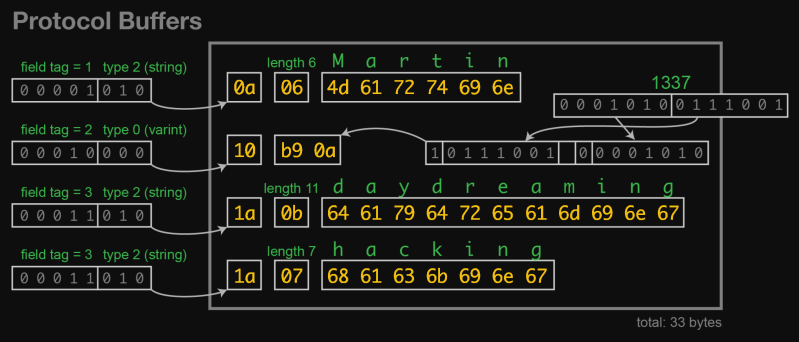

Protocol Buffers/protobuf

Protocol Buffers are a heavy hitter in the schemaful messaging world. Originally built by Google, Protobuf can be, and probably has been, used to encode data for every kind of data link and application imaginable. A Protobuf schema is specified in separate files in a dialect called proto, proto2, or proto3. These define messages, the basic hierarchical unit, and all the various fields and relationships between them. These .proto files are the absolute specification of what can and cannot be consumed by the encoders and decoders that are produced by code generation from the proto specification.

Because your Protobuf is separate from the implementation, referring to a “library” in this context really refers to the code generator used to produce the sources that get compiled into the final application. “protoc” is the generator provided by Google and for proto2 can produce implementations in Java, Python, Objective-C, and C++. The implementation for proto3 adds support for Dart, Go, Ruby, and C#. But that’s just Google’s official tooling, and there are many, many more options available. Remember that Protobuf specifies the bytes on the wire, not the API to interact with individual messages, so each code generator may produce radically different code to interact with the same messages.

All that said, especially for a large project, Protocol Buffers do an excellent job of forcing all participants to agree on exactly what data is sent, and how. For microcontrollers, I typically turn to nanopb as my code generator of choice. The compact size of the generated code and support for static allocation make it easy to integrate into almost any project. Ultimately you hand it structs to encode and buffers to encode into, or vice versa.
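To get a feel for the bytes a Protobuf encoder produces, here is a hand-written sketch of the wire format for a hypothetical message with three fields: sensor_id (uint32, field 1), timestamp (uint32, field 2), and reading (float, field 3). This is not code generated by nanopb or protoc, and the field numbers and function name are assumptions made for the example. Each field is written as a tag byte, (field number << 3) | wire type, followed by either a base-128 varint (wire type 0) or four little-endian bytes (wire type 5).

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Worst case: two (tag + 5-byte varint) fields plus one (tag + 4 bytes). */
#define READING_PROTO_MAX_SIZE 17u

/* Write a base-128 varint; returns the number of bytes written (1 to 5). */
static size_t put_varint(uint8_t *out, uint32_t v)
{
    size_t n = 0;
    while (v >= 0x80u) {
        out[n++] = (uint8_t)(v | 0x80u); /* low 7 bits plus continuation bit */
        v >>= 7;
    }
    out[n++] = (uint8_t)v;
    return n;
}

/* Returns the number of bytes written, or 0 if the buffer could overflow.
 * Field numbers 1, 2 and 3 are hypothetical, chosen for this example. */
size_t reading_to_proto_bytes(uint32_t sensor_id, uint32_t timestamp,
                              float reading, uint8_t *buf, size_t cap)
{
    size_t n = 0;
    uint32_t bits;

    if (cap < READING_PROTO_MAX_SIZE) {
        return 0;
    }

    buf[n++] = (uint8_t)((1u << 3) | 0u); /* field 1, wire type 0 (varint) */
    n += put_varint(buf + n, sensor_id);

    buf[n++] = (uint8_t)((2u << 3) | 0u); /* field 2, wire type 0 (varint) */
    n += put_varint(buf + n, timestamp);

    buf[n++] = (uint8_t)((3u << 3) | 5u); /* field 3, wire type 5 (32-bit) */
    memcpy(&bits, &reading, sizeof bits); /* assumes a 32-bit IEEE 754 float */
    buf[n++] = (uint8_t)bits;             /* fixed32 is little-endian */
    buf[n++] = (uint8_t)(bits >> 8);
    buf[n++] = (uint8_t)(bits >> 16);
    buf[n++] = (uint8_t)(bits >> 24);

    return n;
}
```

This sketch always writes all three fields, whereas proto3 encoders typically omit fields that hold their default value. In a real project the generated code takes care of all of this, which is a large part of the appeal.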

Go Forth and Encode

No matter your constraints, there is a message serialization format for every need. These are some of those which I have found useful but there are obviously many more options. Have a favorite format or library you use all the time? Chime in in the comments; I’d love to hear what to try next!

I’m becoming a fan of ASN.1 DER. It’s nice to have a variable-length binary format that’s legible in a hex editor.

I wonder how many CVEs were caused by buggy ASN.1 parsers…

Yeah, ASN has a bad name. But just because some parsers were buggy does not mean all of them are. I was actually pretty surprised Google engineers that designed protocol buffers never heard of ASN.1 (can’t find the link now, but they admitted that).

My work involves a growing amount of managed schema message serialisation mechanisms using Avro and versioned schemas stored in a registry. Pretty heavyweight, but finance…

Ha! I knew it! Arrow still exists in secret somewhere!

Protocol Buffers – meh. Supposedly can be light-weight, but typically are not. Data serialization should be done using old and proven tools, and be the minimum required for the job at hand.

“This is Hackaday afterall, not Treatise-a-Day.” So this means HAD is hereby relegated to shallow coverage?

For binary packing, stream decoding and efficiency constrained resources, I’ve used Compact Binary Object Representation CBOR. https://github.com/jimsch/cn-cbor

Specifically this one.

We used it as a firmware update mechanism and it was wonderful.

ASN.1 PER?

I think CBOR (https://cbor.io) is an interesting option for embedded applications. It’s an IETF standard, has a signing extension (COSE), a schema extension (CDDL), but can also be used in a schema-less manner.

I upvote “Treatise-a-day”

There’s also https://github.com/X-Ryl669/JSON for a very compact, SAX like JSON parser (that is, parsing JSON on the fly without having to store all the document in memory, only a small buffer is required), that does not allocate any memory and compiles to less than 2.5kB on X86 (and probably a lot less on transilica). It’s like JSMM but on steroid!

Doing embedded systems design this was an ongoing battle. Last year I decided to create my own solution. You define your protocol in YAML, and it generates the C code for the service which includes message handlers. You just feed it bytes and it handles the dispatching similar to gsoap. It is made to be pretty compact for embedded systems, but does include the ability to print packets in json for working with cloud based platforms.

There is also a python based command line tool for talking directly to devices with the protocol.

https://github.com/up-rev/PolyPacket

Cool! Were you ultimately happy with your choice to build something yourself?

Ceedy?

It’s an old data storage medium, originally used to store audio files and touted as a replacement for vinyl records and magnetic tape storage. It’s written to and read by lasers. Quite a technology! Not sure how they got into the title of this article though… ;-)

I notice it’s now seedier, I thought it was a pun I wasn’t getting.

Well if they had left it as ceedy it might have appeared to be a swap of the c with the s in serialization. Hinting at cereal and hence the pun with seeds. But as cereal has ea and serial has ia, I think someone has decided the pun was too much of a stretch for people to think about and/or perhaps was just distracting.

Yep, this was more or less it. Was intended as a terrible, terrible pun.

Mission accomplished! I enjoyed pondering it. And I loved the article (which I should have mentioned well before this point) – thank you.

Haha, thanks! Glad at least a few people seem to have picked up on it 🙂

perhaps it meant that we all inevitably cede to the futility of serializing sanely

I have found libbson version of JSON with datatype syntax tags a simple and relatively performant option for passing structured data. It forces one to type-check the object structure during parsing, can handle regular JSON, and is human readable.

Takes away most of the guesswork when you start passing data though various platforms and languages.

Also liked the options Apache’s Xerces offers, but XML syntax usually is not as popular these days.

I dont use cerealizaton as I do paleo/keto. Nor want infinite froot loops in my code.

MessagePack is great! I wish they would hurry up and make it a built-in everywhere. Human readability is nice and all, but debuggers should be able to decode it for you.

Take a look at my C implementation of MessagePack(EL), which also does not require malloc, and is more convenient to unpack by the searching function: https://github.com/dukelec/msgpackel
