All of us who do some programming know that logging is a time-tested way to output messages about the internal state of our code. With varying degrees of adjustable granularity, these messages allow us to keep track of not only the state of the application, but also its overall health. When things do end up going FUBAR, these log messages are usually the first thing we look at as a software equivalent of a Flight Data Recorder in an airplane.

Spending some time and care in not only designing the logging system, but also in deciding what should be logged and at what level, can make our future self appreciate life a lot more. We’re all familiar with the practice of ‘printf-debugging’, where logging is added as part of the usual post-crash autopsy as one tries to figure out what exactly went wrong. It’s one way of doing it, and eventually it works, but we can do much better.

People are lazy, and you’re only going to stick to good logging practices if they are at least as easy if not easier than sprinkling printf() statement throughout the code. Yet at the same time we want to be able to handle things like log levels and newlines without too much extra typing. The most successful logging libraries are built with this

Setting the Context

Not every application calls for the same kind of logging. Consider the context of your project when deciding on the logging that will fit your needs.

Many embedded platforms are highly application-specific. Whether it’s a simple 8-bit MCU inside a washing machine or a powerful SoC-based system, it follows that a microwave’s MCU firmware does not require the same level of logging as a car’s ECU or an industrial PID controller. However, they all benefit from good logging practices, whether those messages are being stored, or only visible when actively debugging the hardware.

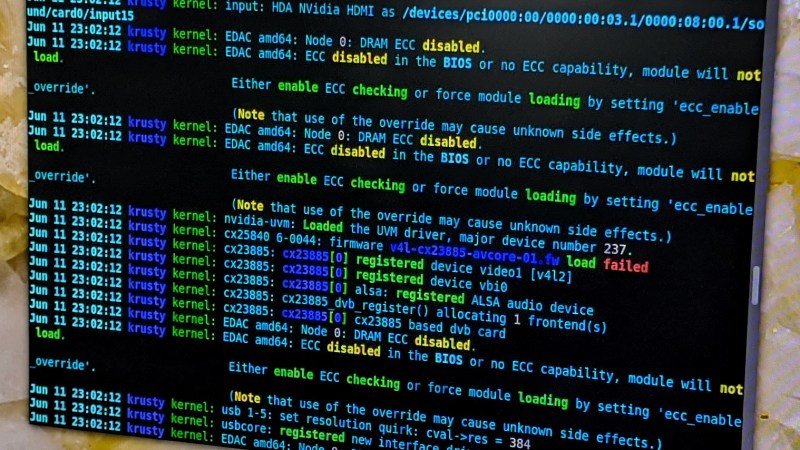

For server applications, logging is a pretty central part of operations, as a system administrator will want to read out the logs from a central location for all services that run on the servers for which they are responsible. This implies that these logs should only contain the information that a system administrator might find useful, which is not the same information developers will be looking for.

Desktop platforms are similar, where on most platforms a central logging service will record the standard output from any running services. For regular applications (i.e. not services), any logging output tends to either be a more ephemeral thing. Either the standard output is displayed in a terminal window, or vanishes into the void without a terminal or other output associated with it. For the sake of this article we will assume that we just have to write to stdout without concerning us with any further details.

As a small aside, mobile platforms are a rather special case when it comes to logging. They are more similar to server platforms, in the sense that every application’s standard output is logged. This way one gets the logging for every single service and application that is running, without having to configure anything. The exact details of how to obtain this log on mobile platforms like Android, iOS, Tizen, or ChromeOS will differ per platform.

Use Log Levels Wisely

Why have log levels, you may ask? Because you want to differentiate between the importance of log messages, possibly even filter just for particular types of messages. Common log levels are:

- Fatal.

- Critical.

- Error.

- Warning.

- Notice.

- Info.

- Debug.

- Trace.

Normally you’d run a service at a log level of at most ‘Info’ level, but if the service is too ‘chatty’ at this log level one might want to bump it down to Notice or even just Warning. The onus is hereby on the developer to properly consider what log level the message they’re about to add to the code should exist at. Is it an informational message? A notice that should be looked at at least once? Or is it a warning that must be addressed?

Obviously, an Error level message is bad news, but we have two more levels beyond that. Here ‘Critical’ level should be used for errors that fall between ‘well, bugger’ and ‘the system just caught on fire’. It follows then that ‘Fatal’ messages involve the service reaching an unrecoverable state, a fatal runtime error, and anything else which might mean that a sysadmin is about to get paged out of bed at 3 AM on a Saturday.

Finally, the ‘Debug’ level is for information that is useful while debugging the code, like values of certain variables and verbose logging of application activity. For truly fine-grained debug output, there is the ‘Trace’ level, which is for tedious information. This is generally used for maximum verbosity logging.

Using Poco::Logger; — A Practical Server-Based Example

Over the years, I have had to add logging to both hobby and commercial projects. Among my favorites was an embedded server project that would run on countless headless systems around the world, with the only feedback being the built-in logging that could be read out with the right software and sent back to HQ. For this I used Poco::Logger from the libPoco library. It takes care of the more tedious stuff, such as herding different logging streams (channels) together and other logistics.

The right logging was essential not just for myself to diagnose problems, but also for colleagues who could use the same logging to figure out where (and why) things had begun to catch on fire by tracking requests and their data. It worked well and and I’ve ended up using the same kind of logging in some of my hobby projects, including NymphRPC, a Remote Procedure Call (RPC) library in C++. The part that I implemented is contained in nymph_logger.h and nymph_logger.cpp. It allows NymphRPC-based applications to write to the log as follows:

NYMPH_LOG_DEBUG("Added new connection with handle: " + NumberFormatter::format(handle));

The NYMPH_LOG_DEBUG () part is a preprocessor macro, which is defined in nymph_logger.h as:

#define NYMPH_LOG_DEBUG(msg) \

if (NymphLogger::priority >= Poco::Message::PRIO_DEBUG) { \

NymphLogger::logger(loggerName).debug(msg, __FILE__, __LINE__);\

}

Here we can see how the line is replaced with an if-statement that checks at which log level the application is supposed to be logging at. The preprocessor statement saves us from typing all of this every single time, while also expanding the __FILE__ and __LINE__ text into the current filename and line in the (unprocessed) source code where the log statement is used. The static NymphLogger::logger() call returns a specific logger, which is an instance of Poco::Logger.

A single NymphLoggerChannel (inherited from Poco::Channel) is provided to Poco::Logger as an output channel. This channel merely passes a formatted string along with the log level to an externally provided function pointer, from where it can be printed to stdout, written to a file, stored in a database, or whatever else is appropriate.

The formatting code:

string msgStr; msgStr = NumberFormatter::format(msg.getPid()); msgStr += "." + NumberFormatter::format(msg.getTid()); msgStr += "\t" + msg.getSource() + "\t"; msgStr += NumberFormatter::format(msg.getSourceLine()) + "\t"; msgStr += msg.getText() + "\t\t- "; msgStr += msg.getSourceFile(); (*loggerFunction)(level, msgStr);

When we then run the NymphRPC test client and server, we can see log output like the following:

6 - 14524.2 NymphSession 130 Read 37 bytes. - src/nymph_session.cpp 6 - 14524.2 NymphMessage 79 Method ID: 2. - src/nymph_message.cpp 6 - 14524.2 NymphMessage 93 Message flags: 0x0 - src/nymph_message.cpp 6 - 14524.2 NymphUtilities 170 NYMPH_TYPE_STRING - src/nymph_utilities.cpp 6 - 14524.2 NymphTypes 306 String value: callbackFunction. - src/nymph_types.cpp 6 - 14524.2 NymphSession 148 Calling method callback for message ID: 0x3 - src/nymph_session.cpp 6 - 14524.2 NymphMethod 92 Calling callback for method: helloCallbackFunction - src/nymph_method.cpp

Looking at the test server’s code we can see that the first number is the log level (6: Debug), with the rest being the previously formatted string. This contains the process ID, the thread in this process which logged the message, the name of the logger, the line in the source, the message, and finally the relative path (from the Makefile) to the source file.

Final thoughts

I have found that having to regularly eat one’s own dogfood and dealing with commentary from colleagues and customers about the logging output from one’s code has been highly motivating to polish the rough spots. The one which this article cannot cover is what is important to log in your code. That is something which you, the dev, either knows already or will find out through bitter experience while digging through a log file.

That said, a hobby project is a good place to get started with trying out different logging approaches. Once you find yourself working on a commercial project, it is definitely too late to get creative. On the positive side, once you figure out how to do logging right in different situations, it can turn weekend-long bug hunting sessions into a brief, 10-minute log-reading-code-tweaking and CI-bothering session on a Friday afternoon.

Big advantage to using a logging library that can log in the background is it can *really* help for applications that need consistent latency and can avoid logging-related performance issues. Printf in a running terminal will block when the screen is full, for instance, and anything writing to a file will inconsistently pause while writing when buffers fill. Those delays can be *much* bigger than you think, too.

Poco’s Async channel handles this correctly since it also drops messages when its queue is full, essentially providing consistent latency to the call.

Well, I’m sold. I’m working on a hobby project for which I have to choose between printing GPIO reads or having a working result within a cycle of a few hundred milliseconds. It doesn’t actually matter for my Mickey Mouse problem, but scaling beyond my attic would present a challenge.

Yeah, turn on all the debugging code and then wonder why you can’t reproduce the customer’s timing problems. I just love it when that happens.

Sending log data over the network? That’s great until you have to diagnose network problems,

Implementing logging is a great way to blow through a whole bunch of your time when you need to pad up your billable hours.

You are absolutely right about the timings. In embedded systems or time-critical locations, such as device driver interrupts, it is not possible to spend a lot of time with logging, because it falsifies the measurement. We ran into this a lot of times. Even a simple string formatting function (ex printf), can take a lot of time specially on smaller MCUs.

In our own operating system, for example, logging takes place in a shared memory and processing takes place later. With a debugging task, which can make all sort of things, most of the time it’s dumping on some sort of interface (serial)

You can also write fancy write functions for this (we do that too), but there are times when only a few bytes can be written at the critical stage.

The measurements are evaluated later, so it doesn’t matter if we see the result in 5 or 25 seconds.

The shared buffer must be large enough to buffer until the logging task process the data. This way logging is not a big deal.

> logging takes place in a shared memory and processing takes place later.

Worked in some timing sensitive embedded systems and I’ve done this.

But you still need to get the information off the system and into something you can use to debug it. If you aren’t reading the memory block with a debugger, you are probably converting it to string. Some of the points in this article become useful at that point even if the libraries and examples are not.

You can get the memory content without any special debugger.

We have a low priority task, which is running occasionally (there is no higher task to run), and that task is dumping the content of the memory raw or in nice formatted string, most of the time to some interface like serial port.

In our case it’s a little bit more complex, we use debug streams, and the low priority trace collecting task scans frequently for new content 1/10th of a second or something like that.

Every debugging stream is a consumer producer ringbuffer, there is no need for synchronization between the producer (debugger) and consumer (trace collector) and they can work async.

This way we can debug even from interrupts without any hassle.

In our OS for device driver debugging and development a device driver most of the time uses 2 debug streams.

One for the device driver task, and one for the interrupt service in the driver, this way nobody blocking the other.

(I did not mention the trivial features like log levels or time stamping, which is crucial for device driver development with cycle accurate resolution)

HAHAHAHA. You haven’t really lived until you made use of the absolute fastest logging methods – writing a byte into RAM before you give up the ghost, on an MCU where the RAM just happens to be external DRAM (that can be accessed by external tools). Which, as everyone knows, is “D” because it forgets its content immediately as soon as you stop refreshing it, and just to make things very clear, after a crash/reset the MCU in question did NOT start refreshing the DRAM until you told it to do so manually, through said external tool.

Except, here’s the kicker: even with the refresh off, DRAM WILL RETAIN ITS CONTENTS (including your “squeal” byte) for quite a few seconds most of the time, long enough to note the crash, manually re-engage DRAM refresh and reading your byte. Manuals certainly don’t tell you about this sort of thing…

If you manage it, a poor man’s breakpoint AKA jump-to-self is a great way to keep the lights on when everything else has fallen apart around you.

Oh, and my paritcular favourite – stash __FILE__ in a static const, then wrap malloc and free in macro that logs a pointer to the aforesaid variable, __LINE__, and the result/argument. A little post processing, and you can hopefully find which use-after-free has sent your Program Counter into hyperspace.

No love for jtag?

You haven’t lived til you’ve used JTAG to debug your new JTAG debugger debugging your final target.

The Google logging library is pretty good for C++ (https://github.com/google/glog). In addition to various logging levels it has things like LOG_EVERY_N, LOG_FIRST_N etc.

Are these better logging practices part of a plan for sustainable forestry?

Plant 3 trees for every one you fell, that’s the best logging practice….. though I dunno if it’s sustainable, by my calculations the entire world is trees by 2109

What’s wrong with that? As long as there is some sky view starlink dish.

Tree density is self-limiting-even if there is no attrition due to deer browsing or insects, larger trees shade out the smaller. Easily seen if you watch the regeneration of a clearcut aspen woodland over time. It goes from really high density small trees spaced less than a foot between, to a couple feet apart after 5 years, to marketable and 10 feet apart after 15-20 years. Competition for sunlight and nutrients…

I’ve been a fan of https://github.com/sharkdp/dbg-macro, a single header logger w/ quite a few options and easy to no-op out by setting a flag (DBG_MACRO_DISABLE)

sadly it’s a C++ header, I wish it was named .hpp instead of .h to make that more clear.

In the C universe I tend to have my own based on the syslog API. The macros can be turned off at compile-time and can be easily modified to hook into a real logging system rather than fprintf(stderr, …).

Once I get past the development / proof-of-concept phase I usually want logs either going to syslog or going into a database. Towards that end, rather than writing a fancy library that provides every option. I write a small stub/shim with 1-3 lines per function that is easy to modify later.

This seems like a very ‘ground level’ article for people new to this.

I was hoping there might be some discussion of techniques to make logging more productive.

One think I’ve messed with in the past is to spit out a CSV list of info I can import into excel to better visualize what is happening. But this is a crappy technique for large systems.

I’ve played a little with some JSON formatting of data to make a log easier to parse without a lot of arcane regexs (the old joke goes that you have a problem you want to solve with regular expressions? Now you have 2 problems). I haven’t taken the idea that far though.

I was thinking I could use something like a schema to keep the data obfuscated unless you have the correct file to decode it. That way, you could have a system that produces extensive debugging info (with the log level configurable at run time) while at least obfuscating the details of the code from reverse engineers.

And being able to consume the string data with tools in a more robust manner allows for the possibility of creating tools to help understand the system state through the lifetime recorded in the log file.

One of the costs are the extra charters needed for the markup. Those extra bytes really hurt a bandwidth constrained interface such as a serial port.

AI based log parsing tools have the potential to make all this moot. That assumes that creating the infrastructure for training the neural net isn’t too bad. I think that would mean having very good unit and system test coverage along with an error injection system to generate logs with enough errors for a DL system to train with.

I spent a *lot* of time getting GStreamer’s logging system built up once upon a time. I don’t know how it compares with more recent systems, but it seems to have done the job for a while now…

Have you guys ever considered using QDebug()??

Quite versatile and one of my favorite methods — but that probly dates me a little bit.

These seem outrageously high level and heavy weight for the given audience. No mention of logging to a circular memory buffer for post crash retrieval via JTAG? How about strategies for IRQ safe logging where you can’t malloc and don’t have the time to format strings, such as emitting a message number and parameters for later formatting? How about emitting state machine state numbers out of whatever spare pins you have going, so they can be picked up with a logic analyser, and then correlated with logs after the fact? Or even hijacking existing protocols for debug, e.g. emitting debug logs via MQTT for developing IoT devices, so you don’t need to add another thick layer of RPC.

Embedded debugging is a whole art unto itself, and given the size of MCUs most people will be using, it’s a shame to see the boring unreliable techniques used for debugging desktops being wheeled out.

+1. Even if “embedded development” tends to be interpreted these days as “writing Python scripts under Armbian and figuring out which /gpio/ file to write to to turn on an LED” we should not pretend that’s all it is supposed to be about. Or supposed to be about at all to be quite frank.

As an aside, if you can get your debug logs appearing on another screen, then this is invaluable :

tail -f mylog.log

Watch the output appear in real time.

Now I’m messing with uControllers, I dream of plugging in a small lcd screen and watching output, but of course as outlined in discussions above, all that io will no doubt skew results vastly.

The other aspect of logging is analysis of logs using a log analysis tool.

Anyone that works in Enterprise Software Development will know logging is very important, and then it will get published to a cluster logging inspection tool like Splunk or Elastic for various use cases (dashboards, alerting, investigation, and so on).

Obviously high level development in Java/Scala/C#/etc is not the target of this site. However the logging practices are relevant in this discussion. I see that horrible multiline msgStr concatenation and wonder ‘why’? You should call your logging library with your message string (with argument placeholders), arguments, level, etc, and that should be built only if the level is met, and all of the logging needs to be Asynchronous outside of the application execution.

But that’s too much for most embedded development, and yes, just stick your trace messages out over a suitably fast serial link, and have the other side do the work.

It’s wrapped in a macro – the expression will probably never have even reached the compiler if the log level filters it.

I am very much if favor of log formats that are machine-readable, especially for products with big install bases that require some sort of fleet management system. However, in my experience you still need a logging format that can be easily read by developers. Otherwise your developers won’t bother logging anything.

JSON is everyone’s go to for a human-readable structured data format, and I’ve seen plenty of logging systems that chose to output log data in JSON format. But frankly, JSON makes for a crappy human interface format. It is visually noisy, and difficult to parse by eye. Furthermore, it has a fiddly syntax owing to its support for complex structured data. In most cases, however, the ability to represent deeply structured data in logging output is unnecessary, as evidenced by the fact that many systems make do with completely unstructured printf-style logging.

Still, the syntax of JSON primitives (numbers, strings, etc.) is relatively easy to parse for both humans and computers. I built a logging system for a high-volume consumer IoT product that borrowed this syntax and the approach was quite successful in practice. We had tools that would automatically reconstruct, and even diagram, the historical behavior of the device from log data, and well as systems that would bulk-analyze data across millions of devices looking for patterns. And yet the logs themselves were easy to interpret by eye as well.

An added benefit of a simple, structured logging system (regardless of formatting style) is the ability to tokenize the data at the source, which is one way to address the issue of output bandwidth. You lose the ability for humans to immediately read the log output, but you drastically reduced the amount of data generated, and often the code size as well. In one system, we had tools that would post-process compiled object files to replace fixed strings (e.g. field names) with numeric ids, while simultaneously constructing an off-line map of id to string values. Other tools would use these maps to reconstitute the text form of the log from the tokenized output. This made it possible to have extensive logging on fairly small systems (~256K code space, ~32K RAM) with limited output bandwidth.