OpenCV is an open source library of computer vision algorithms whose power and flexibility have made many machine vision projects possible. But even with code highly optimized for maximum performance, we always wish for more. That’s why our ears perk up whenever we hear about a hardware-accelerated vision module, and the latest buzz is coming out of the OpenCV AI Kit (OAK) Kickstarter campaign.

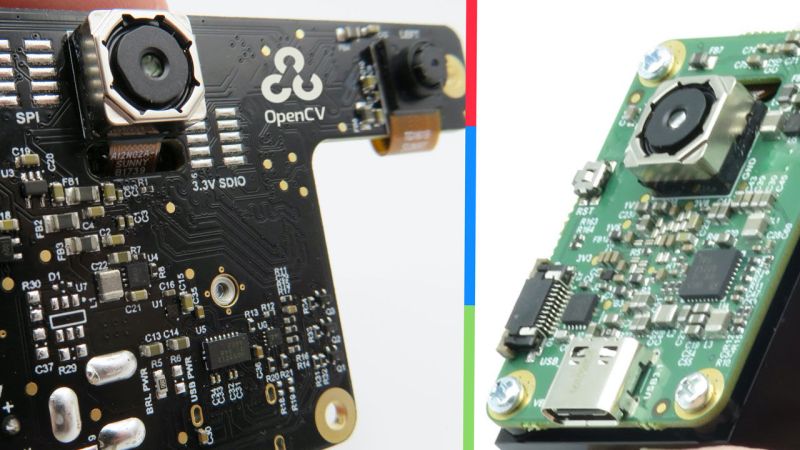

Two vision modules were launched with this campaign: the OAK-1, with a single color camera for two-dimensional vision applications, and the OAK-D, which adds stereo cameras for that third dimension. The onboard brain is a Movidius Myriad X processor which, according to team members who have dug through its datasheet, has been massively underutilized in other products. They believe OAK modules will help the chip fulfill its potential for vision applications, delivering high performance at low power in a small form factor. Reading over the spec sheet, we think it’s fair to call these “Ultimate Myriad X Dev Boards”, but we must concede “OpenCV AI Kit” sounds better. OAK does not provide hardware acceleration for the entire OpenCV library (likely an impossible task), but it does cover the highly demanding subset suited to Myriad X acceleration.

Since the campaign launched a few weeks ago, additional information has been released to help assure backers that this project has real substance. It turns out OAK is an evolution of a project we covered almost exactly one year ago, which became a real product: DepthAI. So at least this is not their first rodeo. It is also encouraging that their invitation to the open hardware community has already borne fruit. Check out this thread discussing OAK for robot vision, where a question was met with an honest “we don’t have expertise there” from the OAK team, and then ArduCam pitched in with their camera module experience to help.

We wish them success with their planned December 2020 delivery. They have already far surpassed their funding goals, they’ve shipped hardware before, and we see a good start to a development community. We look forward to the OAK-1 and OAK-D joining the ranks of other hacking-friendly vision modules like OpenMV, JeVois, StereoPi, and AIY Vision.

You lost me at kickstarter.

Not everyone is willing to back Kickstarter campaigns, and that’s fine; this is exactly why I put the information up top, so people are informed.

We will also have a pre-order store after the Kickstarter campaign ends on Wednesday, with discounted pricing (though not nearly as discounted as the Kickstarter campaign).

It’s also open source, @Joe, so you could buy the components, order the boards, and assemble them yourself, or pay someone to assemble them.

https://github.com/luxonis/depthai-hardware

Did you consider Crowd Supply (I’m not affiliated with them other than having bought one product)? I have little confidence in Kickstarter, but Crowd Supply seems to “curate” more. It doesn’t have as wide an audience, but for this type of project I think it is in line with their market/audience.

Hi Tim,

Great suggestions!

So we actually did do two Crowd Supply campaigns:

DepthAI: https://www.crowdsupply.com/luxonis/depthai

megaAI: https://www.crowdsupply.com/luxonis/megaai

And we’ve actually fulfilled both of them already as well.

And then our third campaign is on KickStarter with OpenCV:

https://www.kickstarter.com/projects/opencv/opencv-ai-kit

We would have used Crowd Supply for the third campaign too, but OpenCV already had many followers on Kickstarter from its previous OpenCV AI Courses campaign:

https://www.kickstarter.com/projects/satyamallick/ai-courses-by-opencvorg/description

So we wanted to stay on the same platform, since there was already such strong backing and interest through Kickstarter (and so many people already had accounts there).

Thoughts?

Thanks,

Brandon

The Myriad X might be underutilized from the perspective of “team members who have dug through its datasheet”, but that datasheet is not available to us ordinary schlubs.

So is it underutilized, or overprotected?

How can you have a development community if the community doesn’t have access to the data sheet?

Brandon from the OpenCV AI Kit team here. We actually aim to solve both the underutilized and the overprotected problems. It’s hard to say which it is, but our mission is to democratize the power of this chip.

So to that end we do all the grunt work to make the chip do all the things it is designed to do, and then make those capabilities flexibly programmable with an open-source ecosystem.

More details on this approach are here:

https://github.com/luxonis/depthai/issues/136

But, long story short, this allows:

– Flexible/modular use of a bunch of accelerated CV/AI capabilities (depth, neural inference, 3D object location, 3D feature location, tracking, H.265/HEVC encoding, etc.).

– MicroPython source code running as nodes on the device. You can write your own code and have it run directly on the Myriad X, with access to SPI, UART, I2C, etc. These outputs can then be fed to other nodes, including neural inference.

– OpenCL support, so you can run whatever CV code you like (within memory constraints: 512MB total) directly accelerated on the SHAVE processors.

More reading on the open-source API is here: https://docs.luxonis.com/api/. And the list of related GitHub repositories is here:

https://docs.luxonis.com/faq/#githubs

And better than a datasheet, there are complete, open-source Altium designs, which you can also buy outright as boards. So you can use them, prototype with them, and then leverage the design files to make your own things or modify them as desired.

So a constraint is that the datasheet is not available for the chip, but we work around this by making the chip do all the things most people would want, documenting that, and making all the bits that interact with the part open source so they are easy to take and modify to your needs. And it’s all MIT-licensed, so it can be integrated into commercial products.

As background on the chip: it is a 24-core asymmetric multiprocessor architecture where each core is connected to every other core via a network-on-chip. Such a structure is extremely powerful, but it also means it takes years of working with the chip before one is proficient. So a lot of the value-add is figuring out how to abstract this crazy power into an easy-to-use solution.

Thoughts?

Brandon from the OpenCV AI Kit team here. It’s hard to say whether the chip is underutilized or overprotected, but either way we plan to solve that problem and democratize the AWESOME power of this chip to solve real-world problems.

So yes, it is a constraint that the community does not have access to the Myriad X data sheet, but what we are building makes it so that is not necessary. We have a system on module with a wealth of information on how to use it, and a huge, OpenCV-backed ecosystem of open-source hardware, software, and AI-training that works with this system on module.

So the (multi-year) effort here has been to discover the core things the market needs and implement them efficiently using the resources in the Myriad X, while allowing them to be flexibly and modularly combined with other functions.

The way we are going about this is summarized here:

https://github.com/luxonis/depthai/issues/136

In short, here’s what this allows:

– Hardware-accelerated functionality like disparity depth, 3D object detection, neural inference (OpenVINO compatible), H.265 (HEVC) encoding, feature tracking/optical flow, etc., provided as drag-and-drop nodes for building pipelines of CV/AI processing that run entirely on the Myriad X.

– MicroPython, so you can write your own rules/logic/interface protocols and have them run directly on the Myriad X as nodes in the pipeline. For example, a node that talks over SPI to stepper motors driving a gimbal or robotic motion based on the pipeline results. Or a node that filters bounding box results between nodes to choose which bounding boxes are processed by later neural inference or CV stages.

– OpenCL support, so that arbitrary CV/AI algorithms can be executed efficiently on the SHAVE processors. There is an open source compiler for this.
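The bounding-box filtering node mentioned above can be illustrated with a small sketch of the general idea: each node takes the previous node’s results and passes on only what later stages should see. This is plain host-side Python, not the DepthAI API and not code that runs on the Myriad X; the `BBox` type, the `confidence_filter` and `label_filter` nodes, and `run_pipeline` are hypothetical names chosen for illustration only.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class BBox:
    # Hypothetical detection result: label, confidence, normalized corners.
    label: str
    confidence: float
    x_min: float
    y_min: float
    x_max: float
    y_max: float


# Hypothetical "node": a function mapping one list of detections to another.
Node = Callable[[List[BBox]], List[BBox]]


def confidence_filter(threshold: float) -> Node:
    """Node that drops detections below a confidence threshold."""
    def node(boxes: List[BBox]) -> List[BBox]:
        return [b for b in boxes if b.confidence >= threshold]
    return node


def label_filter(allowed: Iterable[str]) -> Node:
    """Node that keeps only detections whose label is in `allowed`."""
    allowed_set = set(allowed)

    def node(boxes: List[BBox]) -> List[BBox]:
        return [b for b in boxes if b.label in allowed_set]
    return node


def run_pipeline(nodes: List[Node], boxes: List[BBox]) -> List[BBox]:
    """Feed the output of each node into the next, in order."""
    for node in nodes:
        boxes = node(boxes)
    return boxes


if __name__ == "__main__":
    detections = [
        BBox("person", 0.91, 0.1, 0.1, 0.4, 0.9),
        BBox("person", 0.35, 0.5, 0.2, 0.6, 0.5),
        BBox("car", 0.88, 0.6, 0.5, 0.9, 0.8),
    ]
    # Only confident "person" boxes would reach a later (hypothetical) stage.
    pipeline = [confidence_filter(0.5), label_filter({"person"})]
    for b in run_pipeline(pipeline, detections):
        print(b.label, b.confidence)
```

In a real pipeline the surviving boxes would be handed to a downstream stage such as a second neural network; here they are simply printed.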

And we contribute to MicroPython to support that open source effort, since we take advantage of it on the Myriad X.

A lot of the value-add here is abstracting away the asymmetric 24-core, network-on-chip, real-time C++ programming required to configure the chip to do these things, so that folks don’t have to reinvent the wheel to get the chip to do what it is designed to do. We did that so others don’t have to, to try to add engineering efficiency to the world.

And then all of this is backed by open source designs, summarized here:

https://docs.luxonis.com/faq/#githubs

These designs not only show how to interface with everything the module can do, but are also directly purchasable, and they are open source hardware designs, so you can use them directly as the basis for your own designs.

And they’re MIT-licensed, so you can build and sell your own commercial products based on them without worrying about rights to do so. You can do so and not tell us. :-) And you can integrate them with closed-source systems.

Thoughts?

Thanks,

Brandon

On behalf of the whole OAK team, thanks @Roger Cheng for your coverage! We really appreciate it helping get OAK out to the world! (Brandon Gilles from the OpenCV AI Kit team here.)

How does this differ from DepthAI? They appear very similar.

The efforts are one and the same. We’ve been working with OpenCV behind the scenes to release this as part of OpenCV for quite a long time now.

The DepthAI GitHub repositories will move to OpenCV’s GitHub after the Kickstarter concludes.

https://hackaday.io/project/163679-luxonis-depthai

Thanks,

Brandon

Now this is an idea I had that I just cannot be bothered to follow up on. Can someone make a camera that sits in the rear window of your car and uses AI to scan for police cars and undercover cars (I’ll leave that part for someone else to figure out), so that I can travel freely without fear of police behind me?