In 2020, our digital world and the software we use to create it form a towering structure, built upon countless layers of abstraction and building blocks. Just think about all the translations and interactions that occur when loading a webpage. Whilst abstraction is undoubtedly a great thing, it only works if we’re building on solid ground, that is, if the lower levels are stable and fast. What does that mean in practice? It means low-level, compiled languages, which can be heavily optimised and leveraged to make the most of computer hardware. One of the giants in this area was Frances Allen, who passed away in early August. Described by IBM as “a pioneer in compiler organization and optimization algorithms,” she made numerous significant contributions to the field.

Early Days

Trained as a maths teacher, Allen worked at a high school in New York for two years after graduating. She went back to complete a master’s in mathematics and was recruited by IBM Research on campus. Though she planned to stay only long enough to pay off her debt and quickly return to teaching, she found herself staying with IBM for the rest of her career, even becoming the first female IBM Fellow in 1989.

Allen’s first role at IBM was teaching internal engineers and scientists how to use FORTRAN — apparently a difficult sell to people who at the time were used to programming in assembly, which did just fine thank you very much. In an interview, Allen talks about the resistance from scientists who thought it wasn’t possible for a compiled language to produce code that was good enough.

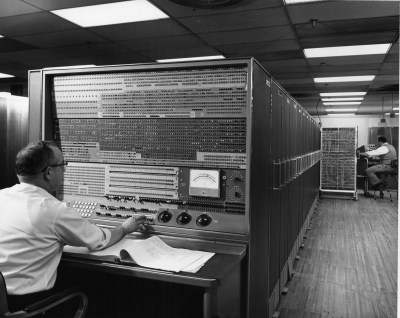

After teaching, Allen began working on the compiler for a 100 kW “supercomputer” called Stretch. With 2048 kB of memory, Stretch was meant to be 100 times faster than any other system available at the time. Though it ultimately fell short of that goal (to the dismay of a few clients, one finding Stretch took 18 hours to produce their 24-hour weather forecast), it caught the attention of the NSA.

Because of this, IBM designed a coprocessor add-on, Harvest, specifically for codebreaking at the NSA. Harvest ended up being larger than Stretch itself, and Allen spent a year leading a team inside the NSA, working on highly classified projects. The team didn’t learn much about what they were working on until details were leaked to the press (it was spying on the Soviet Union; no prizes for guessing).

An engineering feat, Harvest used a unique streaming architecture for code-breaking: information loaded onto IBM Tractor tape was continuously fed into memory, processed and output in real time, with perfectly synchronised IO and operations. Harvest could process 3 million characters a second and was estimated by the NSA to be 50-200 times faster than anything else commercially available. The project was extremely successful and was used for 14 years after installation, an impressive feat given the pace of technological advancement at the time.

Speed is of the Essence

The success of the project was in large part due to Allen’s work on the optimisations performed by its compiler. Compiler optimisations are magic. Some of us think of compilers as simple “source code in, machine code out” boxes, but much of their complexity lies in the entirely automatic suite of optimisations and intermediate steps they use to ensure your code runs as swiftly as possible. Of course, this was important for the limited hardware at the time, but the techniques that Allen helped develop are present in modern compilers everywhere. The New York Times quotes Graydon Hoare (the creator of Rust and one of today’s most famed compiler experts) as saying that Allen’s work is in “every app, every website, every video game or communication system, every government or bank computer, every onboard computer in a car or aircraft”.

So what do compiler optimisations actually look like? Allen wrote many influential papers on the subject, but “A Catalogue of Optimizing Transformations”, which she co-authored with John Cocke in 1972, was particularly seminal. It aims to “systematize the potpourri of optimizing transformations that a compiler can make to a program”. It has been said that compilers that implement just the eight main techniques from this paper can achieve 80% of best-case performance. Here are some of the most basic ideas:

- Procedure integration: replacing calls to subprocedures with inline code where possible, avoiding saving/restoring registers

- Loop unrolling: flattening loops by writing out statements explicitly, avoiding unnecessary comparison conditions

- CSE (common subexpression elimination): eliminating redundant computations which calculate values already available

- Code Motion: moving subexpressions out of loops where it is safe to do so

- Peephole optimisation: replacing known instruction combinations with more efficient variants

Some of these might seem obvious to us now, but formalising and standardising these ideas at the time had great impact.
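To make a few of these concrete, here is a minimal sketch in Python that applies common subexpression elimination, code motion and loop unrolling by hand to one small loop. A real compiler performs these rewrites automatically on its intermediate representation rather than on source text, and the function names below are purely illustrative; they do not come from Allen and Cocke’s paper.

```python
# Hand-applied versions of three transformations from the list above.
# All function names are hypothetical and exist only for illustration.

def scale_naive(values, a, b):
    """Straightforward version: (a + b) is evaluated twice per iteration."""
    out = []
    for v in values:
        out.append(v * (a + b) + (a + b))
    return out


def scale_cse(values, a, b):
    """Common subexpression elimination: (a + b) is evaluated once per iteration."""
    out = []
    for v in values:
        t = a + b              # shared subexpression, computed once and reused
        out.append(v * t + t)
    return out


def scale_hoisted(values, a, b):
    """Code motion: a + b never changes inside the loop, so it moves outside."""
    t = a + b                  # loop-invariant, computed once in total
    out = []
    for v in values:
        out.append(v * t + t)
    return out


def scale_unrolled(values, a, b):
    """Loop unrolling by a factor of two, with a clean-up step for odd lengths."""
    t = a + b
    out = []
    n = len(values)
    i = 0
    while i + 1 < n:           # one loop test per two elements
        out.append(values[i] * t + t)
        out.append(values[i + 1] * t + t)
        i += 2
    if i < n:                  # leftover element when n is odd
        out.append(values[i] * t + t)
    return out
```

Each version returns the same list as `scale_naive`; only the amount of repeated work changes, which is the defining property of an optimising transformation.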

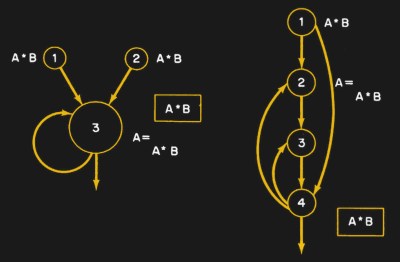

Parallelism

Allen’s last major project for IBM was PTRAN, the Parallel Translator. This was a system for automatic parallelisation, a special type of compiler optimisation. The aim was to take programs that weren’t written with parallelism in mind and translate them for execution on parallel architectures. This concept of taking sequentially written code and automatically extracting the parts of it that can run in parallel led to the extensive use of dependency graphs, now a standard representation in compilers. One of the recurring themes throughout Allen’s career was her ability to take highly technical problems, abstract them into maths (often graphs and sets) and solve them precisely. On this project Allen led a team of young engineers, churning out industry-leading papers and compilers for parallelism for 15 years.
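The idea behind a dependency graph can be sketched in a few lines of Python. This is not PTRAN’s actual algorithm, only a toy illustration under simple assumptions: each statement is described by the set of names it writes and the set it reads, and the helper names `build_dependences` and `parallel_levels` are invented for this example.

```python
def build_dependences(stmts):
    """stmts is a list of (writes, reads) pairs of sets, in program order.

    Returns a dict mapping each statement index to the set of earlier
    statement indices it must wait for.
    """
    deps = {j: set() for j in range(len(stmts))}
    for j, (writes_j, reads_j) in enumerate(stmts):
        for i in range(j):
            writes_i, reads_i = stmts[i]
            flow = writes_i & reads_j      # j reads a value that i wrote
            anti = reads_i & writes_j      # j overwrites a value that i read
            output = writes_i & writes_j   # both write the same name
            if flow or anti or output:
                deps[j].add(i)
    return deps


def parallel_levels(deps):
    """Group statements into levels; no statement depends on another in its own level."""
    level = {}
    for j in sorted(deps):                 # dependences only point backwards
        level[j] = 1 + max((level[i] for i in deps[j]), default=-1)
    groups = {}
    for j, lvl in level.items():
        groups.setdefault(lvl, []).append(j)
    return [groups[lvl] for lvl in sorted(groups)]


# A five-statement sequential program, written as (writes, reads):
program = [
    ({"a"}, {"x"}),        # 0: a = x + 1
    ({"b"}, {"y"}),        # 1: b = y * 2
    ({"c"}, {"a", "b"}),   # 2: c = a + b
    ({"d"}, {"x", "y"}),   # 3: d = x - y
    ({"a"}, {"c", "d"}),   # 4: a = c + d
]

levels = parallel_levels(build_dependences(program))
# levels == [[0, 1, 3], [2], [4]]
```

Statements 0, 1 and 3 touch unrelated data and could run at the same time, while 2 and 4 must wait for their inputs. Reducing the question “what can run in parallel?” to a question about a graph is the kind of abstraction into maths described above.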

IBM Academy and Beyond

In 1995 Allen became president of the IBM Academy, an internal steering group of IBM’s very top technical minds. She was able to use the position to advocate in two areas: mentoring and women in technology. In interviews, she frequently talked about how she didn’t have a mentor, and how important it is for people starting out in tech. Her visibility as an expert in the field inspired others — at its peak in the 70s/80s, half of the IBM experimental compiler group were women. Her advocacy for women in tech never ceased, even as she described a drop in participation after the early days of computing:

“Later, as computing emerged as a specialized field, employers began to require engineering credentials, which traditionally attracted few women. But the pendulum is swinging back as women enter the field from other areas such as medical informatics, user interfaces and computers in education.”

In 2006 Allen received the Turing Award (considered the Nobel Prize for computing) — the first woman to do so.

So the next time you fire up gcc, write anything in a language above assembly, or even use any software at all, remember that Frances Allen’s ideas are invisibly at play.

To this I would only add that Fran was a wonderful human being. I miss her.

Sounds like the problems I had running a weather forecast model on an old Turbo PC-XT in college in 1993. Didn’t run much better on the college’s brand new 486s, either.

When I was in college we had a bunch of old dual PII and PIII systems we built into a small cluster… running WRF-EMS… unfortunately we didn’t get it to scale (probably due to lack of enough memory in each PC) and it mainly just ran on the host at slower than realtime! The installer was also quite terrible, with jokes in it like “wow you made it this far”; it’s almost as if it was not meant to be installed at all, on purpose!

Or maybe just learn the story instead of getting upset that it’s not about you.

I’m with you about being nauseated by articles that feature women simply because they are women, when similar work done by a man would remain unrecognized. Too many times this “woman quota” thing does more harm than good.

This may be an exception. She did recently pass away. A posthumous retrospective of her impressive work on the birth of the digital age is, I think, warranted. I’ve turned my nose up at several “woman” articles as they seemed nothing more than pandering to the ideologues that care what gender a scientist is.

Personally, because I don’t care what gender a person is, I also immediately bristle when I see gender-specific fluff pieces. I’m willing to forgive this one.

You should watch the movie Hidden Figures (2016). I think you’d love it.

Perhaps if clever, capable women had historically been as free to succeed as men, unconstrained by societal norms, offering them up as role models for upcoming generations wouldn’t be as necessary.

“So the next time you fire up gcc…”

Free work indirectly brought to you by a proprietary “no one ever got fired for buying IBM” company.

It’s a shame the vintage photos I find online don’t show her in them. We need to see more vintage photos of women at work at IBM and elsewhere.

Wonderful write up