In the world of software development, the term ‘optimization’ tends to make experienced developers decidedly nervous, especially when a feature is billed as an ‘easy and free optimization’. The final specifier introduced in C++11 is one such feature. It promises to speed up object-oriented code by letting the compiler skip the vtable indirection on virtual calls: marking a class or virtual member function as final means, unsurprisingly, that the class cannot be inherited from or that the function cannot be overridden. Inspired by this promise, [Benjamin Summerton] ran a range of benchmarks to see what performance uplift he’d get on his ray tracing project.
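To make the mechanism concrete, here is a minimal sketch of the two places final can appear. The `Shape`, `Square` and `Circle` types and the two helper functions are hypothetical names made up for illustration; they are not taken from the benchmarked ray tracer. The comments describe what a compiler is allowed to do, not what any particular compiler actually does.

```cpp
// Hypothetical hierarchy for illustration only.
struct Shape {
    virtual ~Shape() = default;
    virtual double area() const = 0;
};

// final on the class: nothing may derive from Square.
struct Square final : Shape {
    explicit Square(double s) : side(s) {}
    double area() const override { return side * side; }
    double side;
};

// final on one member function: Circle may still be derived from,
// but area() cannot be overridden any further.
struct Circle : Shape {
    explicit Circle(double r) : radius(r) {}
    double area() const final { return 3.141592653589793 * radius * radius; }
    double radius;
};

// The dynamic type is unknown here, so the call normally goes
// through the vtable.
double area_via_base(const Shape& s) { return s.area(); }

// The static type is a final class, so the compiler may call
// Square::area() directly (and possibly inline it). Whether it
// does so is up to the compiler and its optimization settings.
double area_via_final(const Square& s) { return s.area(); }
```

Both helpers return the same values; the only potential difference is in the generated machine code. Comparing the assembly for the two functions (for example with `-O2 -S` on GCC or Clang) is one way to check whether devirtualization actually happened in a given build.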

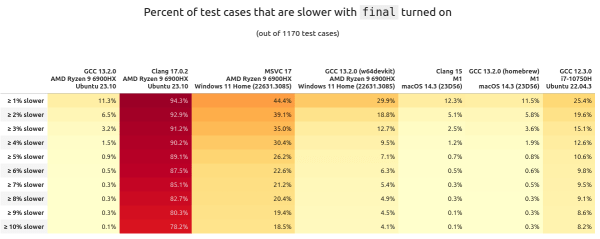

To be as thorough as possible, the tests were run on three different systems, including 64-bit Intel and AMD machines as well as Apple Silicon (M1). On the compiler side, various versions of GCC (12.x, 13.x), Clang (15, 17) and MSVC (17) were employed, with rather interesting results for the final versus no-final tests. Clang was probably the biggest surprise: with final added, the performance of Clang-generated code absolutely tanked. MSVC was a mixed bag, as were the GCC versions, with the exception of GCC 13.2 on AMD Ryzen, which came out a few percent faster.

Ultimately, it seems that, as usual, there’s no free lunch, and adding final to your code falls distinctly under ‘only use it if you know what you’re doing’. As things stand, its effect on performance seems wildly inconsistent across compilers and platforms.