[Serge Vaudenay] and [Martin Vuagnoux] released a video yesterday documenting a privacy-breaking flaw in the Apple/Google COVID-tracing framework, and they’re calling the attack “Little Thumb” after a French children’s story in which a child drops pebbles to be able to retrace his steps. But unlike Hansel and Gretel with the breadcrumbs, the goal of a privacy-preserving framework is to prevent periodic waypoints from allowing you to follow anyone’s phone around. (Video embedded below.)

The Apple/Google framework is, in theory, quite sound. For instance, the system broadcasts hashed, rolling IDs that prevent tracing an individual phone for more than fifteen minutes. And since Bluetooth LE has a unique numeric address for each phone, like a MAC address in other networks, they even thought of changing the Bluetooth address in lock-step to foil would-be trackers. And there’s no difference between theory and practice, in theory.

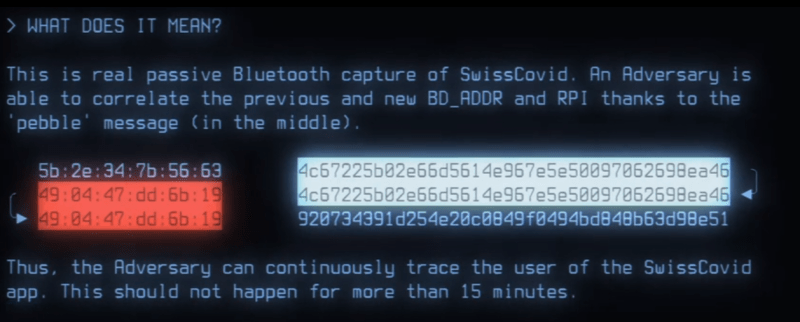

In practice, [Serge] and [Martin] found that a slight difference in timing between changing the Bluetooth BD_ADDR and changing the COVID-tracing framework’s rolling proximity IDs can create what they are calling “pebbles”: an overlap where the rolling ID has updated but the Bluetooth ID hasn’t yet. Logging these allows one to associate rolling IDs over time. A large network of Bluetooth listeners could then trace people’s movements and possibly attach identities to chains of rolling IDs, breaking one of the framework’s privacy guarantees.
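To make the linking step concrete, here is a minimal sketch in Python. It is not the researchers' tooling: it simply assumes a listener has logged (Bluetooth address, rolling ID) pairs, and the function name `link_sightings` and all sample values are made up for illustration. Any two sightings that share either an address or a rolling ID get merged into one chain, so a single "pebble" frame (new rolling ID, old address) is enough to bridge two rotation periods.

```python
from collections import defaultdict


def link_sightings(sightings):
    """Group rolling IDs that can be tied together through shared addresses.

    `sightings` is an iterable of (bd_addr, rolling_id) pairs, as a passive
    listener might log them. Returns a sorted list of sorted rolling-ID chains.
    """
    parent = {}

    def find(node):
        # Union-find lookup with path halving.
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]
            node = parent[node]
        return node

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_a] = root_b

    # Every frame ties one address to one rolling ID.
    for bd_addr, rolling_id in sightings:
        union(("addr", bd_addr), ("rpi", rolling_id))

    chains = defaultdict(set)
    for node in list(parent):
        kind, value = node
        if kind == "rpi":
            chains[find(node)].add(value)
    return sorted(sorted(chain) for chain in chains.values())


# Hypothetical log: the first phone leaks a pebble at each rotation,
# the second phone rotates both identifiers cleanly.
log = [
    ("00:01", "AAA"),
    ("00:01", "BBB"),  # pebble: rolling ID changed, address did not
    ("00:02", "BBB"),
    ("00:02", "CCC"),  # pebble again
    ("00:07", "XXX"),
    ("00:08", "YYY"),  # clean rotation: nothing links XXX to YYY
]
print(link_sightings(log))
```

In this toy log the leaky phone's three rolling IDs collapse into one chain, while the phone that rotates cleanly stays split into unrelated IDs.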

This timing issue only affects some phones, about half of the set that they tested. And of course, it’s only creating a problem for privacy within Bluetooth LE range. But for a system that’s otherwise so well thought out in principle, it’s a flaw that needs fixing.

Why didn’t the researchers submit a patch? They can’t. The Apple/Google code is mostly closed-source, in contrast to the open-source nature of most of the apps that are running on it. This remains troubling, precisely because the difference between the solid theory and the real practice lies exactly in those lines of uninspectable code, and leaves all apps that build upon them vulnerable without any recourse other than “trust us”. We encourage Apple and Google to make the entirety of their COVID framework code open. Bugs would then get found and fixed, faster.

Nice article and good conclusions. I agree completely.

Agreed! Was informative and a great read. Not sure how practical this is large scale, but still worrying enough that it’s on the radar.

Of all things to leave closed source, “the thing I made that I swear is 100% just to help people and in no way to help certain governments track their citizens” has to be the most amusing. Google has tons of open source projects out there, but this one, oh no, it has some special secret sauce that needs to be closed source.

Open sourcing would solve a lot of issues… but it may not be legally possible. An argument can be made that HIPAA in the USA could prevent the source code from release if it deals with medical information… and COVID-19 lands in the medical realm. Some lawyers need to hash this out first.

I’m not a lawyer, but the source code itself is not personal medical information, and the resulting code doesn’t actually handle medical info either. The information is purely location only and requires another separate server back end to handle the actual tracing if you test positive. The server back end would definitely fall under HIPAA, as it is specifically tracing for possible infections, but the code for the Bluetooth tracking shouldn’t. It’s only handling location data, which definitely is not covered by HIPAA on its own.

Hello, it is closed source even on Android, since it’s currently part of the Google Play services.

However, Google and Apple have published some source code; you’ll find it here:

https://github.com/google/exposure-notifications-internals

https://developer.apple.com/exposure-notification

These are not complete. For example, Google’s code covers only the transmission, not the reception of beacons or anything else related. These are merely snippets.

Pretty sure they even state this is a possible issue right here?

>Because Android doesn’t have a callback to notify an application that the Bluetooth MAC address is changing (or has changed), this is handled by explicitly stopping and restarting advertising whenever a new RPI is generated.

Since there isn’t any callback, it is possible for the Bluetooth MAC address to rotate before a new RPI is generated. In this case the following would happen:

| Time | Bluetooth MAC | RPI |
|------|---------------|-----|
| :00 | 00:00:00:01 | AAA |
| :09 | 00:00:00:02 | AAA |
| :10 | 00:00:00:03 | BBB |

The risk posed by this is minimal, since even though an observer may be able to tie the MAC addresses 00:00:00:01 and 00:00:00:02 together using the common RPI, the duration of both MAC addresses is no longer than a single RPI period (~10 minutes). When the RPI rotates, the MAC address rotates again, making it difficult to track the association of either the MAC address or RPI to a common device.

Yep, looks like it to me. At least as I’m understanding this, unless you have a massive BLE monitoring network/area, this doesn’t do you much good. All it takes is missing the bridge on one rollover and any previous tracking is dissociated from further tracking data. That said, I’m completely against contact tracking in the first place (a restaurant near me requires one for entry – really? – people are fine with this?) – but don’t see this as an overly big deal unless you have a way to catch EVERY rotation over time.

Even without a callback mechanism in the Bluetooth API, it’s easily possible to align the RPI and Bluetooth address changes by synchronizing them in time, for instance every 10 minutes, and then pausing transmission for a second after the change moment. I see no disadvantages to this solution?
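The idea in this comment can be sketched as a small simulation. This is only an illustration under stated assumptions, not how either vendor's stack works: the function names, the 250 ms advertising interval, and the 300 ms lag between the two identifier changes are all hypothetical values picked for the example. It checks whether any frame goes on air carrying a new RPI together with an old address.

```python
def frames_on_air(pause_ms, rotation_ms=600_000, adv_interval_ms=250,
                  addr_lag_ms=300, duration_ms=1_800_000):
    """Return the set of (address_epoch, rpi_epoch) pairs that get broadcast.

    The RPI rotates exactly every `rotation_ms`; the Bluetooth address is
    assumed (hypothetically) to follow `addr_lag_ms` later. The radio stays
    silent for `pause_ms` after each rotation moment.
    """
    pairs = set()
    for t in range(0, duration_ms, adv_interval_ms):
        if t % rotation_ms < pause_ms:
            continue  # advertising paused just after the rotation moment
        rpi_epoch = t // rotation_ms
        addr_epoch = max(0, (t - addr_lag_ms) // rotation_ms)
        pairs.add((addr_epoch, rpi_epoch))
    return pairs


def pebbles(pairs):
    """Frames whose address and RPI belong to different rotation periods."""
    return sorted(pair for pair in pairs if pair[0] != pair[1])


print("no pause:   ", pebbles(frames_on_air(pause_ms=0)))
print("1 s of pause:", pebbles(frames_on_air(pause_ms=1000)))
```

Under these assumptions the pause only helps if it is longer than the worst-case lag between the two changes, which is exactly the timing guarantee other commenters doubt the lower Bluetooth layers provide.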

HIPAA has been suspended in California, due to the chore of making sure telemedicine is actually secure. And breach of confidentiality is also prohibited from being prosecuted.

In any case, medical rights in times of infectious disease don’t usually fall under the usual protections. Examples are that people can be (and have been) forcibly quarantined, your records used against your will to warn those exposed, etc.

A recent scholarly Harvard article in JAMA about masks said they waived IRB and informed consent and just audited all their employees’ health records and published the data. Classy move.

COVID has, in a few months, stomped out nearly all your health information protections. Things that privacy rights warriors have fought for decades to establish. Not applying judgment just pointing out.

– So true, and seems people should be applying judgement to this, rather than just letting it all slide, at least in my opinion.

“We encourage Apple and Google to make the entirety of their COVID framework code open.”

Won’t work, if it’s part of the OS updates, they have to make the whole OS open source.

Aren’t you confusing “open source” and “viral license”? One does not imply the other.

Why should that be so? Not every open source license is a viral license.

If law makers have written in law that the solution has to be “open source”, shipping “non open source” components violates the law.

Furthermore, it’s a bad idea to trust:

1. Google: a company based on profiling people

2. Apple: a closed source OS, using “privacy” as a marketing tool

3. A device connected to the internet

Publishing the source and allowing everyone to use it however they want are two different pairs of shoes.

Some examples:

GNU GPL has a viral part, MIT doesn’t, but both allow commercial use.

Creative Commons, while not really suitable for software, can deny commercial use.

A license similar to the last one, but better suited to software, would be the way to go, as everyone could verify what it’s doing without being legally able to use the source code commercially.

Covid19 is viral. Why not the framework? :P

I’ll see myself out.

macOS already contains many, many pieces of free software, for example the command line program curl. This stuff is all updated in the standard way along with the rest of the system.

Good discussion. What’s missing is that this is likely a problem in the interaction with the lower layers of the Bluetooth stack, because beacons are generally handled by firmware or hardware independent of the OS.

Also, because it’s so obvious a problem, it’s literally number 4 on the list of attacks to prevent: https://covid19-static.cdn-apple.com/applications/covid19/current/static/contact-tracing/pdf/ExposureNotification-BluetoothSpecificationv1.2.pdf?1 (page 5).

The fault here falls squarely on the hardware vendor.

And that vendor’s name? Broadcom. Good luck with that.

Totally guessing, but I’d bet that the BTLE stack/drivers weren’t designed to handle multiple frequent MAC/BD_ADDR changes, much less handle those changes with precise (~100 ms) timing requirements.

“The fault lies with the hardware vendor” seems a little harsh. There’s no way they had this application in mind when developing their firmware. There’s probably no “change BD_ADDR now” command that’s specced with timing guarantees. Given that, you’d have to make sure it’s being done at the driver/OS level. Ball’s back in Google’s court, IMO.

But I totally agree with the rest of your analysis.

So they knew it was an attack vector and they either didn’t test it or deployed it anyway. Smells intentional.

Yep. Apple straight up says “if your hardware is even good enough to support this, this is how to do it”. Sure, all the iPhones and iPads can handle it just fine, but an alarmingly large portion of Android phones could not, as per the researchers’ testing. Yet Google (or the manufacturers?) chose to enable contact tracing on those phones despite knowing it was vulnerable.

Yet another reason I’m glad to not be an Android user / used by Android.

Why not just send fixed packets (to distinguish them from other packets) and use the Bluetooth ID as the ID?

Also, it looks like it would be trivial to break the system by building a repeater to generate lots of false positives. With a directional antenna, it can be done from quite a distance and it doesn’t take very many repeaters to flood the system with false data.

But what’s the benefit? You can already spam bluetooth pairing requests to phones (and sometimes even messages), but that gets old and boring pretty quick.

Not everyone likes the idea of being tracked, even if such tracking is “anonymous”. Making the data from a tracking system useless is a form of protest against tracking.

Did we _really_ expect a different result?

Nope. No surprise here.

I got so much smugness from others about the crypto schemes they published back when those lame-ass PR infographics were going around. Obviously meant to sell a bad idea with marketing, not reason. Complete with flat design humans and all that twee garbage. People just bought it! As if publishing a theoretical crypto scheme meant it would actually be implemented that way with no flaws or externalities. Here we are, dummies. People did see it coming, believe it or not.

In theory, there is no difference between theory and practice. But in practice there is. Seriously, you people—PLEASE learn from this for the future. I can’t keep watching useful idiots fall for this obvious crap over and over. Especially when they claim to be developers or “hackers” or something ridiculous like that. You utter chumps. Shed some of that credulity already.

That terminal emulation in the video is a very nice way to present their results. Does anybody know how this is done?

Maybe they are using this https://github.com/Swordfish90/cool-retro-term

Affirmative, I had been looking at that software already but was thinking that was not quite it due to the curve in the renders I found.

However: if you set the screen curvature to ‘0’ then you get the effect from the video.

Adding this to my toolbox, might come in handy one day or another…

The ‘movie-alike’ decryption effect in the beginning is most likely https://github.com/bartobri/no-more-secrets

“Download published source code to learn how Apple implements the internals of Exposure Notification.”

https://developer.apple.com/exposure-notification/

Yep, and here’s Google’s: https://github.com/google/exposure-notifications-internals

Not an expert, haven’t looked through the code. Wouldn’t understand it if I did.

But everything I’ve heard from knowledgeable folks points to this code release being only partial.

I’ve spent a fair bit of time reviewing a few contact tracing solutions as part of my job. This is an attack I clearly saw as a possibility when I first reviewed the Google/Apple document several months ago. However, I read it more thoroughly with this in mind, and the *specification* says that the Bluetooth ID and the contact token must change *at the same time*. If this isn’t happening “on some phones”, then it’s not a design flaw in the protocol (though I would argue there are in fact several fundamental ones), but a bug in the implementation.

And that’s where “show me the code” shines. It’s not the spec that’s bad, it’s the implementation.

So the attack requires a wide and geographically dispersed network of attacker-controlled Bluetooth receivers to essentially log *all* activity in order to correlate the latency between the ID updates? In what kind of radio environment did they capture signals from up to 50 meters away? Because I sometimes have trouble with my Bluetooth signal working between my head and my pocket in a city.

Anyone that’s worried about a state actor using this as an attack vector is being silly. They wouldn’t bother. They’d just subpoena the cell tower records.

Any app can listen to beacons, so this can be implemented by actors other than states. You should also consider that lots of advertising companies have Bluetooth trackers in their billboards, and lots of national companies run them for various reasons; for example, in the Netherlands, Schiphol airport and all train stations have such trackers. Furthermore, cell tower records are much less granular than these beacons.

I said it in the writeup: I’m not sure how big a deal this is because of the need for proximity. OTOH, _someone’s_ phone is probably close enough to yours all the time. And the adversary who has access to this data is Google. I do _not_ like that they’re proposing to write the app component as well.

Point is, this was a security guarantee that we were given, and it’s not being implemented. Needs fixed.

+1 for ‘OTOH’…

Indeed, the external tools we used for this demo are:

cool-retro-term (Filippo Scognamiglio)

[https://github.com/Swordfish90/cool-retro-term]

no-more-secrets (Brian Barto)

[https://github.com/bartobri/no-more-secrets]

We thank the authors of these tools for the awesome work.

Thanks for weighing in!

I am afraid that the main effect of this issue, already discovered on 26.6.2020, will be to spread nms and retroterm to wannabe cool scriptkiddies ;-)

(sorry, only pdf-link) [https://www.melani.admin.ch/dam/melani/de/dokumente/2020/SwissCovid_Public_Security_Test_Current_Findings.pdf.download.pdf/SwissCovid_Public_Security_Test_Current_Findings.pdf]

Hey I absolutely loved the video and research. Would you mind sharing how you made the video, what settings/configs/scripts did you use and how did you capture it?

Anyone know what font that is in the screenshot?

https://github.com/google/exposure-notifications-internals/blob/main/README.md#ble-mac-and-rpi-rotation

Google has already addressed this

Because Android doesn’t have a callback to notify an application that the Bluetooth MAC address is changing (or has changed), this is handled by explicitly stopping and restarting advertising whenever a new RPI is generated.

Since there isn’t any callback, it is possible for the Bluetooth MAC address to rotate before a new RPI is generated. In this case the following would happen:

| Time | Bluetooth MAC | RPI |
|------|---------------|-----|
| :00 | 00:00:00:01 | AAA |
| :09 | 00:00:00:02 | AAA |
| :10 | 00:00:00:03 | BBB |

The risk posed by this is minimal, since even though an observer may be able to tie the MAC addresses 00:00:00:01 and 00:00:00:02 together using the common RPI, the duration of both MAC addresses is no longer than a single RPI period (~10 minutes). When the RPI rotates, the MAC address rotates again, making it difficult to track the association of either the MAC address or RPI to a common device.

Yes, this was published by Google on 21 July 2020. I wonder who found this first, Google or [Serge Vaudenay] and [Martin Vuagnoux]?

Anyway, Google seems to assume that this doesn’t happen _every time_ on a given device – otherwise, the evaluation “The risk posed by this is minimal, …” would make no sense, because obviously after ~10 minutes the same thing could be done again.

I would like to know whether this is correct, or if it happens _every time_ on affected Android devices.

(Also, I assume that this doesn’t happen at all on iOS devices.)

While certainly a security bug, the authors of “Little Thumb” need to do much more to demonstrate the practicality of this attack. For example, what is the duration during which the ‘pebble’ is visible? How many BLE transmissions contain the pebble? What types of hardware have been demonstrated capable of reliably detecting the pebble? (Obviously ubertooth, but nobody is going to mount a wide ranging tracing attack using an army of uberteeth). Can prevalent mobile devices reliably serve as passive observers of pebbles, even while in a low power state? How many observers would be required to have reasonable success at tracking an individual across a significant geographic area (e.g. tracking a government official as they attend supposedly secret meetings)?

Without a meaningful analysis of its practicality it is difficult to assess the urgency of defeating this attack. And given the systemic security failures across the industry as a whole (and certainly at Google in particular), I suggest that any effort which prioritizes this fix over more meaningful change would likely be irresponsible.

They listened to BTLE with an Ubertooth, but whatever. You could do the same with a $10 DVB dongle. Or a cellphone, if you have one of those. Listening to the beacons is trivial. But…

The pebble is one frame, one transmission, per 15 minute rolling ID cycle. It’s not “visible” in the sense that it can be called up on demand. It’s broadcast once. You either are in range and hear it or not. This limits the impact of this vulnerability. But…

You live in a sea of devices that are all listening, all the time. Not just potentially, but currently. How many of the pebbles will be heard by my phone? Very few. But by any phone? Probably something like all of them. Nothing in the framework prevents the OS or any app with BT permissions from harvesting this data and passing it on.

So it’s a middle-big deal.

But whatever, it was part of the security design, and it’s been displayed to be flawed. It’s gotta get fixed.

Time to go back to dumb phones. Been thinking about reverting back for some time now.

This seems to not be a massive issue. The goal was always to make the data reported to the health services private, not to keep you private from a system which has to be installed where you physically are.

Even if the two IDs changed simultaneously, you can still correlate them if you’re physically present: the phone is still transmitting from the same location, and can be triangulated if need be.

News flash: CCTV breaks ‘privacy’ of COVID-19 system.

Your presumption of feasibility is no substitute for actual rigorous analysis of the possibilities. Battery constraints on cell phones absolutely limit their ability to detect single frames, especially if one is trying to be surreptitious about it. Furthermore, the mobile platforms actively limit the ability for apps to aggressively scan over long periods of time (again, mainly for power reasons).

Beyond this, one is often surrounded by fewer phones within BLE range than one might imagine (for example I am currently in a house with 4 other people, none of which are within range). But to be successful, an attacker must not miss even a single pebble over the course of an attack. This suggests that recruiting a dense enough network of pebble observers to carry out a real-world attack is likely to be a herculean effort. And carrying this out surreptitiously almost certainly requires subverting security measures on the mobile platform (e.g. to backdoor install an observer app or equivalent functionality in the OS), rendering this a second-order attack.
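The "must not miss even a single pebble" point can be put into rough numbers. The following is a back-of-the-envelope sketch, assuming (as a simplification that is not from the research) that each rotation is captured independently with the same probability; the function names and the probabilities fed in are hypothetical, while the 15-minute cycle is the figure quoted in this thread.

```python
def rotations(duration_min, cycle_min=15):
    """Number of identifier rotations in a tracking window."""
    return duration_min // cycle_min


def chain_survival(capture_prob, n_rotations):
    """Chance an observer network hears every pebble in a row.

    Assumes independent captures with a fixed per-rotation probability,
    which is a simplification: real coverage depends on where the phone is.
    """
    return capture_prob ** n_rotations


day = rotations(24 * 60)  # rotations in one day at a 15-minute cycle
for p in (0.999, 0.99, 0.9):
    print(f"capture probability {p}: unbroken 24 h chain {chain_survival(p, day):.4f}")
```

Even with a 99% chance of hearing each pebble, an unbroken 24-hour chain comes out at well under one in two under this model, which supports the point that coverage has to be nearly perfect. A broken chain still leaves the attacker with shorter linked segments, though.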

> But whatever, it was part of the security design, and it’s been displayed to be flawed. It’s gotta get fixed.

This is a flawed and failed philosophy. There is absolutely no chance that the industry can find and fix every single security bug that exists in modern software/devices. It is as infeasible to us right now as is flying a man to another star. Thus every time a flaw like this is encountered we must always consider whether the effort to fix it could be better spent elsewhere. I can’t say for certain in this case, but I suspect this flaw is very low priority. But a rigorous analysis of the possibilities would shed light on this. Unfortunately, neither the authors, nor this publication, endeavored to provide one.

(Please see reply below).

“[Serge Vaudenay] and [Martin Vuagnoux] released a video yesterday documenting a privacy-breaking flaw in the Apple/Google…”

Wait!

What?!?!?

Apple and Google worked together on something?

Covid really is the end of the world!

Time to put on some REM.

We have tested the Canadian version of contact tracing, which is called COVID-Alert. It has the same pebbles bug! The MAC address changes asynchronously.

ESON, maybe you could answer this, then?

Does it change asynchronously _every time_? Or only sometimes?

And does it happen on Android only, or also on iOS?

Thanks

I just tested the Android version. It happens every time and I monitored it over 2 hours. This issue has been mentioned in Google documents and I refer you to this existing issue with Android devices:

https://github.com/google/exposure-notifications-internals