One of the things we love best about the articles we publish on Hackaday is the dynamic that can develop between the hacker and the readers. At its best, the comment section of an article can be a model of collaborative effort, with readers’ ideas and suggestions making their way into version 2.0 of a build.

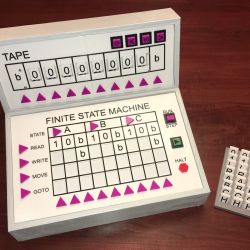

This collegial dynamic is very much on display with TMD-2, [Michael Gardi]’s latest iteration of his Turing machine demonstrator. We covered the original TMD-1 back in late summer, the idea of which was to serve as a physical embodiment of the Turing machine concept. Briefly, the TMD-1 represented the key “tape and head” concepts of the Turing machine with a console of servo-controlled flip tiles, the state of which was controlled by a three-state, three-symbol finite state machine.

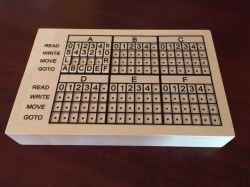

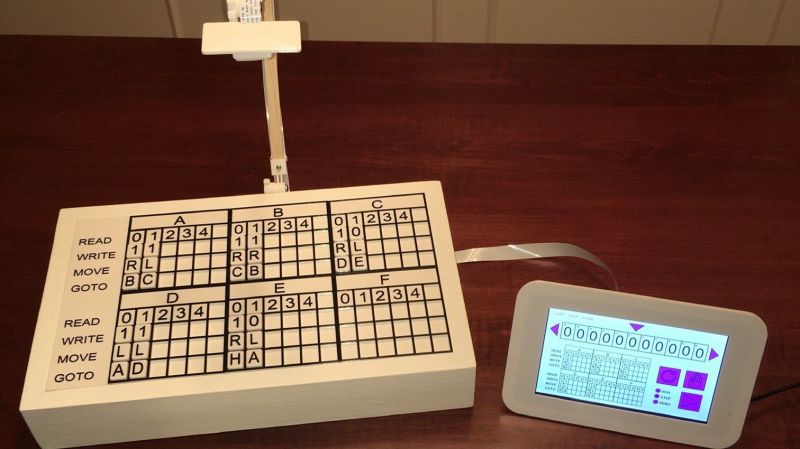

TMD-1 was capable of simple programs that really demonstrated the principles of Turing machines, and it really seemed to catch on with readers. Based on the comments of one reader, [Newspaperman5], [Mike] started thinking bigger and better for TMD-2. He expanded the finite state machine to six states and six symbols, which meant coming up with something more scalable than the Hall-effect sensors and magnetic tiles of TMD-1.

[Mike] opted for optical character recognition using a Raspberry Pi cam along with Open CV and the Tesseract OCR engine. The original servo-driven tape didn’t scale well either, so that was replaced by a virtual tape displayed on a 7″ LCD display. The best part of the original, the tile-based FSM, was expanded but kept that tactile programming experience.

Hats off to [Mike] for tackling a project with so many technologies that were previously new to him, and for pulling off another great build. And kudos to [Newspaperman5] for the great suggestions that spurred him on.

While I shouldn’t object to taking something far beyond its usefulness, I have two words: feature creep.

The Turing machine was a thought experiment. Alan Turing recognized that everything that could be calculated could be broken down into small steps, and that there is a very small set of instructions that can be combined to express ANY procedure, no matter how complicated. This was the software version of “Any digital machine can be made from NAND gates”. Or to put it another way, the beauty of Turing’s machine was how little it needed, to be able to calculate anything that COULD be calculated, reducing computing to its essence.

I’m afraid that projects like this only muddy the waters, because they make the Turing machine unnecessarily complicated, missing the whole point. Sure, it makes a more capable Turing machine, but so does EVERY OTHER DIGITAL COMPUTER EVER DESIGNED.

Well, okay, with the possible exception of Brainf*#k (https://hackaday.com/tag/brainfuck-interpreter/) (https://en.wikipedia.org/wiki/Brainfuck), but that’s the exception that proves the rule, since it also was a thought experiment involving an impossibly simple and hopelessly obfuscated computer language. And by the way, I’ve seen someone make BF* almost usable, just through the use of a bunch of macros that execute more familiar machine instructions. Which just illustrates Turing’s point. (I don’t think it was this one, https://github.com/cornwarecjp/brainfuck-macros, but apparently that’s a thing now.)

I’m fully aware if Turing himself ever saw this or some of the other Turning machine implementations that have graced these pages, he probably would have said something like, “You’re not supposed to build the f***ing thing you bloody idiots!”, but what ever happened to doing something because it was interesting, fun, and a learning experience to boot.

Sure, and I DO see the value in building an implementation of Turing’s proposed machine, which is all the more challenging because it requires either a moving read/write head or moving media, so as long as you include some way of seeing the state of the bits on the tape, you can follow what it’s doing. It’s just the idea of making an “improved” version that struck me as missing the point, especially when the implementation contained a CPU that’s many millions of times faster than the overall machine.

But I could be wrong. I got about two-thirds of the way through the “Nand2Tetris” course, which has been mentioned here (https://hackaday.com/?s=nand2tetris), in which you first build a collection of logic gates starting with only NAND gates, then build a CPU using a hardware description language and and corresponding simulator, that uses that collection of gates, then go on to write an emulator for a virtual machine based on that CPU, as well as an assembler for the CPU and the back end of a compiler to translate the virtual code into machine code, then go on to build a Java-like compiler for that, that translates the high-level code to the virtual machine language, and so on, the point being to understand every single hardware and software component necessary to build a functioning computer from what I consider “scratch”.

To me this was just what I was looking for, since up to now, it seemed like there would be no point in designing a CPU, since where am I going to get a compiler to produce object code for it? Or do I just have to be stuck in machine language?

I got to the middle of the compiler chapters before being overtaken by events, so it’s been on the back burner for a few months now. (Not just Covid – that was actually what gave me time to get started on the course in the first place.)

Now, the computer described in Nand2Tetris is pretty crude, and while it would be possible to actually build it out of NAND gates, I wouldn’t do so because the CPU is so simple that in many cases it takes twenty or thirty machine instructions to execute a single virtual instruction. Implementing stack instructions was almost unbearably tedious. Which means that it would take a considerable amount of memory to actually implement a game such as Tetris on it. The emulated machine has 1k x 16 bit words of storage to share between program, data, and stack, and there is no sample program provided to prove that anybody was able to fit Tetris into it. And yes, that machine could be improved to make something slightly more practical, including having more memory available, but only at the cost of making the CPU itself more complicated to implement, missing the point of the course. Just the same, if I can do this without adding TOO much to the CPU, I may take this as a challenge, not using NAND gates, but a CPLD (which of course is just a bunch of NAND gates and D flip-flops). Just because I think it would be great to produce a one-chip CPU without committing to the whole ecosystem of an FPGA.

By which I mean, yes, I now see that there IS some value in building a more practical Turing machine.