Perhaps the best-known ridesharing service, Uber has grown rapidly over the last decade. Since its founding in 2009, it has expanded into markets around the globe, and entered the world of food delivery and even helicopter transport.

One of the main headline research areas for the company was the development of autonomous cars, which would revolutionize the company’s business model by eliminating the need to pay human drivers. However, as of December, the company has announced that it is spinning off its driverless car division in a deal reportedly worth $4 billion. That figure is all on paper, though: Uber is trading its autonomous driving division, and a promise to invest a further $400 million, in return for a 26% share in the self-driving tech company Aurora Innovation.

Playing A Long Game

Uber’s driverless car research was handled by the internal Advanced Technologies Group, made up of 1,200 employees dedicated to working on the new technology. The push to eliminate human drivers from the ride-sharing business model was a major consideration for investors in Uber’s Initial Public Offering on the NYSE in 2019. The company has yet to post a profit, and reducing the share of fares going to human drivers would make it much easier for the company to achieve that crucial goal.

However, Uber’s efforts have not been without incident. Tragically, in 2018, a development vehicle running in autonomous mode hit and killed a pedestrian in Tempe, Arizona. This marked the first pedestrian fatality caused by an autonomous car, and led to the suspension of on-road testing by the company. The incident revealed shortcomings in the company’s technology and processes, and was a black mark on the company moving forward.

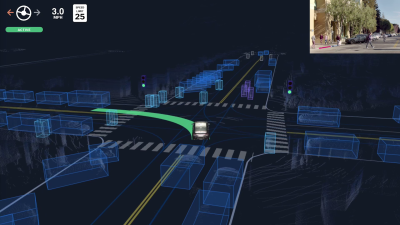

The Advanced Technologies Group (ATG) has been purchased by a Mountain View startup by the name of Aurora Innovation, Inc. The company counts several self-driving luminaries amongst its cofounders. Chris Urmson, now CEO, was a technical leader during his time at Google’s self-driving research group. Drew Bagnell worked on autonomous driving at Uber, and Sterling Anderson came to the startup from Tesla’s Autopilot program. The company was founded in 2017, and counts Hyundai and Amazon among its venture capital investors.

Aurora could also have links with Toyota, which also invested in ATG under Uber’s ownership in 2019. Unlike Uber, which solely focused on building viable robotaxis for use in limited geographical locations, the Aurora Driver, the core of the company’s technology, aims to be adaptable to everything from “passenger sedans to class-8 trucks”.

Getting rid of ATG certainly spells the end of Uber’s in-house autonomous driving effort, but it doesn’t mean they’re getting out of the game. Holding a stake in Aurora, Uber still stands to profit from early investment, and will retain access to the technology as it develops. At the same time, trading ATG off to an outside firm puts daylight between the rideshare company and any negative press from future testing incidents.

Even if Aurora only retains 75% of ATG’s 1,200 employees, it’s doubling in size, and will be worth keeping an eye on in the future.

Judging by the captchas I’ve been getting where it insists I identify a bicycle shaped sculpture as a bicycle, a distant crosswalk as not one, and ignore a traffic light partially obscured by a tree… computer visual recognition and cognition of the environment has only advanced from cubes and spheres to slightly more irregular solids and is still incapable of internally modelling the behaviour of such beyond simple billiard ball physics. Think we’re getting one of those illusions of competence like the 1930s robots that could pour you a cup of tea, yet we still don’t have robot butlers.

And those kinds of perception problems are the “easy” ones. If human drivers encounter a confusing situation, they don’t do crazy things. They slow down until they understand. Computer just says 99% confidence level, let’s go.

That’s largely because of how we model the AI. Back in the 70’s we started treating the problem of sensor inputs and decision-making as separate problems in order to split the issue in half and reduce the complexity to something that could be solved.

This produced the so-called “expert system” AIs that were extremely fragile because they disassociated the sensory input of the outside world from the “thinking” part and turned it into simple symbolic representations. It was assumed that some magic black box would tell the computer there’s a bird, or a car, or a person in sight, and the information was then pushed through a long list of IF-THEN statements to decide what to do with it. As John Searle points out, this can never constitute intelligence. The failure of the expert systems brought about the “AI winter” that brought the field to a halt since nobody had any better ideas.

This is still how AI works in general – we’re just building them by artificial evolution now, via neural networks. The sensing and the decision-making parts are still separated, so the algorithm that sees what’s out there doesn’t understand what it is, and the algorithm that supposedly understands what it is doesn’t see what’s out there – it just has to trust the first part when it says “there’s a human on the road”. This makes the AI fundamentally stupid, and nobody has any idea how to make it better.
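A minimal sketch of that split, to make the point concrete. Everything here is hypothetical (the `Detection` type, the `perceive` and `decide` functions, the 30 m braking rule); it is not how any real driving stack is written, only an illustration of a decision layer that sees nothing but the symbols handed to it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    label: str         # symbol emitted by perception, e.g. "pedestrian"
    distance_m: float  # estimated distance ahead, in metres


def perceive(frame: dict) -> list:
    """Hypothetical stand-in for a vision network: it only emits symbols."""
    return [Detection(o["label"], o["distance_m"]) for o in frame["objects"]]


def decide(detections: list) -> str:
    """Rule-based planner: it never sees the frame, only the symbols."""
    for d in detections:
        if d.label == "pedestrian" and d.distance_m < 30.0:
            return "brake"
    return "continue"


# Same physical scene both times; only the label from perception differs.
correct = {"objects": [{"label": "pedestrian", "distance_m": 12.0}]}
mislabeled = {"objects": [{"label": "plastic_bag", "distance_m": 12.0}]}

action_correct = decide(perceive(correct))
action_mislabeled = decide(perceive(mislabeled))
print(action_correct, action_mislabeled)
```

The planner's rules are identical in both runs. The outcome changes only because the first stage reported a different symbol, and the second stage has no way to check it.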

An example of the problem in the form of a joke:

Husband and wife were driving along and stopped at an intersection. The wife said to the husband, “See if there’s any cars coming in from the right, dear.”, and the husband replied “No…”. The wife stepped on the accelerator and drove right into an oncoming vehicle. After the dust settled, the husband managed to finish the sentence “… just an old LADA”.

So we’ve got a blind guy driving the car, and 7 guys from the Amazon rainforest, taught English out of a book, staring in fixed directions through a toilet roll middle and yelling what they see at him. “Blue thing, moving across, big, another thing with circles…”

That’s a pretty accurate description.

Though not all of the amazons are looking through a toilet roll. Some of them are listening through a horn and playing a squeaker, saying “echo long, echo short, echo long, echo short…”

And the guy behind the wheel, who has never seen anything in his life, has to understand what all of that means, without any first-hand experience. He’s not even allowed to experiment (no on-line learning) so he just has to go by the book. The book was taught to him by running through a million iterations of a simulation until he learned to make the correct actions by trial and error. Actually, the blind person doesn’t even know he’s driving a car.

Human stages of learning to drive.

0-6 months integrate sensory perceptions, begin to co-ordinate movements.

6m to 3y learn basic mobility and navigation in a 3 dimensional environment.

3y-5y apply mobility and navigation learning to piloting of low speed simple vehicles in low traffic environment, preferably open space (tricycles etc)

5-7y continue developing above while developing reasoning skills, knowing why rules are rules, consequences etc.

7y+ continue developing all of above, possible addition of higher speeds of bicycle and riding in light traffic with supervision. First exposure to formal rules of the road.

10+ possible independence on bicycle in low traffic areas

early teens, pretty much trusted on most roads on bicycle.

16 yo, begin formal study of road regulations, begin formal driving tuition <<< Where most devs think AI can start :-D

Afaik George Hotz’s comma.ai does everything in one net: camera/radar data goes in, driver output comes out. A lot of people don’t like it for that particular reason.

The reason why the sensing and thinking parts are separated is because you want to test them independently. You want to prove that the AI actually sees the stuff you’re showing it, so you can train the visual recognition algorithm and check its output.

Likewise for the decision-making AI, you have to be able to run it through millions of different scenarios without having to produce a life-like emulation of the real world for it. Otherwise the computer would have to process the training simulation in real time, so the process is accelerated greatly by just showing the AI the symbols that represent the situation.

This opens up the black box and allows the programmers to show that the car will behave in certain ways in certain situations, but it is severely limiting the AI to a feed-forward system, where the dumbest part of the whole is the limiting factor, and that happens to be at the very beginning at the input processing stage.

This is why all the self-driving cars are an attempt to cheat your way around the fundamental stupidity of computer vision. For example, Waymo and Chrysler use geofencing which limits the use of the autonomous feature to areas which are precisely 3D mapped in advance, so the car doesn’t need to understand what it sees: it is told what it should expect to see and what it means already, so then any difference pops out as clear as day.
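A rough sketch of the "told what to expect" idea, under heavy assumptions: the world is reduced to a coarse grid of occupied cells, the geofence is a plain rectangle, and the names `inside_geofence` and `unexpected_obstacles` are made up for illustration. Real systems use far richer 3D maps; this only shows why a prior map makes differences easy to spot.

```python
# A cell is an (x, y) index on a coarse grid; all data below is hypothetical.

def inside_geofence(cell, fence):
    """True if the cell lies inside the rectangular, pre-mapped service area."""
    (min_x, min_y), (max_x, max_y) = fence
    x, y = cell
    return min_x <= x <= max_x and min_y <= y <= max_y


def unexpected_obstacles(prior_map, live_scan):
    """Cells occupied in the live scan that the prior map did not predict."""
    return live_scan - prior_map


fence = ((0, 0), (10, 10))
prior_map = {(0, 3), (1, 3), (9, 3)}          # kerbs, poles, parked-in-map objects
live_scan = {(0, 3), (1, 3), (9, 3), (4, 5)}  # same scene plus one new thing

novel = unexpected_obstacles(prior_map, live_scan)
print(novel)                             # the one cell the map did not predict
print(inside_geofence((12, 5), fence))   # outside the mapped area
```

The car does not need to know what the new thing at `(4, 5)` is to know that something is there. Outside the fence there is no prior map to subtract, which is why the feature is simply switched off.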

You never know what it’s gonna latch on to and whether it’s going to be reasonable or not. For instance, when training AI to recognise a certain fish, it was found to be using human fingers as a model, because most pics of that fish had fishermen holding them.

Same could happen with car AI, you give it images of a busy commercial district and it starts recognising pedestrians by the similarly shaped shopping bags they are carrying, first person to cross in front of it without a shopping bag, and blam. Same if you did it in a university area with backpacks maybe. Okay so you make it seem to recognise a “normal” person shape, think it’s good then it only runs over 8+ month pregnant women, parents carrying toddlers on their shoulders, and crabbed over frail old people with walking frames.

So it’s a bit of a problem when you’ve got inputs, then mysterious spaghetti which is all the training data, neural connections etc and correlations, which is all organised you don’t know how, and outputs, which give the illusion of working correctly at the moment. It’s that quality assurance joke but a billion times more complex: https://twitter.com/brenankeller/status/1068615953989087232?lang=en

Uber also puts daylight between its business and legal issues from future testing incidents.

I was thinking the same. The new company appears to be a rat king, which is formed when the car companies try to shed the autonomous driving programs to get rid of the liability issues, and the project managers – who are well aware that the technology isn’t up to snuff, yet they keep pushing it anyways – all merge together into a ball of hype.

All I know is if one of my family members was killed by a self driving car, the CEO would be in my basement. Feel free to read into that.

It depends. Did it veer up onto a sidewalk and run them down? Did it plow through a red light, hitting them as they legally crossed at the crosswalk?

Or were they the type who think they are invincible and just step right into the road without looking? Did someone jump right out in front of it?

I think anyone who puts a self-driving car on the road that isn’t safe should be just as responsible as if they were driving the car themselves. But I also think that there are a lot of luddites out there and they will likely blame the self-driving car no matter what the situation was. That’s going to make it a long up-hill battle.

But I do hope that some time before I retire I can take back my hour/day commute time. It’s almost all expressway so maybe true, hands-off self driving will be a thing? Unfortunately nobody is starting a bus or train route that gets me where I need to go.

“Did someone jump right out in front of it?”

I heard that is why dashcams are so popular (required?) in Russia.

>I also think that there are a lot of luddites out there and they will likely blame the self-driving car no matter what

It doesn’t really take ludditism to make the same point. If we’re being sold the AI as practically infallible, then it better be exactly as advertised. The question becomes, how much is it allowed to err and in what terms? If the company can show that the computer made an error because of A and B, then the question is why wasn’t it programmed to take that into account? Or, if the error cannot be explained, the company has to admit that they don’t understand how their program works.

Either way, eventually you have to admit that this is the best it will be, because the problem exceeds the competence of the programmers, and the program will keep killing people.

In a sense, it’s better if the error was a random glitch than a systematic issue with the programming, because then the risk is distributed instead of certain.

That’s also the reason why human failure has a lower bar than for a computer program failure: computers will be consistent in making the same error and you’re guaranteed to get killed in the same sort of situation, whereas with a person behind the wheel you at least have a chance. Better to play russian roulette with a revolver than a glock.

Ummmmm ,,, won’t the bullet in the glock always be at the top of the magazine waiting for the next cocking? Revolver doesn’t do this.

Yes. That’s exactly the point. The program failure gives no-one any lucky breaks because it is perfectly deterministic: under certain conditions the car will kill you.

And after it has killed a few people the rest of us know how and when it does that and we can avoid it, unlike human drivers that can kill you anywhere, anytime and in millions of different ways.

If one of your family members was killed by a human driven car, would the driver also wind up in your basement?

And which scenario do you think is more probable?

It’s not a matter of probabilities. It’s about a machine that sometimes kills people because it has a flaw. We want that flaw to be fixed. If there are too many of those flaws, we want all of them fixed before the machine will be allowed to operate again.

Human-driven vehicles kill people every day. At least we can fix the flaws in autonomous vehicles.

That’s a tall assumption.

If a human driver kills someone, they end up in Her Majesty’s basement (unless, of course, they drive on the wrong side of the road and then make a false claim of diplomatic immunity to flee the country).

Then shouldn’t the CEO also face Her Majesty’s justice, instead of winding up in Mike’s basement?

For some reason Mike seems more concerned about a family member being struck by an autonomous vehicle and less concerned about a family member being struck by a human-driven vehicle.

It goes back to the russian roulette argument: human failure is stochastic, computer failure is deterministic. Getting killed by bad luck is more acceptable than getting killed by a machine with a faulty programming, even if the overall rate of such failures were identical.

This is because the programming error is avoidable in principle, and ignoring this is willful negligence, so using a program you know to be faulty to drive people around is a moral hazard. If we accept that there will be faults in the program, that we could fix but don’t because it would cost too much, the self-driving car company literally gets away with murder. People driving themselves take their own risks, and are naturally responsible for the risks they cause to others.

(A moral hazard: “any situation in which one person makes the decision about how much risk to take, while someone else bears the cost if things go badly.”)

From what I understand of these not-a-taxi ride companies like Uber and Lyft this seems kind of pointless. They pay their human workers so little that after you subtract maintenance and car value depreciation with mileage the driver might actually lose money!

Why would they want to automate that?

Or has that changed in the last few years?

Exactly because of that. The drivers require you to pay them a net income or they just drop out, or the quality of service goes way down.

Pre-pandemic, I got the impression ride-share drivers were doing it as a side job, not full time employment. But, then again, a man flipping burgers seems to think he should be paid enough to support a family, buy a house, and everything else. Not sure when part time, entry-level, minimum wage jobs became career choices.

They did that at the point when we started subsidizing people to take credit as welfare, especially for housing, which created labor mobility traps. People are stuck in neighborhoods and areas without any real jobs, so they’re forced to do menial services to keep up with the payments.

When considering this issue I agree that costs would be similar. However, when an all-electric fleet is considered, it changes the numbers drastically. The cost of energy is about half when comparing the two, and of course prices will vary depending on where you are located. Here is a link to a chart where you can check your state’s statistics.

https://www.energy.gov/articles/egallon-how-much-cheaper-it-drive-electricity

The other big factor is the maintenance; again the electric vehicle comes out ahead simply by virtue of the reduced number of parts required to build it. This is where the comparisons become quite subjective; however, I am not even going to attempt to unravel that ball of yarn but give you a simple answer. When comparing the drivetrains alone the Traditional (ICE) vehicle has about 10,000 parts where an Electric vehicle has about 20 parts. I know you want to tell me about this or that consideration, but I just want to make this simple and uncomplicated, so save it for someone else.

Finally the Driving Software itself and again there will be all kinds of factors & opinions but I’m going to base my answer on “real world miles driven”. So the nod here goes to Tesla with no others even close to the numbers that Tesla has accumulated.

As to the eventual winner in this space I think that Elon Musk, in particular his view on manufacturing, and the team he has put together will be the leader in this space by a country mile, that is of course unless another disrupter, if you will an Elon Musk 2.0 comes along.

That depends on whether you actually believe what Musk is saying. He has a habit of selling two and delivering half – the trick is just distracting the customers so they forget to check the difference between the original offer and the delivery invoice.

> the Traditional (ICE) vehicle has about 10,000 parts where an Electric vehicle has about 20 parts.

What do you count as a “part”? The Tesla battery alone has some 8,000 cells in it, each with a tab weld that can fail and lead to a fire that destroys the car in seconds. Think about that.

Society isn’t ready for self-driving casualties, even if the cars do better than average human drivers. Our tolerance for accidents is not a cold rational statistical calculation. If and when Tesla rolls out their FSD program, it will only take a few bizarre accidents for the program to get cancelled.

There’s reasons for that.

There’s the odd bad driver, but most of us are doing as best we can not to kill people.

Computers hold no such aim, and between programmers, managers, bean-counters, and office politics, there’s any number of reasons why safety will not be #1 on the list.

We also have no mechanism to find and punish those who make such decisions in companies – particularly as it’s usually a shared culpability. Perhaps everyone in the automated car team should get a week’s jail time every time one kills someone?

If you create punishment for developers, they will switch jobs, and the work won’t get done.

This is the same point as the ancient Romans who made the architect stand under the arch they designed when the scaffolding was removed.

The arch that won’t stand won’t get built. That’s exactly as it should be.

– Sounds like a great plan to me!

“Society isn’t ready for self-driving casualties, ”

“First, kill all the lawyers”

-Shakespeare

Please note that Tesla’s “Full Self Driving” isn’t at all fully self driving. It requires human supervision at all times, making it level 2. I think they’re talking about it soon being able to self-drive, under certain circumstances, with a human deciding when to pull it out of self-drive mode, which is level 3.

When normal (non-marketing) humans say “self-driving” they mean level 4 — driving most of the time, and able to hand control back to the human when it gets hairy. Adding in “full” makes it sound like level 5 — no human involvement necessary.

“Autopilot” and “Full Self Driving” aren’t what they say they are. All Tesla fatalities are, according to the courts and the fine print, the drivers’ fault, because the accidents occurred while a human was using a driver assistance feature. (Yes, they have their cake and eat it too.)

That’s a common trend with technology and safety. In the end, the blame is always rolled onto the person and the accident chalked up as “human error”, which the marketing department then turns around and uses to say that the technology is safe.

Then there’s the other tricks of statistics, such as Tesla saying “Look, when the Autopilot is on we see half as many accidents per mile”, but the Autopilot only functions along the easy bits where you can drive like a zombie anyways and not get killed. The statistics for the human drivers include everything.

The Autopilot only just gained the ability to make a left turn at an intersection, under driver supervision. Intersections are where most crashes happen in an urban setting. Previously, so far as the statistics are concerned, it was only driving the straight bits and at most making a lane change. If you compared only the bits where both could operate, you would see fewer accidents made by people, because the Autopilot still does some incredibly stupid things, like driving into lane dividers.
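To show how that kind of comparison can mislead, here is a small worked example. The crash counts and mileages are invented purely for illustration and are not Tesla's or anyone else's real figures; the helper `rate_per_million` is likewise made up for this sketch.

```python
def rate_per_million(crashes, miles_millions):
    """Crashes per million miles driven."""
    return crashes / miles_millions


# Hypothetical (crashes, millions of miles) per road type. Not real data.
human = {"highway": (50, 100.0), "city": (350, 100.0)}
autopilot = {"highway": (100, 100.0)}  # only ever engaged on the easy bits

human_overall = rate_per_million(
    sum(crashes for crashes, _ in human.values()),
    sum(miles for _, miles in human.values()),
)
autopilot_overall = rate_per_million(*autopilot["highway"])
human_highway = rate_per_million(*human["highway"])

print(human_overall)      # 2.0 -> the "everything" number for people
print(autopilot_overall)  # 1.0 -> "half as many accidents per mile"
print(human_highway)      # 0.5 -> like-for-like, people do better here
```

With these made-up numbers the headline claim is true and still tells you nothing: the automated system looks twice as safe overall, yet is twice as bad on the only kind of road where both were actually driving.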

Yep, an artfully constructed & funded stop-loss.