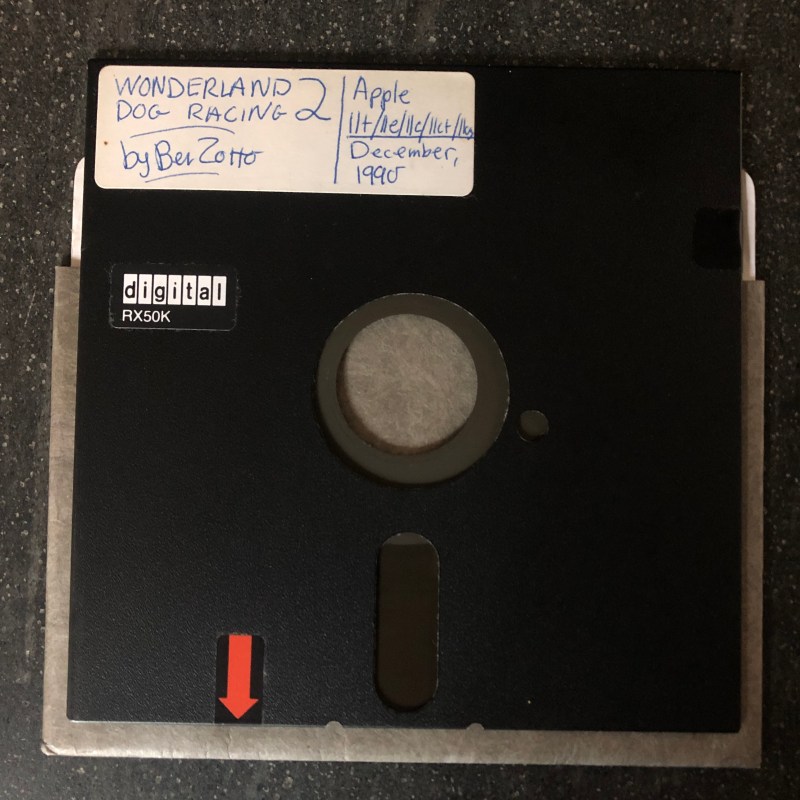

Back in 1990 [Benjamin Zotto] wrote – while in elementary school – a dog racing game called Wonderland 2. The BASIC source code and images for the game were stored on a single ProDOS formatted, soft-sectored 5.25″ floppy disk. Fast-forward thirty years to today and [Benjamin] found to his dismay that ProDOS could no longer read the floppy, giving an I/O error. Not deterred, he set about to recover the data, as documented in this Twitter thread.

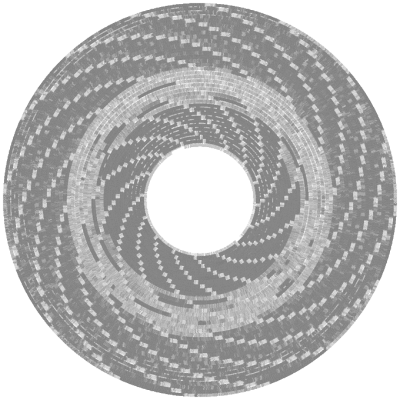

The gist of the story is that the floppy disk’s surface could still be scanned with help from the aptly named Applesauce Floppy Drive Controller, which got the following visualization of the magnetic patterns on the disk surface:

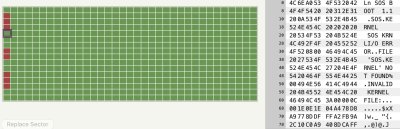

This data could then be analyzed sector by sector, with the bad sectors and the cause for ProDOS flaking out with its reading attempts here marked in red.

Checking the data recovered so far confirmed that it was a ProDOS disk. It also confirmed that the sector containing the directory listing was shot. This required diving into the technical reference manual for ProDOS and its filesystem to figure out how to reconstruct the directory layout. This required figuring out the offsets and sizes of the files, assisted by knowing what was likely on the disk, and having some bits and pieces of the original volume listing still intact. This allowed for the directory volume to be rebuilt, one byte at a time.

At the end of that arduous and highly educational journey success waited, and [Benjamin] was once again able to relive his memories of 1990s BASIC and hand-drawn bitmap graphics.

Nice visualisation. We still have some 8″ floppies to read. This will come handy.

Wow! Cool story with a happy ending!

Last weekend I was searching in my parent’s garage for my cassette tapes of a Z80 editor/assembler/debugger I made in the same year, 1990. I found the pages where I wrote out the opcodes and their mappings, but no tapes. May have a similar problem if/when I ever find them.

There are a lot of older formats that become harder and harder to read/recover. For some formats, like this one, solutions exist. There are a lot of other formats (like old 8″ disks and tapes) without this luxury.

I’ve been wondering if it would be possible to make a magnetic 2d scanner that creates a high-res map of any magnetic media, regardless of the format. Software could then be written to convert those scans to disk images for various formats.

I fully expect it to be severely density limited and only work on old floppy and tape formats, but being able to have a generic hardware solution to digitize old magnetic media would be pretty useful.

I bet the GMR read heads from hard drives would be an interesting place to start for a high resolution scan. Maybe cheat the whole fast floating on air thing by using something more viscous like silicone oil. Or for thin stuff like tape mount 2 read heads at an angle on opposite sides to get a differential signal over the intersection. Perhaps the signal could be enhanced by using a magnetically permeable material like used in vertical recording write/read heads. Oh and don’t forget magneto-optics. Maybe immerse the tape/disk in olive oil and use the Kerr effect to read it with a polarized CD/DVD laser. Seems like there is lots of room for interesting exploration.

Some monopole dust would make short work of it. There’s a shop right next door at Proxima that has both N-dust *and* S-dust, if you can believe that. I know, right, what if some kid un-corks both kinds at the same time, what a mess! Anyway, that’d show you where your 1’s are. Or the 0’s, but that’s easy enough to account for.

I basically had this problem when trying to recover data from an old 14″ 5-platter disk pack. For a lot of these devices, the read heads need to literally ‘fly’ over the media, and you’re measuring the voltage induced in a coil from the change in flux (rather than reading an absolute magnetic field value). Intercepting the analog signal from the read-head and over-sampling it with modern electronics (as well as stepping it radially in/out using my own electronics) wound up being a much more effective solution than either trying to build my own magnetic scanner or getting the entire original disk drive working: http://www.chrisfenton.com/cray-1-digital-archeology/

I seem to remember standing next to a DEC PDP 11/70 disk pack head crash just after mounting in the late 70’s. I cant remember the size, though 14″ 5 platter sounds about right – it was large, they didn’t call them washing machines for nothing….

That’s something i’ve also dreamt to be able to do! I think i’ve already post a question about this idea here once or twice, without much success…

I’m thinking of being able to digitize either digital but also analog medium, like various kind of video tape formats without having to rely on finding working very old and sometimes very rare players.

Back in the day there used to be many utilities that would scan all the sectors for file blocks which contain links to preceding and following blocks. Once these are known the original directory tables can be rebuilt bar any corrupted unlinked blocks

This is really cool.

I reconstructed corrupt disks tims ago directly on an Apple II with Beagle Bros. Bag of Tricks and Nibbler.

Great job!

I have had similar success using the excellent FluxEngine since I read about it here on Hackaday:

https://hackaday.com/2019/02/19/flux-engine-reads-floppies/

For £10 in hardware and an old 3.5″ PC floppy drive from the bits box I was able to recover a bunch of my old floppies.

FluxEngine also supports 5.25″ drives but sadly I chucked my drive many years ago. I still have a load of 5.25″ Apple II disks but it never occurred to me I’d be able to read them in the future with a PC drive.

FluxEngine is here: https://github.com/davidgiven/fluxengine

Note: I’m not affiliated with the Flux Engine, I just use it and lurk on the GitHub page. David Given who created the project seems like a smart and all round nice bloke too.

I can confirm the ease of use, great results, and lack of expense of the FluxEngine. And the nice bloke status of David Given, for that matter.

I also discovered that a number of my old 3.5″ diskettes have had the metal hub come loose from the disk. If that happens, there’s not really much else you can do short of professional data recovery.

Applesauce hardware is by far too expensive!

The Kryoflux tool ist much more flexible and can be connected nearly every type of floppy drive.

https://www.kryoflux.com/

Even cheaper is the Greaseweazle: https://github.com/keirf/Greaseweazle

You can hack one of those up from a blue pill board and a 34 pin IDC cable. For the really brave you could just wire one directly to the edge connector of a floppy.

I’d love to see a re-release or upgraded version of the Central Point Option Board. It connected inline between a PCs floppy controller and the floppy drive. With the DOS software it was supposedly able to copy any disk, except possibly Amiga (I think this predated Amiga) and 400/800K Macintosh. Dunno if it could handle Commodore’s disks that didn’t use the timing hole.

That’s pretty much what a greaseweazle is–but it’s a USB device. It acts as a floppy drive controller and just digitizes the data stream coming off the drive. It can also do the opposite and recreate bitstreams to write to the drive.

But you can read/write Amiga, Macintosh, C64, etc. as well. Because bits are bits, the boards don’t care about the meaning–that’s left to other software like the previously mentioned FluxEngine (@Lanroth)

I dove down the floppy recovery rabbit hole this last summer. It’s pretty darn deep, too. I managed to find my way back before I got too lost.

“Dunno if it could handle Commodore’s disks that didn’t use the timing hole.”

Well, Apple ][ disks/drives/controllers didn’t use the timing hole either, so I bet that’s not an issue. :-)

I built a device that not only captures the digital signal from the disk drive, but also the analog signal from the read heads. So far the analog signal hasn’t been useful, but it might in the future. http://www.sardis-technologies.com/ufdr/ufdr.htm

kryoflux is toxic. betamaxthetape 19 days ago :

“Jason Scott (textfiles on HN) explained[1] the main reasons that make several people (including myself) uncomfortable with KyroFlux.

https://old.reddit.com/r/vintagecomputing/comments/buyj9f/til_that_computer_archivists_image_the_magnetic/epjwsut/ ”

Thats Jason Scott of Archive Team.

Read the license https://www.kryoflux.com/download/LICENCE.txt They claim rights to things you can and cannot do post sale. This is a “good” version of the license, previously they also claimed _copyrights over anything you ripped with their tools_.

That whole scene is full of a sort of people I like to call ‘gatekeepers’. They refuse any help or even a hint that someone can do better or are equal to what they can do. They blow people off and never look back. Then years later wonder why no one is helping out. Even the *slightest* of suggestions is met with a level of contempt that is borderline abusive. The people who get things done suffer these gatekeepers and tolerate them because they have wiggled themselves into some odd position of power. I read the pull requests and comments on them and read the posts and scream at my screen you would lose your crap if someone treated you even slightly like that.

I love that the data was stored on digital Equipment RX50K floppy media. The RX50 drives were quad density and used a proprietary format to store 400KB. That media was not easy to obtain – wonder if the author had family working for Digital or if they went to a school that used the somewhat odd digital PC’s (the rainbow, etc)? I can’t help but wonder if the original proprietary formatting (though overwritten on the Apple) was part of the cause for the data loss over time. Unless the floppy had been bulk erased, I’m sure the original formatting was causing crosstalk where ever the tracks overlapped (Apple vs digital).

On an Apple Disk][ the nibbles are RLL (Run Length Limited Group Codes) encoded in a way that is error correcting – sometimes – in ProDOS. Actually it is because the hardware is very simple and everything is done in software, allowing many formats, copy protection schemes, and methods of handling errors. A change in the nibble translation scheme software is what allowed Apple drives to go from 140K to 160K. Every drive is a little different and the software calibrates by writing and reading a test track to find the length of the dead spaces and adjust the buffers. This means the drive motors did not have to be precisely controlled for speed from drive to drive. https://www.metafilter.com/114962/jmp-TRIGSPIKES#4301468

Nice catch. (OP here.) You nailed it– my dad was a DEC lifer and he had a Rainbow 100 at home for work. It was no fun at all– there were no games on it, and there weren’t even graphics! Terrible. So eventually we got the Apple, and it was easy to just filch and reformat his old Rainbow disks, which he just got from the office I guess. And why wouldn’t you think, as a kid, that all these 5.25″ disks looked exactly the same so they must be exactly the same? After all, it did seem to work! I suspect you’re on to something around the formatting differences becoming a latent threat in eventual data corruption.

There was maybe also a factor in that they were well used media maybe before you used them.

Do you remember the Maxell disks were $20 a box? That was a lot of money at the end to the 70’s. The DS,DD ones were anyway. I remember as a teenager I had to cut quite a few lawns to afford a box.

I would think things like disk interleave for the sectors would be a major problem when reading a bit wise recovery given I bet many manufacturers used their own, although on things like an 8-inch disk the physical size of the thing I should have helped on reducing data loss given they didn’t have a huge capacity to begin with. If I remember the ones I used had 72 tracks with 32 sectors per track at 256 bytes per sector, double sided of course – not too big.

There used to be a CPM based machine portable called a Telcon Zorba. It had a built in 5 1/4 drive that could read like 30+ formats from other machines. It was the cheapest way to convert between two machine formats without having to wire transfer files. It wasn’t until the PC era that things like Laplink became a quick and easy way to move files, although there were rs232 comms for doing 9600 baud transfer on those machines using XMODEM. Not very reliable and labor intensive.

But the disks even then didn’t store data very reliably. Apple II machines were notorious for losing disks due to read head alignment if you bumped the disk drive or moved it a lot between locations, and given they were just coming out with copy protected formats – it made it harder. I remember my single disk drive cost $500 on the Bell & Howell Darth Vader Apple II Plus, WAY better than tape though.

Heck, elective dental surgery was better than tape

And tape recovery is its own mess. I think a recent VCFed conference had someone who was recovering data from wide tape, but for microcomputers it’s almost always analog cassette tape.

Back in the day I made a little TRS-80 (Model I) program that basically streamed from tape directly to the screen. It was certainly interesting to see the raw data, as well as the effects of tweaking the volume level.

About the time that I was using a Catweasel to read disks, I also tried doing some tape decoder code. Model I tapes were used one pulse vs two pulses, but I didn’t come up with good enough math to handle some of the level variations over time. Other formats used sine waves and might be easier to decode.

It shouldn’t be too hard to develop a decoder for PulseView to decode the various magnetic tape encodings:

https://sigrok.org/wiki/PulseView

using one of the existing decoders as a starting point:

https://sigrok.org/wiki/Protocol_decoders

I wrote an FM/MFM decoder for PulseView.

It seems to me they had plans in one of the electronic magazines for a small audio box that you could build that used a Schmitt trigger to clean up the pulses coming from the cassette player with some amplification built in too.

Yah I’ve seen that. The one I saw didn’t sound terribly useful to anything not using Tarbell or Kansas City standards though.

“Built”, I still hate no edit function. Yeah, but it did clean up the signal enough on things like the TRS-80 and Apple II to recover programs in many cases, although technically these weren’t digital recordings as opposed to analog I think. Disk drive was the way to go on apple if you could afford one. I wonder what that $500 drive would cost in today’s money?

An interesting experiment would be to take heads from a 720K or 1.2M 5.25″ drive, mount them in an 8″ drive, and use the same track density as the 5.25″ along with variable numbers of sectors per track – to see how much data at double density recording can be put on an 8″ floppy.

If HD media film was ever made wide enough, the next step would be cutting 8″ disks from it and bumping up to HD.

Maybe, but didn’t the disk speed have something to do with encoding? I know CD’s & DVD’s spin at different rates – but I can’t remember if floppies do. I would think that the 8-inch disks wouldn’t have the density to store higher bit rates/encoding from a smaller more concise read/write head. But I could be talking out my rear – it’s been a while since I worked on that stuff.

5.25 floppies spun at 300, but 8″ ones spun at 360. But if, for 1-time data recovery operations, you’re treating the tracks essentially as an analog recording that you’re going to use DSP techniques to decode, wouldn’t think spin speed would matter a whole lot.

Didn’t 1.2meg rotate at 360?

The Macintosh used variable speed rotation.

So I’m not totally crazy and I did live during those years? Nice to know! The things stuck in my head about OS’s that don’t exist anymore and hardware that only exists in Museums, frontal lobotomy? Ignorance may be bliss….

If I remember correctly, when Microsoft threw out the millions of diskette copies of Windows in 5 1/4 inch, they bought them overseas and used the cut up floppies for the backs of credit cards, cutting the disks into strips. This might be a rumor though

Modern bit-wise disk controllers record the time between transitions, so the only thing that really matters is having the correct read head that isn’t too far out of alignment.

And CP/M formats were usually different only in terms of a few parameters like density, sectors per track, sides, and directory track. The only official format was 8″ SS SD.

Twitter is probably the worst way to document anything on the internet.

Idiocy doesn’t need to be challenged to breed a better idiot, they’ll be holding up hand scrawl on tik-tok next.

Have to agree. Why! Use a decent forum – there are plenty many times better than twitter.

I’m the OP– it was my thread. A couple thoughts here. Like all mediums, Twitter has pros and cons to it. I was using it for the first time in this way, as a deliberate way of testing out how it would feel to use a lightweight play by play format. I usually write medium-to-longform posts on Medium about technology and design and urban history, and wanted to try something new.

Turns out, Twitter is actually *great* for this: It’s trivial to add new tweets, trivial to paste or upload images along the way so it reduces friction to just getting out the play by play of a process you’re doing without drafting and editing and adjusting images and re-editing, etc. It also, plainly, went viral in a small way (I don’t have many followers there and didn’t expect an audience whatsoever) which is a testament to how interesting niche work can reach interested people very quickly and effectively.

Finally re forums, as much as I love the communities that exists on domain-specific forums, they are certainly small subsets of the larger internet interest in that topic. I myself don’t participate in any retro tech or apple forums, for example, and I was the one who did the thread. Had someone else done something similar I’d have been interested but would never have seen it. ¯\_(ツ)_/¯

Twitter has an impermanence to it, not just in spirit, but in the mechanics of how people clean up their tweets and accounts go fallow or disappear over time. So in that sense, John above is right that it’s not a great way of *documenting*. I agree with that, and some day my thread on this too may disappear. But my goal out of the gate wasn’t documentation– when it is I always use more formal means– and there are third party solutions like that thread unroll service that people used to capture the text and images in a single page, which seems to me a nice way around the impermanence inherent in the medium.

Back in ’08 or so I got a Catweasel 4 (PCI) and got it working in an old Linux box. After imaging the hundred or so of my old TRS-80 disks, I started reading other formats. Atari and 5 1/4″ CP/M disks were easy enough, since they used a standard FDC chip. Then I started on Apple II. 16-sector wasn’t too bad, but my 13-sector decoder was a mess because of how the bits were arranged. Then I tried reading C-64 disks and gained more appreciation for the work of Woz. Apple’s GCR had unique data mark nibbles, but C64 had no such luxury, you just had to kind of guess where the data started. Then I got tired of the whole thing, since my main objective was done, and I wasn’t familiar enough with the others to do much with them.

I still have a box of 8″ disks to read, some CP/M, some DEC, some Tandy, and assorted drives squirreled away, but it’s not quite trivial to hook them up. One of these days I’ll get a pinout converter board. And the Linux box is in the front of a closet, so at least I won’t have to dig for it when I’m ready.

And I love that visualization of the disk status. I’m going to guess it can handle the 1/4 and 1/2 track stepping. There were some weird tricks done with those Apple II drives.

I had one of those piggy back boards when Apple II went from 13 sector to 16 sector, you could flip a hardware switch and get the new ROM on the piggy back, or the old, one of those.

I transferred all my 8″ disks from a Cado via comm I wrote and have them here in storage on my PC, about 200 applications, mostly general business stuff. I was writing a machine emulator for that system years back, but I must confess I’m not smart enough anymore to finish it (8080 assembler), I did get it to boot a system disk though.

Those machines were 4 user (3 MicroBee terminals and a printer) with a single 8080 that time sliced multi-user. They used a really efficient disk hash for storing data, one of the fastest systems of that time where pulling data records were concerned, at least in the mini market. This was a machine built in 1976, I tried my stuff as a lark in the 90’s but programmed them professionally in the early to mid 80’s .

I have incredibly mixed feelings about this. I agree with you in that some individuals associated with archiving are abrasive beyond all reason; that said, I think that for the most part, their intent is good/non-malicious.

That said: I do agree with the opinion that Kryoflux should be avoided at any cost. Their terms, as I understand them, are altogether unreasonable. FluxEngine, Greaseweazle, and Applesauce are excellent alternatives (IMO), depending on your needs.

A lot of IT people, not just the data preservation folks are as mentioned above. My theory is because at the end of the day, the finished product is not a good representation of the work & skill required. When a building goes up, architects, construction, etc can all point and say “this is what I (we) did…” The quality of work sustains the ego.

With data, everything is abstract and people with less of an ego start to hoard knowledge…. “Oh the power” It flufs themselves up.

Personally, I have found that a simple thank you for all your work & time on … goes a long way in dissolving all the bullshit. So does recognition for everyone’s help. Other times, just send them a pizza with a thanks note. From that forward should be good.