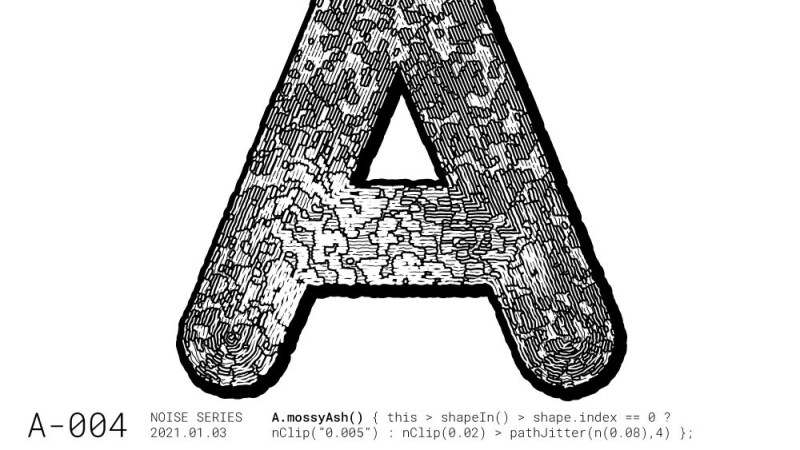

Perlin noise is best explained in visual terms: if a 2D slice of truly random noise looks like even and harsh static, then a random 2D slice of Perlin noise will have a natural-looking blotchy structure, with smooth gradients. [Jacob Stanton] used Perlin noise as the starting point for creating some interesting generative vector art that shows off all kinds of different visuals. [Jacob] found that his results often exhibited a natural quality, with the visuals evoking a sense of things like moss, scales, hills, fur, and “other things too strange to describe.”

The art project [Jacob] created from it all is a series of posters showcasing some of the more striking examples, each of which displays an “A” modified in a different way. A few are shown here, and a collection of other results is also available.

Perlin noise was created by Ken Perlin while working on the original Tron movie in the early 80s, and came from a frustration with the look of computer generated imagery of the time. His work had a tremendous and lasting impact, and was instrumental to artists creating more natural-looking textures. Processing has a Perlin noise function, which was in fact [Jacob]’s starting point for this whole project.
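To make the "static versus smooth blotches" contrast concrete, here is a minimal one-dimensional sketch of gradient noise in the Perlin style, written in Python rather than Processing (where the built-in is `noise()`). It is not [Jacob]'s code and not Processing's implementation. The function names, the table size of 256, and the seeds are all assumptions chosen for illustration. The idea is to place a random slope at every integer position and blend between neighbouring slopes with a smooth fade curve, so that nearby inputs give nearby outputs.

```python
import math
import random


def make_gradients(n, seed=0):
    """Build a table of n random slopes in [-1, 1] (hypothetical helper)."""
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]


def fade(t):
    """Quintic smoothing curve 6t^5 - 15t^4 + 10t^3, as used in Perlin's improved noise."""
    return t * t * t * (t * (t * 6 - 15) + 10)


def gradient_noise_1d(x, gradients):
    """Return smooth 1D gradient noise at position x (zero at every integer)."""
    n = len(gradients)
    i0 = math.floor(x)          # lattice point to the left of x
    t = x - i0                  # fractional position inside the cell, 0 <= t < 1
    g0 = gradients[i0 % n]      # slope at the left lattice point
    g1 = gradients[(i0 + 1) % n]  # slope at the right lattice point
    v0 = g0 * t                 # left slope extrapolated to x
    v1 = g1 * (t - 1.0)         # right slope extrapolated to x
    s = fade(t)
    return v0 + s * (v1 - v0)   # smooth blend between the two


def mean_step(values):
    """Average absolute jump between consecutive samples."""
    return sum(abs(b - a) for a, b in zip(values, values[1:])) / (len(values) - 1)


# Compare smooth noise against plain random "static".
gradients = make_gradients(256, seed=42)
smooth = [gradient_noise_1d(i * 0.05, gradients) for i in range(200)]

rng = random.Random(42)
static = [rng.uniform(-1.0, 1.0) for _ in range(200)]

print("mean step, gradient noise:", mean_step(smooth))
print("mean step, random static: ", mean_step(static))
```

The jump between neighbouring samples should come out far smaller for the gradient noise than for the static, which is the 1D version of the smooth, blotchy look described above. The 2D case works the same way, with a gradient vector at each grid corner and a blend along both axes.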

Noise, after all, is a wide and varied term. From making generative art to a cone of silence for smart speakers, it has many practical and artistic applications.

So what was Jacob’s end point for this whole project? Open source code? Also it’s pretty clear what is more likely censored rather than simply not described.

Please elaborate?

Processing is neat but don’t forget ImageMagick either. Not owned by Hasbro, plus it’s open source.

http://www.fmwconcepts.com/imagemagick/perlin/index.php

Shockingly (or not), it can also create an image composed of Perlin-type noise.

“Not owned by Hasbro…”

What’s this all about?

I want those as TTF files :) animated fonts would be cool as well.

That is beautiful!

The Perlin function reminds me of the “Bozo” texture option in POV-Ray (which might have been an implementation of Perlin), which was described as: “Point close together will have a value that is close together. Points far away will have a value that has a random distance”

Lush, do this for a Vader head and you’ve pretty much got your Etsy store set up.

You will get far more mileage out of Blender 3D: just add procedural noise to your geometry and/or displacement maps, then render with a custom shader. And don’t give me the “but but vector vs raster” argument, because that isn’t an issue either, as there are output options along those lines too, and it is moot when you can render to very high raster resolutions anyway, which is what the RIP does in prepress for print, duh. Vector files were for moving data around when the final data was so huge (by the standards of the day) that sending the pre-RIPed file was infeasible, even if it was actually the one way to ensure that the end result was exactly what you wanted. Been there, done that; I’ve taken machines worth more than your house and made them fail from the workload. Anyway, back to 2021… With procedural 3D graphics, that is your vector source anyway, and if you are open source you can send that, or you RIP it to raster and send those pixels customised to the exact endpoint, print or display, or even set up a service to do it on demand on a cloud server. Never forget that it is important to throw away all of your technical traditions that were evolved out of the need to compromise.