Augmented reality (AR) and natural gesture input provide a tantalizing glimpse at what human-computer interfaces may look like in the future, but at this point, the technology hasn’t seen much adoption within the open source community. Though to be fair, it seems like the big commercial players aren’t faring much better so far. You could make the case that the biggest roadblock, beyond the general lack of software this early in the game, is access to an open and affordable augmented reality headset.

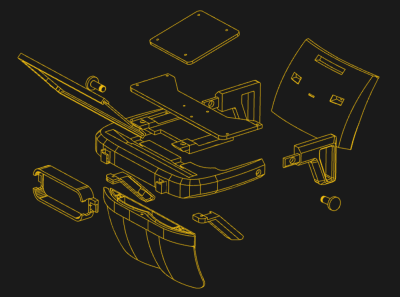

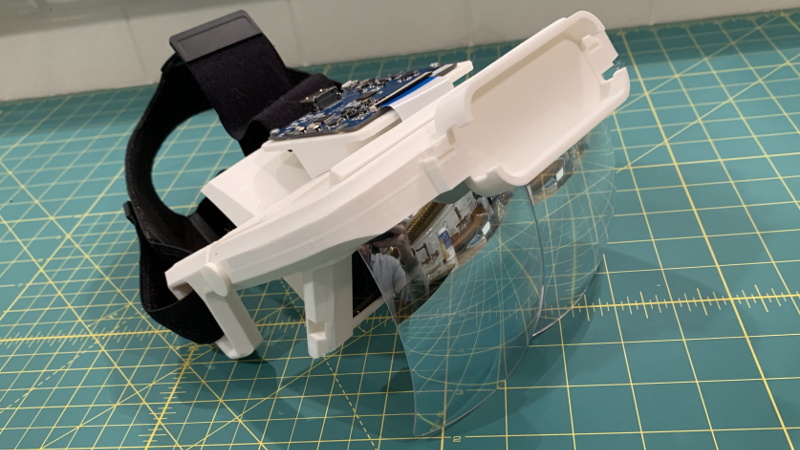

Which is precisely why [Graham Atlee] has developed the Triton. This Creative Commons licensed headset combines commercial off-the-shelf components with 3D printed parts to provide a capable AR experience at a hacker-friendly price. By printing your own parts and ordering the components from AliExpress, basic AR functionality should cost you $150 to $200 USD. If you want to add gesture support you’ll need to add a Leap Motion to your bill of materials, but even still, it’s a solid deal.

The trick here is that [Graham] is using the reflectors from a surprisingly cheap AR headset designed to work with a smartphone. By combining these mass produced optics with a six inch 1440 x 2560 LCD panel inside of the Triton’s 3D printed structure, projecting high quality images over the user’s field of view is far simpler than you might think.

If you want to use it as a development platform for gesture interfaces you’ll want to install a Leap Motion in the specifically designed socket in the front, but otherwise, all you need to do is plug in an HDMI video source. That could be anything from a low-power wearable to a high-end gaming computer, depending on what your goals are.

[Graham] has not only provided the STLs for all the 3D printed parts and a bill of materials, but he’s also done a fantastic job of documenting the build process with a step-by-step guide. This isn’t some theoretical creation; you could order the parts right now and start building your very own Triton. If you’re looking for software, he’s also selling a Windows-based “Triton AR Launcher” for the princely sum of $4.99 that looks pretty slick, but it’s absolutely not required to use the hardware.

Of course, plenty of people are more than happy to stick with the traditional keyboard and monitor setup. It’s hard to say if wearable displays and gesture interfaces will really become the norm, or if they’re better left to science fiction. But either way, we’re happy to see affordable open source platforms for experimenting with this cutting edge technology. On the off chance any of them become the standard in the coming decades, we’d hate to be stuck in some inescapable walled garden because nobody developed any open alternatives.

Nice! I’ve got one of those headsets on my desk but don’t currently have a phone that fits in the original holder, so having a starting point for a custom mount instead is awesome.

Well, nice project using the classic birdbath optics concept but this isn’t really an augmented reality headset – the article neglects to mention that this has *NO* position tracking at all, only hand tracking using the forehead mounted Leap Motion.

Which is cool and probably enough for what the author wants to do with it – but if you can’t really place overlays over real world objects (with the exception of the user’s hands) it is not AR but only a souped up HUD (or as it is also known – “smartglasses”). An image floating in front of the user is not automatically augmented reality.

And getting the tracking precise and fast enough to permit the overlays is a huge task (both software and hardware) unless you want to use markers (cheap and simple, but a pain to use) or stick something like the HTC tracker “puck” on top and use the Lighthouse technology from Valve (expensive, and it works only in a prepared space).

Although I see what you mean, I don’t agree. The video with the Leap Motion controller clearly shows an augmented reality relative to the hands. You could build a Minority Report style interface around that ;)

Other AR scenarios with head tracking might even be possible by using a smartphone as the image source, attached to your head of course, perhaps with a holder at the back of your head?

Why would you think position tracking is even necessary outside of a cheap camera and an accelerometer? The overlay has to be redrawn each frame and the accelerometer would only be useful for speculative motion. Valve’s system is designed to help track the user in a relative, virtual space and wouldn’t be very useful in dealing with real space outside of re-creating real space in a virtual environment for easier interaction. It would seem like an easier choice would be to use something like machine learning algorithms for edge detection, classification, and object recognition and overlay that data with virtual objects for the illusion. Outside of a few extra parts, which were already summed up in another reply, you’re talking mostly software when this guy is tackling cheap hardware problems, a significant barrier to entry to AR. Stop complaining that this isn’t “real AR” because it doesn’t look like that Florida company’s fake demos and get to expanding what’s being built here to be accessible to other hackers that can continue to iterate until some other company can button it all up in a nice package for the end user without access to a 3D printer.