Unimpressed by DIY wearables powered by dinky microcontrollers, [Teemu Laurila] has been working on a 3D printed head-mounted computer that puts a full-fledged Linux desktop in your field of view. It might not be as slim and ergonomic as Google Glass, but it more than makes up for it in terms of raw potential.

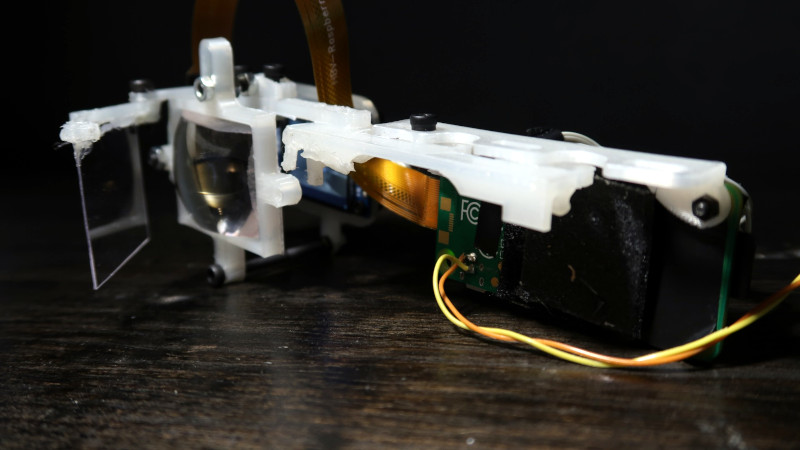

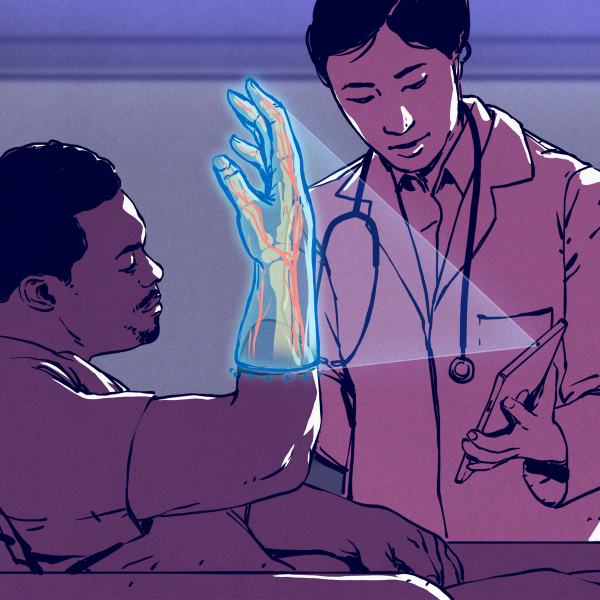

Featuring an overclocked Raspberry Pi Zero W, an ST7789VW 240×240 IPS display running at 60 Hz, and a front-mounted camera, the wearable makes a great low-cost platform for augmented reality experiments. [Teemu] has already put together an impressive hand tracking demonstration that can pick out the position of all ten fingers in near real-time. The processing has to be done on his desktop computer as the Zero isn’t quite up to the task, but as you can see in the video below, the whole thing works pretty well.
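To put the display numbers in perspective, here is a rough estimate of how much pixel data a 240×240 panel needs at 60 Hz. This is a back-of-the-envelope sketch, not a measurement from [Teemu]’s build. It assumes 16-bit RGB565 pixels, full-frame redraws, and a single-lane serial (SPI) link, as hobby ST7789 modules commonly use, and it ignores command and addressing overhead.

```python
# Back-of-the-envelope framebuffer bandwidth for a 240x240 panel at 60 Hz.
# Assumptions (not taken from the project): RGB565 at 16 bits per pixel,
# full-frame redraws, no partial-window updates, no protocol overhead.

WIDTH = 240
HEIGHT = 240
BITS_PER_PIXEL = 16   # RGB565; the controller also supports other color depths
FRAME_RATE_HZ = 60


def frame_bytes(width: int, height: int, bpp: int) -> int:
    """Bytes needed to push one full frame to the display."""
    return width * height * bpp // 8


def required_bitrate(width: int, height: int, bpp: int, fps: int) -> int:
    """Raw pixel bits per second, ignoring command/addressing overhead."""
    return width * height * bpp * fps


if __name__ == "__main__":
    fb = frame_bytes(WIDTH, HEIGHT, BITS_PER_PIXEL)
    bps = required_bitrate(WIDTH, HEIGHT, BITS_PER_PIXEL, FRAME_RATE_HZ)
    print(f"{fb} bytes per frame")     # 115200 bytes per frame
    print(f"{bps / 1e6:.3f} Mbit/s")   # 55.296 Mbit/s
```

Under these assumptions, steady 60 Hz full-frame updates over a one-bit-wide serial link need a clock of at least roughly 55 MHz before any overhead; a lower color depth or partial-screen updates would bring that figure down.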

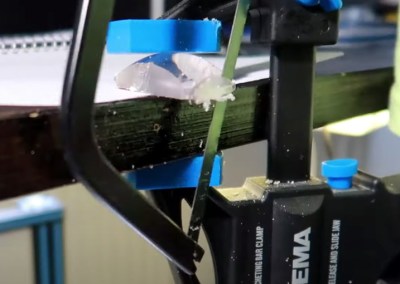

Structurally, the head-mounted unit is made up of nine 3D printed parts that clip onto a standard pair of glasses. [Teemu] says the parts will probably need to be tweaked to fit your specific frames, but the design is modular enough that it shouldn’t take too much effort. He’s using 0.6 mm PETG plastic for the front reflector, and the main lens was pulled from a cheap pair of VR goggles and manually cut down into a rectangle.

The evolution of the build has been documented in several videos, and it’s interesting to see how far the hardware has progressed in a relatively short time. The original version made [Teemu] look like he was cosplaying as a Borg drone from Star Trek, but the latest build appears to be far more practical. We still wouldn’t try to wear it on an airplane, but it would hardly look out of place at a hacker con.

It’s so subtle I didn’t even notice he was wearing anything!

I wonder when we will have hackable smart glasses that let me censor advertisements from my FOV.

What you are looking for exists already. It’s a bit clumsy, though: https://theartvertiser.com/

I’d rather have it in a daily usable form factor, but that is a nice start!

This is super cool. We definitely need people making hobbyist AR glasses from the ground up (like this project) so that they can be improved incrementally and not be the “guesswork” of big players like Facebook or Google.

A strong entry for the Hackaday Prize. Win or not, I hope he keeps working on this even after the contest.

(As a proof of concept, I might take the hand tracker and have it try to interpret the ASL (American Sign Language) alphabet. Not to any good purpose, but just to see if it can be done.)

(Or maybe recognize arcane hand motions to trigger supernatural sfx in the living room to impress visitors.)

+1

Seems to me that sign language is an ideal solution for most chat/text entry on such a heads-up device; it’s got to be easier than typing in mid-air with no haptic feedback. Though with so much of most sign languages being gestures that leave the camera’s FOV, and with the camera seeing the back of the hand rather than the fingertips most of the time, it could be very tricky.

Might need a ‘new’ language, or some AI smarts to know that what we can see of this motion means it’s one of this set, but the previous words mean only these two make sense, or something like that. I’m not at all an expert in sign language, but having watched it, it seems all the gestures are designed to be clear to an observer watching you from the front, which makes logical sense: you don’t need to tell yourself what you just said. They also seem to be wide enough gestures that with a wider-FOV camera, and maybe some depth sensing, you might be able to infer them correctly from the wrong angle.

Of course, this all becomes simple, arcane hand motions included, if you are viewing from an observer’s point of view. Now I actually want some RGB lighting, as that SFX stuff sounds like great fun. I already have a good surround sound system hooked up to a Pi 4 (among other inputs), so I just need the light effects to really make it awesome. I wonder how far you can reasonably levitate a polystyrene ball with transducers; that would be an awesome thing to trigger, a real drawing in mid-air. But I don’t think you could really do that over 8 ft floor-to-ceiling heights: it would be rather hard to hide away, and over such distances it would probably be nearly impossible to keep control.

You could probably have dedicated gesture input gloves with sensors and a wireless interface.

Very true. Though less convenient for the user, it is the method I think I’d want to control a HUD with myself. It would only do anything when in active mode; perhaps it could be as simple as the glasses only acting on the HUD after you make the activate gesture. You can also add some degree of haptic feedback, and that really makes an interface feel nicer to use. One of the few things that tempted me to pay the Apple tax some time ago was the ‘feel’ of their trackpads: they were so nice, and there was nothing else like them on the market at the time.

But if you are already doing hand position analysis for any other purpose, adding a dataset for sign language makes sense: it doesn’t cost you anything hardware-wise, and I wouldn’t expect the training to be that hard. Any words signed ambiguously from the glasses’ POV can be put through the usual predictive-text machinery to select the most likely response. That said, I don’t actually know anything about sign language beyond what I’ve seen overlaid on some TV programs, so maybe it is a much tougher task to add to the hand tracking than I think.

Look at Project North Star from Ultraleap…

This project is crying out for a Jetson Nano…

Won’t say you are wrong, but even stepping up the Pi family a little would help… the Zero is so anaemic for this application.

If it was my project, I would either go with a Pi 4 Compute Module mounted to the headset for compactness and low power, or go one step up from a Jetson and use a ‘real’ computer on a tether. I’d probably stick with something small, so ITX, maybe mini-ATX, so it’s still backpack/belt-mountable with a battery for the semi-portable experience…

I was thinking along the same lines. Start with the tech for the sake of usefulness, even if it’s clunky to start, then work towards reducing weight and improving portability. Possibly tie it to a supercomputer with higher capabilities over a tether, keeping the head portion itself as light as possible, which is good for extended use.

Imagine equipment inspections in future fission/fusion plants: you look around and are fed images of areas approaching questionable tolerance levels, as data is passed through AI to your HUD.

Sorry, but this will destroy your eyes ;(

Glasses built this way are not for human eyes.

How would this destroy your eyes exactly?

There is a fairly hefty lens to create some ‘distance’ to the projected image, so it should be at a comfortable enough focal length. Perhaps not ideal (I’ve not dug into exactly what lens is in the project yet, assuming it’s in the documentation at all), but clearly not in the impossible-to-use range.

So about the only harm this can do you is the same as any screen: being too bright, or being focused on for too long without a break.

Is it too late to vote?

Yeah.

Where can I get the designs for the 3D printed parts?

Where can I find the designs for the 3D printed components?