If you’ve looked into GPU-accelerated machine learning projects, you’re certainly familiar with NVIDIA’s CUDA architecture. It also follows that you’ve checked the prices online, and know how expensive it can be to get a high-performance video card that supports this particular brand of parallel programming.

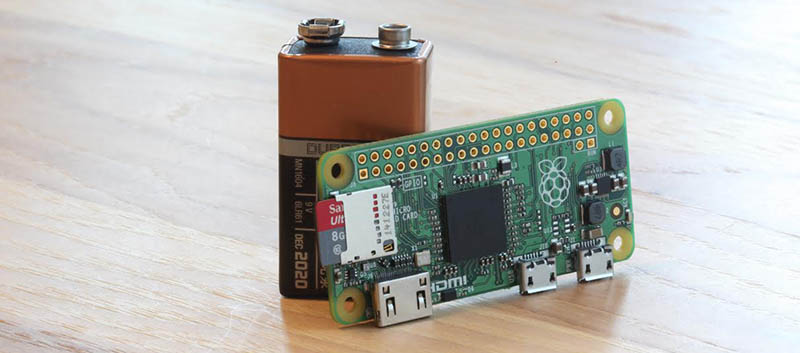

But what if you could run machine learning tasks on a GPU using nothing more exotic than OpenGL? That’s what [lnstadrum] has been working on for some time now, as it would allow devices as meager as the original Raspberry Pi Zero to run tasks like image classification far faster than they could using their CPU alone. The trick is to break down your computational task into something that can be performed using OpenGL shaders, which are generally meant to push video game graphics.
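To get a feel for what "breaking a task down into shaders" means, here's a minimal sketch in plain Python rather than GLSL. It is not Beatmup's code; the function names (`sample`, `fragment`, `render`) are made up for illustration. The idea it shows is that a fragment shader is a small program run once per output pixel, with read-only access to an input texture, and a 3x3 convolution followed by a ReLU fits that mold nicely.

```python
# Sketch only: emulates the fragment-shader execution model on the CPU.
# All names here are hypothetical and not taken from Beatmup or OpenGL.

def sample(texture, x, y):
    """Read one texel, clamping coordinates to the edge of the texture."""
    height = len(texture)
    width = len(texture[0])
    x = min(max(x, 0), width - 1)
    y = min(max(y, 0), height - 1)
    return texture[y][x]


def fragment(texture, kernel, x, y):
    """Per-pixel program: weighted sum of the 3x3 neighborhood, then ReLU."""
    acc = 0.0
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            acc += kernel[dy + 1][dx + 1] * sample(texture, x + dx, y + dy)
    return max(acc, 0.0)


def render(texture, kernel):
    """Stand-in for a draw call: run the fragment program for every output pixel."""
    height = len(texture)
    width = len(texture[0])
    return [[fragment(texture, kernel, x, y) for x in range(width)]
            for y in range(height)]


if __name__ == "__main__":
    image = [[1, 2], [3, 4]]
    identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
    print(render(image, identity))
```

Since no output pixel depends on any other output pixel, a GPU is free to compute all of them in parallel, which is where the speedup over the CPU would come from.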

[lnstadrum] explains that OpenGL releases from the last decade or so actually include so-called compute shaders specifically for running arbitrary code. But unfortunately that’s not an option on boards like the Pi Zero, which only meets the OpenGL for Embedded Systems (GLES) 2.0 standard from 2007.

Constructing the neural net in such a way that it would be compatible with these more constrained platforms was much more difficult, but the end result has far more interesting applications to show for it. During tests, both the Raspberry Pi Zero and several older Android smartphones were able to run a pre-trained image classification model at a respectable rate.
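One example of the kind of constraint involved: core GLES 2.0 only guarantees 8-bit-per-channel textures, with floating-point textures left to optional extensions. The article doesn't say exactly how Beatmup deals with this, so treat the following as an assumption-laden sketch. It packs four feature-map channels into a single RGBA texel by quantizing each value in an assumed range of [0, 1] to one byte; the helper names are hypothetical.

```python
# Sketch only: 8-bit quantization of activations into RGBA texels.
# The [0, 1] activation range and all function names are assumptions.

def quantize(value, lo=0.0, hi=1.0):
    """Map a float to an integer in 0..255, clamping values outside [lo, hi]."""
    clamped = min(max(value, lo), hi)
    return int(round((clamped - lo) / (hi - lo) * 255))


def dequantize(byte, lo=0.0, hi=1.0):
    """Map an integer in 0..255 back to a float in [lo, hi]."""
    return lo + (byte / 255) * (hi - lo)


def pack_texel(channels):
    """Pack exactly four channel values into one (R, G, B, A) tuple of bytes."""
    if len(channels) != 4:
        raise ValueError("an RGBA texel holds exactly four channels")
    return tuple(quantize(c) for c in channels)


def unpack_texel(texel):
    """Recover approximate channel values from an (R, G, B, A) tuple."""
    return [dequantize(b) for b in texel]


if __name__ == "__main__":
    texel = pack_texel([0.0, 0.25, 0.75, 1.0])
    print(texel, unpack_texel(texel))
```

The round trip loses precision (at most half a quantization step per value) and clips anything outside the chosen range, so a network meant to run this way would presumably need activations that stay bounded.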

This isn’t just some thought experiment: [lnstadrum] has released an image processing framework called Beatmup using these concepts that you can play around with right now. The C++ library has Java and Python bindings and, according to the documentation, should run on pretty much anything. Included in the framework is a simple tool called X2 which can perform AI image upscaling on everything from your laptop’s integrated video card to the Raspberry Pi, making it a great way to check out this fascinating application of machine learning.

Truth be told, we’re a bit behind the curve on this one, as Beatmup made its first public release back in April of this year. It might have flown under the radar until now, but we think there’s a lot of potential for this project, and hope to see more of it once word gets out about the impressive results it can wring out of even the lowliest hardware.

[Thanks to Ishan for the tip.]

PyTorch, one of the two most popular machine learning toolkits, is slowly picking up support for running on top of the Vulkan graphics API. The support is intended for running machine learning models on Android smartphones, but since the Pi now has a Vulkan driver I don’t see why it wouldn’t eventually work there too.

It would be amazing if this eventually matured, as Vulkan is quickly becoming ubiquitous. When every GPU driver eventually supports it, no special or unusual hardware will be required any longer.

Is Vulkan support planned for more than the Pi 4, Pi 400, and CM4?

Vulkan support in PyTorch works on any GPU with a Vulkan driver (though it isn’t necessarily bug-free).

Not just smartphones. Not just the pi. Not just AMD, or nvidia, or intel.

I could be wrong, but I think the point being made was that on the Pi, Vulkan is currently only available on the Pi 4.

The difference has little to do with CUDA vs OpenGL (they’re nothing but software interfaces) and a lot more to do with how powerful the GPU itself is. Even if you implemented a CUDA driver for the Pi, it’s still one of the weakest GPUs on earth.

Hmm. The comment I replied to disappeared.

It got deleted for some reason. I’m as confused by it as you are. I didn’t mean to say that it was a CUDA vs OpenGL difference either. I meant that the article here is a bit confusing because it refers to both the CUDA GPUs and the built-in Pi GPU simply as “GPU”. Yes, they’re both GPUs, but not quite the same thing, and the change in topic led me to believe they were connecting PCI-e cards to Pis. It was an assumption on my part perhaps, but I didn’t get there on my own. Do authors delete comments they don’t like on their own posts, or is that a task reserved for a specific editor? What on earth is going on over at Hackaday?

The two GPUs are mostly the same, though there do tend to be some architectural differences between the style used for mobile GPUs and the style for desktop GPUs. Interestingly, the architecture of the M1 is closer to a desktop GPU than a mobile one. https://rosenzweig.io/blog/asahi-gpu-part-3.html

I suppose on the Pi 4 you could attach a large CUDA-capable GPU (and many people have tried). I’m not 100% sure if anyone has gotten past the BAR address space mapping issue yet? Maybe with a modified kernel/device tree?

If comments aren’t constructive or useful, they will get deleted. What sounds like a comment about you not comprehending the meaning of the post certainly doesn’t sound worth keeping to me.

If I need to scale something tiny to be gigantic I usually run it through hqx4 a few times

(ref: https://en.wikipedia.org/wiki/Hqx )

It does not use AI, but does an insanely impressive job.

Nice! I’ll have to remember that one for later!

It is unfortunate that these AI frameworks were based on a proprietary platform (CUDA) rather than OpenCL.

Research grants, no doubt…🙄

CUDA has been king far, far longer than any of these AI frameworks have existed. The current wave of GPU-trained neural networks only dates back to about 2010.

As for how CUDA beat out OpenCL to become the de facto standard, that’s another story.

New Something Pi’s with 0.01 cent used Intel integrated gpu chips incoming…🤣 Just the socket!

I have a Google Coral USB Accelerator sitting on my desk waiting to be played with, which is another cheap & accessible avenue.

https://coral.ai/products/accelerator/