Join us on Wednesday, April 27 at noon Pacific for the Software Defined Instrumentation Hack Chat with Ben Nizette!

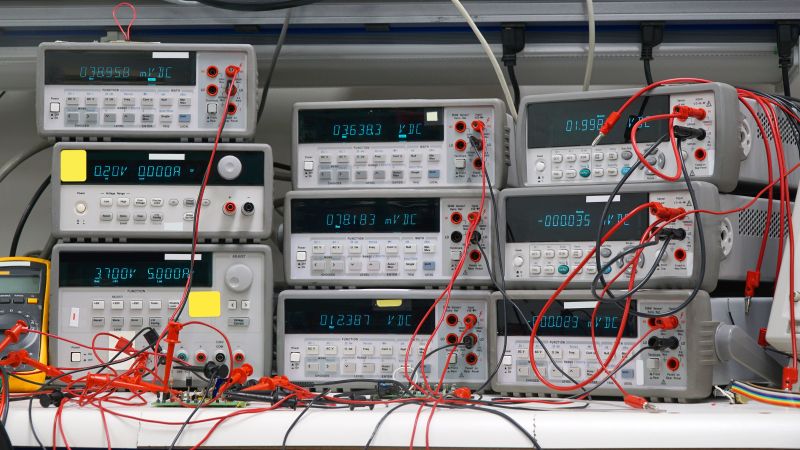

Imagine, if you will, the perfect electronics lab. Exactly how it looks in your mind will depend a lot upon personal preferences and brand loyalty, but chances are good it’ll be stocked to the gills with at least one of every conceivable type of high-precision, laboratory-grade instrument you can think of. It’ll have oscilloscopes with ridiculously high bandwidths, multimeters with digits galore, logic analyzers, waveform generators, programmable power supplies, spectrum analyzers, pretty much anything and everything that can make chasing down problems and developing new circuits easier.

Alas, the dream of a lab like this crashes hard into realities like being able to afford so many instruments and actually finding a place to put them all. And so while we may covet the wall of instruments that people like Marco Reps or Kerry Wong enjoy, most of us settle for a small but targeted suite of instruments, tailored to our particular needs and budgets.

It doesn’t necessarily need to be that way, though, and with software-defined instrumentation, you can pack a lab full of virtual instruments into a single small box. Software-defined instrumentation has the potential to make an engineering lab portable enough for field-service teams, flexible enough for tactical engineering projects, and affordable for students and hobbyists alike.

Ben Nizette is Product Manager at Liquid Instruments, the leader in precision software-defined instrumentation. He’s the engineer behind Moku:Go, the company’s first consumer product, which squeezes eleven instruments into one slim, easily transported, affordable package. He’s been in the thick of software-defined instrumentation, and he’ll drop by the Hack Chat to talk about the pros and cons of the virtual engineering lab, what it means for engineering education, and how we as hobbyists can put it to work on our benches.

Our Hack Chats are live community events in the Hackaday.io Hack Chat group messaging. This week we’ll be sitting down on Wednesday, April 27 at 12:00 PM Pacific time. If time zones have you tied up, we have a handy time zone converter.

Having worked with neither an oscilloscope nor a logic analyzer, I always failed to understand why they need to be two separate devices.

A scope is optimized for looking at a few analog signals at a time, while a logic analyzer is optimized for looking at a lot of digital signals. Because logic analyzers distill the inputs down to 2 levels, they can capture much deeper traces (sometimes infinitely long with PC-USB connected LAs) than a scope. But a scope will tell you the truth about a signal whereas a logic analyzer hides signal integrity problems.
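To make the point above concrete, here is a minimal Python sketch of what "distilling the inputs down to 2 levels" does to a capture. The 1.65 V threshold (half of an assumed 3.3 V rail), the sample values, and the run-length storage scheme are all illustrative assumptions, not a description of how any particular analyzer works.

```python
def to_logic(samples, threshold=1.65):
    """Reduce analog voltages to 1-bit logic levels (threshold is an assumption)."""
    return [1 if v >= threshold else 0 for v in samples]


def run_length_encode(bits):
    """Store (level, run_length) pairs instead of every individual sample."""
    runs = []
    for b in bits:
        if runs and runs[-1][0] == b:
            runs[-1][1] += 1
        else:
            runs.append([b, 1])
    return [tuple(r) for r in runs]


# Made-up samples: a clean pulse, and the same pulse with overshoot and ringing.
clean = [0.0, 0.0, 3.3, 3.3, 3.3, 0.0, 0.0, 0.0]
ringing = [0.2, -0.3, 4.1, 2.6, 3.0, 0.9, -0.4, 0.1]

clean_bits = to_logic(clean)
ringing_bits = to_logic(ringing)

# Both signals collapse to the same digital trace, so the ringing is invisible.
print(clean_bits == ringing_bits)
# The digital trace compresses to a handful of runs, which is why it can be deep.
print(run_length_encode(clean_bits))
```

Once thresholded, the two traces are indistinguishable, which is the signal integrity information a scope would have kept. The payoff is that long idle stretches shrink to a single run.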

It used to be that a logic analyzer had hundreds of inputs, such as the HP/Agilent 16xxx series, usually connected to address/data buses and control signals for monitoring the state of a processor or bus.

Now that most buses are serial, a scope with protocol decoding is often enough. I won’t break out my logic analyzer for basic I2C or SPI debugging when I can use my Rigol. But if I need to capture a long series of I2C transactions then I’d use the logic analyzer which can easily convert hours of I2C traffic into a log file.

The logic analyzer I use cost like $15 and allows me to add in multiple layers of custom decoder, which a scope would not permit: you end up spending like $1200 just to get I2C decode enabled on my scope at work, and all that gets you is chunking out address, payload, and ack. Sigrok will let me decode the payload itself, if I want to: if I’m sniffing a thermal camera I can write a decoder that’ll break out all the intensity data as rows and columns and separately break out the hardware-generated max and min temperature extents and their row/column locations included in the entire packet.
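As a rough illustration of that second decoding layer, the sketch below unpacks an already-captured payload into rows, columns, and max/min extents. It is plain Python rather than a real sigrok protocol decoder, and the packet layout (a rows byte, a columns byte, the pixel bytes, then six trailer bytes) is entirely hypothetical; `ThermalFrame` and `decode_frame` are made-up names.

```python
from dataclasses import dataclass


@dataclass
class ThermalFrame:
    pixels: list      # list of rows, each a list of intensity values
    max_value: int
    max_pos: tuple    # (row, col) of the hottest pixel
    min_value: int
    min_pos: tuple    # (row, col) of the coldest pixel


def decode_frame(payload: bytes) -> ThermalFrame:
    """Decode a HYPOTHETICAL layout: rows, cols, pixels, then max/min trailer."""
    rows, cols = payload[0], payload[1]
    n = rows * cols
    if len(payload) != 2 + n + 6:
        raise ValueError("unexpected payload length")
    data = payload[2:2 + n]
    pixels = [list(data[r * cols:(r + 1) * cols]) for r in range(rows)]
    # Trailer: max value, max row, max col, min value, min row, min col.
    max_v, max_r, max_c, min_v, min_r, min_c = payload[2 + n:]
    return ThermalFrame(pixels, max_v, (max_r, max_c), min_v, (min_r, min_c))


# A made-up 2x3 frame followed by its trailer.
payload = bytes([2, 3, 10, 20, 30, 40, 50, 60, 60, 1, 2, 10, 0, 0])
frame = decode_frame(payload)
print(frame.pixels)
print(frame.max_value, frame.max_pos, frame.min_value, frame.min_pos)
```

A real device would need its own field sizes and byte order taken from the datasheet or from reverse engineering the traffic.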

A proper Mixed Signal Oscilloscope (MSO) can actually do both. Usually there is an additional piece of hardware that has to be connected to the scope to facilitate it. My Siglent scope is an MSO and I spent the money on the additional device to allow me to do digital.

Software defined instruments are like cellphones. They can do 10-20 different things, most of them poorly, and sacrifice their core features to serve some niche needs.

I’ll take a good Fluke/Agilent/HP/Teradyne over any of these.

For the home user, might be “good enough”.

In most real-life cases, “good enough” is the main goal, and often enough it is less achievable than “perfect”.

Even in a couple of professional scenarios, good enough might be the way to go. As someone who works in R&D, let me tell you that not everything in a company is R&D. You might need service technicians or someone from quality control to be able to make measurements. In that case a cheap device that is good enough and also highly automatable is way better than any tricked out, high bandwidth scope that costs a fortune.

Ah, a single instrument, programmable, that can do many things, none of them very well, but just “good enough”. Reminds me of a cellphone.

A so-so, camera, so-so gps, so-so microphone, so-so sensors, etc.

And of course, it does all of these mediocre things, at the cost of sacrificing its “core” function, even doing that poorly.

Additionally, software defined instrumentation is reliant on software – ask Insteon owners about that one (yes, I realize that it’s not web-connected or some subscription model…yet)

From the website:

*This summer, Multi-instrument Mode and Moku Cloud Compile will be available on Moku:Go, enabling users to use multiple instruments simultaneously and even develop custom signal processing to deploy on Moku:Go hardware.*

Anyone get a whiff of “real soon now” ?

In any event, best of luck to them and I hope it all works without the hardware of the company turning into an embarrassing mess….

Heh, dude, upgrade your cellphone more than once a decade. :-D

Meaning it’s not a great analogy since phone cams blew through about 8 megapixel near on a decade ago and got better than most mid-end point and shoots, they’re not DSLRs of course, but do what most of the market wants.

Yep, 10-20Mp of blurry, noisy, low sensitivity, fingerprints on the plastic lens megapixels. Using algorithms to take “good enough” pictures, while burning through the battery. Reminds me of those cheap plastic cameras with “enhanced” resolutions, some of which are still being sold.

crappy software, paired with so-so hardware, with so-so sensors.

My mid end point and shoot has a real glass lens, not plastic, a real zoom, not “software enhanced”, real aperture control not “software defined”, real EV, and ISO control, not “software enhanced” , and a much larger, much better, much more sensitive sensor.

That’s just like saying your cheap calculator app is as good as an HP48, or even a Casio EL-946G.

My cellphone has 4 cameras including a 108Mp main camera and a 12Mp telephoto, all capable of astrophotography. The GPS, accelerometer, gyro, magnetometer and pressure sensor are as sensitive or more sensitive than those used in autonomous vehicles. The 8-core CPU clocked up to 3.09GHz, with 12GB of RAM and the Adreno 640 GPU, allows me to game on high settings at 1440p.

I think your analogy fails in every way.

108Mp “software enhanced”, with a high noise sensor. It’s not really 108Mp, more like 3x36Mp, noisy, low quality sensors, with low quality plastic lenses, with a lot of software to compensate for it.

the sensors are nowhere near the quality of autonomous vehicles, they are not safety/life-critical certified, more like factory seconds at best from the autonomous car factories.

8 Core ARM CPU, with a so-so FPU, a so-so GPU, and 12GB of slow, LPDDR RAM. The GPU allows you to play bad games, on a small tiny screen, that are not too CPU heavy.

“capable of astrophotography”, if you attach it to a telescope. My Raspberry Pi HQ Camera is also capable of same, and probably has a better lens, a bigger sensor, and less noise.

A cheap plastic “telephoto” lens. Why do you think they have so many cameras? They are cheap, it’s more like 7 cameras, all very cheap ones.

3x36Mp cameras “software enhanced” R,G,B

4 other cheap cameras “software enhanced”

My scope can be used to play pong, but it makes a bad game system. Just because you can, doesn’t mean you should.

Look at the sensor size and pixel density of a phone sensor compared to even a mid/low end point-and-shoot, there is no comparison. Algorithms can produce “good enough” photos from all that poor quality noise, but why?

Smartphones are also very optimized for convenience. For test equipment, I would not order priorities the same way as something that needs fitting in my pocket.

To me the Moku:Go looks like a nice and shiny but closed (proprietary) system. I would definitely prefer Red Pitaya (https://redpitaya.com/) where at least the software is open source and where I do not need some company cloud for further features.

I don’t want to spend an hour on some advertising chat of a company. Sorry.