Most computer operating systems suffer from some version of “DLL hell.” That is a decidedly Windows term, but the concept applies across the board. Consider embedded development, which usually takes a few specialized tools. You write your embedded system code, ship it off, and forget about it for a few years. Then the end user wants a change. Too bad the compiler you used requires some library that has since changed, so it no longer works. Oh, and the device programmer needs an older version of the USB library. The Python build tools use Python 2, but your system has moved on. If the tools you need aren’t on the computer anymore, you may have trouble finding the install media and getting them to work. Worse still if you don’t even have the right kind of computer anymore.

One way to address this is to encapsulate each of your development projects in a virtual machine. The saved virtual machine includes an operating system and all the right libraries. It is basically a snapshot of the project as it was, and you can reconstitute it at any time on nearly any computer.

In theory, that’s great, but it is a lot of work and a lot of storage. You need to install an operating system and all the tools. Sure, you can get an appliance image, but if you work on many projects, you will have a bunch of copies of the very same thing cluttering things up. You’ll also need to keep all those copies up to date if you ever need to update things. Granted, that is probably what you are trying to avoid, but sometimes you must.

Docker is a bit lighter weight than a virtual machine. You still run your system’s normal kernel, but essentially you can have a virtual environment running in an instant on top of that kernel. What’s more, Docker only stores the differences between things. So if you have ten copies of an operating system, you’ll only store it once plus small differences for each instance.
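
To put rough numbers on that, here is a back-of-the-envelope sketch. The sizes are made-up figures for illustration, not measurements, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Illustrative arithmetic only: sizes are in MB and entirely hypothetical.

# Storage if every environment is a full, independent copy (VM-style).
full_copies_mb() {
    local base diff count
    base=$1 diff=$2 count=$3
    echo $(( count * (base + diff) ))
}

# Storage if the base is stored once and each environment adds only its diff.
shared_base_mb() {
    local base diff count
    base=$1 diff=$2 count=$3
    echo $(( base + count * diff ))
}

# Ten environments on a 1000 MB base, each with 50 MB of changes.
echo "full copies: $(full_copies_mb 1000 50 10) MB"   # 10500
echo "shared base: $(shared_base_mb 1000 50 10) MB"   # 1500
```

The gap widens as you add environments, since only the small per-environment differences grow with the count.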

The downside is that Docker is a bit tough to configure. You need to map storage and set up networking, among other things. I recently ran into a project called Dock that tries to make the common cases easier so you can quickly spin up a Docker instance to do some work without any real configuration. I made a few minor changes to it and forked the project, but, for now, the origin has synced up with my fork so you can stick with the original link.

Documentation

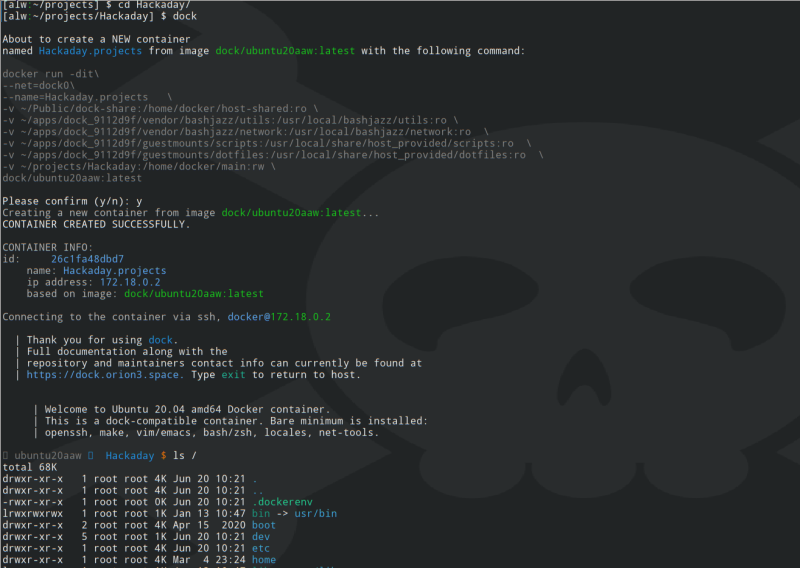

The documentation on the GitHub pages is a bit sparse, but the author has a good page of instructions and videos. On the other hand, it is very easy to get started. Create a directory and go into it (or go into an existing directory). Run “dock” and you’ll get a spun-up Docker container named after the directory. The directory itself will be mounted inside the container and you’ll have an ssh connection to the container.
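
For a sense of what Dock is automating, the sketch below prints (but does not run) a plain docker run command that does roughly the same three things: it names the container after the directory, mounts the directory, and exposes an SSH port. The image name, the host port 2222, and the /work mount point are assumptions for illustration; Dock’s actual invocation may well differ.

```shell
#!/usr/bin/env bash
# Print (not run) a plain "docker run" command roughly equivalent to the
# behaviour described above. Illustrative only; not taken from Dock's source.
print_run_command() {
    local dir image name
    dir=$1 image=$2
    name=$(basename "$dir")          # container is named after the directory
    printf 'docker run --detach --name %s --volume %s:/work --publish 2222:22 %s\n' \
        "$name" "$dir" "$image"
}

# Hypothetical image that would need to ship an SSH server.
print_run_command "$PWD" example/ssh-image
```

After running such a command by hand, you would still have to connect with ssh yourself, which is the sort of busywork the tool hides.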

By default, that container will have some nice tools in it, but I wanted different tools. No problem; you can install what you want. You can also commit an image set up how you like and name it in the configuration files as the default. You can also name a specific image file on the command line if you like. That means it is possible to have multiple setups for new machines. You might say you want this directory to have an image configured for Linux development and another one for ARM development, for example. Finally, you can also name the container if you don’t want it tied to the current directory.
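
In plain Docker terms, saving a customized container as a reusable image is a docker commit. Here is a minimal sketch; the function name, container name, and image tag are hypothetical, and setting DOCKER=echo just previews the command instead of running it.

```shell
#!/usr/bin/env bash
# Sketch: save a customized container as a reusable image with "docker commit".
# DOCKER can be overridden (for example DOCKER=echo) to preview the command.
save_as_image() {
    local container image
    container=$1 image=$2
    "${DOCKER:-docker}" commit "$container" "$image"
}

# Example with hypothetical names: preview instead of executing.
DOCKER=echo save_as_image Hackaday my-arm-dev:latest
```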

Images

This requires some special Docker images that the system knows how to install automatically. There are setups for Ubuntu, Python, Perl, Ruby, Rust, and some network and database development environments. Of course, you can customize any of these and commit them to a new image that you can use, as long as you don’t break the things the tool depends on (an SSH server, for example).

If you want to change the default image, you can do that in ~/.dockrc. That file also contains a prefix that the system removes from the container names. That way, a directory named Hackaday won’t wind up with a container named Hackaday.alw.home, but will simply be Hackaday. For example, since I keep all my work in /home/alw/projects, I should set that as the prefix so the word projects doesn’t appear in each container name. As you can see in the accompanying screenshot, I haven’t, so the container winds up as Hackaday.projects.
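
The naming rule is easy to mimic. The function below is a sketch of the idea, not code from Dock: it strips a prefix from a directory path, reverses the remaining components, and joins them with dots, which reproduces the names mentioned above. The function name and the exact algorithm are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not taken from Dock's source.
# Usage: container_name DIRECTORY IGNORED_PREFIX
container_name() {
    local dir prefix rel name part
    dir=$1 prefix=$2
    rel=${dir#"$prefix"}     # drop the ignored prefix if the path starts with it
    rel=${rel#/}             # drop a leading slash
    name=""
    local IFS=/              # split the remaining path on slashes
    for part in $rel; do
        [ -n "$part" ] || continue
        name=${part}${name:+.$name}   # prepend, so the deepest directory comes first
    done
    printf '%s\n' "$name"
}

container_name /home/alw/projects/Hackaday /home/alw            # Hackaday.projects
container_name /home/alw/projects/Hackaday /home/alw/projects   # Hackaday
```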

Options and Aliases

You can see the options available on the help page. You can select a user, mount additional storage volumes, set a few container options, and more. I haven’t tried it, but it looks like there’s also a $DEFAULT_MOUNT_OPTIONS variable to add other directories to all containers.

My fork adds a few extra options that aren’t absolutely necessary. For one, -h will give you a short help screen, while -U will give you a longer help screen. In addition, unknown options trigger a help message. I also added a -I option to write out a source line suitable for adding to your shell profile to get the optional aliases.

These optional aliases are useful for you, but Dock doesn’t use them so you don’t have to install them. These do things like list docker images or make a commit without having to remember the full Docker syntax. Of course, you can still use regular Docker commands, if you prefer.

Try It!

To start, you need to install Docker. Usually, by default, only root can use Docker. Some setups have a particular group you can join if you want to use it from your own user ID. That’s easy to set up if you like. For example:

sudo usermod -aG docker $(whoami)

newgrp docker

sudo systemctl unmask docker.service

sudo systemctl unmask docker.socket

sudo systemctl start docker.service
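
If you are not sure the group change took effect, a quick check like the following can help. It is only a sketch: the helper name is hypothetical, and it assumes your setup uses a group called docker.

```shell
#!/usr/bin/env bash
# Sketch: check whether a space-separated group list contains a given group.
has_group() {
    local wanted list g
    wanted=$1 list=$2
    for g in $list; do
        [ "$g" = "$wanted" ] && return 0
    done
    return 1
}

# "id -nG" prints the names of the groups the current session belongs to.
if has_group docker "$(id -nG)"; then
    echo "current session is in the docker group"
else
    echo "current session is NOT in the docker group (try a new login or newgrp)"
fi
```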

From there, follow the setup on the project page, and make sure to edit your ~/.dockrc file. You need to make sure the DOCK_PATH, IGNORED_PREFIX_PATH, and DEFAULT_IMAGE_NAME variables are set correctly, among other things.
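
As a rough idea of what that file might look like, here is a sketch. Only the three variable names come from the project; the values are placeholders, and the plain shell-assignment syntax is an assumption based on Dock being a Bash script. Check the project’s own sample file for the real format.

```shell
# ~/.dockrc (sketch; every value below is a placeholder, not a Dock default)
DOCK_PATH="$HOME/dock"                    # assumed: where the Dock checkout lives
IGNORED_PREFIX_PATH="$HOME/projects"      # prefix stripped from container names
DEFAULT_IMAGE_NAME="example/dev-image"    # hypothetical image name
```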

Once set up, create a test directory, type dock and enjoy your new sort-of virtual machine. If you’ve set up the aliases, use dc to show the containers you have. You can use dcs or dcr to shut down or remove a “virtual machine.” If you want to save the current container as an image, try dcom to commit the container.
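
If you skip the aliases, the underlying Docker commands for listing, stopping, and removing containers are short enough. The wrappers below are a sketch with hypothetical names; they are not Dock’s alias definitions. Setting DOCKER=echo previews each command without touching Docker.

```shell
#!/usr/bin/env bash
# Plain Docker commands for the operations mentioned above. The function names
# are hypothetical and are not Dock's alias definitions.
# Set DOCKER=echo to preview the commands without running them.
list_containers()  { "${DOCKER:-docker}" ps --all; }
stop_container()   { "${DOCKER:-docker}" stop "$1"; }
remove_container() { "${DOCKER:-docker}" rm "$1"; }
```

For example, stop_container Hackaday followed by remove_container Hackaday would shut down and delete a container with that name.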

Sometimes you want to enter the fake machine as root. You can use dock-r as a shorthand for dock -u root assuming you installed the aliases.
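
If you would rather not install the whole alias file, that one shorthand is easy to define yourself. This is a sketch; Dock’s own alias file may define it differently.

```shell
# Shorthand for entering the container as root, as described above.
# Dock's own alias file may define it differently.
alias dock-r='dock -u root'
```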

It is hard to imagine how this could be much easier. Since the whole thing is written as a Bash script, it’s easy to add options (as I did). It looks like it would be pretty easy to adapt existing Docker images to be compatible with Dock, too. Don’t forget that you can commit a container to use it as a template for future containers.

If you want more background on Docker, Ben James has a good write-up. You can even use Docker to simplify retrocomputing.

Let’s keep hackaday docker-free, shall we?

Be funny if it was all a docker-instance, making it easy to roll back any forceful changes.

https://thehackernews.com/2022/06/over-million-wordpress-sites-forcibly.html

Agreed!

I would rather not see Hackaday teach kids how to smoke cigarettes, either.

I’ll probably get some eye rolls but NixOS provides a surprisingly good solution to this problem.

Inside your project folder, you have a “shell.nix” file with build environment configuration – library packages, tool packages, environment variables, and any other system setting imaginable. You run “nix-shell” in that directory, and NixOS gives you a temporary shell with exactly the dev environment you requested. When you are done, you close the console and your system isn’t any more cluttered than it was before.

If you want tools permanently installed on your development machine, put them in your system configuration file. New identical machines can be spun up by feeding them the same one global config file.

The downsides are:

– Syntax is funky

– It’s a bit of a pain if you need something custom

-Requesting a specific package version is currently a bit difficult, but this will change soon

“Most computer operating systems suffer from some version of “DLL hell” — a decidedly Windows term, but the concept applies across the board.” and “One way to address this is to encapsulate all of your development projects in a virtual machine. Then you can save the virtual machine and it includes an operating system, all the right libraries, and basically is a snapshot of how the project was that you can reconstitute at any time and on nearly any computer.”

I read an article a while back arguing that this is basically an inelegant solution to the real problem. That said, it’s a useful way to get tricky, hard-to-run software working.

It has become somewhat common on critical software where you need to be able to rebuild and debug in the EXACT same environment you did originally. Docker is probably a harder sell in that context because at the bottom you still have the “new” machine which changes but at least most of the stuff you are using is “original.” Again, probably depends on what you are doing exactly.

I can see devops needing something like that. Packaging is still a problem looking for a solution.

https://ludocode.com/blog/flatpak-is-not-the-future

Meh – I eagerly clicked, hoping for an article about how to make a Linux laptop behave nicely, all the time, when docking and undocking…..

So I wasn’t the only one? Lol

Community, sorry to say this, and please do not take it personally. I really love this project, but let me describe some of the problems I find so often around the net, especially in projects with beautiful goals like this one, which tries to make something more straightforward:

This tool is already painful at the point where you wrote this:

1 – install Docker

2 – Go check how to install docker

3 – Realise all this tool is for linux and continue:

3.1 – you found out about the missing dependencies

3.2 – Install bash >= 4.2

3.3 – install git

3.4 – install sed

3.5 – install awk

3.6 – install ssh

4 – sudo usermod -aG docker $(whoami)

5 – newgrp docker

6 – sudo systemctl unmask docker.service

7 – sudo systemctl unmask docker.socket

8 – sudo systemctl start docker.service

9 – From there, follow the setup on the project page,

9 – Edit your ~/.dockrc file and make sure the below things are correct somehow:

9.1 – DOCK_PATH is set correctly.

9.2 – IGNORED_PREFIX_PATH is set correctly.

9.3 – DEFAULT_IMAGE_NAME is set correctly.

9.4 – Among other things (WHAT OTHER THINGS?)

10- Once set up, create a test directory, (HOW?)

11- type dock and enjoy your new sort-of virtual machine. (SORT OF?)

11.1 – If you’ve set up the aliases, use dc to show the containers you have. (THAT I HAD? BEFOREHAND?)

12 – You can use dcs or dcr to shut down or remove a “virtual machine.” (NO EXPLANATION WHAT IS LOCAL OR REMOTE BEFORE THIS POINT)

13 – If you want to save the current container as an image, try dcom to commit the container.

14 – Find the link to github to discover the explanation there is even more shallow than here.

15 – Get some error and google around

16 – Learn a lot more about Docker, realise how much it sucks and how wonderful this project could be

17 – Stuck to Docker cause to use this you have need to learn so much docker that now this became useless.

What would make this cool:

1 – install Dockawesometool (please name the tool something distinctive to find the tool itself, problems, its repo, etc)

2 – you will be asked to accept some other installs and environment variables; answer yes to all (if you are an advanced user and want to handle this yourself, use some flags during installation)

3 – type in a console Dockawesometool

3.1 – You will be asked for an image/container path to be opened OR if you want to create a new one.

3.2 – Follow the questions and you are done

Extra:

4 – Advanced users can use Dockawesometool with arguments like --containerpath, --image, --createwizard, etc.

Bonus:

We have a step by step hello world to create a container, or use an existing image in “link”.

Benefit:

We have made what was initially meant to be a hassle-free tool really hassle-free, without requiring existing knowledge of Docker, system dependencies, or anything else.

Well, my point wasn’t to do a step-by-step since the official instructions are pretty good. I presume that most people who want to use this will already have Docker setup, though. And if you don’t know how to create a new directory, this is probably not the right tool for you yet. Granted, there is a little setup, but at the end, 90% of the time, you enter “dock” and that’s it. It does the right thing. That’s pretty easy.

Of course, you are free to do what I did: fork it and fix it or document it all you like. It sounds like you have a lot of interesting ideas, so that would be great!

a few years ago i tried to create a tool that would allow you to list/search linuxserver.io list of docker images, scrape dockerfile configs direct from that projects github. even that got too unwieldy due to inconsistent setups.

Or you could simply not update your computer, so all the tools and DLLs stay the same and you can still change your custom code.

What’s the difference between running in Docker with an obsolete O.S. or just not updating at all? I suppose if the O.S. within your Docker image has a security flaw and running your program creates an opportunity for an infection, and you get infected, then you would still need to redo your software to run with a new “secure” O.S.

And we assume that since your program is running in its own Docker Container being infected does not harm other programs running on your system.

I think that the real benefit of using Docker is that your program contains an O.S. image that allows it to run on other systems that no longer have to have the same O.S. You still need to maintain it. You still should be updating security flaws in the O.S. you chose. But you may expect it to run almost anywhere.

Docker is a poor solution to the problem. However, it’s fantastic if you are a closed-source software company looking to get around statically linking, which would invoke the GPL requirements. The people using this outside the context of closed-source software have probably used it before with closed-source software or don’t understand the value of building packages.

i use containerization to run my web browser. i love that inside of the container, the browser’s dependencies are the only ones that matter. and outside of it, they don’t matter at all. every few months i reinstall the browser and all of its dependencies in a new container.

however, i am going to just blanket caution against docker, *especially* for newbies.

the first thing is that docker is decently hard to install. it’s a fast enough moving target that the documentation you’ll find isn’t really up to date. and there are a million choices you can make, but to understand them would be a major work. plus, it has a *lot* of dependencies on the kernel, which are unfortunately poorly-documented as well. maybe people who use their default distro kernel don’t have this problem? it was a real pain for me.

the second thing is that docker is severely insecure. it’s *possible* to do a good job on isolating what happens inside the container from the rest of the system, but it’s also possible to leave it wide open. by default, the container root account is the same as the host root account and the only separation is it’s living in a different namespace (it can’t necessarily see your /dev, etc). anyone who has access to run the ‘docker’ commandline utility can get root on the host in an instant. it’s not a bad design, per se, but it’s a gun that’s so heavy only superman is strong enough to aim anywhere other than his own feet.

fwiw, i use lxc. it is not as well-traveled a path but it’s *much* simpler, much smaller, much easier to comprehend, has much smaller kernel dependencies, and i was able to run it entirely within a user account (i.e., without root at all). when i had a problem with its network configuration, it was simple enough i was able to diagnose it using normal approaches. it has a hack where a normal host userid can have control over a big namespace of container uids, and i’m a little uneasy with some of that magic but i did get it all working for my purposes. it was overall comparable difficulty to docker but i like the result a lot better (no root!).

ymmv. but if you are a person installing docker from a tutorial, the odds of you understanding the security implications of your choices are almost nil. i was rather astonished, tbh. containers definitely have their place but they’re no silver bullet and i’m kind of disappointed to see the way they’re being overused these days. but, you know, different strokes.

I’ve used Docker a small amount and really wanted to like it.

But the performance hit on my i7 Win laptop was excruciating, it runs like a three-legged greyhound. Sometimes it just hung.

If I know I’m not going to be using it for a time I disable the Docker services, console etc. and the machine runs acceptably again.

If an application needs a library that can not be installed in the usual place because there is an incompatible library with the same name, all you need is LD_LIBRARY_PATH. These cases are rare btw. because most projects change the soname when a library becomes incompatible.

the biggest reason i’m using docker (podman) is that there is shitty software out there (minecraft bedrock server) that won’t let you move the ports it runs on around. Using docker + macvlan lets each instance have its own IP and think it’s running by itself. Also i get some security running the server in a container. This is local network only, so it’s not a huge issue.

I am, however, running a bunch of LXC containers. Basically I treat them as lightweight VMs and run Alpine Linux and a small group of services on them.

I think that Red Hat’s Toolbx will solve this problem for you and others.