We don’t fully understand the appeal of asking an AI for a picture of a gorilla eating a waffle while wearing headphones. However, [Micael Widell] shows something in a recent video that might be the best use we’ve seen yet of DALL-E 2. Instead of concocting new photos, you can apparently use the same technology for cleaning up your own rotten pictures. You can see his video below. The part about DALL-E 2 editing is at about the 4:45 mark.

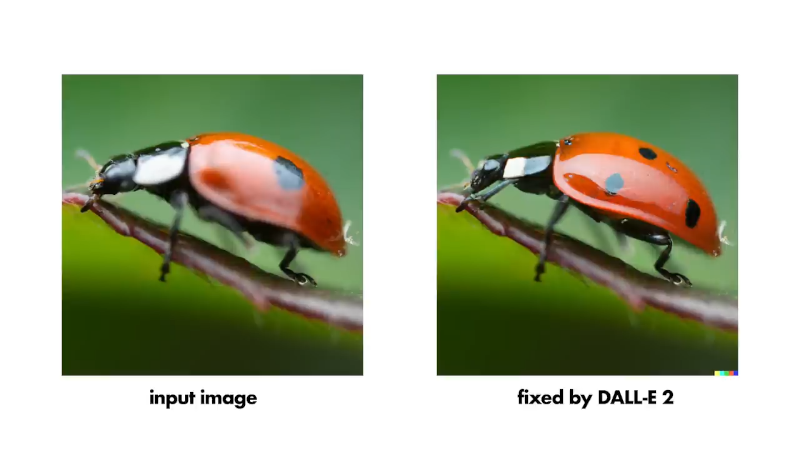

[Nicholas Sherlock] fed the AI a picture of a fuzzy ladybug and asked it to focus the subject. It did. He also fed in some other pictures and asked it to make subtle variations of them. It did a pretty good job of that, too.

As [Micael] points out, right now the results are not perfect, but they are really not bad. What do you think systems like this will be able to do in a few years?

We might not totally agree with [Micael’s] thesis, though. He thinks photography will be dead when you can just ask the AI to mangle your smartphone pictures or just ask it to create whatever picture you want out of whole cloth. Sure, it will change things. But people still ride horses. People even still take black and white photos on film even though we don’t have to anymore.

Of course, if you made your living making horseshoes or selling black and white film, you probably had to adapt to changing times. Some did and — of course — some didn’t. But this is probably no different than that. It may be that “unaugmented” photography becomes more of a niche. On the other hand, certified unaug photos (remember, you heard that phrase here first) might become more valuable as art in the same way that a handcrafted vase is worth more than one pushed off a factory line. Who knows?

What do you think? Is photography dying? Or just changing? If AI replaces photographers, what will it go after next?

We think our Hackaday jobs are safe for a little longer. We wonder if DALL-E could learn to do photographic time travel.

Digital hasn’t even killed film, so no.

Digital “killed” film roughly fifteen years ago, when Canon’s 35mm CMOS sensors blew past film in dynamic range, resolution (particularly at higher ISOs), and sensitivity. It didn’t take long for APS-C sensors to surpass 35mm film, and for Micro Four Thirds (m4/3) to follow.

Digital “killed” film in TV production around roughly the same time.

It took longer in cinema, mostly because the workflow and theater projection lagged a bit. But now you can buy a $1.5K MacBook Air that will edit and render 8K footage without breaking a sweat, even small community theaters have gone digital because it’s cheaper and more flexible, and 4K televisions are just about standard in retail.

“even small community theaters have gone digital because it’s cheaper and more flexible”

That, and few first-run non-IMAX movies are available on film anymore, which forces the change and saves the studios and distributors buckets of cash they used to spend shipping cans of film around. Unfortunately, small community theaters that couldn’t afford the digital upgrade were closing even before the COVID restrictions; there’s one not far from here that’s still for sale.

Well, that kind of depends on the point at which something is “killed”. I can still process film myself, but the convenience of a high volume processing center a mile away is long gone. Every year there are fewer niches left where film is superior to digital.

Film photography is as dead as CDs (and Blu-ray is nearly out of hope too). Vinyl sales and retrocomputer prices may have soared, but those are nearly dead as well. When nostalgia is the only thing keeping alive a concept that was once popular, it’s as good as dead. Meanwhile, horse carriages are only for tourists.

Preach brother!

(It’s thanks to people believing that that I’m building my hoard at 25 cents apiece.)

And yet Kodak and Fuji are building new plants to respond to the huge demand for film. Color 35mm film has been sold out for almost a year now here in the Netherlands, and b/w film is the only thing available at the moment. As I shoot more 120 film (Roundshot), I can get some emulsions like Portra, but Kodak Gold 200 is unobtanium as well. My favorite shop just got a shipment of Kodak Portra 800 and Ektar 100 last week; it was all gone in a day.

None of these are objective measures.

The camera film industry peaked in 1999 at what would be about $1.4 billion a year in sales in today’s money.

It’s now barely $10-20 million a year.

I agree; I still like playing with film too. And I have a stack of Kodak Gold 25/100/200/400 still to use :-)

>horse carriages are only for tourists

As I hath beith an Amish person, I resent that remark. Tourists, don’t get me started!

But I ain’t never punched a tourist even if he deserved it

An Amish with a ‘tude? You know that’s unheard of!

CDs are hardly dead; you can still buy new music CDs. Try buying a new Type IV cassette: not gonna happen. Few, if any, streaming music platforms deliver sound quality on par with CD, even in ideal situations. Dead formats are unobtanium, which means film is alive and well as an amateur medium.

Hey, even film didn’t kill painting; painting just became the domain of dedicated artists rather than a way to simply “record” pictures. I think film will probably go the same way.

When was it ever not a domain of dedicated artists? It takes a fair bit of skill.

Yes, but it was generally representational. Once photography could fill that niche, there was more experimentation; you know, more art for art’s sake, not just recording what a patron looked like.

The picture at the top of this article: was the AI asked to improve it or to modify it? Clearly the “dots” are completely different between the two pictures, so if the AI was only supposed to sharpen(?) it, it did a bad job…

DALL-E is a fancy content-aware fill on steroids. You can either give it a text prompt or designate part of a picture to fill in.

Yes, it probably tries to “understand” what the image is made of and then recreates it while keeping the original structure, which causes some details to change. Photography may therefore survive for cases where details are needed, but from an artistic point of view, for the human brain that only sees a cool photo of a ladybug, the dot pattern doesn’t matter, while it does if you want to identify its species.

“but from an artistic point of view, for the human brain that only sees a cool photo of a ladybug, the dot pattern doesn’t matter”

You and I have very different ideas of art and photography. If I wanted a stock picture of a ladybug, I’d… download a stock picture of a ladybug.

This is just incredibly stupid. It’s like taking a picture of your dog and getting back a photo from the Westminster Dog Show and it saying “are you sure you didn’t want this?”

Taken a photo with a modern smartphone lately?

Selfie is a pretty abstract concept now.

That’s the point though. If you take a picture, you’d expect it to actually look like you and not a person vaguely resembling you.

There are lots of people using ‘filters’ to postprocess them and post them on the socials.

So much that you don’t recognize them in real life. There goes that point.

>So much that you don’t recognize them in real life. There goes that point.

You don’t need photography for that. Just ask them to wash off the makeup.

DALL-E 2 was asked to focus the out-of-focus regions. It didn’t. It’s like applying a sharpen filter and getting back a picture of your grandma.

Filter application is consistent: applying a sepia filter won’t spontaneously turn you into your brother, father, or son.
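The contrast between a filter and a generator can be made concrete. Below is a minimal Python sketch of a classic sharpen filter, assuming a grayscale image stored as a list of rows of 0-255 integers; the 3×3 kernel is a common textbook choice picked for illustration, not anything DALL-E or the video is known to use. Each output pixel is a fixed weighted sum of the input pixel and its four neighbours, so the same input always produces the same output, and existing edges are exaggerated rather than new content being invented.

```python
from typing import List

Image = List[List[int]]

# A common 3x3 sharpening kernel (an illustrative choice). Its weights sum to 1,
# so flat regions pass through unchanged and only edges get boosted.
KERNEL = [
    [0, -1, 0],
    [-1, 5, -1],
    [0, -1, 0],
]


def sharpen(img: Image) -> Image:
    """Convolve a grayscale image with KERNEL, clamping results to 0..255."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0
            for ky in range(3):
                for kx in range(3):
                    # Replicate border pixels where the kernel hangs off the edge.
                    sy = min(max(y + ky - 1, 0), h - 1)
                    sx = min(max(x + kx - 1, 0), w - 1)
                    acc += KERNEL[ky][kx] * img[sy][sx]
            out[y][x] = min(max(acc, 0), 255)
    return out


if __name__ == "__main__":
    flat = [[100] * 4 for _ in range(4)]
    print(sharpen(flat) == flat)  # True: no edges, nothing changes
    edge = [[50, 50, 200, 200] for _ in range(3)]
    print(sharpen(edge))  # [[50, 0, 255, 200], [50, 0, 255, 200], [50, 0, 255, 200]]
```

The dark/light step gets darker on one side and lighter on the other, which is all "sharpening" means here; run it twice on the same picture and you get the same picture back both times.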

Indeed, the head also looks more like an ant to me; certainly not quite right for the ladybirds we get round here…

Have to admit I can’t quite put my finger on what is wrong, as I’m not that much of a bug expert; only that it doesn’t look right and makes me think of an ant…

Yeah, that’s just an unrelated picture.

Looking at the spots on the ladybugs back, no, it’s not.

They say a leopard can’t change his spots… allegedly this lady beetle can!

Also, honestly, this might be underselling what higher-end smartphones already do in picture enhancement; this isn’t just the good olde “first lecture in image processing” stuff anymore. Your phone automatically recognizing faces in the picture, selectively enhancing skin appearance, improving clarity in foreground texture… all of that has been in the iPhones, Pixels, and Galaxys of the last couple of years, and those are somewhat more application-specific uses of similar neural network technologies.

I think some of the newer ones have dedicated neural processors. If not, then soon.

And it generally looks awful, or at least awfully faked or ‘photoshopped’ (other BETTER* image processing tools are available)…

*Tongue in cheek, really; all the better image processing suites are so similar now that it’s just down to the specific job and familiarity with the workflow.

I thought it was interesting that the algorithm didn’t just sharpen things up; it actually changed some stuff. For instance, the spots are different, and the forward left leg has been moved slightly, opening a little gap between the leg and the lower jaw.

At first I thought it was two different pictures, but the video flicks between them, A/B style, and most of it lines up, so I’m thinking it’s an “editorial decision” by the program.

I can’t help but note that the program has also added one spot that looks suspiciously like the number 6 right at the top of the shell. This leads me to baselessly speculate that it’s a sign the software is playing with us and there are two more 6s just out of view on the far side.

Photography is, however, used a lot for documentation, and generalized image-enhancing tools can often lead to inaccuracies. (Though some enhancements do help bring out useful data; that is a whole topic in itself.)

So the skill to produce high-quality photography for documentation will always remain, even if a lot of documentation these days is shot with ordinary smartphone cameras. (Though many smartphones can erase the very details one wanted to document, like fractures in a surface, color variations, etc.)

Where it really goes awry is when the algorithm starts feeding back on itself, since the available training material will contain more and more images that were “enhanced” algorithmically in the first place…

Kinda like what anime/manga did to cartoons. People copying other peoples’ work converged into a sort of “symbolic” representation that looks pretty alien and incomprehensible if you’re not used to it.

But then it gets trippy…

https://i.imgur.com/R5C5m0E.mp4

Think of it as “artist’s conception”: working with a little information, it just made up something.

“A little information” is misleading in this case: it’s working with A LOT of information, probably millions of already existing images of bugs and ladybugs in particular to form a composite.

Seeing as you can trust digital imaging and processing systems on the authenticity of the end result less with every month of technical progress, I can see a new bloom of instant photo technology (Polaroid-like) that goes light-direct-to-paper/plastic with no digital processing in the middle. That would be as “unaug” as it can possibly be, and, I suppose, well paid too.

It’s not photography that this will kill, it’s people.

As others have mentioned, the “improved” image contains things that didn’t exist in the original. Today it inserts new spots or patterns; tomorrow it will insert invented terrorists, enemy aircraft or missiles, armament in an unarmed crowd.

We’re automating the manufacture of propaganda and then accepting it as whole truth or sufficiently truth-like as to effectively become fact. So sharp and careless a knife will cut our throats.

This. So much this. This isn’t an example of clever software cleaning up bad focus; this is an example of software *changing the content* of an image. The “after” picture is a lie.

+1

This is just one aspect of the ‘blandization’ of our lives. If we settle for AI processing instead of high-detail images, the AI will make up the missing information. It will do that by selecting what is most expected, most popular, most common. What will be lost is the unusual, the surprising, the original. It is just the same with AI-written articles: they will be readable and will contain the same information, but will be devoid of any idiosyncrasies, i.e. they will be a lot more like other articles. What our minds consume will be more and more bland, and, considering the average will always be the AI’s reference point, the process will continue on and on.

Well said, I 100% agree. This feels like visual junk food.

Does this lead to rights troubles though? Remember the “mechanical tabulation of data does not a copyright make” case law. If it took image data, and presented it in a different manner, and isn’t human art, then is it just mechanically tabulated data?

Wasn’t photoshop going to kill photography? Very few jobs go away, they just change.

Also, this stuff is fun but it’s not sharpening photos, it’s creating new art. Run this on a wedding photo and I’m sure you’ll get in-focus people, but they may not be the people who were there!

“Photoshop” is not going to take photographs or generate them out of nothing, so no. It was originally made to replicate the retouching and developing processes in the digital domain.

Anything with “AI” in the title is “hot” these days. Even (and maybe especially) on Hackaday. I usually just hit the next button but the business about killing photography suckered me in this time.

Some people say that cell phones have killed photography, or at least democratized it (which I am all in favor of). So what does it mean to “kill photography”? I mean, if you aren’t shooting on film with a 4×4 camera, is it even photography? Some seem to think that is exactly the rule to apply.

So, go make some rules and definitions, take two aspirin, and call me in the morning. Meanwhile I will be busy with my camera getting raw captures, editing them in Lightroom, and being basically happy. Which is all that counts in the end.

They’re probably going to get a C&D letter from TPTB saying they should have titled it “Millennials are killing photography with…”

;-)

If DALL-E 2 isn’t really rubbish, then show me an image of “An octopus in a space suit fixing a satellite.”

It’s Midjourney rather than DALL-E 2, but here you go:

https://imgur.com/a/GgVmkCA

When I look at a photograph, I am thinking: what can I learn here? I look at the details to see what is unusual. If I had a sharp image of the ladybug, I’d want to notice the shapes of the legs, the spot patterns, and other things. So what use is it to me to look at a computer-generated image? There should be a field in the EXIF data that is set if any AI image manipulation has been applied. I guess it’s OK for crap azz photographers to feel good about themselves.

Yeah, this goes way past “enhancement” and ends up in “what looks like a photograph is actually a lie.”

How long is it going to be before someone gets wrongly convicted of a crime on the basis of such “photographic evidence”? How long is it going to be before someone gets KILLED?

With the advent of Instagram, isn’t “unaugmented” photography /already/ a niche?

“picture of a gorilla eating a waffle while wearing headphones” sounds like a million dollar NFT 6 months ago.

I would like a picture of a coiled up python snake looking very uncomfortable while getting squashed by a naked foot with curly toes.

I hate this. I mean, it’s cool technology, but I dislike the idea of photos getting even farther from showing the world as it is. Photoshopped women in magazines have caused all sorts of body image issues, now imagine if every image we took was altered automatically to be more “appealing” or “correct”. I think the psychological effect would be even worse, I don’t mean just for beauty standards.

Well yes, Han did shoot first. ;-)

Even Coccinella magnifica gets confused, because it doesn’t even know anymore how many spots it has.

As many have spotted, the number of spots changed.

I’m looking forward to this kind of garbage being used as evidence before a court of justice.

Hours of fun!

In a court of law the photographer has to be called, to verify the image is what was really there.

Of course if it’s been heavily photoshopped, altered etc, that should come out during questioning.

Photography isn’t just taking the photo, although of course that’s an important first step.

I’ve done my time in darkrooms, mainly black and white but also some colour slide processing.

There is also:

how you manipulate the image (enlarger, processing time, solarisation, exposure onto paper, how hard/contrasty the paper is etc etc) and similar techniques in the digital domain.

Then there’s how you present and frame it….

Interesting comments above. I never liked Kodak Gold when it came out, preferring the previous-generation ISO 100 (was it Ektar?); I found the Gold colour saturation unnatural. One day I will dust down my enlarger and do a few more prints.

I agree that this is an interesting but bad example of using this sort of technology. I watch lots of Micael Widell’s videos and would say that these digital technologies enable macro photography. When working at high magnifications you often have an extremely narrow depth of field and need to stack multiple images to get the subject in focus, which requires AI or a complex algorithm to compute. The difference is that the output of stacking is real, whereas what we are shown here is the AI’s interpretation of the subject.
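To illustrate why stacked output counts as "real", here is a minimal Python sketch of the simplest form of focus stacking: for every pixel, copy the value from whichever frame is locally sharpest there, using the absolute 4-neighbour Laplacian as the sharpness measure. This is an assumption-laden toy, not how any particular stacking tool works: it assumes the frames are already aligned grayscale images of equal size stored as lists of rows, and real tools also align frames, blend across seams, and use better sharpness metrics. The property that matters is that every output pixel is copied from a captured frame, so nothing is invented.

```python
from typing import List

Image = List[List[int]]


def laplacian_abs(img: Image, y: int, x: int) -> int:
    """Absolute 4-neighbour Laplacian at (y, x), replicating border pixels."""
    h, w = len(img), len(img[0])

    def px(yy: int, xx: int) -> int:
        return img[min(max(yy, 0), h - 1)][min(max(xx, 0), w - 1)]

    return abs(4 * px(y, x) - px(y - 1, x) - px(y + 1, x) - px(y, x - 1) - px(y, x + 1))


def focus_stack(frames: List[Image]) -> Image:
    """Per pixel, copy the value from the frame with the highest local sharpness."""
    h, w = len(frames[0]), len(frames[0][0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # max() keeps the first frame on ties, so the choice is deterministic.
            best = max(frames, key=lambda f: laplacian_abs(f, y, x))
            out[y][x] = best[y][x]
    return out


if __name__ == "__main__":
    # Hypothetical 1x6 frames: the first is detailed on the left and flat on
    # the right, the second is the opposite.
    near = [[0, 255, 0, 128, 128, 128]]
    far = [[85, 85, 85, 0, 255, 0]]
    print(focus_stack([near, far]))  # [[0, 255, 0, 0, 255, 0]]
```

Each output value can be traced back to the same position in one of the input frames, which is exactly what a generative model filling in a blurry region does not guarantee.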

As someone who takes handheld focus-stacked images at greater than 2x magnification, I often rely on Photoshop’s content-aware fill to populate areas of the frame that I might not have covered in the field. I think this technology would probably be good for stuff like that; I would never use it to generate the subject, just the backdrop if required.

30 seconds in, video goes “photos now get 30% less likes on Instagram than they used to… it’s not worth it anymore”.

Oh the humanity!

20 years ago, if I saw a shot (for example because it’s easy to describe) of a red barn that really popped with brooding storm clouds over it looking very menacing, I’d say nice shot, well done, presuming the photographer had to wait until the right moment, frame it right, use the right lens and filter. 5 years later I’d have assumed that the barn was dull red and the sky not so dark and it was post processed with digital filters. Now, I’ll wonder in passing how many different shots are composited together, whether it was a brown barn under clear skies originally. I don’t see the photographer in there any more… it’s a picture… I question whether it’s still a photograph… I do not see evidence of the skill of the dude holding the camera… I don’t really care.

Next it will interpret the user’s request in comedic ways and put that guy with the FB ads out of business.