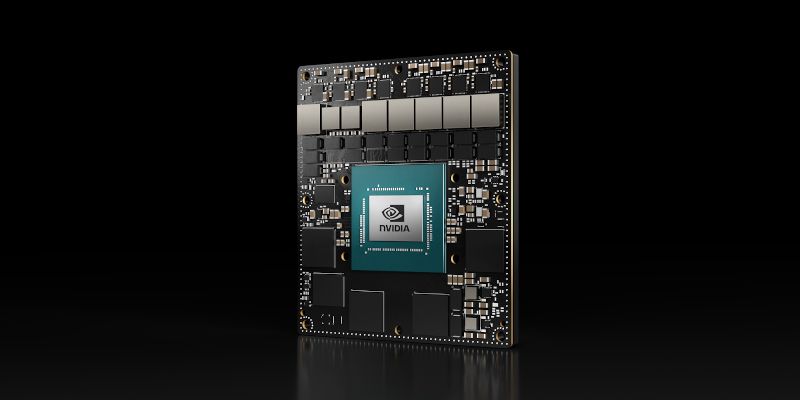

Back in March, NVIDIA introduced Jetson Orin, the next generation of their ARM single-board computers intended for edge computing applications. The new platform promised to deliver “server-class AI performance” on a board small enough to install in a robot or IoT device, with even the lowest tier of Orin modules offering roughly double the performance of the previous Jetson Xavier modules. Unfortunately, there was a bit of a catch: at the time, Orin was only available in development kit form.

But today, NVIDIA has announced the immediate availability of the Jetson AGX Orin 32GB production module for $999 USD. This is essentially the mid-range offering of the Orin line, which makes releasing it first a logical enough choice. Users who need the top-end performance of the 64GB variant will have to wait until November, while there’s still no hard release date for the smaller NX Orin SO-DIMM modules.

That’s a bit of a letdown for folks like us, since the two SO-DIMM modules are probably the most appealing for hackers and makers. At $399 and $599, their pricing makes them far more palatable for the individual experimenter, while their smaller size and more familiar interface should make them easier to integrate into DIY builds. While the Jetson Nano is still an unbeatable bargain for those looking to dip their toes into the CUDA waters, we could certainly see folks investing in the far more powerful NX Orin boards for more complex projects.

While the AGX Orin modules might be a bit steep for the average tinkerer, their availability is still something to be excited about. Thanks to the common JetPack SDK framework shared by the Jetson family of boards, applications developed for these higher-end modules will largely remain compatible across the whole product line. Sure, the cheaper and older Jetson boards will run them slower, but as far as machine learning and AI applications go, they’ll still run circles around something like the Raspberry Pi.

I will use it with GPT-3, and see if it can seduce old men/women into sending me money. It would pay for itself faster than a Bitcoin miner. It would learn from its own output, to the point of becoming the most seductive entity on the planet. In fact, it will also seduce hackers into buying more modules. See? You already want one, don’t you? Well hurry, before they run out of stock.

I’ll take 8.

No, 300!

(French guards on castle wall)

We already have one of our own!

The more you buy the more humans you enslave!

So is this a reference to The Jetsons?

At whatever point of the pandemic it was that I, unfortunately, discovered the subculture that snorts and drinks their own aged urine as a health aid, I found some of them referring to it as “orin”.

Harmful, disgusting and useless as a cure for anything, but still not as harmful or useless as a lockdown.

Here I am looking for a Coral at less than 1000% markup. At least this should only end up at 200-300%.

Unfortunately Jetson Nanos have been impossible to find for well over a year. The “$59” board is a $350 purchase, if you can find one (spoiler: you won’t). The prices and availability still haven’t recovered as of the beginning of August.

That will seriously increase the count of detected bananas!

At $399 for the smallest SO-DIMM, I’d be pleasantly surprised if someone makes a successful IoT product out of any of these.

In my experience, things retail for a minimum of 4x the BOM cost, with the distributor and retailer each charging double their cost. Any quantity discount a developer would get would be eaten up by the cost of the labor and materials to turn a bare SO-DIMM module into a marketable product. Even selling at $1200 would leave no margin for the developer/producer, and what IoT device are people going to buy that costs the same as a refrigerator?
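The arithmetic behind that rule of thumb can be sketched in a few lines. This is only an illustration of the figures quoted above: it assumes the $399 module is the entire BOM (a real product would add more), and the function names `retail_price` and `max_bom_for_retail` are made up for the example.

```python
def retail_price(bom_cost, stage_markups=(2.0, 2.0)):
    """Retail price if each stage (distributor, retailer) multiplies its cost.

    The default of two 2x stages is the commenter's rule of thumb,
    not a published pricing model.
    """
    price = bom_cost
    for markup in stage_markups:
        price *= markup
    return price


def max_bom_for_retail(target_retail, stage_markups=(2.0, 2.0)):
    """Largest BOM cost that still fits under a target retail price."""
    bom = target_retail
    for markup in stage_markups:
        bom /= markup
    return bom


# Assumption: the smallest SO-DIMM module ($399) is the only BOM item.
module_cost = 399.0
print(retail_price(module_cost))    # 1596.0
print(max_bom_for_retail(1200.0))   # 300.0
```

Under those assumptions the module alone implies a retail price of roughly $1,600, and a $1,200 shelf price would only cover a $300 BOM, which is consistent with the claim that $1,200 leaves no margin.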

They’re all vastly over-priced, IMHO. Cool and interesting, to be sure, but ultimately not viable. Arm and RISC-V will eat their lunch if NVidia can’t get their prices down.

Someone is buying, otherwise the prices wouldn’t be staying that high for that long.

The price is high because the demand is low. They somehow need to pay back the investment they made in launching such a product. Not worth it for tinkering IMHO, but it’s a nice gimmick for tricking investors into buying more stock.

https://finance.yahoo.com/quote/NVDA/

People don’t use these for IoT – they use them for robotic perception/sensing and control, and they use these as opposed to a less capable CPU-only ARM device because the onboard GPU is extremely powerful, and they have far more ISP channels for cameras than most other ARM SoCs.

A retail $999 SoM optimized for inference and low power consumption? Sounds like Nvidia is targeting auto makers to support their efforts toward autonomous driving and similar applications where cost sensitivity is not the primary concern.

Also, large auto makers will be paying a fraction of that price in volume.