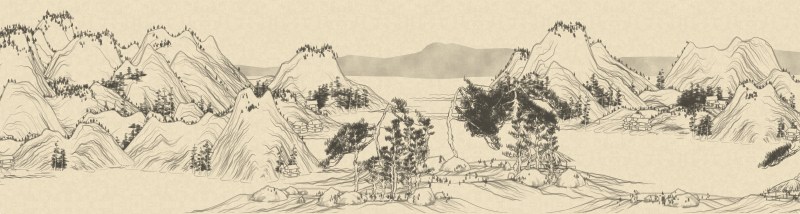

Traditional Chinese landscape scrolls can be a few dozen feet long and require the viewer to move along their length to view all the intricate detail in each section. [Dheera Venkatraman] replicated this effect with an E-Ink picture frame that displays an infinitely scrolling, Shan Shui-style landscape that never repeats.

The landscape is procedurally generated by a script created by [Lingdong Huang]. It consists of a single HTML file with embedded JavaScript, so you can run it locally with minimal resources, or view the online demo. It is inspired by historical artworks such as A Thousand Li of Rivers and Mountains and Dwelling in the Fuchun Mountains.
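For readers curious how a scroll can keep going without storing anything, here is a minimal Python sketch of one common approach. It is not Lingdong Huang's code (his generator is JavaScript and far more elaborate); it simply assumes 1D value noise whose control points are derived only from a seed and their index, so any column can be recomputed on demand. `SPACING`, `lattice_value`, `height` and `columns` are hypothetical names chosen for illustration.

```python
import random
from itertools import count, islice
from typing import Iterator

SPACING = 32  # hypothetical: columns between random control points


def lattice_value(seed: int, i: int) -> float:
    # One pseudo-random height per control point, derived only from (seed, i),
    # so any stretch of the scroll can be regenerated without storing it.
    return random.Random(f"{seed}:{i}").random()


def height(seed: int, x: int) -> float:
    # Value noise: ease between the two control points on either side of column x.
    i, frac = divmod(x, SPACING)
    t = frac / SPACING
    t = t * t * (3 - 2 * t)  # smoothstep: zero slope at each control point, so no kinks
    a, b = lattice_value(seed, i), lattice_value(seed, i + 1)
    return a + (b - a) * t


def columns(seed: int) -> Iterator[float]:
    # Unbounded stream of ridge heights; the scroller just keeps pulling columns.
    for x in count():
        yield height(seed, x)


if __name__ == "__main__":
    print([round(h, 3) for h in islice(columns(42), 8)])
```

Because each control point depends only on `(seed, i)`, old columns can be discarded as they scroll off screen and regenerated identically if ever needed.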

[Dheera]’s implementation uses a 10.3″ E-Ink display mounted in an off-the-shelf picture frame, connected to a Raspberry Pi Zero running a forked version of [Lingdong]’s script. It does a decent job of avoiding the self-illuminated electronic look and creates a piece of decor that you could easily just stand and stare at for a long time.
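The post doesn't describe how the rendered landscape is converted for the panel. As a hedged illustration, assuming a 4-bit (16-shade) grayscale e-ink panel, one common step is Floyd-Steinberg error diffusion, which maps 8-bit gray values to the nearest available shade while spreading the rounding error to neighbouring pixels. `LEVELS`, `quantize` and `dither` are illustrative names, not taken from [Dheera]’s fork.

```python
from typing import List

LEVELS = 16  # assumption: a 4-bit (16-shade) grayscale e-ink panel


def quantize(value: float, levels: int = LEVELS) -> int:
    """Map a 0-255 gray value to the index of the nearest of `levels` evenly spaced shades."""
    step = 255 / (levels - 1)
    return min(max(round(value / step), 0), levels - 1)


def dither(image: List[List[int]], levels: int = LEVELS) -> List[List[int]]:
    """Floyd-Steinberg error diffusion; returns a shade index (0..levels-1) per pixel."""
    h, w = len(image), len(image[0])
    buf = [[float(p) for p in row] for row in image]  # working copy that accumulates error
    out = [[0] * w for _ in range(h)]
    step = 255 / (levels - 1)
    for y in range(h):
        for x in range(w):
            idx = quantize(buf[y][x], levels)
            out[y][x] = idx
            err = buf[y][x] - idx * step  # rounding error at this pixel
            # Spread the error onto neighbours that have not been visited yet.
            if x + 1 < w:
                buf[y][x + 1] += err * 7 / 16
            if y + 1 < h:
                if x > 0:
                    buf[y + 1][x - 1] += err * 3 / 16
                buf[y + 1][x] += err * 5 / 16
                if x + 1 < w:
                    buf[y + 1][x + 1] += err * 1 / 16
    return out
```

For example, `dither([[0, 64, 128, 192, 255]])` returns one row of shade indices; the resulting 4-bit values would then be handed to whatever display driver the panel uses.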

Computer-generated art is making a lot of waves with the advent of AI models like DALL-E and Stable Diffusion. The ability to bring original art into existence with a simple phrase will undeniably have a profound long-term effect on the art world.

No video of it in action? Then who cares.

E-ink is not noted for its “action” capabilities. But thanks for your comment.

The scrolling action is perhaps the main attraction of this artwork, so yes, I would say a video is rather essential.

The slow update capabilities of e-ink are almost a feature given the speed of the scrolling action.

But a timelapse would have been nice… No?

I care. Thank you for your attention.

It runs at (roughly) 0.0033 fps.

A 5-minute video would just show one single frame change. Not much action, there is.
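For anyone wanting to check the arithmetic in this exchange, here is a quick Python sketch using the 0.0033 fps figure quoted above. The 30 fps playback rate and the one-day capture window are assumptions picked for illustration, to show roughly how long a timelapse would need to be.

```python
FPS = 0.0033  # refresh rate quoted in the thread

seconds_per_frame = 1 / FPS              # about 303 s, i.e. roughly 5 minutes
frames_in_5_min_video = 5 * 60 * FPS     # about 0.99: a single frame change

# Assumed timelapse: capture for one day, play back at 30 fps.
PLAYBACK_FPS = 30
frames_per_day = 24 * 60 * 60 * FPS                    # about 285 frames
day_timelapse_seconds = frames_per_day / PLAYBACK_FPS  # about 9.5 s of video
speedup = PLAYBACK_FPS / FPS                           # about 9091x real time

print(f"{seconds_per_frame:.0f} s per frame, {frames_in_5_min_video:.2f} changes in 5 min")
print(f"one day -> {frames_per_day:.0f} frames -> {day_timelapse_seconds:.1f} s at {PLAYBACK_FPS} fps")
```

So a full day of scrolling would fit in a timelapse of under ten seconds.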

I wonder if time lapse has been invented yet…

+1 to the other three comments you got. Just wanted to make sure you noticed the URL: there’s a website if you want to see it “in action” without the e-ink limitations :)

I agree. A video would be nice. The demo is useless, since it only scrolls one frame.

And for the smart guys who answered before: there is this thing called timelapse.

There’s a button to (generate more and) scroll further ;)

This link shows the algorithm in action:

https://lingdong-.github.io/shan-shui-inf/

It scrolls to the right of the image by itself. After that you need to press the right arrow (on the side of the image) to generate a new extension of the image. Then you can test the MTBF of your mouse by endlessly clicking on the right arrow… for an endless landscape.

Or …. you can install a mouse autoclicker (there’s probably a project for that on this bat channel)

Mice are getting too expensive for live MTBF testing, after all.

Upper left there is a Settings tab where auto-scroll (2 seconds) can be enabled. So no need to mouse click!

Yes, that would do it, :-D

Thank you for the link! The generated landscapes are quite beautiful. It’s something I might enjoy on a panel that’s much larger than 10″, so I wonder whether they would look as nice on Samsung’s The Frame TV (QLED, but with an anti-glare matte display in Art mode) as on an E Ink display.

I made a list of the people who are interested in your comment:

1.

Not to be a jerk, but this is not a “landscape that never repeats”. The display can only show 16^(1872×1404) distinct images, so that is the maximum number of frames it can scroll through before it has to start repeating them. Just sayin’.
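The size of that number is easier to grasp in logarithms. A short Python sketch, taking the commenter's 1872×1404 resolution and 16 gray levels as given (the 16-level figure is the commenter's assumption about the panel):

```python
import math

WIDTH, HEIGHT = 1872, 1404  # panel resolution quoted in the comment
GRAY_LEVELS = 16            # shades per pixel, per the comment

pixels = WIDTH * HEIGHT                          # 2,628,288 pixels
log10_images = pixels * math.log10(GRAY_LEVELS)  # log10(16 ** pixels)
exponent = math.floor(log10_images)
mantissa = 10 ** (log10_images - exponent)

print(f"{GRAY_LEVELS}^{pixels} ≈ {mantissa:.2f} × 10^{exponent}")
# prints: 16^2628288 ≈ 1.26 × 10^3164774
```

In other words, the count of possible frames is a number with about 3.16 million decimal digits.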

Heh! I bet you are the kind of guy who watches static on old analogue TVs in the hope of seeing something meaningful, eventually.

Technically correct, the best kind of correct.

Never mind that the sun will be burnt out before the display gets to show even a thousandth of a % of that

If a single frame in a video is identical to an earlier frame, do you say the video repeats?

Hmm meh, every frame has to repeat after that – even though they could be shown in infinitely different orders.

That is such a large number that you’d have to be chewing through them at 2.35*10^3164660 frames per second to go through all combinations before the heat death of the universe, so I think the fact that it can’t refresh that quickly means there is, effectively, no repetition.

By that logic, all images including real images of all time in the past and future would have been generated in that process…

By your logic, it would be just as correct to say that about a single binary pixel – it too will have shown all images of all time past or future after blinking just once! :)

The landscape doesn’t repeat. Frames might repeat, though.

Mathematicians will now start to talk about “disjunctive sequences”, but that would get pretty unfunny and pretty boring pretty fast.

Consider the number “pi” (π), where digits do re-appear, but the decimal representation never ends, nor enters a permanently repeating pattern.
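The distinction being drawn here can be made concrete with Champernowne's constant, 0.123456789101112…, a standard example of a disjunctive sequence in base 10. It is swapped in for π because whether π's digits are disjunctive is still unproven. Individual digits recur constantly, yet every finite digit string eventually appears and the expansion never settles into a repeating cycle. A minimal Python sketch:

```python
from itertools import count, islice
from typing import Iterator


def champernowne_digits() -> Iterator[str]:
    """Yield the digits after the point of 0.123456789101112...: 1, 2, 3, ... written out in order."""
    for n in count(1):
        yield from str(n)


def prefix(k: int) -> str:
    """First k digits of the sequence."""
    return "".join(islice(champernowne_digits(), k))


head = prefix(15)
print(head)                      # 123456789101112
print(head.count("1"))           # the digit 1 already appears 5 times
print("1404" in prefix(10_000))  # True: "1404" shows up once 1404 itself is written out
```

Frames are like the digits here: they recur, but the sequence as a whole does not loop.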

I will point out that the current value of pi is about £1.25 for a steak and kidney one.

Frankly, with the number of frames available and the time taken to change frames, the practical difference between “it never repeats” and “you’ll probably never notice a frame repeating” is both trivial and meaningless ….

…. unless you are really into that kind of thing (yes, I accept that theorists raise valid arguments, but they are irrelevant to most of us, not being theorists).

Where are you procuring your pi? Last month the ones I bought were 2.5 quid.

The local farm shop bakery – they’re best straight from the oven.

Don’t get many theorists around here …. mostly dairy farmers, who tend to deal purely in down to earth stuff.

If I look at the display now and see an image of a landscape, then it changes, and then I look at it again next week and see exactly the same image I saw the first time, I’d describe that in English as “the landscape repeated”! :)

If I say something and then later say it again, I am repeating myself – even if I say something else in between and afterwards.

At 0.0033 fps, each of your 16^(1872×1404) images should show up once every 10^3164777 seconds. The age of the universe is less than 4.4*10^17 seconds (estimated at 13.82 billion years). I think it’s a safe bet you won’t see the same frame twice in your lifetime.
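The same estimate can be reworked in log space so it fits in floating point. A minimal Python sketch; the resolution, 16 gray levels, 0.0033 fps and 4.4×10^17 s figures are the ones quoted in this thread, and "full cycle" here means showing every possible frame once at that rate.

```python
import math

FPS = 0.0033                 # refresh rate quoted in the thread
AGE_OF_UNIVERSE_S = 4.4e17   # upper bound quoted in the comment

# log10 of the number of distinct 1872x1404, 16-level frames
log10_images = 1872 * 1404 * math.log10(16)
# Time to show every frame once: images / FPS, computed as a difference of logs
log10_cycle_seconds = log10_images - math.log10(FPS)
# How many universe lifetimes that is, again as a power of ten
log10_universe_lifetimes = log10_cycle_seconds - math.log10(AGE_OF_UNIVERSE_S)

print(f"full cycle ≈ 10^{log10_cycle_seconds:.1f} s "
      f"≈ 10^{log10_universe_lifetimes:.1f} universe lifetimes")
```

Rounded to the nearest power of ten, the cycle time comes out at the 10^3164777 seconds stated above.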

The difference between 1 nanosecond and 10^3164777 seconds is infinitely smaller than the difference between 10^3164777 seconds and infinity! :)

There are infinitely many Pizza Huts in there, see if you can spot one! Should be easy.

There’s a link to the demo in the description…

None of the actual e-ink display, though. I’m wondering whether it runs really slowly to avoid e-ink artifacts.

Lovely idea. I might just steal, um, borrow the code and run it as a screensaver.

I just found an easter egg in the demo – or should I say pizza egg (Pizza Hut).

…… and period accurate power pylons?

Easter egg or DALL-E artifacts?

Easter eggs – they are in the code.

>The ability to bring original art into existence

Is it original, or just unique?

As far as I understand, the whole system is basically like doing a Google image search with some keywords for what the image is supposed to contain, then selecting the top ten images from the search results. Then you run an evolutionary algorithm over a bunch of noise until the result is statistically similar to the previously selected images, and finally have someone pick the best-looking result out of multiple iterations.

At all points, the algorithm is not making original art, but comparing, “Does this look like Andy Warhol? No? Then does this look like Andy Warhol? No? Then does this… “

No.

This gets very meta very fast, but making art in the style of another artist is still original art. I don’t see any reason to not call this original art, other than some vague definition of original art being limited to being created by humans. The question is not “Does this look like Andy Warhol?” it’s “Does this look like *any* Andy Warhol?”

In a sense, humans use a GAN-like process to refine their ability to draw a human figure, or to paint photorealistic images. You start with the basics when you’re young and keep refining your technique until you are very good at it. If you make an impressionist-style painting, it will be compared to the famous impressionists of history. That is how its qualities will be defined. That doesn’t mean it’s not original.

Go read the linked GitHub repo. It’s not AI; the author of this article just added that at the end with no relation to the project being highlighted. The algorithm used in this project seems to just use good old Perlin noise, as far as I can tell.
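If the repo does rely on Perlin-style noise, as suggested here, the core idea fits in a few lines. The Python below is a generic 1D gradient-noise sketch, not code from the shan-shui-inf repository: gradients are derived by seeding a PRNG with `(seed, i)` rather than from Perlin's permutation table, and `gradient`, `fade`, `perlin1d` and `ridge` are hypothetical names. Summing several octaves gives the jagged ridgeline typical of procedural mountains.

```python
import math
import random
from functools import lru_cache


@lru_cache(maxsize=4096)
def gradient(seed: int, i: int) -> float:
    # Pseudo-random slope at integer lattice point i, derived from (seed, i) only.
    return random.Random(f"{seed}:{i}").uniform(-1.0, 1.0)


def fade(t: float) -> float:
    # Perlin's "improved noise" easing curve: 6t^5 - 15t^4 + 10t^3.
    return t * t * t * (t * (t * 6 - 15) + 10)


def perlin1d(seed: int, x: float) -> float:
    i = math.floor(x)
    t = x - i
    # Each neighbouring gradient defines a line through zero at its lattice point.
    v0 = gradient(seed, i) * t
    v1 = gradient(seed, i + 1) * (t - 1)
    # Blend the two lines with the fade curve; the result is 0 at every integer x.
    return v0 + (v1 - v0) * fade(t)


def ridge(seed: int, x: float, octaves: int = 4) -> float:
    # Fractal sum: each octave doubles the frequency and halves the amplitude.
    total, amp, freq = 0.0, 1.0, 1.0
    for _ in range(octaves):
        total += amp * perlin1d(seed, x * freq)
        amp *= 0.5
        freq *= 2.0
    return total
```

Sampling `ridge(seed, x / 50)` for successive columns `x` would give one mountain silhouette; a renderer would then layer several such ridges with different seeds and shading.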

DALL-E and similar trained NNs have effectively learnt in the same way human artists do: by looking at a whole lot of existing art, remembering what subjects look like in different styles, and then producing objects in a given style when asked. People have the idea that they are somehow “copy and pasting” from a set of sample images, but that is not the case. They are somewhat cargo-cultish in what they produce (e.g. a NN trained on a bunch of images with signatures will often generate images with a word-like squiggle, because it has learnt that art often has some sort of squiggle near a corner), but so are human artists (e.g. artists who grab random Japanese characters to slap into an image).

Amusingly, “copy and paste from random images” is a human art style often used for concept art, and has a physical analogue in kitbashing.

Playing around with the demo, I noticed a little “Chinese traditional hut” appear, with a clear label on its roof spelling “Pizza Hut”. Funny Easter egg :-)

Unfortunately I can’t share the screen shot here.

You mean that funny pizza egg.

There are also power transmission towers.

Just finished an artist collab event here in Hawai’i. As the tech geek in the mix, I think this looks like a really fun piece for next year. The power pylons are a nice touch.

What a cool project. I love it.

This is cool! I do want to point out that Generative Art is not the same as AI art like what DALL-E or SD do, though! Generative Art is programming the computer, and it often takes good programming and maths skills (e.g. demoscene, VFX, graphics). And it’s often original. AI art uses ML models like GPT-3 to associate pictures with words and then tries to “predict” where pixels and colors should go – it is not original because it’s only possible by sourcing and processing huge amounts of content and examples, and it’s not programmed with generative or mathematical algorithms, but trained on millions of existing pictures and often needs tons of compute resources. This is Generative Art. It’s programmed by a human. All it needs is that HTML file, and it runs on low-end hardware.

Lol… I didn’t know ancient Japan had so many pizza huts

This is extremely cool. I feel inspired to build this! Thank you for sharing it!

Custom picture frame, not off-the-shelf. Thank you for posting this. I’ve been meaning to tinker with E-Ink. 🥰