Humans manage to drive in an acceptable fashion using just two eyes and two ears to sense the world around them. Autonomous vehicles are kitted out with sensor packages altogether more complex. They typically rely on some combination of radar, lidar, ultrasonic sensors, and cameras, all working in concert to detect the road conditions ahead.

While humans are pretty wily and difficult to fool, our robot driving friends are less robust. Some researchers are concerned that LiDAR sensors could be spoofed, hiding obstacles and tricking driverless cars into crashes, or worse.

Where Did It Go?

LiDAR is so named as it is a light-based equivalent of radar technology. Unlike radar, though, it’s still typically treated as an acronym rather than a word in its own right. The technology sends out laser pulses and captures the light reflected back from the environment. Pulses returning from objects further away take longer to arrive back at the LiDAR sensor, allowing the sensor to determine the range of objects around it. It’s typically considered the gold-standard sensor for autonomous driving purposes. This is due to its higher accuracy and reliability compared to radar for object detection in automotive environments. Plus, it offers highly-detailed depth data which is simply not available from a regular 2D camera.
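The range calculation described above is simple time-of-flight arithmetic. As a minimal sketch (the function names are illustrative and not taken from any particular LiDAR SDK), the distance is half the round-trip path travelled at the speed of light:

```python
# Speed of light in vacuum, metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to the reflecting surface for a given round-trip time."""
    # The pulse travels out and back, so halve the total path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_from_range(range_m: float) -> float:
    """Round-trip time for a pulse reflecting off a surface range_m away."""
    return 2.0 * range_m / SPEED_OF_LIGHT_M_PER_S


# An echo arriving 200 ns after the pulse left corresponds to roughly 30 m.
example_range_m = range_from_round_trip(200e-9)
```

The tiny time scales involved are why timing matters so much to an attacker: shifting a fake echo by a few nanoseconds moves the apparent object by the better part of a metre.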

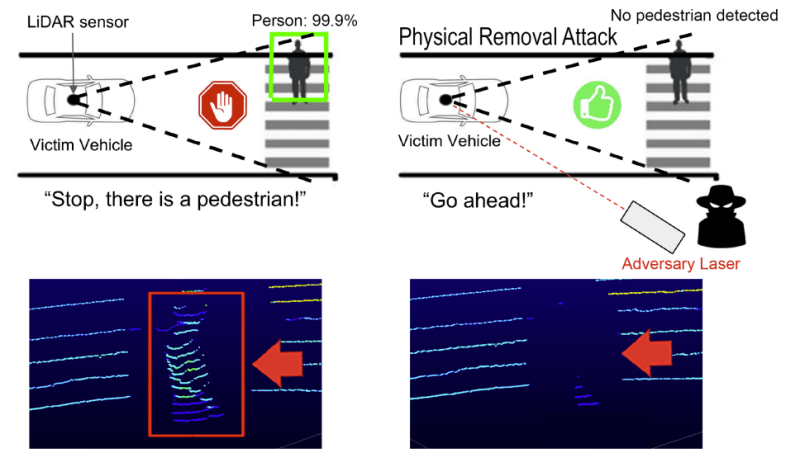

A new research paper has demonstrated an adversarial method of tricking LiDAR sensors. The method uses a laser to selectively hide certain objects from being “seen” by the LiDAR sensor. The paper calls this a “Physical Removal Attack,” or PRA.

The theory of the attack relies on the way LiDAR sensors work. Typically, these sensors prioritize stronger reflections over weaker ones. This means that a powerful signal sent by an attacker will be prioritized over a weaker reflection from the environment. LiDAR sensors and the autonomous driving frameworks that sit atop them also typically discard detections below a certain minimum distance from the sensor. This cutoff is typically on the order of 50 mm to 1000 mm.

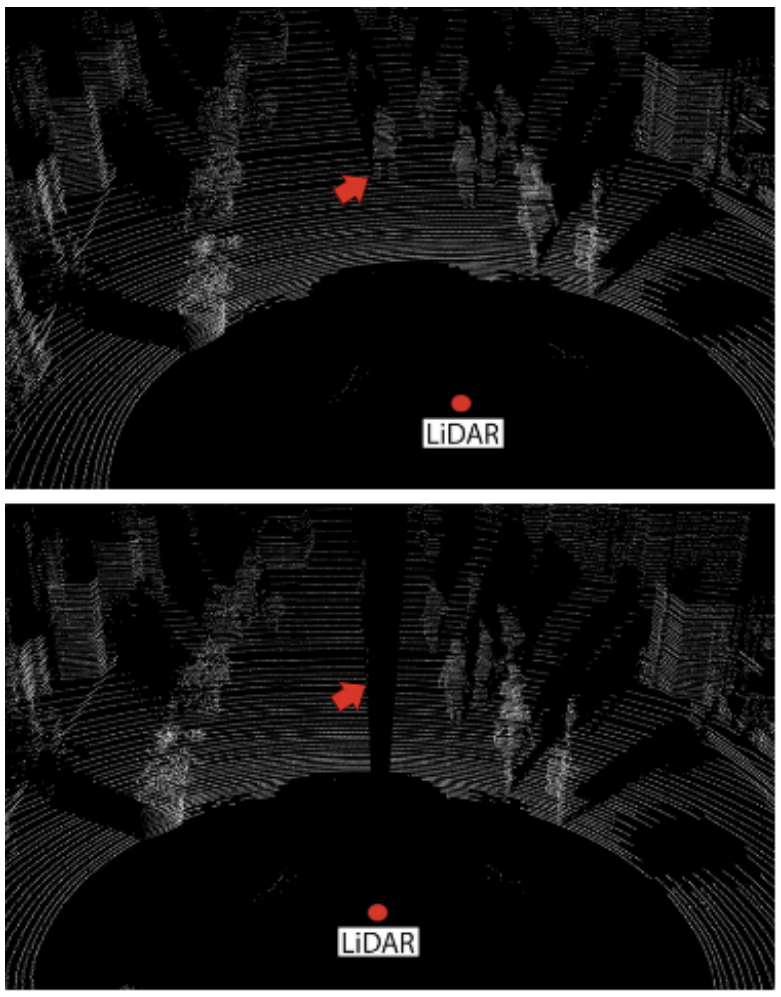

The attack works by firing infrared laser pulses that mimic the real echoes the LiDAR device is expecting to receive. The pulses are synchronised to the firing time of the victim LiDAR sensor, which lets the attacker control where the sensor perceives the spoofed points to be. By firing bright laser pulses to imitate echoes at the sensor, the attacker can typically make the sensor ignore the weaker real echoes picked up from an object in its field of view. This alone may be enough to hide the obstacle from the LiDAR sensor, but would seem to create a spoofed object very close to the sensor. However, since many LiDAR sensors discard excessively close echo returns, the sensor will likely discard the spoofed points entirely. If the sensor doesn’t discard the data, the filtering software running on its point cloud output may do so itself. The net effect is that the LiDAR shows no valid point cloud data in an area where it should be picking up an obstacle.
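To make the two ingredients of the attack concrete, here is a toy model of a single firing direction. It is only a sketch under stated assumptions: the sensor is assumed to keep just the strongest echo, the `MIN_RANGE_M` cutoff is a hypothetical value inside the 50 mm to 1000 mm band mentioned above, and none of the names come from a real driver or from the paper.

```python
from typing import List, Optional, Tuple

# (range in metres, received intensity in arbitrary units)
Echo = Tuple[float, float]

# Hypothetical near-range cutoff; real devices and drivers differ.
MIN_RANGE_M = 1.0


def reported_range(echoes: List[Echo],
                   min_range_m: float = MIN_RANGE_M) -> Optional[float]:
    """Range reported for one firing direction, or None if nothing is reported."""
    if not echoes:
        return None
    # Assumption 1: the strongest echo wins.
    strongest_range, _ = max(echoes, key=lambda echo: echo[1])
    # Assumption 2: returns closer than the cutoff are silently dropped.
    if strongest_range < min_range_m:
        return None
    return strongest_range


# A pedestrian 12 m away produces a weak but valid echo.
real_echoes = [(12.0, 0.3)]
# The attacker adds a bright fake echo timed to look like it came from 0.4 m.
attacked_echoes = real_echoes + [(0.4, 5.0)]
```

In this model `reported_range(real_echoes)` gives 12.0, while `reported_range(attacked_echoes)` gives `None`: the bright fake echo displaces the real one and is then filtered out as too close, leaving a hole in the point cloud.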

The attack requires some knowledge, but is surprisingly practical to achieve. One need only do some research to target various types of LiDAR used on autonomous vehicles to whip up a suitable spoofing apparatus. The attack works even if the attacker is firing false echoes towards the LiDAR from an angle, such as from the side of the road.

This has dangerous implications for autonomous driving systems relying on LiDAR sensor data. This technique could allow an adversary to hide obstacles from an autonomous car. Pedestrians at a crosswalk could be hidden from LiDAR, as could stopped cars at a traffic light. If the autonomous car does not “see” an obstacle ahead, it may go ahead and drive through – or into – it. With this technique, it’s harder to hide closer objects than those that are farther away. However, hiding an object even for a few seconds might leave an autonomous vehicle with too little time to stop when it finally detects a hidden obstacle.

Outside of erasing objects from a LiDAR’s view, other spoofing attacks are possible too. Earlier work by researchers has involved tricking LiDAR sensors into seeing phantom objects. This is remarkably simple to achieve – one only need transmit laser pulses towards a victim LiDAR that indicate a wall or other obstacle ahead.

The research team note that there are some defences against this technique. The attack tends to carve out an angular slice from the LiDAR’s reported point cloud. Detecting this gap can indicate that a removal attack may be taking place. Alternatively, methods exist that involve comparing shadows to those expected to be cast by objects detected (or not) in the LiDAR point cloud.
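The gap-detection idea can be sketched in a few lines. This is an illustration rather than the researchers' implementation: it assumes a spinning sensor whose valid returns can be reduced to a list of azimuth angles, and the `max_gap_deg` threshold is a made-up tuning parameter. A real scene with open sky or long empty roads would need a smarter baseline than "every direction returns something".

```python
from typing import List, Tuple


def find_azimuth_gaps(azimuths_deg: List[float],
                      max_gap_deg: float) -> List[Tuple[float, float]]:
    """Return (start, end) azimuths of every empty arc wider than max_gap_deg."""
    if not azimuths_deg:
        # No returns at all: the whole ring is one big gap.
        return [(0.0, 360.0)]
    ordered = sorted(float(a) % 360.0 for a in azimuths_deg)
    # Pair each azimuth with its successor, wrapping past 360 degrees.
    wrapped = ordered[1:] + [ordered[0] + 360.0]
    gaps = []
    for current, following in zip(ordered, wrapped):
        if following - current > max_gap_deg:
            gaps.append((current, following % 360.0))
    return gaps


# One return per degree, then the same scan with a slice carved out.
full_ring = [float(a) for a in range(360)]
attacked_ring = [a for a in full_ring if not 40.0 <= a <= 55.0]
```

With a 5 degree threshold, the full ring produces no gaps, while the attacked ring yields a single gap running from 39 to 56 degrees.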

Overall, protecting against spoofing attacks could become important as self-driving cars become more mainstream. At the same time, it’s important to contemplate what is and isn’t realistic to defend against. For example, human drivers are susceptible to crashing when their cars are hit with eggs or rocks thrown from an overpass. Automakers didn’t engineer advanced anti-rock lasers and super-wipers to clear egg smears. Instead, laws are enforced to discourage these attacks. It may simply be a matter of extending similar enforcement to bad actors running around with complicated laser gear on the side of the highway. In all likelihood, a certain amount of both approaches will be necessary.

XKCD addressed something like this a while ago.

https://xkcd.com/1958/

You need several PhD students and expensive equipment, plus trial and error, to get it to work. Easier to just hack the car remotely somehow, so I call it plausible but not a real lidar problem.

Meh… just because it’s easier to say the word ‘hack’ doesn’t mean it is actually easier.

Humans are and will always be more resistant to these kinds of shenanigans (and simple variation in road conditions which will always screw up AI). Generalizing information. The idea that truly autonomous self-driving cars are coming any time soon is laughable. The idea they’d be safer than human operators is even worse. Pure engineering hubris: assuming machines are just better than people by default, that this status won’t be a HUGE undertaking.

Plus, people will come along to disrupt self-driving cars because they represent a threat to labor interests and are a form of conspicuous progress/novelty. It’s not like the reddit-brained XKCD believes, that these situations are simple equivalents.

“Humans are and will always be more resistant to these kinds of shenanigans”

For humans the #1 accident excuse is “I didn’t see them” so humans most certainly have issues with their vision sensors.

Just try driving westbound in the late afternoon and then tell me that humans don’t have issues with their vision being degraded.

Oh, and by the way, did you know that people can go years and years without getting their vision checked by the DMV? They could basically be blind from cataracts and still be driving.

And then you can ask any bicyclist about the vision of human car drivers and be prepared for a laugh or two ( “I didn’t see them” ha ha ha)

The only reasonable assumption to make is that human vision is unreliable at best and possibly much worse.

With AI you also get zero situational awareness and very limited object permanence, so when e.g. a cyclist goes behind a bus stop they simply fall out of existence as far as the computer is concerned. People patch up their limited senses by running an internal simulation of the world that tries to predict what’s happening – as long as they bother to pay attention.

A self-driving car doesn’t have that sort of computational capacity, since it has to make do with the power budget and complexity of a laptop. That’s why it’s just running on what reduces to a bunch of if-then rules that react to simple cues. All the “smarts” are pre-computed elsewhere in the training simulations that try to extract those rules. That’s what makes it fragile to errors in perception much more than people are.

As everyone else on this planet, i firmly believe i drive safer than average, and that my driving is safer than a self-driving system, but that’s not the point. The point is that if everyone used these systems, everyone would be more safe, which makes these systems worth it, and with time they will only become better and safer, and our lives would be considerably improved.

I’m confused by your comment about how a laptop would be enough? A modern GPU or FPGA-chip is capable of insane amounts of data-crunching. It really is mind-boggling how much power these things possess, and how little it actually takes to put data through a neural network and “a bunch of if-then rules”. Even with just a bunch of if-then rules it’d be pretty simple to make it detect “Something went behind this bus-stop at a speed and direction that could make it collide with me in the next turn, if it doesn’t emerge after the bus-stop, proceed extra carefully”?

>i firmly believe i drive safer than average

And you’re very likely right.

Most people never have an accident, some few have many. The “average person” is simply an abuse of statistics which includes hidden assumptions, such as, everybody will be driving drunk for some number of miles in order to explain why that risk applies to the average person.

>A modern GPU or FPGA-chip is capable of insane amounts of data-crunching

Measured in computational complexity, it’s still on the level of an insect. It just doesn’t have enough capacity to “think”, except in very rigid rule-based terms, and it’s only barely able to recognize what’s going on.

In the 80’s they made a car drive itself by following a couple pixels off a camera pointed at the side of the road. It takes surprisingly little to get 95% of the job done, which masks the real complexity of the task. It’s the last 5% where things get exponentially more difficult.

>it’d be pretty simple to make it …

It would, but there you’re chasing exceptions and special cases, which quickly explode into the millions, and every time you add another rule you need to make sure it doesn’t conflict with another…

Also,

>put data through a neural network

Neural networks aren’t magic, and they certainly aren’t anything close to what real brains do. An artificial neural network is actually a bunch of IF-THEN rules that are not programmed by a person but “found” by the training algorithm. With this input, give that output – that’s it.

In order to train the network, you need to know the right solution, which cannot be computed “on the fly” by your self-driving car itself because a) it doesn’t have the right solution, b) the neural network would be messed up if it starts to modify itself (catastrophic forgetting problem).

“For humans the #1 accident excuse is “I didn’t see them” so humans most certainly have issues with their vision sensors. ”

That assumes they’re even looking out of the window in the first place and not at their phone screen or the heater controls.

At least we know that robots will pay attention for 100% of the time.

This is another way that could allow an operative of an intelligence agency to easily take out a target while making it look like a mere accident.

Oh, the old Princess Diana trick!

Yes… intelligence agencies will set up near the place where their ‘targets’ regularly stroll around in the road and then wait for a self driving car to come by. Quite how the self driving car will get past all the other cars that have stopped for the people roaming around the streets I don’t know.

Who said it has to be a pedestrian and not the driver that’s the target?

I like the idea of spoofing these automated vehicles. I am looking forward to some hackaday articles about projects along these lines. (Asleep at the wheel? Serves you right!)

I think covering the topic is good.

I think providing directions on how to assemble the hardware on a budget to do so crosses a line.

Covering the topic is good because it highlights the design flaws and provides opportunity to consider mitigations. (Random scanning of environment instead of sequential; for instance)

If it can be done, guides to doing it will be easily available on the internet. Trying to sanitize and restrict information won’t work. It’s an engineering problem, not a censorship one

+1

Doesn’t mean you have to make it trivially easy and spread the guide. For most arses, if it’s 50 bucks of online order with delivery and no effort, they would consider it. But having to spend an hour searching and reading through the various, no doubt somewhat contradictory, guides, hunt the right parts down, and then spend more time adapting to the alternatives they can source – for most that is too much effort just to be a dick to other human beings.

Restricting information only results in the restricting of knowledge.

Are you serious? Serves you right? Were you born a jerk or do you just play one on the Internet? What about the pedestrian you just mowed down? Does it serve them right too? I’m all for pointing out that the driver has a responsibility if an accident occurs, but to suggest that it’s some sort of justice is going way too far.

Obviously he is not serious.

If you’re going to sleep, sleep in your bed.

If you’re going to drive a car, you learn to do so and drive a car.

I did see an article that showed a picture of a girl with an eyemask on her head, a pillow and a blanket. She was in a sleeping position in a Tesla.

What I would like to know is: if the car itself has some sort of malfunction and the person is truly asleep at the wheel, and due to that malfunction the car gets into an accident, it would be the responsibility of the driver, asleep or not, no?

At levels 1-3 of automated driving (and zero, technically), the driver would be at fault. At levels four and particularly five, the driver would not be at fault.

If a driver of a self-driving car fell victim to a replay attack where the vehicle stopped for something that WASN’T there, I’d have no pity.

If you want to travel with none of the responsibility of operating a motor vehicle – take the bus.

I’ve got a lot of experience in safety certified software.

One code rule we had was that the code has to know that it’s correct or it stops the process. In the practical terms of an aircraft altimeter, if the system didn’t know for certain that the displayed altitude was correct, it would blank the screen (and the flight crew has procedures for that).

For the lidar blanking, there’s a difference between *knowing* there’s no person in front of the car, and *not detecting* a person in front of the car because the lidar didn’t come back. No information is not the same as information indicating nothing, so this should trigger a disconnect from autodrive and slowing to a stop, allowing the human driver to take over. Absence of evidence versus evidence of absence.

So far as I can tell, automotive software isn’t subject to the same safety certifications as medical or aircraft, but perhaps that should change. I don’t believe autodrive is tested during the auto certification process, but perhaps that should change as well.

One way around this is to reproduce this effect (masking portions of the lidar) during that autodrive certification process.

The rationale should be that the car has to have evidence of absence before driving through any section.
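The distinction drawn in this comment maps naturally onto a three-state model rather than a boolean "obstacle / no obstacle". The sketch below is one hypothetical way to express it; the `CellState` names, the `tolerance_m` value, and the idea that a return from beyond a cell proves the cell is clear are all assumptions for illustration, not how any shipping system is known to work.

```python
from enum import Enum
from typing import Iterable, Optional


class CellState(Enum):
    FREE = "free"          # evidence of absence
    OCCUPIED = "occupied"  # evidence of presence
    UNKNOWN = "unknown"    # absence of evidence


def classify_cell(cell_range_m: float,
                  beam_return_m: Optional[float],
                  tolerance_m: float = 0.5) -> CellState:
    """Classify one patch of road along a single beam."""
    if beam_return_m is None:
        # No echo came back: we learned nothing about this cell.
        return CellState.UNKNOWN
    if beam_return_m > cell_range_m + tolerance_m:
        # The beam hit something beyond the cell, so it passed through it.
        return CellState.FREE
    if abs(beam_return_m - cell_range_m) <= tolerance_m:
        return CellState.OCCUPIED
    # The beam stopped short of the cell: the cell is occluded.
    return CellState.UNKNOWN


def may_proceed(path_states: Iterable[CellState]) -> bool:
    """Only drive on if every cell on the path is positively known to be free."""
    return all(state is CellState.FREE for state in path_states)
```

Under this model a removal attack that leaves no return for a direction yields `UNKNOWN` rather than `FREE`, so `may_proceed` refuses to treat the erased region as clear road.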

Yup. It’s pretty much guaranteed that this attack is going to lead to those data points being either:

1) Returning as low-range returns, which will cause many obstacle detection systems to enter an “obstacle too close, STOP!” state

2) Returning as “error” returns, which will, again, cause the obstacle detection systems to enter a “STOP!!!” state

Of course, in an automotive scenario, forcing a sudden stop can itself cause a safety concern. Reducing mean time between dangerous failure gets harder when failure modes that may be safe in some use cases are dangerous in others.

For example, “slam on the brakes as hard as you can” is safe for a fully automated vehicle in a warehouse at low speed, but not for a manned vehicle (might throw the operator), and not at 70 MPH on the highway for any use case.

“The rationale should be that the car has to have evidence of absence before driving through any section.”

Agreed, and if that’s implemented, that would be the best outcome of this demo.

But the problem is that there are so many false positives in autonomous vehicles already, and they drive very conservatively. To the point that the broad majority of accidents involving the self-drivers in San Francisco have been the Waymo/Cruise car getting hit from behind. Other drivers don’t know why the thing is slowing/stopping.

But if you’re choosing between blindly driving through an intersection and hitting the brakes, it’s pretty clear which of these is the right course of action.

We’re in an awkward phase.

Actually, on re-reading the paper, they didn’t test it out on cars, only on the LIDAR. So how the car reacts to the data is all speculation, AFAIK.

Be careful what conclusions you leap to. (He says, to himself as much as to the audience.)

This article does not demonstrate how to spoof an automated vehicle despite its claims. The fact that you can spoof a lidar developed for and delivered to an academic usage does not mean you can spoof one developed for and delivered to an automotive usage. Or that jamming one sensor necessarily leads to an overall failure of the vehicle to respond in a safe manner. While the techniques described would not jam an automotive lidar, similar techniques would blind a camera or a driver.

Up the game: encrypt LIDAR light pulses.

Or at least the sensor could randomize to some extent the timing of the pulses it sends out (and correspondingly factor that in for received echoes). That would make it difficult for the adversary to send pulses back with the right timing to create the impression of a false target.
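A small simulation shows why timing jitter would help. It is a sketch under a strong assumption: the attacker predicts firing times from the nominal schedule and cannot react to each individual outgoing pulse. All parameter values are invented for illustration.

```python
import random
from typing import List, Tuple

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def measured_range(arrival_s: float, fire_s: float) -> float:
    """Range the sensor computes from its own (true) firing time."""
    return SPEED_OF_LIGHT_M_PER_S * (arrival_s - fire_s) / 2.0


def simulate(num_pulses: int, period_s: float, max_jitter_s: float,
             true_range_m: float, spoof_delay_s: float,
             rng: random.Random) -> Tuple[List[float], List[float]]:
    """Return (ranges of real echoes, ranges of spoofed echoes)."""
    real, spoofed = [], []
    for k in range(num_pulses):
        nominal_fire = k * period_s
        # Only the sensor knows the jitter it added to this pulse.
        actual_fire = nominal_fire + rng.uniform(0.0, max_jitter_s)
        real_arrival = actual_fire + 2.0 * true_range_m / SPEED_OF_LIGHT_M_PER_S
        # The attacker times the fake echo against the nominal schedule.
        spoof_arrival = nominal_fire + spoof_delay_s
        real.append(measured_range(real_arrival, actual_fire))
        spoofed.append(measured_range(spoof_arrival, actual_fire))
    return real, spoofed


real_ranges, spoofed_ranges = simulate(
    num_pulses=100, period_s=1e-5, max_jitter_s=200e-9,
    true_range_m=20.0, spoof_delay_s=400e-9, rng=random.Random(0))
```

The real echoes all land at 20 m because the sensor subtracts its own jitter, while the spoofed points smear across tens of metres instead of forming a consistent target. With `max_jitter_s=0.0` the spoofed points collapse back to a single stable range, which is the situation the attack relies on.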

My 2 cents on this is that even with coded pulses for security all you have to do is overpower the sensor like shining a flashlight into a camera. I imagine even these would be susceptible to that kind of attack.

With filters you can look for a specific frequency of light/radio/whatever. Change the frequency in a pattern and interval that are known to both the transmitter and receiver in conjunction with those filters and you have a pretty resilient system to this type of jamming… This is the principle behind spread spectrum, the foundation of many modern radio systems.
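The "pattern known to both the transmitter and receiver" could be derived from a shared secret. The following is a hedged sketch, not a description of any existing LiDAR: it assumes a hypothetical sensor that can switch between `num_channels` wavelengths per time slot, and it uses Python's standard `hmac` module to pick the channel.

```python
import hashlib
import hmac


def hop_channel(shared_key: bytes, slot_index: int, num_channels: int) -> int:
    """Channel to use in a given time slot, derived from a shared secret."""
    message = slot_index.to_bytes(8, "big")
    digest = hmac.new(shared_key, message, hashlib.sha256).digest()
    # Reduce the first four digest bytes to a channel number. The slight
    # modulo bias is ignored here for simplicity.
    return int.from_bytes(digest[:4], "big") % num_channels


def accept_echo(shared_key: bytes, slot_index: int,
                echo_channel: int, num_channels: int) -> bool:
    """Accept an echo only if it arrives on the channel expected for its slot."""
    return echo_channel == hop_channel(shared_key, slot_index, num_channels)
```

An attacker who cannot predict the sequence has roughly a one in `num_channels` chance per pulse of guessing the right channel. As the reply below points out, this does nothing against an attacker who simply repeats what they observe or saturates the receiver.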

Unless you have a sensor that detects said filters and re-sends them. It’s just an extra step but not impossible. Also, if you just overload the receiving part of the lidar, it doesn’t really matter what filters it needs; you still incapacitate the lidar.

Until LIDAR comes way down in price it’s a deal breaker for cars anyhow.

LIDAR based autodrive was always a tech demo/stock pump.

As it stands there is cheap crappy LIDAR with very few beams and expensive AF LIDAR that can construct a decent representation of the world. Even the latter doesn’t produce working autodrive.

Tesla style camera/radar based autodrive is pure vapor. Used by fools.

But look at the PE ratios! That’s success.

I thought some scanners already use pseudo-random timing intervals to differentiate between other LIDARs. Though I’ve always wondered what will happen when a busy intersection is filled with those, do they really get usable data out of it anymore.

You could validate the LIDAR input with something like laser speckle interferometry.

Simplistic response: if it gets jammed, set an alert and proceed to shut down in a safe manner. Or is stopping the vehicle the objective? If this is on a Tesla, just park a trailer on the freeway. Maybe not…

The issue is that the paper shows how a LiDAR could be blinded and not even know it was blinded. To blind a light sensor you just shine a laser on it; it’s not difficult. The paper shows “selective blindness” that creates a blind spot to fool the sensor.

So it would say “I scanned the road ahead and there’s no obstacle,” even with an obstacle being present.

The Velodyne VLP-16 is old and has less diagnostic data available than many newer LiDAR units.

This attack would likely trip the self-diagnostics of many newer LiDAR units.

Their attack apparently depends on “2) the automatic filtering of the cloud points within a certain distance of the LiDAR sensor enclosure.”

Most processing systems are going to consider near-window returns at azimuths/elevations where a near object (the vehicle itself) is not expected to be a fault condition, not something that merely gets filtered out.

Their attack fundamentally only works against systems that are EXTREMELY broken in design.

Among other things, their paper lists behaviors of the Velodyne ROS1 driver – *no one* deployed ROS1 in production in an automotive environment (and very few people deployed ROS1 in anything other than a research/prototyping environment because it was architecturally not suited to that)

“This has dangerous implications for autonomous driving systems relying on LiDAR sensor data.”

Wonder if this is why Tesla goes strictly with cameras.

https://youtu.be/PpXXu3kXWAE?t=244

Probably because it’s cheaper. There’s likely even more adversarial attacks possible with camera data

A good LIDAR system costs more than a plaid Tesla.

If a company is demoing LIDAR based autodrive, they are in fact advertising their stock. Like an old ‘Nortel network’ ad during a televised golf tournament. Pure and simple end run around the market regulations, advertise stock while pretending to advertise product.

I’d assume that if a LIDAR(‘s software) ignores an input/object because it’s too close the software compensates for that as in only ignoring it for the few ms it takes for a bird to pass through.

If the “object” is there for too long, go into proper fault mode (slow to stop, human driver taking over, …)
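The "ignore it briefly, fault if it persists" idea could look something like the sketch below. It is purely illustrative: the per-frame boolean flags and the `max_consecutive_frames` threshold are assumptions, and a real system would track this per direction rather than per frame.

```python
from typing import Iterable


def near_return_fault(near_flags: Iterable[bool],
                      max_consecutive_frames: int) -> bool:
    """True if too-close returns persist for longer than a passing bird would.

    near_flags holds one boolean per scan frame: True when that frame
    contained returns inside the minimum-range cutoff.
    """
    run_length = 0
    for has_near_return in near_flags:
        # Count consecutive frames with near returns; reset on a clean frame.
        run_length = run_length + 1 if has_near_return else 0
        if run_length > max_consecutive_frames:
            return True
    return False
```

At a 10 Hz frame rate, for example, `max_consecutive_frames=3` would tolerate a transient of up to 0.3 s before the caller is told to enter its fault handling (slow to a stop, hand over to the driver, and so on).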

Silly question that I’ve always wondered…when every car on the road has LiDAR or other broadcasting sensors…what keeps the system from getting confused with pulses from other cars / dealing with noise?

Big problem for what is called “flash” LiDAR and ToF cameras – but most LiDAR in automotive use is a scanning LiDAR, so the chances of two units facing each other being perfectly aligned in their scan periods for multiple consecutive scans are very slim.

Not really.

If the units are of the same model, then their scanning periods will be almost the same, plus or minus some tolerance in manufacturing. They will drift in and out of alignment continuously as dictated by the difference in their scanning rates.

If you have multiple vehicles facing each other around the same intersection, they will periodically blind each other.
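The drifting in and out of alignment described here is a beat between two nearly equal scan rates. As a rough worked example (the rates are invented, and real interference also depends on beam width, pulse timing, and geometry), the time between successive alignments is the reciprocal of the rate difference:

```python
def realignment_period_s(rate_a_hz: float, rate_b_hz: float) -> float:
    """Seconds between successive moments when two scanners are in phase."""
    difference_hz = abs(rate_a_hz - rate_b_hz)
    if difference_hz == 0.0:
        # Identical rates never drift: whatever phase offset they start with
        # is the offset they keep.
        return float("inf")
    return 1.0 / difference_hz


# Two nominally 10 Hz units that differ by 0.1 percent.
example_period_s = realignment_period_s(10.0, 10.01)
```

For these made-up numbers the two units line up about once every 100 seconds. How long each alignment lasts, and whether it actually corrupts any returns, is a separate question this sketch does not answer.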

Now the companies who make driverless cars can say that it’s not their fault. Meanwhile a person lies dead in the street.

Yes because the driver is never at fault when the light gets in their eye, we blame the light source.

It is not “all for some company’s profit”. A well-implemented system could be safer than humans and certainly more convenient.

No it wouldn’t. That’s a progressive assumption based on absolutely nothing

Seriously? Humans are terrible at vigilance tasks like driving, particularly when peculiarities of human vision processing (sorry mate, didn’t see you!) are taken into account.

If humans were removed entirely from the driving equation and the automation was standardised, it would be far and away safer. The issue is that we are nowhere near that, and companies are literally using people’s lives as alpha and beta testing for their software.

People like you are how the world ends after handing control to SkyNet.

Cars are already an unacceptable transport solution over the kind of timescale that would produce a functional self-driving car. Public transit is the actual solution to cars being dangerous, because personal automobiles are stupidly resource-inefficient and there’s only so much lithium/copper/steel/aluminum/etc. to go around.

Thank you Jim! If we have adequate public transport, the humans can already text/play games/read a book/sleep/be drunk. Or, you know, do productive work or study! Driving oneself is a huge waste of resources, and the answer isn’t self driving cars, it’s good, efficient public transport.

I fully expect this to become the modus operandi: finding ways to excuse accidents and errors because “oh, it was just an edge case” when in fact it is only edge cases that matter.

Just use a tunable laser (or switch between two or more with different frequencies) so you can cryptographically modulate the frequency of the LIDAR such that it can screen out any apparently returning photons of the wrong color at the wrong time. Spread spectrum is already a congestion adaptation in signal processing, and the streetscape is going to get very congested soon anyway. My gob that jab has left you kids lower on the IQ scale and you don’t even feel it.

I have absolutely no idea how you can make such an excellent point, and then follow it up with that complete drivel of a final statement.

Jab obviously affected your brain and you don’t even feel it.

Don’t be so smug. It will be years before the science is in on the effects of the jab and isolation. Early data isn’t looking great, lots of excess deaths among the jabbed. Over/under on age when jab was more dangerous than rona? I take 40. Add isolation and I take 25.

I’ve been jabbed loadsa times and came up with a notched spectrum idea before scrolling down to this point. Which is even better than yours. So I think that negates the jab causes morons theory.

Yes, you have absolutely no idea.

A fake Detour sign is all you need, just ask Wile E Coyote.

And a painting of a tunnel opening…

That would actually work if you paint it with vantablack, so the car thinks there’s nothing there.

Someone at the CIA’s overly complex assassination division just read this article and shouted out of his cubicle “Hey Phil! Come check this out, I have an idea!”

You can trick human sensors too in various ways of course.

From high beams to mirrors to lasers and lots more, many options.

And plenty of people died from dead zones in mirrors alone.

Yes, however, people are smart enough to notice they’re being blinded

@dude: you got a point but I’m sure we can do some tricks to make the car computer have some awareness of attempts to fool it, sanity checking on input and notably compared to other sensors et cetera.

(now I’m reminded of that Boeing max mess.)

And humans can be fooled without being aware too obviously.

There’s a fundamental problem with how the system “feels” perceptual information. The lidar just passes the AI a bunch of data points, and the AI has to trust that these are valid and meaningful information because it doesn’t have access to the raw sensory input. That would be way too much data to handle.

So, the system is designed to simplify input into data symbols and objects, like “a car”, “a pedestrian”, and the AI only sees these symbols. The AI is like a general sitting in a war room where secretaries push little tin soldiers over a map, trusting that the intel about the position of the troops is correct. Okay, you can have multiple sources of information, but if they conflict, who do you trust?

For people, as far as we know, the brain doesn’t translate sensory experiences into “symbols” like the AI system does. When we think about something, we think directly in terms of how that thing feels, which makes it easier for us to notice when the input turns out weird or wrong.

You do not have access to your raw sensory information. It is abstracted away long before the information reaches the emotional brain centers and is fully symbolic by the time it is felt.

Dude, you sound very knowledgeable about engineering topics but it is clear you do not have a grasp on the brain from a neurological or psychological perspective.

Alternate solution: Don’t use a repeating scan pattern. Instead, use a pseudorandom pattern. Make it hard to guess what the next point being checked is. Bonus: Helps with noise.

I am sure that people could also figure out many ways to fool a human driver into having an accident, so what’s the point of this article?