[Carson Katri] has a fantastic solution to easily add textures to 3D scenes in Blender: have an image-generating AI create the texture on demand, and do it for you.

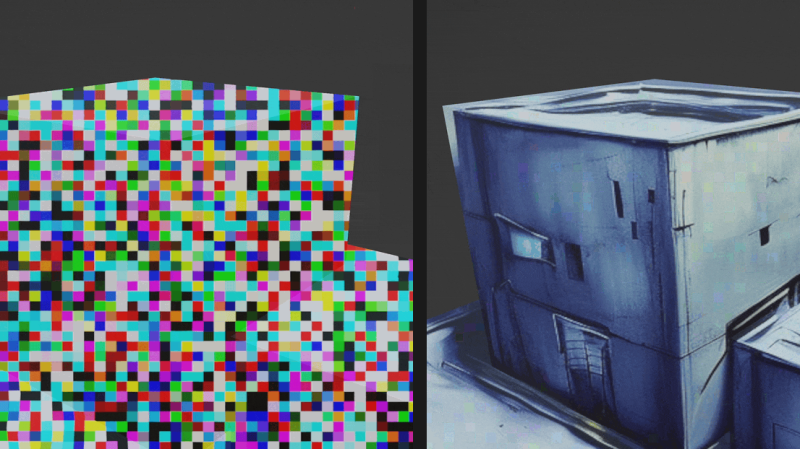

As shown here, two featureless blocks on a featureless plain become run-down buildings by wrapping the 3D objects in a suitable image. It’s all done with the help of the Dream Textures add-on for Blender.

The solution uses Stable Diffusion to generate a texture for a scene based on a text prompt (e.g. “sci-fi abandoned buildings”), and leverages an understanding of a scene’s depth for best results. The AI-generated results aren’t always entirely perfect, but the process is pretty amazing. Not to mention fantastically fast compared to creating from scratch.

AI image generation capabilities are progressing at a breakneck pace, and giving people access to tools that can be run locally is what drives interesting and useful applications like this one here.

Curious to know more about how systems like Stable Diffusion work? Here’s a pretty good technical primer, and the Washington Post recently published a less-technical (but accurate) interactive article explaining how AI image generators work, as well as the impact they are having.

I think there have been some amazing changes in AI recently, such as that Chat thing writing code or emails, and generating “art”.

But, I am not sure if it is in the direction that will make improvements to our existence. (No, I am not suggesting a Rise of the Machines scenario)

It just seems to be deeper into the Uncanny Valley.

I imagine people saying:

– Why do you still play with casting bronze? Stones are good enough for everything.

– Why bother with agriculture? Berries and mammoths are fine.

– Why make houses out of bricks? Wood is everywhere, bricks are not.

– That jass music is just noise, nobody will like it.

– Cybernetics shall be banned because it is bourgeois nonsense with no use for workers!

– 640 kB is enough for everyone.

Not seeing the future of an invention does not mean it won’t have an impact on society.

We have already left drawing mechanical parts on paper because it makes no sense in a world dominated by CNCs.

Not sure yet what these inventions are going to help with, but maybe: texturing games could be faster and take fewer people, textures could be compressed using AI, and games could evolve in-game, with new items getting new and unique textures made directly by the game.

Sometimes you have a technology first and then you find a use for it later.

Did anyone need electricity in the 18th century? They didn’t think so because they didn’t know how much it could ease their lives.

Completely agree except for one point. Mechanical drawings are still made for most parts and structures, CNC and otherwise. Outside of the obvious benefits of having references on hand when building, most drawings also serve as insurance and legal documents when disputes happen. In piping (chem industry and cooling) drawings are mandatory since they also have to contain all testing parameters etc. And any larger mechanical structure needs drawings. Any company worth their salt will also have technical drawings available.

Oh as an addition:

Tolerances need to be put on a drawing as well (even if it’s just a general tolerance).

All these details that come up in actual manufacturing, where more people than just yourself work, need a technical drawing.

Source: myself, as a professional draftsman with experience in a diverse range of industries big and small.

IFC files are already replacing paper, especially for complex structures where no two parts are identical. They provide the ability to detect clashes before they occur on site, which is very difficult to foresee from paper drawings, and it is already an automated process in which software runs the check, including for tolerances.
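As a rough illustration of what an automated clash check with tolerances can look like, here is a minimal Python sketch. It is an assumption-laden toy: parts are reduced to axis-aligned boxes, and the names `Box`, `clashes`, and `find_clashes` are made up for this example. It does not read IFC files, and real checkers work on the actual geometry.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Box:
    """Hypothetical stand-in for a part: an axis-aligned bounding box."""
    name: str
    lo: tuple  # (x, y, z) minimum corner, e.g. in millimetres
    hi: tuple  # (x, y, z) maximum corner


def clashes(a, b, tolerance=0.0):
    """True if the boxes overlap, or come closer than `tolerance`, on every axis."""
    return all(
        a.lo[i] - tolerance < b.hi[i] and b.lo[i] - tolerance < a.hi[i]
        for i in range(3)
    )


def find_clashes(parts, tolerance=0.0):
    """Check every pair of parts and return the names of the pairs that clash."""
    return [
        (a.name, b.name)
        for a, b in combinations(parts, 2)
        if clashes(a, b, tolerance)
    ]


if __name__ == "__main__":
    beam = Box("beam", (0, 0, 0), (100, 10, 10))
    duct = Box("duct", (105, 0, 0), (200, 10, 10))  # 5 mm gap along x
    print(find_clashes([beam, duct]))                # no hard clash
    print(find_clashes([beam, duct], tolerance=10))  # violates a 10 mm clearance
```

The pairwise loop is fine for a handful of parts; a model with many thousands of elements would probably need some kind of spatial index first.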

I didn’t intend for my comment to be a Luddite rant.

It seems the developments in AI have taken some interesting twists, but nothing I would want to trust my life with yet.

I am very excited for the future of texturing.

Hope to make my own game sometime, and being able to “teach” an AI with a bunch of photos of rocks from an area, then have it generate my own procedural rock textures with only a few button pushes, would be amazing.

Talk about making things more accessible for the indie-dev team.

You can do that now already. The difficulty with some of the recent “AI” is the use of thousands of rock photos that you didn’t take, but that other photographers and artists did and hold the copyright to. I agree that AI methods are very cool, I work with them a fair amount. There are indeed some other cool methods though too, check out using wave function collapse for instance.

I love this argument, how do you think rock textures are made now? Do you think a texture artist is going to pay a photographer for a picture of a rock and just use that one picture for that one rock texture? No, that artist pulls up Google, gets a lot of little sample images from a bunch of different rocks and paints his own seamless texture.

He probably samples thousands of rocks in his own hand made work, each sample such a small piece of the overall work that it’s no different from the millions of artists looking at somebody else’s work to make their own.

This whole thing about AI being theft is ridiculous, unless it recreates an already existing picture, it’s no different from any other artist in the world that takes other people’s work and copies it to make their own art.

At some point, an AI for generating special purpose AIs…

Current AI are not a new technology though. They differ from other AI mainly in the scale of the content theft. Sometimes old technologies stick around, e.g. houses have sort of come full circle back to wood. Even when brick is used for houses these days it is a thin layer that is largely cosmetic (as some other materials are just as good as final element shielding).

Generating images for an Indie game is indeed an appealing use case, but I don’t believe it is possible with current copyright concerns. It currently reproduces artist signatures even, lmao. Sure, it could be trained not to, but that doesn’t change how it fundamentally works, so I would fully expect it to still reproduce steganographic marks, it would probably even learn them on purpose thinking they’re part of the artist’s style.

Better tools, sure, I’m on board.

Better technology, ok, I’m in.

Efficiency costing other jobs… well, the cat is out of the bag so to speak so I’m not sure it is a moral question for the individual.

Theft? I’m not willing to go there. That’s the “AI” line I’m not crossing, and this absolutely is theft. Copyright law has always been capricious, but even if the courts rule in favor of AI generated content they could only do so through a lack of understanding on the part of the court with regard to how the methods work and how training inputs are used.

Copyright does not and cannot apply here. Contrary to the loudest voices in the room nothing was actually stolen here beyond style, you can’t copyright style. The ethics are somewhat dubious, sure, but legality is not in question here.

Nope. Not how copyright works. Copyright covers duplication of media–you can’t reprint someone’s work and call it yours. You can absolutely imitate that work in terms of style or content, though, as long as it is obviously not a duplication of the work.

The only question is if AI developers overstepped the law using that art for AI training without permission–the art produced by the AI, itself, is in no way a problem.

This seems to me to be a logical extension of what Blender has already accomplished for the individual creator. Before, you’d need a large space, a motion control camera rig, a lot of model making supplies and an optical printer to realise your creation. Now, that individual creator can do a great deal to bring their vision to life with a fraction of those resources.

I don’t believe that an individual who lazily uses this particular tool to create low quality content will in any way undermine another individual who uses this to create something incredible in far less time than they otherwise would have taken or which they might not have been able to achieve at all.

As a 3D artist, this is actually very useful. If I were making an entire city.. this would speed things up for me by at least 50 percent.

Texturing thousands of buildings takes forever, and if you cheat they all come out looking noticeably the same.

I’d use this, then replace any textures close to the camera. Stuff far away.. You’d never notice the difference. An object on screen for 3 seconds that only takes up 5 pixels doesn’t need great textures. Same for forests, a field full of rocks, or even crowds of people etc. I’d say that’s a useful improvement.

And I’ve used stable diffusion to make high quality textures of things I couldn’t find online.

It’ll never replace photo textures.. But it’s an amazing supplement.

Certainly better than ‘programmer art’, and may be useful for the greyboxing/orange-boxing stages of level design. May even be useful for producing a UV map for paintover if that doesn’t conflict with existing workflows.

Might even be ‘good enough’ for user-side level customisation. If anyone remembers the Timesplitters series’ level builder feature, you blocked out a level on a grid using various sized rooms and corridors, then picked a ‘theme’ that would then turn that map into a level full of thematically appropriate geometry and props. This method could allow for arbitrary theming along the same lines using user provided prompts, or be used to add variety to pre-selected themes.

This is sweet. There is AI game world generation in our future. Actually … I bet somebody could mod this into Minecraft realtime.

At Minecraft resolution maybe! But it is super cool, yes. Updating Blender to try it out…

Mostly rubbish results if you need it for production (except for distant objects perhaps), but where it shines is as a very good rapid 3D sketching tool for producing specifications to pass on to human specialists who then produce a closely matching, genuinely photorealistic, and coherent result from it.

It’s interesting to see the capabilities of these tools and the efficiency with which they can create visually appealing results. It’s clear that AI image generation technology is advancing rapidly and will continue to have a significant impact on various fields.

There is a big missing piece here: the UV texture projections are currently, like an anamorphic painting, completely view-dependent (that’s why the camera in the above example only “wiggles” within a narrow range)…the texture mapping completely falls apart if you change the view too far. The tedious aspect of creating a proper UV mapping isn’t addressed by this tool (though I hope it one day will be). I tried to redo just specific faces of an object from a view perpendicular to them, hoping to composite them later, but even using the same seed the texture is (I guess, of course) radically different based on the view angle. It’s a super cool hack, but not super useful yet except in limited cases.
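To make the view-dependence concrete, here is a small Python sketch of projecting a vertex into a camera’s image and using that image position as its UV coordinate. This is not the add-on’s code, just a generic pinhole projection under stated assumptions: the function name `project_to_uv` is made up, the camera only rotates about the vertical (Y) axis, the image is square, and the camera looks down its local -Z axis.

```python
import math


def project_to_uv(point, cam_pos, yaw_deg, fov_deg=60.0):
    """Project a world-space point to (u, v) for a simple pinhole camera.

    Assumptions (illustrative only): the camera sits at cam_pos, is rotated
    yaw_deg about the world Y axis, looks down its local -Z axis, and renders
    a square image. Points inside the view land in the 0..1 range.
    """
    yaw = math.radians(yaw_deg)
    # Vector from the camera to the point, in world space.
    dx, dy, dz = (p - c for p, c in zip(point, cam_pos))
    # Undo the camera's yaw to express that vector in camera-local axes.
    cx = math.cos(yaw) * dx - math.sin(yaw) * dz
    cy = dy
    cz = math.sin(yaw) * dx + math.cos(yaw) * dz
    depth = -cz  # distance in front of the camera
    if depth <= 0:
        raise ValueError("point is behind the camera")
    focal = 1.0 / math.tan(math.radians(fov_deg) / 2.0)
    # Perspective divide, then remap from [-1, 1] to [0, 1].
    return (0.5 + 0.5 * focal * cx / depth, 0.5 + 0.5 * focal * cy / depth)


if __name__ == "__main__":
    corner = (1.0, 1.0, 0.0)
    print(project_to_uv(corner, cam_pos=(0.0, 0.0, 5.0), yaw_deg=0.0))
    print(project_to_uv(corner, cam_pos=(5.0, 0.0, 0.0), yaw_deg=90.0))
```

The same corner gets a different UV from each camera, so a texture generated for one view has no reason to line up when the object is seen, or re-projected, from another.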

Absolutely correct, I would imagine a proper UV unwrap would be necessary before something like this could be used. That being said, an AI-based UV mapping system sounds almost as attractive as an AI-based texturing system.

Pretty sure most 3D artists would love to see UV editing, retopo-ing, and rigging all automated. Personally I find it infuriating that, of all the things we want AI to handle, AI creators seem to be ignoring these while focusing on automating the fun stuff.

To my big regret, this praised plugin doesn’t work with my old video card, which has no DirectX 11 support and only a small amount of RAM (1GB). I wonder why it wasn’t possible to make it work in an emulation mode where the CPU would “assist” the GPU…

Amazing AI.. that can’t figure out doors and windows have right angles.

Yeah call me underwhelmed with those ‘recent advancements’ if this and chatGPT are the advancements you refer to.

Seems to me more like we are stuck in place in AI development but instead advancing in making things available to the masses. And that ‘making available’ is powered by people’s efforts not an AI one.