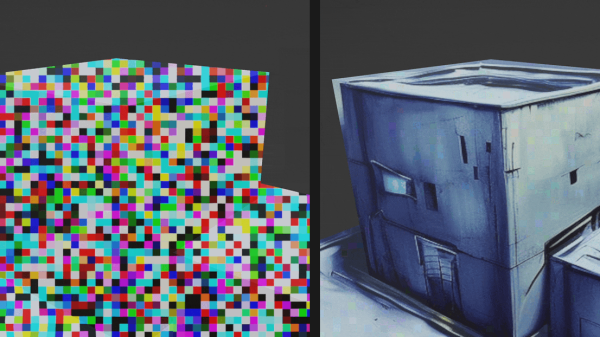

The LucidDreamer project ties a variety of functions into a pipeline that can take a source image (or generate one from a text prompt) and “lift” its content into 3D, creating highly detailed Gaussian splats that look great and can even be navigated.

Gaussian splatting is a technique for representing and rendering radiance fields, and a close relative of NeRFs (Neural Radiance Fields), which are themselves a method of generating complex scenes from sparse 2D sources. Splatting’s big draw is that it renders quickly. If that is all news to you, that’s probably because this stuff has sprung up with dizzying speed since the original NeRF concept was thought up barely a handful of years ago.

What makes LucidDreamer neat is the fact that it does so much with so little. The project page has interactive scenes to explore, but there is also a demo for those who would like to try generating scenes from scratch (some familiarity with the basic tools is expected, however).

In addition to the source code itself, the research paper is available for those with a hunger for the details. Read it quickly, because at the pace this stuff is moving, it honestly might be obsolete if you wait too long.