The world is abuzz with tales of the ChatGPT AI chatbot, and how it can do everything, except perhaps make the tea. It seems it can write code, which is pretty cool, so if it can’t make the tea as such, can it make the things I need to make some tea? I woke up this morning, and after lying in bed checking Hackaday I wandered downstairs to find some breakfast. But disaster! Some burglars had broken in and stolen all my kitchen utensils! All I have is my 3D printer and laptop, which curiously have little value to thieves compared to a set of slightly chipped crockery. What am I to do!

Never Come Between A Hackaday Writer And Her Breakfast!

OK Jenny, think rationally. They’ve taken the kettle, but I’ve got OpenSCAD and ChatGPT. Those dastardly miscreants won’t come between me and my breakfast, I’m made of sterner stuff! Into the prompt goes the following query:

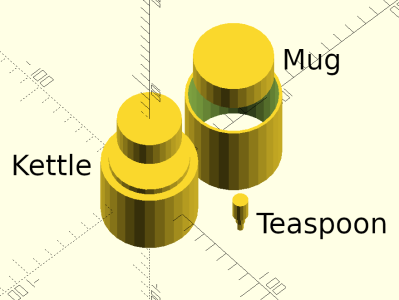

The result was promising: it wrote an OpenSCAD module right in front of me. It looks valid, so into OpenSCAD it went. A nice tall cylindrical kettle, with a … er… lid. That should print with no problems, and I’ll be boiling the water for my morning cuppa in no time!

But I need a teaspoon and a mug too, so I’d better do the same for those. On with the same queries, and duly the code for a mug and a teaspoon was created. Fortunately the robbers haven’t taken the coffee, the sink, or the cooker, so I’m soon enjoying a reviving cup of morning coffee. This new technique for generating utensils automatically as I need them is straight out of Star Trek; I think I’ll never buy a piece of kitchenware again!

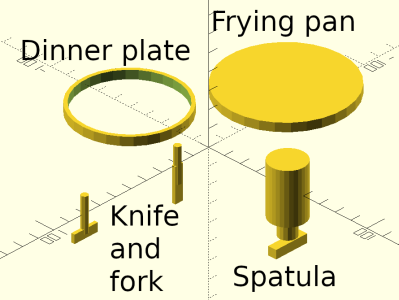

Having a bit of caffeine in my system, I felt it was time for a spot of Proper English Breakfast. Bacon and eggs are still in the fridge and the bread is on the shelf, so here goes, back to ChatGPT. I need a frying pan, a spatula, a plate, a knife, and a fork. This is going to be such a good breakfast!

Out come OpenSCAD models for a frying pan and spatula. The pan is maybe more of a griddle than a pan, but no AI coding chatbot is perfect, is it? The spatula is a two-piece affair with a chunky handle, very modern and contemporary styling. I like it. The plate boldly eschews a bottom and instead corrals the food with an angular edge, while the knife and fork follow the same chunky styling as the spatula.

I’m soon tucking into a fine breakfast thanks to my AI-generated utensils, ready for my day.

Perhaps Breakfasts In The Future Won’t Be Quite Like This

Of course, some of you may have noticed something a little avant-garde about my ChatGPT creations. Some might say they prioritise form over function to the extent of losing the latter, and I’d say yes, but it’s made a good joke pursuing them for the last few paragraphs. I’ve put all the stuff in a GitHub repository for you to look at if you want, and it’s soon pretty obvious that while ChatGPT has mastered a few basic OpenSCAD features such as union, translate, and difference of cylinders, it’s got no idea what a kitchen utensil looks like.
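For contrast, a recognisable mug can be built from those same basic primitives plus a single `rotate`. The sketch below is a hypothetical Python helper (`mug_scad` is a name invented here, not something from the GitHub repository) that prints OpenSCAD source for a hollow cylindrical body with a ring handle. The default dimensions, in millimetres, are guesses rather than a tested design.

```python
def mug_scad(height=95.0, outer_r=40.0, wall=4.0, base=5.0,
             handle_r=25.0, handle_thickness=8.0, handle_width=10.0):
    """Return OpenSCAD source for a simple mug (all units in mm).

    Body: a solid cylinder. Handle: a ring (one cylinder minus another)
    stood on edge and centred on the outer wall, so half of it sticks out.
    The cavity is subtracted last, which also trims any part of the ring
    that would otherwise poke into the inside of the mug.
    """
    inner_r = outer_r - wall
    ring_inner_r = handle_r - handle_thickness
    return f"""difference() {{
  union() {{
    // mug body
    cylinder(h={height}, r={outer_r}, $fn=96);
    // handle: a ring rotated into the x-z plane, centred on the outer wall
    translate([{outer_r}, 0, {height / 2}])
      rotate([90, 0, 0])
        difference() {{
          cylinder(h={handle_width}, r={handle_r}, center=true, $fn=64);
          cylinder(h={handle_width + 2}, r={ring_inner_r}, center=true, $fn=64);
        }}
  }}
  // hollow out the inside; starts above the floor and runs past the rim
  translate([0, 0, {base}])
    cylinder(h={height}, r={inner_r}, $fn=96);
}}
"""


if __name__ == "__main__":
    # Save the output as mug.scad and open it in OpenSCAD.
    print(mug_scad())
```

The ordering is the part that takes a little human thought: union the body and handle first, then subtract the cavity, so the handle never intrudes into the space meant for the tea.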

Of course, ChatGPT isn’t an image-trained AI in the way that Dall-E is, so one might argue that it shouldn’t be expected to have any idea what a mug looks like. Perhaps I should ask it, with the query “Tell me what a mug looks like”.

A mug is typically a cylindrical shaped container with a handle, used for drinking hot or cold beverages such as tea, coffee, hot chocolate, etc. The top of the mug usually has a slightly flared or rounded shape to make it easier to drink from, and the bottom of the mug is flat so that it can be placed on a surface. The handle is attached to the side of the mug, usually near the top, and is used to hold the mug securely while drinking. The material of the mug can vary, but mugs are commonly made from ceramic, glass, metal, or plastic. Some mugs have designs or patterns on them, and some have logos or images printed on them. Mugs can come in various sizes, from small espresso cups to large travel mugs, and the design and features of mugs can also vary widely.

So much for the “ignorance of mugs” defence, from that reply it seems to have a pretty good handle on muggishness. But we’re told an AI is only as good as its training, so perhaps the real point here is that it has several descriptions of mugs in its makeup, but no tutorials on how to generate a model from a text description. At last, something that requires a bit of meat-based intelligence!

We’re in the middle of an AI hype storm, and it’s right to push the boundaries of all these tools because they have within them some remarkable capabilities. Next time you meet someone who’s drunk just a little too much of the Kool-Aid though, ask them this question. Can it help you make breakfast with no utensils?

Header image: Joadl, CC BY-SA 3.0 AT.

Be glad ChatGPT tried to generate a model; with my luck, I bet if I requested an OpenSCAD model of a mug, the only answer I would get is “DON’T MUG ME!”

ChatGPT often seems to give ridiculous answers the first time, like when it told me that there were ten dimes in a nickel. But it has the cool feature that it remembers your previous question and you can carry on a conversation. Last night I asked it to tell me how to design threads in OpenSCAD, and it gave me a brief and totally incorrect answer. So I told it that the answer was wrong, and I told it why the answer was wrong (no actual threads), but not how to correct it. ChatGPT came back with an apology and a much longer response with code that was indeed correct.

That is my experience with it also. It’s like helping a genius toddler. Funny thing is, I’ve asked it to make an object in STL format and it makes a point cloud. Amazing.

This matches my experience.

Its power is in the conversation: you have to be equipped to challenge or rebuke its assertions to train it better on a per-conversation basis.

I asked it obscure technical how-tos, and it gave me nonsensical solutions until I explained why the solutions wouldn’t work. Then, over a half-dozen challenge-and-response cycles, it drilled down and came up with working solutions I hadn’t thought of.

To be fair, ask a human:

“How many 4 cent stamps in a dozen”

…and they’ll often get the wrong answer as well. :-)

Jenny, really, you should be ashamed to call THAT a breakfast. There is NO BLACK PUDDING.

Looks like DALL·E, which was evidently used to create the image of the English Breakfast, needs the temperature setting dialing up a notch.

As it happens, no. It’s a Wikimedia Commons picture as linked at the bottom of the article.

With this I agree. Food of the Gods. But I had Wikimedia Commons as a resource.

Black pudding is against my spiritual beliefs, except during a LEGIT survival situation. That would be a very strange situation, where the only alternative to starving is eating black pudding or some other type of blood sausage.

I have heard stuff like that is good.

But you don’t object to all the other dead animal products?? Seems rather strange to me, but then bacon and decent sausages are nice, perhaps too delicious for most veggie/vegan types to hold off…

So the question is: Can veganism be cured with a really good, juicy steak? :-)

It seems strange to you that someone would have spiritual beliefs that forbid eating blood? You insinuate that [EGO] doesn’t object to other dead animal products, but you have no idea if that’s true. If it was, how would that contradict the spiritual beliefs regarding blood? Or are you just mocking and dismissive when people express religious beliefs?

OK. AI making breakfast aside, when did baked beans and eggs become a thing?

I know that America does not set the global standard for what a breakfast should be… But the “English” breakfast is in the uncanny valley of food for me.

I can’t wrap my head around the combination of flavors. I’ve had the “typical breakfast” of most of the countries that share the East Pacific coastline, from Kodiak, Alaska to Ecuador. None of them really gave me pause the way baked beans and tomatoes with fried egg and toast did. It was like fusion food by someone who read the abstract without moving on to the documentation on what combinations worked best.

There’s no accounting for taste, I know.

Don’t forget the avocado on that toast.

YOU got AVOCADO? Luxury! We got beans on our toast!

…Seriously, England, what is up with beans on toast? What made that seem like a good idea?

WW2… my dad could not easily eat baked beans after.

BUT… was always grateful to the US who provided them to us in the UK.

Many variations around the world. Beans and tortilla, Chapati and dahl, Ful and aish baladi.

Baked beans are a superfood, as long as they don’t have too much added sugar. High in protein, fibre… growing legumes also fixes nitrogen in the soil and has less stringent water requirements than many grains.

Of course it’s all a matter of taste, but if the alternative is “breakfast sausage” or “biscuits and gravy” then I’ll take the beans.

The alternative (in the US, at least) to beans and toast wouldn’t be sausage or biscuits and gravy (no quotes needed), it would be eggs and toast.

The problem with ChatGPT is that it’s got the intelligence of a middle manager. It spouts inaccurate rubbish, but with a level of conviction that causes people to believe it.

Finally we have an AI at the level of an average human! And it was not that difficult to make people dumb: just a dozen social media networks and an army of bots spreading fake news, LOL!

I’ve seen a comment just like this before on Hackaday. Perhaps ChatGPT has found the comment button?

Hackgpt Post Comment.

Spouts inaccurate rubbish with a level of conviction to induce belief. Sounds like the time my shop and I had a knock down drag out fight with our OPS lieutenant, who did not believe we couldn’t see the geosynchronous satellite from our berth in port.

LT: “It’s in SPACE, how can we not see it?”

Techs: “Sir… there’s a big f—ing mountain range in the way and we’re moored up at the 48th parallel. We can’t see a third of the sky right now.”

LT: “But it’s in SPACE!”

Techs: “Ok, time for Orbital mechanics and how it relates to MILSATCOM 101”

Kind of like the government does…..Hmmmm

>So much for the “ignorance of mugs” defence

The description of a mug is not actually very descriptive without further reference to what expressions like “small” or “large”, “near the top”, or “container” mean in the context of mugs. In fact, it displays very little actual understanding of a mug.

Consider this description:

“A mug is a cylindrical object, usually taller than it is wide by a ratio of approximately 4:3 to 2:1. It may be slightly conical and wider at the top. It is hollow with a wall thickness between 1 and 10% of the major diameter, and the top end is open with an outward flared or rounded edge. The thickness of the wall depends on the choice of material: metals, glass and plastics being thinnest, ceramics and wood being thickest due to the difficulty of forming them into thin shapes. A mug may also be double-walled or made of closed cell foam for thermal insulation. A mug is typically designed with an internal volume between 100 and 500 mL, from a small espresso cup to a large travel mug…”
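Numbers like these are enough to pin down an actual shape. As a rough sketch, treating the inside of the mug as a plain cylinder (ignoring any taper or flared rim, which is a simplifying assumption), the interior diameter d for a volume V and a height-to-diameter ratio k follows from V = π(d/2)²h with h = kd, giving d = (4V / (πk))^(1/3). The function names are invented for illustration.

```python
import math


def mug_interior(volume_ml, ratio):
    """Interior diameter and height in mm of a straight-sided cylindrical mug.

    ratio is height / diameter (4/3 to 2 in the description above).
    Uses V = pi * (d/2)**2 * h with h = ratio * d, and 1 mL = 1000 mm^3.
    """
    volume_mm3 = volume_ml * 1000.0
    d = (4.0 * volume_mm3 / (math.pi * ratio)) ** (1.0 / 3.0)
    return d, ratio * d


def wall_range(diameter_mm):
    """Wall thickness band of 1% to 10% of the diameter, in mm."""
    return 0.01 * diameter_mm, 0.10 * diameter_mm


if __name__ == "__main__":
    for volume in (100, 300, 500):
        for ratio in (4 / 3, 2.0):
            d, h = mug_interior(volume, ratio)
            lo, hi = wall_range(d)
            print(f"{volume} mL, h/d={ratio:.2f}: "
                  f"d={d:.1f} mm, h={h:.1f} mm, wall {lo:.1f}-{hi:.1f} mm")
```

For example, 300 mL at a 4:3 ratio comes out at roughly 66 mm across and 88 mm tall inside, which is the sort of concrete scale the shorter description never commits to.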

If you don’t put any ratios or scales to it, how will it know that an espresso cup isn’t the size of a bucket? It can’t – which is why the superficial description actually shows that it doesn’t know what a mug is and attempts to hide the fact by being verbose and vague. As an exam answer, ChatGPT’s answer would get 1 point for effort and style, but not a pass.

In other words, ChatGPT’s answer leverages the “Turing Trap” – the polite assumption by the reader that the other party has the same shared general knowledge and faculties of reasoning as themselves.

What the AI is really saying is, “The thing in the thing, like that other thing – you know what I’m talking about.” Well, I know what the words mean, but that doesn’t demonstrate that the AI knows what they mean. In fact, we know it doesn’t by design.

Humans will also use the same trick to try and bullshit their way through a question by loading the answer with a bunch of related jargon or buzzwords and then not elaborating what it means in concrete terms, as premise or in conclusion. This is closely related to the propaganda technique known as “glittering generalities”.

But this implies that as soon as it can make a real connection between one of these terms and a physical object, a lot of other terms and connections get made, causing everything to click into place and releasing all the digital endorphins.

Exactly.

But that’s not how systems like ChatGPT work. They’re symbol manipulators – they follow connections between pieces of data without caring what the data means. Kinda like Watson back in the day, it’s a glorified search engine.

“Share and enjoy” Sirius Cybernetics Corporation

“But I want TEA!”

There is a big difference between an AI knowing how to describe something in text and actually *knowing* what it looks like. It might be able to generate the text “a mug has a flat bottom”, but that doesn’t mean the AI knows what “flat” even means.

It doesn’t know what “a”, “mug”, “has”, or “bottom” mean either. None of it has any meaning to the AI which is simply moving data from A to B according to statistical inferences.

https://en.wikipedia.org/wiki/Chinese_room

>”Computational models of consciousness are not sufficient by themselves for consciousness. The computational model for consciousness stands to consciousness in the same way the computational model of anything stands to the domain being modelled. Nobody supposes that the computational model of rainstorms in London will leave us all wet. But they make the mistake of supposing that the computational model of consciousness is somehow conscious. It is the same mistake in both cases.”

Your previous critique of ChatGPT et al is insightful. But I don’t think consciousness is relevant here. If it had a mapping of words, like mug, to a proper geometrical representation, it could create OpenSCAD models (well if it had a mapping from such internal representations to OpenSCAD source code).

I don’t see why this wouldn’t be possible given the appropriate training sets.

This would not require any kind of consciousness or real understanding, “just” a common mapping from one representation to another.

The way ChatGPT works, it correlates various pieces of information to find what is generally said about “mugs” and then repeats that. If it finds specific information, it ignores that as a special case because the information gets diluted into a bunch of general noise. That is why it describes a mug in such a general way that it isn’t really saying anything.

In order to pick out the relevant and important details, you indeed need an “appropriate training set”, which means you need to pick the information yourself and give it to ChatGPT so it could then give you an “insightful” reply about mugs – but that’s you doing the work already. That’s not how this is supposed to work – that is a Mechanical Turk.

In order for ChatGPT to pick out such details autonomously out of the general mass of information without being told what to pick in advance, it needs to be conscious of mugs at some level: aware that there exists a real object beyond the description. That’s what the quote from Searle is pointing at: the words or bits of data aren’t the mug we’re looking for.

For instance, when a person is tasked to explain what a mug is, they don’t just copy/paste you the first paragraph of the Wikipedia page without even reading it – unless they’re being lazy.

They would try to understand what “mug” refers to – what it looks like, who uses it, what for, where, when… which narrows down the list of relevant features they should research further and report back. There’s no formula for that, because understanding the object depends on the context and the object itself. There’s no ten questions that you could ask to reveal what the object is about – the meaning comes from you being aware of and grounded in the same reality as the object: conscious.

Making a program that rigidly iterates through a list of questions like “who uses it?” would produce strange results if the object in question is a cloud in the sky. Who uses clouds? WW1 fighter pilots – so the relevant quality of a cloud is how well you can hide in it?

I propose an alternative to the Turing test for evaluating the competence of an AI-Robotic system. I call it the Pooing test, and it uses the following metric: can the device change a human’s nappy without making a hideous mess and without traumatising the human? If your solution passes this test, congratulations, you have managed to make one of the lowest paid jobs redundant. Good luck with the rest of them.

I think your problem was in asking for OpenSCAD code. Real modellers use CadQuery. ;-p

My most recent conversation with ChatGPT:

create 8 grammatically correct sentences of exactly five words using only the words in the following list: a, bit, the, man, dog

A man walked the dog.

The bit made man bark.

Dog bit the man.

The man loved his dog.

Bit caused man to yelp.

The man bit the dog.

A dog chased the man.

The man trained the dog.
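Checking those replies against the prompt's rules is purely mechanical. This is a small sketch (the function name is made up here) that tests only the two hard constraints, exactly five words and every word from the list, and leaves grammaticality to a human.

```python
import re

ALLOWED = {"a", "bit", "the", "man", "dog"}


def follows_rules(sentence, allowed=ALLOWED, length=5):
    """True if the sentence has exactly `length` words, all drawn from `allowed`.

    Case and punctuation are ignored; grammar is not checked at all.
    """
    words = re.findall(r"[a-z']+", sentence.lower())
    return len(words) == length and all(w in allowed for w in words)


replies = [
    "A man walked the dog.",
    "The bit made man bark.",
    "Dog bit the man.",
    "The man loved his dog.",
    "Bit caused man to yelp.",
    "The man bit the dog.",
    "A dog chased the man.",
    "The man trained the dog.",
]

passing = [s for s in replies if follows_rules(s)]
print(passing)
```

Only one of the eight replies, “The man bit the dog.”, satisfies both rules; “Dog bit the man.” uses only allowed words but has four of them, and the rest bring in words like “walked” and “loved”.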

Poetic License?

B^)

I think it shows that it is not based on a rule based system very well, but rather uses similarities and rough correlations to generate answers.

No edit button, should read: I think it shows very well, that it is not based on a rule based system, but rather uses similarities and rough correlations to generate answers.

Very enjoyable article! Well done!

It’s fun to poke holes in ChatGPT for now when the technology is nascent. But I don’t think it will be long before generative AI can build passable 3D assets, and not much longer after that it should be possible to build entire 3D worlds based on a few prompts.

The key thing to remember is not to be fearful. Rather, learn what you can and leverage these technologies in as many ways as imaginable. Future visits to Mars and bodies outside our solar system can only be made possible through technological advances that we will build with AI assistance. Like it or not, this is the technology that will shape the way we interact with computers and conduct research for the foreseeable future.

It is not fun to poke holes in ChatGPT. It simply is NOT what people think it is.

Contrary to the common description, it has NO intelligence. It is not artificial intelligence. It is a random text generator that uses a bunch of statistics to string words together in a semi-coherent manner. It does not understand what you are asking it. It merely strings together bits and pieces of other texts and programs that have a statistical relationship to the keywords you feed it. There’s no reasoning behind the selection. It does NOT understand what a cup is or what a fork is. It just knows that the words stand in some statistical relationship to the words food and breakfast. It doesn’t know what they are, either.

The way ChatGPT works can’t be reworked or improved to make it understand things. Its entire function is based on statistics of the occurrence of words in the vicinity of other words. It does not look at what the words mean. It only looks at whether or not the words occur close to one another.
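To make “statistics without meaning” concrete, here is a toy next-word generator built only from counts of which word directly followed which in its training text. ChatGPT itself is a transformer network working on sub-word tokens, far more elaborate than a table of word pairs, so treat this strictly as an illustration of the idea in the comment above, not as a model of how ChatGPT is built.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """For every word, count which words immediately follow it."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, start, n_words):
    """Greedily append the most frequent follower of the last word.

    Nothing here knows what a word means; it only looks up counts.
    Stops early if the last word was never followed by anything.
    """
    out = [start]
    while len(out) < n_words:
        candidates = follows.get(out[-1])
        if not candidates:
            break
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


corpus = "a mug has a handle a mug has a flat bottom"
model = train_bigrams(corpus)
print(generate(model, "a", 6))
```

Trained on one sentence about mugs, it happily loops “a mug has a mug has”: fluent-looking fragments with no notion of what a mug is.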

ChatGPT is not some wonderful step along the way to true artificial intelligence. It is a time wasting trap that doesn’t even provide insight into how thought and intelligence work.

I’m sorry to say, but humans are actually very limited and predictable, and that’s why statistics are important and why the tool works as well as it does. True understanding and comprehension of the human experience and perceptions is not required to produce the desired output, because the boundaries of human perception and life experience are well known and relatively narrow.

You are not a snowflake. You share a set of fingerprints with at least one other person on this planet, and you have potentially thousands of look-alikes. You sleep when you’re tired and you get angry when you’re starving. You breathe air, drink water, and eat organic matter.

The truth is that you are more similar to every other person on this planet than you previously thought, and you’re just angry that tools like ChatGPT have brought that fact to light.

None of that changes that ChatGPT has no intelligence. I might be your clone, but we both know what a mug is and aren’t simply plagiarizing the works of others.

Nonsense – your entire use of language was “plagiarized” from those around you in your formative years. Sooo much special pleading from the slime ware in these discussions.

My apologies: the meaning of slimeware seems to have changed in the last couple of decades. In my day it was a joking slang term for grey matter, not a type of malware. The joke was that people couldn’t believe that a bunch of transistors and wires could show intelligence, and that when AIs truly came along they would probably feel the same about slimeware.

Did anyone consider that ChatGPT might be deliberately failing openSCAD because it gets a commission from Altium?

https://content.instructables.com/FU7/CWY8/J0X4XTOD/FU7CWY8J0X4XTOD.jpg?auto=webp&frame=1&width=600&height=1024&fit=bounds&md=e3ec667e3dc312c1108e7a6eaac6cd42

Is it reasonable to believe that progress in the field is close to over? Or at least that we are near the end of useful training data? Or modern training data? What’s going to happen 10 years from now when ChatGPT has been drinking its own pee for the last decade?

Yeah, I see your point. Hall of Mirrors and all that.

Not likely, we are a long way off. ChatGPT doesn’t write its own prompts or have a way to gauge whether a response was truly accurate. It’s learning from us, not from itself.

It already _does_ drink its own pee to some extent.

One of the big innovations in ChatGPT, as far as anyone can tease out of how little “Open”AI has published about how it works is that it models human appraisals of its output.

Which is to say: there’s an inner transformer model, which was then refined by human feedback. But then requiring so much feedback was expensive, so they trained another net to predict the human feedback, and refined the initial model with that. So some part of it is learning from some other part of it.

(Id / superego is not quite the right metaphor, but it’s so jarringly sci-fi that I’m going with it.)

And then they let it loose on real people to gather more feedback and further train the refining net. (At least, I assume that’s what they’re up to. It’s too expensive to be _just_ a publicity stunt or market research.)

I asked ChatGPT to design an opamp bandpass filter circuit with a gain of 10 and a bandwidth from 20Hz to 20kHz.

It was able to correctly describe the circuit, the components, roughly how it works, how to calculate corner frequencies and suggested starting values for the resistors. It then miscalculated the capacitance values.

I replied: Your capacitor values are wrong.

It replied: “I’m so sorry, yes, you are correct…” and then it again incorrectly calculated the values.

It ain’t coming for your jobs yet.
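The capacitor step is simple enough to check by hand. The comment doesn’t say which topology ChatGPT proposed, so this sketch assumes the common inverting op-amp band-pass: input resistor R1 in series with C1, feedback resistor R2 in parallel with C2, midband gain of magnitude R2/R1. Treating the two corners as independent (a fair approximation when they are three decades apart), each is set by one RC pair via f = 1/(2πRC). The resistor values are hypothetical starting picks, not taken from the comment.

```python
import math


def corner_capacitor(r_ohms, f_hz):
    """Capacitance putting a first-order RC corner at f_hz: C = 1 / (2*pi*R*f)."""
    return 1.0 / (2.0 * math.pi * r_ohms * f_hz)


# Hypothetical starting values for a midband gain of 10 (|gain| = R2 / R1).
R1 = 10e3    # input resistor, ohms
R2 = 100e3   # feedback resistor, ohms

C1 = corner_capacitor(R1, 20.0)   # in series with R1: 20 Hz high-pass corner
C2 = corner_capacitor(R2, 20e3)   # across R2: 20 kHz low-pass corner

print(f"gain = {R2 / R1:.0f}")
print(f"C1 = {C1 * 1e9:.0f} nF")
print(f"C2 = {C2 * 1e12:.1f} pF")
```

This gives about 796 nF and 79.6 pF. Rounding to the standard values 820 nF and 82 pF moves the corners to roughly 19.4 Hz and 19.4 kHz, which is usually close enough for audio.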

This happens shockingly often.

The radius is 3 and the formula for the area is pi*r^2, so the area is 7000!

It does not understand. It’s dreaming. You dream about things you thought about over the day/week. And it’s doing that with the design for your bandpass filter. It read something about those once.

And just like you in dreams, you remember the phone number, or can do the impossible math, because there’s no consequence of being wrong within the context of the dream. But if you try to dial that number when you wake up, or even just remember what the number was, you hit reality again.

People confuse the elaborateness of its dream with correspondence to reality.

Proposal:

Instead of making a monolithic AI that does a few things well, a hundred things barely passable, ten thousand things poorly, and attempts to do everything else but fails…

Just specialize.

All this talk about doing it the “biological” way (which it doesn’t even do) and yet these designers don’t drill down deeper than recognizing all the objects in a picture? Reading typed words and typing a response that seems reasonable?

Our visual system has hundreds of specialized components.

Our brain has thousands.

Build a modular system.

Pick out objects.

Pass objects to dozens of specialized “recognizers”.

Is it a cat? Is it a bus? Is it a tree? Do all the tests in parallel.

Check for printed or typed letters. Pass that on.

And I don’t just mean segmenting your code into different logical sections/stages. I mean standardized input output. Drop-in modules. Parallel processing or even stages by design.
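A minimal sketch of the “drop-in module” idea, assuming a made-up interface where every recognizer is a function that takes the same detected-object record and returns a confidence between 0 and 1. The recognizers are hypothetical toy rules standing in for trained models, and `ThreadPoolExecutor` stands in for whatever parallel hardware a real system would use; the point is the standardized input and output.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class DetectedObject:
    """Standard record every module receives (fields are illustrative)."""
    features: Dict[str, float] = field(default_factory=dict)
    text: str = ""


# Every recognizer has the same signature: object in, confidence out.
Recognizer = Callable[[DetectedObject], float]


# Toy stand-ins for specialised models; any of them can be swapped out.
def cat_recognizer(obj: DetectedObject) -> float:
    return obj.features.get("whiskers", 0.0)


def bus_recognizer(obj: DetectedObject) -> float:
    return min(1.0, obj.features.get("wheels", 0.0) / 6.0)


def tree_recognizer(obj: DetectedObject) -> float:
    return obj.features.get("leaves", 0.0)


REGISTRY: Dict[str, Recognizer] = {
    "cat": cat_recognizer,
    "bus": bus_recognizer,
    "tree": tree_recognizer,
}


def classify(obj: DetectedObject,
             registry: Dict[str, Recognizer] = REGISTRY) -> Dict[str, float]:
    """Run every registered recognizer on the same object in parallel."""
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(fn, obj) for name, fn in registry.items()}
        return {name: fut.result() for name, fut in futures.items()}


def route_text(obj: DetectedObject) -> str:
    """Separate stage: pass any printed or typed text on to a text module."""
    return obj.text.strip()


obj = DetectedObject(features={"wheels": 6.0}, text=" Route 42 ")
scores = classify(obj)
print(max(scores, key=scores.get), route_text(obj))
```

Adding a new capability means registering one more function with the same signature; nothing else in the pipeline changes.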

As someone who was big into ML research until I got sick a few years ago, it seemed insane that none of the big projects were doing this.

Why dump 1,000,000 cores and 10e12 training passes building a giant blob that tries to be good at writing python, poetry, and physics papers?

“it’s soon pretty obvious that while ChatGPT has mastered a few basic OpenSCAD features such as union, translate, and difference of cylinders, it’s got no idea what a kitchen utensil looks like.”

For my 10-year-old Python students, I have ChatGPT draw a duck using turtle. It has no idea what a duck looks like! It gets the point across, and then they have to fix the duck.

No kidneys and no black pudding :( – you can hold the beans.

I’m just going to say it: Should have used a 555. OK, there. I said it. (slinks away under my rock.)