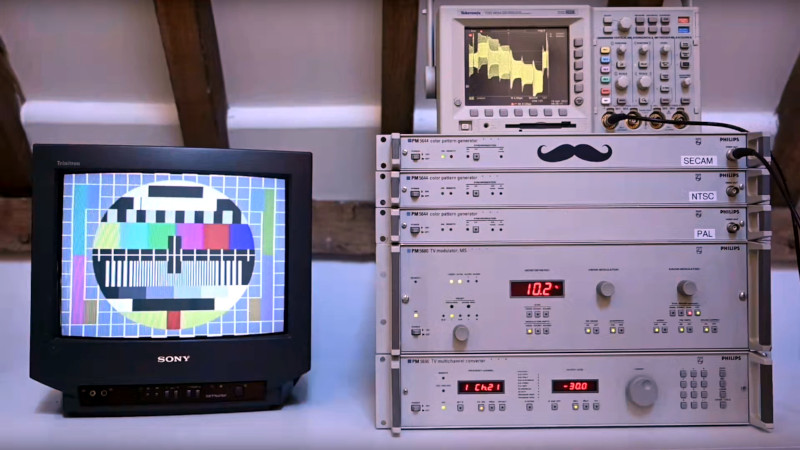

Today, acronyms such as PAL and initialisms such as NTSC are used as lazy shorthand for 625- and 525-line video signals, but back in the days of analogue TV broadcasting they were much more than mere colour encoding schemes. They became political statements of technological prowess, as nations vied with each other to show that they could provide their citizens with something home-grown. France had the daddy of all televisual symbols of national pride: its SECAM system was like nothing else. [Matt’s TV Barn] took a deep dive into video standards to find out more about it, armed with an impressive rack of test pattern generation equipment.

At its simplest, a video signal consists of the black-and-white, or luminance, information that makes up a monochrome picture, along with a set of line and frame sync pulses. It becomes a composite video signal with the addition of a colour subcarrier, modulated with the colour, or chrominance, information, at a frequency carefully chosen to fall between harmonics of the line frequency. PAL is a natural progression from NTSC: both use a quadrature amplitude modulated subcarrier, but PAL inverts the phase of one colour component on alternate lines, which lets a delay line cancel out the colour shifts caused by phase errors.
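To put numbers on "between harmonics of the line frequency", here is a small Python sketch using exact fractions and the standard published values for System M NTSC (3.579545 MHz) and 625-line PAL (4.43361875 MHz). NTSC places its subcarrier at an odd multiple of half the line rate, so the chroma energy interleaves with the luminance harmonics; PAL uses a quarter-line offset plus an extra 25 Hz. The constant and function names are only illustrative.

```python
from fractions import Fraction

# System M NTSC colour: line rate = 4.5 MHz sound intercarrier / 286
NTSC_LINE_HZ = Fraction(4_500_000, 286)          # about 15,734.27 Hz
NTSC_FSC_HZ = Fraction(455, 2) * NTSC_LINE_HZ     # 227.5 x line rate

# 625-line PAL: quarter-line offset plus an extra 25 Hz
PAL_LINE_HZ = Fraction(15_625)
PAL_FSC_HZ = (Fraction(1135, 4) + Fraction(1, 625)) * PAL_LINE_HZ

def harmonic_position(fsc: Fraction, line_hz: Fraction) -> Fraction:
    """Express a subcarrier frequency as a multiple of the line frequency."""
    return fsc / line_hz

for name, fsc, line in [("NTSC", NTSC_FSC_HZ, NTSC_LINE_HZ),
                        ("PAL", PAL_FSC_HZ, PAL_LINE_HZ)]:
    pos = harmonic_position(fsc, line)
    print(f"{name}: {float(fsc) / 1e6:.8f} MHz = {float(pos):.4f} x f_H")
```

The NTSC subcarrier works out to exactly 227.5 times the line rate, i.e. midway between the 227th and 228th line harmonics.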

SECAM has the same line and frame frequencies as PAL, but its colour subcarrier is frequency modulated rather than amplitude modulated. Because the colour is carried in the subcarrier’s frequency rather than its phase, it is immune to the phase errors that trouble NTSC and PAL. The cost is a more complex decoder: each line carries only one of the two colour-difference signals, so the previous line’s colour information must be stored in a delay line to complete the decoding process. Any video processing equipment must also, by necessity, be more complex, which provided the genesis of the SCART audiovisual connector standard as manufacturers opted for RGB interconnects instead. It’s even more unexpected at the transmission end: unlike PAL or NTSC, the colour subcarrier is never absent, and, to make things more French, the video modulation is inverted compared with competing standards.
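The line-sequential idea can be sketched in a few lines of Python. This is a toy model, not a real decoder: colour-difference values are plain numbers rather than FM subcarriers, and starting with D'R on line 0 is an arbitrary choice. The two rest frequencies are the standard SECAM values (272 and 282 times the line rate); the function names are hypothetical.

```python
# Line rate shared with 625-line PAL
LINE_HZ = 15_625
SECAM_DB_REST_HZ = 272 * LINE_HZ   # 4.25 MHz undeviated frequency on D'B lines
SECAM_DR_REST_HZ = 282 * LINE_HZ   # 4.40625 MHz undeviated frequency on D'R lines

def secam_encode(lines):
    """Send only one colour-difference value per line, alternating D'R / D'B.

    `lines` is a list of (db, dr) pairs; D'R on line 0 is an arbitrary choice.
    """
    return [("DR", dr) if n % 2 == 0 else ("DB", db)
            for n, (db, dr) in enumerate(lines)]

def secam_decode(sent):
    """Rebuild a (db, dr) pair per line from the current line plus the
    previous one held in a one-line delay."""
    decoded = []
    delayed = None                      # models the one-line delay line
    for kind, value in sent:
        if delayed is None:
            decoded.append(None)        # first line has no partner yet
        elif kind == "DB":
            decoded.append((value, delayed[1]))
        else:
            decoded.append((delayed[1], value))
        delayed = (kind, value)
    return decoded

picture = [(0.1, 0.2), (0.1, 0.2), (0.3, -0.1), (0.3, -0.1)]
print(secam_decode(secam_encode(picture)))
```

Line 2 of the output pairs the previous line's D'B with the current D'R, which shows the price of the scheme: vertical colour resolution is halved.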

The video below takes us deep into the system and is well worth a watch. Meanwhile, if you fancy a further wallow in Gallic technology, peer inside a Minitel terminal.

NTSC – Never The Same Colour

Never Twice the Same Color 📺 (also popular)

The color standard was actually NTSC2 (NTSC being the black and white standard that preceded it), giving the even better “Never The Same Color Twice”.

PAL = Pay for Additional Luxury or Picture Always Lousy

SECAM = System Entirely Contrary to the American Method

Yes, I am from that time :-)

I remember my Dutch uncle, who lived in France, having two TVs and watching Wimbledon on both at the same time: he wanted the colour picture from the French channel and the commentary from the old black-and-white Dutch TV that came in via satellite. There was about a 2 s delay between the two, so he would see a point being scored and only hear the commentary two seconds later.

Odd… I remember that analogue SAT was FAR better than any terrestrial TV signal.

And the receivers had RGB output too, so none of the PAL/SECAM problems.

These old tales from the 20th century are still quite fascinating, I think.

I also learned that East Germany used SECAM back then, in a version incompatible with France’s.

(Some GDR citizens also had PAL support in their TV sets, to watch shows from West Germany in colour.)

But for some weird reason, it switched to real black/white transmitters when b/w programmes were aired.

Now it makes sense: SECAM always sent a colour carrier, which lowered monochrome fidelity.

Another interesting story was the 819-line format, also a French invention.

It was HDTV before HDTV, essentially. But in beautiful monochrome (no ugly CRT mask for RGB! Yay!).

Makes me kind of sad such a thing no longer exists. Black/White TV was so beautiful. ❤️

Monochrome CRTs had no subpixels and were as detailed as e-ink technology is today.

https://en.wikipedia.org/wiki/819_line

Analog TV existed well into the 2010s.

Yes, in some places. What I mean are true “black/white” TVs and pure monochrome video signals. As they used to exist, before lo-fi colour TV became the norm.

Both NTSC and PAL degrade the quality of the basic monochrome signal (luma):

When received by a real black/white CRT TV (or monochrome video monitor), the colour carrier shows up as visible interference, even though in theory it shouldn’t. Unfortunately, not all classic TVs had the filtering needed to block out the colour carrier.

That’s something that’s kind of sad. I’ve always been fascinated by 1970s-era portable TVs and little camping TVs that ran on C and D cell batteries. They were so pretty. And this from someone who had a fancy Casio LCD pocket TV in the early 90s. For some reason, monochrome video always seemed so peaceful and magical to me.

I’ve even watched videos and pictures on a small monochrome VGA monitor and it was awesome! A real monochrome monitor with a single tube looks so smooth and clean! 😍

Too bad there are no modern monochrome LCD monitors anymore. My father’s 486 laptop had an elegant monochrome LCD screen. I wish there were a 20″ version. Or at least affordable e-ink monitors. *sigh* 😔

The reason the 819-line format never made it into colour was primarily bandwidth. It’s no coincidence that 625/50i and 525/60i have roughly the same bandwidth requirements, trading temporal resolution against spatial resolution.
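A back-of-envelope check on the bandwidth argument, in Python: the line rate (lines per second) is what the two common standards nearly share, and for equal horizontal and vertical detail the video bandwidth grows roughly with lines² × frame rate. That proxy is my own crude assumption (it ignores blanking, aspect ratio and Kell factor), but it shows 625/50 and 525/60 landing within about 15% of each other, while 819 lines needs roughly 1.7 times as much.

```python
from fractions import Fraction

def line_rate(total_lines: int, frame_rate: Fraction) -> Fraction:
    """Lines scanned per second (interlace does not change this)."""
    return total_lines * frame_rate

def detail_proxy(total_lines: int, frame_rate: Fraction) -> Fraction:
    """Crude bandwidth proxy: lines x (equal horizontal detail) x frame rate.

    Assumes horizontal resolution scales with line count and ignores
    blanking, aspect ratio and Kell factor.
    """
    return total_lines * total_lines * frame_rate

formats = {
    "625/50i": (625, Fraction(25)),
    "525/60i": (525, Fraction(30_000, 1001)),
    "819/50i": (819, Fraction(25)),
}
ref = detail_proxy(*formats["625/50i"])
for name, (lines, fps) in formats.items():
    print(f"{name}: {float(line_rate(lines, fps)):8.1f} lines/s, "
          f"relative bandwidth ~{float(detail_proxy(lines, fps) / ref):.2f}")
```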

There are some other oddball analogue schemes out there. For a start, there was an early 405-line system that went the way of the dodo. Then Brazil went with a 525-line system but PAL for the colour subcarrier. More recently, before digital HD was established as 1080 lines, there was a 1035-line analogue HD system that never really went anywhere (it actually had 1125 lines, but only 1035 were active; the rest were for the blanking period).

If you want REALLY weird analog HD transmission, look at MUSE (Hi-Vision): two axis interlacing! Built-in motion compensation! Variable chroma sub-sampling!

“They became political statements of technological prowess”. That doesn’t go well for the Americans: NTSC was the worst of the analogue colour systems. Not only were the colours constantly changing, but the insistence on going from 60Hz to 59.94Hz (unnecessarily, as it turned out) led directly to drop-frame timecode and the 1001 caveat. We’re still dealing with the fallout from that today.
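For anyone who hasn't met it: NTSC colour runs at 30000/1001 (about 29.97) frames per second, so counting 30 labels per second drifts by about 3.6 s per hour. SMPTE drop-frame timecode skips the labels ;00 and ;01 at the start of every minute except every tenth minute, 108 labels per hour, which keeps the displayed time within a fraction of a frame of the wall clock (107,892 labels against about 107,892.1 real frames per hour). The Python sketch below, with a function name of my own, converts a raw frame count into a drop-frame label.

```python
def frames_to_dropframe(frame: int) -> str:
    """Label a 30000/1001 fps frame count with SMPTE drop-frame timecode.

    Labels ;00 and ;01 are skipped at the start of every minute except
    minutes divisible by ten (18 labels per ten minutes).
    """
    frames_per_10min = 10 * 1800 - 9 * 2   # 17,982 real frames
    frames_per_min = 1800 - 2              # 1,798 real frames in a dropping minute
    tens, rem = divmod(frame, frames_per_10min)
    skipped = 18 * tens
    if rem >= 2:
        skipped += 2 * ((rem - 2) // frames_per_min)
    label = frame + skipped                # position on the nominal 30 fps grid
    ff = label % 30
    ss = (label // 30) % 60
    mm = (label // 1800) % 60
    hh = label // 108_000
    return f"{hh:02d}:{mm:02d}:{ss:02d};{ff:02d}"

for f in (1799, 1800, 17_982, 107_892):
    print(f, frames_to_dropframe(f))
```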

Our system was the worst because it was the first. The black and white standard was designed with no prior guidance and one of the goals was getting as many channels as possible in a block of spectrum that wasn’t already in use for something else, while being good enough. The color standard was designed with the first priority of not messing up the performance of millions of existing B&W TV’s and being both reasonably simple to decode and not messing up channel assignments. Everyone else had the advantage of seeing what could be improved and starting from scratch with different priorities.

> The color standard was designed with the first priority of not messing up the performance of millions of existing B&W TV’s and being both reasonably simple to decode and not messing up channel assignments

Whereas now the authorities just charge in, auction off the spectrum and all the existing hardware becomes e-waste. It happened for TVs, it happened for 2G phones and it’s in the process of happening for 3G. I suppose this counts as “progress.”

Yeah, ask me about “Hanover Bars”

Side note: SECAM didn’t invert the modulation compared to other standards. That’s a function of the French B&W and colour System L modulation/transmission system, and nothing to do with SECAM (it was used before SECAM colour was introduced on the System L 625-line UHF broadcasts). Like the UK’s System A used for 405-line VHF and France’s System E used for 819-line VHF, System L used +ve vision modulation. SECAM outside of France was broadcast using Systems B, G, D, K etc., which all used the more standard -ve vision modulation (and could be, and were, also used with PAL).

It’s always important to separate the colour system from the line standard and transmission system.

“It’s always important to separate the colour system from the line standard and transmission system.”

Yes. There were also the terms RS-170/RS-170A and CCIR.

They both refer to the underlying monochrome standards used by NTSC and PAL.

Title should be “System essentially better than American method”.

Or “System essentially not walking in the footsteps of the American method”.

Jokes apart, SECAM was better than PAL, but SECAM studio equipment was also more expensive than PAL equipment. I remember the story that in French studios all the equipment was first in PAL, with the output then transcoded to SECAM for broadcast. I never knew whether this was true or just another joke.

One can’t do, say, a lap dissolve between SECAM sources. Therefore PAL in the studio, SECAM to air.

Yep, adding two AM signals with synchronized carriers gives one AM signal with added modulation. Doing the same with FM produces garbage, since the carriers cannot be synchronized. So all SECAM studios around the world had to use either PAL or RGB internally.
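The FM half of that argument can be checked numerically. This is an idealised Python sketch, not a SECAM decoder: each source is a flat colour, i.e. an unmodulated subcarrier (4.25 MHz and 4.40 MHz are arbitrary stand-ins for two colours on the same line), written as a complex analytic signal, and the discriminator is modelled as the rate of change of the unwrapped phase. A 70/30 mix does not demodulate to the 70/30 blend; the stronger signal captures the discriminator (the FM capture effect) and only a ripple from the weaker one remains. By contrast, mixing two chroma phasors on a shared, locked carrier is simply linear. Function names are hypothetical.

```python
import cmath
import math

def mean_inst_freq(f1, f2, a, b, fs=100e6, n=20_000):
    """Mean output of an ideal FM discriminator fed a*e^(j2πf1t) + b*e^(j2πf2t)."""
    total_phase = 0.0
    prev = None
    for k in range(n):
        t = k / fs
        z = a * cmath.exp(2j * math.pi * f1 * t) + b * cmath.exp(2j * math.pi * f2 * t)
        ph = cmath.phase(z)
        if prev is not None:
            # unwrap: fold each phase step into [-pi, pi) before accumulating
            total_phase += (ph - prev + math.pi) % (2 * math.pi) - math.pi
        prev = ph
    duration = (n - 1) / fs
    return total_phase / (2 * math.pi * duration)

def qam_mix(c1, c2, a, b):
    """Mixing chroma phasors on one shared, locked carrier is just linear."""
    return a * c1 + b * c2

# A 70/30 "dissolve" between two flat colours on the same line
f1, f2 = 4.25e6, 4.40e6
f_mix = mean_inst_freq(f1, f2, 0.7, 0.3)
blend = 0.7 * f1 + 0.3 * f2      # what a true 70/30 dissolve would need
print(f"decoded {f_mix / 1e6:.4f} MHz, wanted {blend / 1e6:.4f} MHz")
```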

…or more likely YCbCr or similar

Kinda like HD Radio… lesser quality at a higher price.

Oh… and proprietary. Long live DRM :) …haha

Now if AMBE would just DIE and long live CODEC2

I will never understand Americans’ hate for OSS.

Guess it’s because it’s hard to make billions on a freebie???

(We need a EDIT feature here!!)

I am showing my ignorance, but I want to ask anyway: what does OSS mean in this context? You are obviously not referring to the United States’ WWII Office of Strategic Services. I don’t think this is a different way of referring to the IBM operating system OS/2. And I am not a video person. So I am lost.

And the FCC did a similar nonstandard DTV with 8VSB rather than doing the DVB family (DVB-T, DVB-S, S2, etc.)

“DVB-T, DVB-S, S2, etc.)”

Yep… forgot about that.

Just one more cluster fuke by the FCC and the US Gov :(

Among MANY!

And CCS; luckily the industry self-corrected that one.

I’m pretty sure it was because of better spectral efficiency with the technology of the day. I know they had a bake-off. The risk of pioneering technology is that standards evolve pretty quickly. Remember, early stereo radio wasn’t an L-R subcarrier with a pilot carrier; that came later. It’s been a $$$$+ burden on broadcast owners to update through the versions (now up to ATSC 3.0, etc.). Definitely not the way to create critical-mass public buy-in, but probably necessary and probably not done yet.
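The "L-R subcarrier with a pilot carrier" scheme is easy to sketch in Python. In FM stereo the mono-compatible L+R sits at baseband, L-R rides as suppressed-carrier AM on 38 kHz, and a 19 kHz pilot lets the receiver regenerate that 38 kHz reference. The levels below are simplified, the test signal is a constant (DC) level on each channel, and the receiver is assumed to have already regenerated a phase-locked 38 kHz reference; the function names are my own.

```python
import math

PILOT_HZ = 19_000
SUBCARRIER_HZ = 2 * PILOT_HZ   # 38 kHz, phase-locked to the pilot

def mpx(left, right, t, pilot_level=0.1):
    """Simplified stereo multiplex: L+R baseband, L-R as suppressed-carrier
    AM on 38 kHz, plus the 19 kHz pilot."""
    return ((left + right)
            + (left - right) * math.sin(2 * math.pi * SUBCARRIER_HZ * t)
            + pilot_level * math.sin(2 * math.pi * PILOT_HZ * t))

def round_trip(left, right, fs=1_900_000, n=1_000):
    """Encode constant L/R levels, then recover them by averaging over
    whole pilot cycles (a crude low-pass filter)."""
    acc_sum = acc_diff = 0.0
    for k in range(n):
        t = k / fs
        m = mpx(left, right, t)
        acc_sum += m
        # synchronous demodulation with the regenerated 38 kHz reference
        acc_diff += 2 * m * math.sin(2 * math.pi * SUBCARRIER_HZ * t)
    l_plus_r, l_minus_r = acc_sum / n, acc_diff / n
    return (l_plus_r + l_minus_r) / 2, (l_plus_r - l_minus_r) / 2

print(round_trip(0.6, -0.2))
```

A mono receiver simply low-passes the multiplex and hears L+R, which is what made the scheme backwards compatible.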

Today’s broadcast DTV is a whole lot better than the first version. It’s the pioneers that get the arrows.

BTW PAL also required a delay line to store the previous line.
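Here is what that delay line buys, in an idealised baseband Python model: chroma is a complex phasor U + jV, V is inverted on alternate lines, and the signal path adds a constant phase error. A decoder that simply undoes the V switch shows hue errors of opposite sign on alternate lines (the Hanover bars mentioned above); averaging each line with the delayed previous one cancels the hue error and leaves only a small saturation loss (a factor of cos φ). Real decoders do this on the modulated subcarrier; the function names here are hypothetical.

```python
import cmath
import math

def pal_line(u, v, n, phase_error):
    """Received chroma phasor for line n: V is inverted on odd lines,
    then the signal path adds a constant phase error (radians)."""
    v_switched = v if n % 2 == 0 else -v
    return complex(u, v_switched) * cmath.exp(1j * phase_error)

def decode_simple(c, n):
    """No delay line: just undo the V switch (complex conjugate on odd lines).
    The hue error flips sign from line to line, i.e. Hanover bars."""
    return c if n % 2 == 0 else c.conjugate()

def decode_delay_line(c_now, n, c_prev):
    """Delay-line PAL: average this line with the delayed previous line.
    Opposite hue errors cancel, leaving only a small loss of saturation."""
    return (decode_simple(c_now, n) + decode_simple(c_prev, n - 1)) / 2

u, v, phi = 0.3, 0.4, math.radians(10)
received = [pal_line(u, v, n, phi) for n in range(4)]
for n in range(1, 4):
    simple = math.degrees(cmath.phase(decode_simple(received[n], n)))
    averaged = math.degrees(cmath.phase(decode_delay_line(received[n], n, received[n - 1])))
    print(f"line {n}: simple {simple:6.2f} deg, delay line {averaged:6.2f} deg")
```

With a 10° error, the true hue of about 53.13° comes out of the simple decoder alternating between about 43.13° and 63.13°, while the delay-line decoder holds 53.13° on every line.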

“A camel is a horse designed by committee.”

And then they designed the SCART connector.

At least you can’t plug it in the wrong way around…

Wasn’t SECAM mostly used by the Communist Soviet Union and Communist China?

After the Berlin Wall fell, most of Eastern Europe dropped SECAM in favor of PAL.

SECAM was definitely used by Russia (China, though, went with PAL) and, as you’d expect, by most if not all French ‘territories’ and dependencies; pretty much everywhere else used PAL or NTSC.

Didn’t stop anyone in those countries watching PAL TV from neighbouring countries, though; it was just in monochrome. The main (but simple to resolve) stumbling block was differing sound IF frequencies: some were 5.5MHz, others 6MHz and 6.5MHz, so you needed to either replace the sound filters or work out a way to switch between them, and alter the quadrature coil in the demodulator.


The original definition of composite video was a video signal composited with a sync signal; non-composite video was video without syncs, as present on camera outputs. Later the term came to be used for a coded colour signal.

Vision was originally the term for a video signal modulated onto an RF carrier; hence vision mixers should more correctly be called video mixers, and video tape recorders should have been called vision tape recorders.