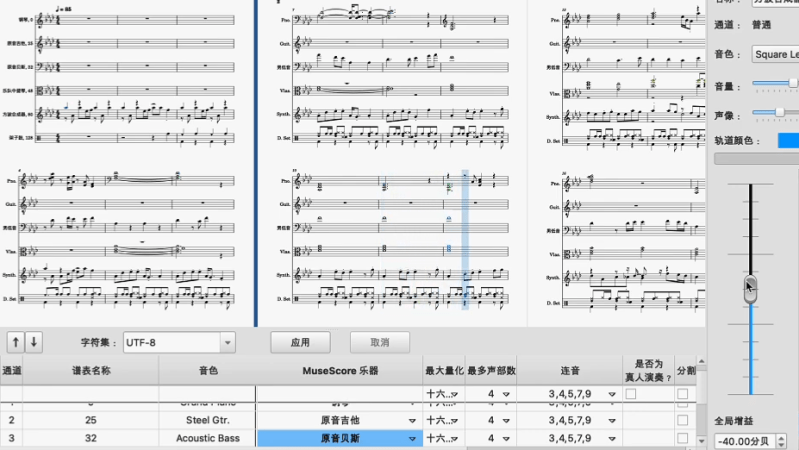

Music generation guided by machine learning can make for great projects, but there’s usually not much apparent control over the results. The system makes what it makes, and it’s an achievement if the results are not obvious cacophony. That changes with GETMusic, which allows for a much more involved approach because it understands and creates music as separate tracks. Among other things, this means one can generate a basic rhythm and melody first, then add further elements on top, leaving the earlier ones unchanged.

GETMusic can make music from scratch, or guided by examples, and under the hood uses a diffusion-based approach similar to the method behind AI image generators like Stable Diffusion. We’ve previously covered how Stable Diffusion works; here the same basic principles guide the model from random noise to useful tracks of music rather than to images.
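To make the track-wise idea concrete, here is a toy sketch in Python. It is not GETMusic's code or API. It assumes a masked-token style of denoising, and a random choice stands in for the trained model's prediction. The names `toy_denoise`, `MASK`, and the token vocabulary are all hypothetical. What it shows is the control flow only: empty cells in the chosen target tracks are filled in over several steps, while existing tracks are left exactly as they were.

```python
import random

MASK = None  # hypothetical placeholder for a note that has not been generated yet


def toy_denoise(grid, target_tracks, vocab, steps=4, seed=0):
    """Fill masked cells in target_tracks over several steps.

    Cells in every other track, and already-filled cells, are never touched.
    """
    rng = random.Random(seed)
    # Work on a copy so the caller's tracks stay unchanged.
    out = {name: list(tokens) for name, tokens in grid.items()}
    masked = [
        (track, i)
        for track in target_tracks
        for i, tok in enumerate(out[track])
        if tok is MASK
    ]
    rng.shuffle(masked)
    for step in range(steps):
        remaining = steps - step
        count = -(-len(masked) // remaining)  # ceiling division: spread the work evenly
        for _ in range(count):
            track, i = masked.pop()
            # Assumption: a real model would predict this token from the
            # surrounding tracks; here we just pick one at random.
            out[track][i] = rng.choice(vocab)
    return out


vocab = ["C2", "E2", "G2"]
song = {
    "drums": ["kick", "hat", "snare", "hat"],
    "melody": ["C4", "E4", "G4", "E4"],
    "bass": [MASK] * 4,  # the track we want generated
}
result = toy_denoise(song, ["bass"], vocab)
```

After the call, `result["drums"]` and `result["melody"]` are identical to the inputs, and only `result["bass"]` has been filled in. That mirrors the workflow described above: lay down rhythm and melody first, then generate an accompaniment around them.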

Just a few years ago we saw a neural network trained to generate Bach, and while it was capable of moments of brilliance, it didn’t produce uniformly-listenable output. GETMusic is on an entirely different level. The model and code are available online and there is a research paper to accompany it.

You can watch a video putting it through its paces just below the page break, and there are more videos on the project summary page.

I am sure that AI can and will generate some excellent music. If it is commercialized, who will get the royalties? Maybe just like “video killed the radio star”, AI will kill the desire to learn and master an instrument. I hope not.

The most sensible thing would be for the person using/owning the AI to get the royalties. Of course, when everybody can make music with AI, royalties will trend towards zero.

> royalties will trend towards zero

It’s already the case with streaming services like Spotify

That’s a bit of a myth. Just because the record companies take 99.5% doesn’t mean there are no royalties; they are just diverted further away from the artist.

IANAL, but right now I think that any IP (music, movies, code, etc.) created by anything other than a person cannot be copyrighted, in the US at least. There have been a ton of legal machinations around this of late with the advent of better and better AI.

Not sure what happens when hybrid approaches are taken… not to mention, of course, you could simply say “I invented this!” and who could disprove it?

Lots of interesting things to come, probably none of it will help Da Lil’ People (e.g. individuals vs. corporations.)

So, if I use a MIDI file “source” which is Isaac Hayes Theme From Shaft, and a “target” of Phil Collins In The Air Tonight, what do I get?

Whatever it is, I want to call it “Shaft In The Air”.

It should be noted that water from pubic fountains contains a lot of bacteria and should not be drunk unless you’re looking for a laxative.

Hmm…

I’ll have to remember that, next time I come across a “pubic” fountain.

It may be the bias caused by their source files, but this AI seems perfect for all your needs of 80’s eastern pop songs.

The music industry has been run by an inhuman algorithmic process and, outside of the creative explosion of the mid ’60s that lasted less than a decade, has made and will continue to make mostly crap. Will this make it any worse? Producers, arrangers, and all the gotta-sound-like-the-last-hit makers did it; even the Monkees did it.