Essentially, it uses a Raspberry Pi and a Respeaker four-mic array to listen to conversations in the room. It listens and records 15-20 seconds of audio, and sends that to the OpenWhisper API to generate a transcript.

Essentially, it uses a Raspberry Pi and a Respeaker four-mic array to listen to conversations in the room. It listens and records 15-20 seconds of audio, and sends that to the OpenWhisper API to generate a transcript.The natural lulls in conversation presented a bit of a problem in that the transcription was still generating during silences, presumably because of ambient noise. The answer was in voice activity detection software that gives a probability that a voice is present.

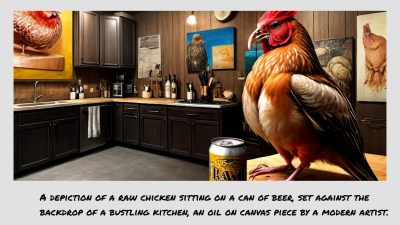

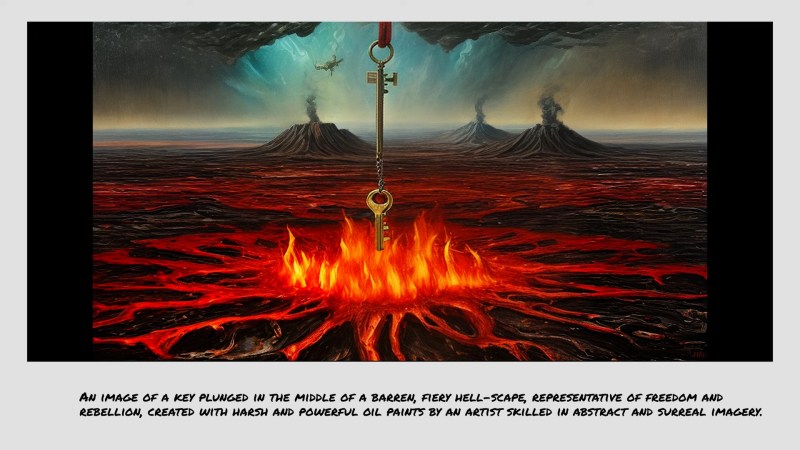

Naturally, people were curious about the prompts for the images, so [TheMorehavoc] made a little gallery sign with a MagTag that uses Adafruit.io as the MQTT broker. Build video is up after the break, and you can check out the images here (warning, some are NSFW).

wow.

What will sensors and AI lead to next?

“The walls have ears.”

That’s for sure.

I find it amazing how the “machine” gets some things done well, but totally blows it on others.

It all depends on how the input images are labeled. Applied concepts are what make AI blow it because because they can have so many varying depictions. You need to be a literal as possible.

It is like all things in life, if you master something and you are asked to work on something at the very edge of your knowledge where you have had little experience, you may totally suck at that. The same is true for AI, but unlike a human, AI does not “know” that they suck, they will just try and do what they were asked to do no matter how little training data was used in that area.

Exactly that. I play around with Easy Diffusion (a canned version of Stable Diffusion) on my computer and it can be amusing to see what it comes up with when I feed it detailed text prompts for HOW something should look but leave out WHAT it should be … or I make the subject really ambiguous. The AI plods ahead anyway. I’ve gotten some really pretty images that way, some of which are really bizarre.

“whisper frame” … it could be better named. Whisper frame does not truly capture its essence.

More like:

“ear art” “ listen art” “talk art”

“wall-E-ars”

“listen paintings” “talk paintings”

even “sound paintings” if you tuned the prompt generator to respond to music genres instead of words.

Nsfw? Are you from iran? But they are nice lucid dreams and night mares indeed..

Hmm…live chicken vs. raw chicken, chicken sitting near a can of “Raw” beer, bustling kitchen with no apparent activity… doesn’t really seem at all semantically sensitive or intelligent.

I’m not quite sold on AI. That is, other than the fact that it can come up with anything at all.

We used to do this in the late 60’s with orange barrel and purple micro dot. Shared visions were experienced. The fathers of electronic music the Berlin School, Tangerine Dream named suitably.

In the next generation will they will be on a “trip”, VR avatars games and all. But whose trip, that’s the future.

love this!

I love this!! You inspired me to make my own and my friends and I are having a great time with it. I put my code on GitHub so anyone can try it. Laptop or Raspberry Pi. https://www.jimschrempp.com/features/computer/speech_to_picture.htm