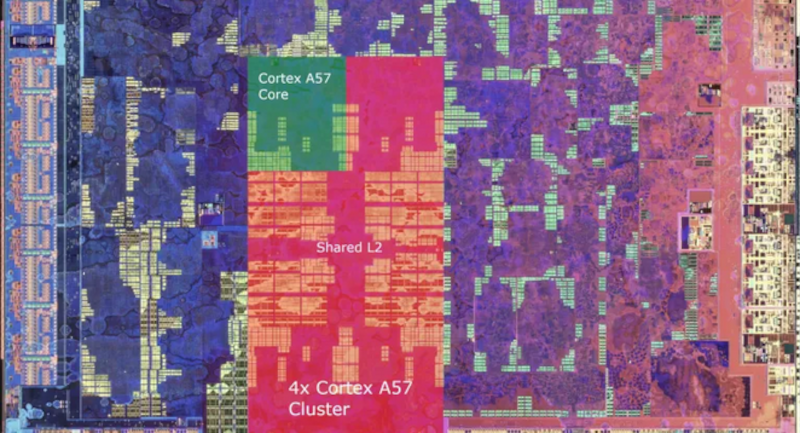

Ever wonder what’s inside a Nintendo Switch? The chip is an Nvidia Tegra X1, and if you peel back a layer, you’ll find four ARM Cortex-A57 CPU cores, each taking up about two square millimeters of die area. The whole cluster, including its cache, takes up just over 13 square millimeters. [ClamChowder] takes us inside the Switch’s Cortex-A57 cores in a recent post.

Interestingly, the X1 also has four A53 cores, which are more power efficient, but according to the post, Nintendo doesn’t use them. The 4 GB of DRAM is LPDDR4 memory with a theoretical bandwidth of 25.6 GB/s.
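The 25.6 GB/s figure comes from simple arithmetic: peak bandwidth is the bus width in bytes times the transfer rate. The sketch below assumes a 64-bit LPDDR4 interface running at 3200 MT/s, the configuration commonly reported for the Tegra X1. Neither number comes from the post itself, so treat them as assumptions.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, transfers_per_s: float) -> float:
    """Theoretical peak bandwidth in GB/s (decimal gigabytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfers_per_s / 1e9


# Assumed Tegra X1 memory configuration: 64-bit bus, LPDDR4-3200.
BUS_WIDTH_BITS = 64
TRANSFER_RATE = 3200e6  # transfers per second (3200 MT/s)

if __name__ == "__main__":
    # 8 bytes per transfer * 3.2 billion transfers per second
    print(f"{peak_bandwidth_gb_s(BUS_WIDTH_BITS, TRANSFER_RATE):.1f} GB/s")
```

This is a theoretical ceiling; sustained bandwidth in real workloads will be lower.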

The post details the out-of-order execution and branch prediction the A57 uses to improve performance. We can’t help but marvel that in our lifetime, we’ve seen computers go from giant, expensive machines to a game console with eight CPU cores on its chip and advanced features like out-of-order execution. Still, [ClamChowder] makes the point that the Switch’s processor is anemic by today’s standards and can’t compete with even an outdated desktop CPU.
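Branch prediction is easier to picture with a toy model. The sketch below is the classic textbook two-bit saturating counter, not the Cortex-A57’s actual predictor, which is far more sophisticated. The table size and the indexing by branch address are illustrative assumptions.

```python
class TwoBitPredictor:
    """Toy branch predictor: one 2-bit saturating counter per table entry.

    Counter values 0-1 predict "not taken", 2-3 predict "taken".
    """

    def __init__(self, entries: int = 1024):
        self.entries = entries
        self.counters = [1] * entries  # start in "weakly not taken"

    def _index(self, pc: int) -> int:
        # Hypothetical indexing scheme: branch address modulo table size.
        return pc % self.entries

    def predict(self, pc: int) -> bool:
        return self.counters[self._index(pc)] >= 2

    def update(self, pc: int, taken: bool) -> None:
        # Saturate at 0 and 3 so one surprise doesn't flip a strong prediction.
        i = self._index(pc)
        if taken:
            self.counters[i] = min(3, self.counters[i] + 1)
        else:
            self.counters[i] = max(0, self.counters[i] - 1)


def accuracy(predictor: TwoBitPredictor, pc: int, outcomes: list[bool]) -> float:
    """Fraction of outcomes predicted correctly for a single branch."""
    correct = 0
    for taken in outcomes:
        if predictor.predict(pc) == taken:
            correct += 1
        predictor.update(pc, taken)
    return correct / len(outcomes)


if __name__ == "__main__":
    # A loop branch taken 9 times then falling through, repeated 10 times.
    loop_pattern = ([True] * 9 + [False]) * 10
    print(accuracy(TwoBitPredictor(), pc=0x400, outcomes=loop_pattern))
```

For this loop pattern it prints 0.89: after warming up, the counter only mispredicts the loop exit, and a single exit isn’t enough to flip it to “not taken”.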

Want to program ARM in assembly language? We can help you get started. You can even do it on a breadboard, though the LPC1114 microcontroller is a far cry from what even the Switch is packing under the hood.

I can’t help but wonder if the four unused cores are a holdover from earlier in development, when they might’ve hoped to use them while the system was undocked…

That said, considering it’s basically a slightly customized Nvidia Shield (which I don’t see Nvidia ever allowing again, given how much cash Nintendo made off it while Nvidia’s own platform more or less failed), it’s possible the cores are just a leftover from the Shield days.

Of my two guesses, I think running on the four weaker cores in handheld mode was more likely their original intent, tbh.

I say this because everyone and their grandma was disappointed in the original Switch’s battery life, but less than a year after launch it became less of an issue because A) it’s hard to find a place without a power outlet nowadays thanks to phones and laptops, and B) the original Switch is actually a pretty terrible handheld gaming device. It’s big, it’s heavy, it’s loud, and the pathetic kickstand never really worked. On top of that, using a single Joy-Con (as in, one half) per person is impossible even with the tiniest, toddler-sized hands. They’re just absurdly uncomfortable.

I could easily see Nintendo just being like… yeah, this isn’t worth it. Especially because I’m not convinced the CPU was what was draining the power. The display alone is notoriously inefficient and gets uncomfortably hot even when the system is idling. Choosing a cheap screen was the right call, since the system was far more expensive than Nintendo’s past handhelds, but I can’t imagine it helped battery life at all.

I’m curious whether the processor changed in later revisions (the handheld-only Switch Lite and the OLED Switch). I don’t think it did, but considering the other changes to both systems, I wouldn’t be surprised if they managed to, I dunno, dummy those cores out.

At the end of the day, though, it’s a little sad that Nintendo and Nvidia essentially created what other manufacturers are only just catching up to nowadays. There was so much more they could’ve done with this system, but the whole time it’s felt unusually conservative for them. The Wii, DSi, 3DS and Wii U (the latter for better or for worse) felt like celebrations of new and interesting experiments, while the Switch was very… subdued. Minimalist. That in and of itself is appreciated, since you can just hit power and be playing in under two seconds (unlike the Microsoft and Sony consoles, which usually take eons unless you already had a game loaded…), but at the same time it feels unusually hollow for Nintendo.

Neh. Enough of my tangent. Either way it’s nice to actually get a proper peek at the processor. I look forward to combing over this for more details later.

Rumor is the Tegra has a hardware bug where enabling one set of cores makes the other malfunction: you can enable either the A53s or the A57s, but not both.

It’s not really a hardware bug; they just deliberately power-gated the clusters so that it’s either-or. I’m guessing they share cache SRAM or registers or something that makes them mutually exclusive. The idea, though, is that in sleep mode you could run things on the A53s. The issue is that the A53s are missing a lot of hardware features the A57s have, so you’d have to target the lowest common denominator in your compiler flags and lose performance on the A57s for that process. It gets messy really fast; kernels aren’t really designed to switch between two different ARM revisions, afaict.

The A53 cores do work though, I tested them once on an RCM’d Switch.

Re: processor changes, there was one significant upgrade to the whole SoC, from the 20 nm Tegra X1 to the 16 nm Tegra X1+. This was quietly introduced in the regular Switch in August 2019 and is used in all Switch Lite and Switch OLED models. Besides the die shrink, the X1+ does indeed get rid of the A53 cores (though I suppose they could still be on the die and disabled). It also has an updated boot ROM that fixes an unpatchable hardware exploit much loved by Nintendo Switch hackers.

The article saying that Nintendo chose not to use the A53 cores is correct but somewhat misleading, since pretty much *everyone* quickly decided not to use the A53 cores (including Google for the Pixel C, and Nvidia themselves in many of their later devices). They simply didn’t offer enough power savings to justify their lower performance.

This seems like a great counterpoint to the concept of big.LITTLE. If a major player discovers they can’t use the little cores, maybe they don’t do as much good as people think.

The Tegra X1 used a particularly crappy implementation of ARM’s big.LITTLE architecture, especially for gaming devices, so I wouldn’t use it to pass judgment on the concept as a whole.

The X1 can only run one cluster of four cores at a time (either the A57s or the A53s), and it has a power management controller that switches between the two based on demand and other factors. The OS has no direct control over this; it essentially just sees a four-core CPU.

In theory this allows additional power savings beyond just throttling down the A57s when they’re not under load, but in practice it doesn’t help very much on a device like the switch. If you’re CPU-bound on even *one* core and trying to maximize performance, you’re never going to be able to drop down to the A53s.
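To see why a single busy core pins the X1 to its big cluster, here is a toy model of cluster migration. The thresholds, the use of per-core utilization, and the hysteresis band are all hypothetical; this is not the Tegra power management controller’s actual policy. The point it illustrates is that all four logical CPUs move together, so the busiest core decides where everyone runs.

```python
from enum import Enum


class Cluster(Enum):
    BIG = "A57"
    LITTLE = "A53"


# Hypothetical thresholds, as a fraction of the active cluster's capacity.
UP_THRESHOLD = 0.80    # move to the big cluster above this
DOWN_THRESHOLD = 0.30  # move back to the little cluster below this


def next_cluster(current: Cluster, per_core_load: list[float]) -> Cluster:
    """Pick the active cluster in a toy 4+4 cluster-migration design.

    The OS sees four CPUs and they all switch together, so only the
    busiest core's load matters.
    """
    peak = max(per_core_load)
    if current is Cluster.LITTLE and peak > UP_THRESHOLD:
        return Cluster.BIG
    if current is Cluster.BIG and peak < DOWN_THRESHOLD:
        return Cluster.LITTLE
    return current  # inside the hysteresis band: stay put


if __name__ == "__main__":
    # A game with one saturated thread and three nearly idle ones.
    game = [1.0, 0.05, 0.05, 0.05]
    # A phone idling with light background work on every core.
    idle_phone = [0.10, 0.08, 0.05, 0.02]
    print(next_cluster(Cluster.BIG, game))        # stays on the big cluster
    print(next_cluster(Cluster.BIG, idle_phone))  # drops to the little cluster
```

The game’s average load is only about 29%, yet it never leaves the A57s, because one saturated thread is enough to block the switch.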

On something like a cell phone that spends a lot of time relatively idle, this arrangement makes much more sense, but it’s still the first, simplest, and least efficient form of big.LITTLE. There’s also a form where each big core is paired with a little core and each pair switches independently, and a final form where all the big and little cores are independent and can be used simultaneously (basically the approach Intel has adopted in its recent desktop CPUs).
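For a 4+4 chip like the X1, the practical difference between the three big.LITTLE schemes is how many cores the OS can schedule at once and whether big and little cores can be active at the same time. The sketch below models that using ARM’s names for the schemes (cluster migration, CPU migration, and global task scheduling, also called heterogeneous multi-processing). It assumes two equal-sized clusters, as on the X1, and ignores everything else about real schedulers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scheme:
    name: str
    visible_cpus: int  # logical CPUs the OS can schedule on at once
    can_mix: bool      # can big and little cores be active simultaneously?


def schemes_for(cores_per_cluster: int) -> list[Scheme]:
    """Model the three big.LITTLE schemes for two equal clusters."""
    return [
        # Whole cluster switches: OS sees N CPUs, all big or all little.
        Scheme("cluster migration", cores_per_cluster, can_mix=False),
        # Each big core paired with a little core; pairs switch independently.
        Scheme("CPU migration", cores_per_cluster, can_mix=True),
        # Every core is independent and schedulable at the same time.
        Scheme("global task scheduling", 2 * cores_per_cluster, can_mix=True),
    ]


if __name__ == "__main__":
    for s in schemes_for(4):
        print(f"{s.name}: {s.visible_cpus} CPUs, mixed big/little: {s.can_mix}")
```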

An LPC1114 is a Cortex-M0 and only supports Thumb instructions. That’s pretty different from a Cortex-A53, which implements ARMv8-A and can run 64-bit (AArch64) code as well as 32-bit ARM and Thumb code, though in practice it’s almost always run in either 64-bit or 32-bit mode.

At a high level, it doesn’t matter too much which processor you learn on, especially if you won’t be using assembly. The obvious choices are C/C++ or Rust, either on top of an OS or bare-metal, but you could even target an SoC with Pascal if you have a board support package and compiler for it: Ultibo supports bare-metal Pascal on most, if not all, Raspberry Pi models. In terms of capabilities and architecture, any SoC board like the RPi, BeagleBoard or OrangePi would be a closer equivalent to the Tegra X1 than a microcontroller like an STM32F or LPC1114.

PicoFly modchips are now available for $10-15. They’re a little tricky to install on a Lite (the first time I’ve had to solder under a magnifier), but I’m running Ubuntu Jammy derived from the L4T project, RetroPie is a thing, and open game engines will compile on it. Sadly, there’s a frame-pacing bug Nvidia doesn’t seem to be planning to fix. It looks like they apply the rotation (it’s a portrait screen) at the wrong place in the pipeline, or use the wrong pipe? I’m unsure of the details. But the video decoder will apply the rotation with no penalty, so in theory it can be fixed.

Some work has also been done soldering on larger memory chips, which do work.