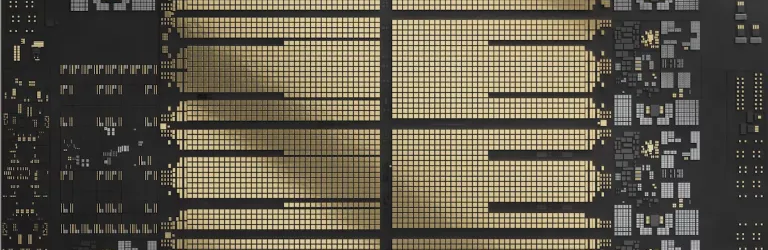

It is hard to imagine what a mainframe or supercomputer can do when we all have what amounts to supercomputers on our desks. But if you look at something like IBM’s Telum mainframe chip, you’ll get some ideas. The Telum II has “only” eight cores, but they run at 5.5 GHz. Unimpressed? It also has 360 MB of on-chip cache, plus I/O and AI accelerators. A mainframe might use 32 of these chips, by the way.

[Clamchowder] explains in the post how the cache has a unique architecture. There are actually ten 36 MB L2 caches on the chip: one for each of the eight cores, one for the I/O accelerator, and one that is uncommitted.

A typical CPU has a shared L3 cache, but with so much L2 cache, IBM went in a different direction. As [Clamchowder] explains, the chip reuses the L2 capacity to form a virtual L3 cache. Each cache has a saturation metric, and when one cache gets full, some of its data moves to a less saturated cache.

Remember the uncommitted cache? It always has the lowest saturation metric, so unless the same data is already in another cache, evicted data typically moves to that spare cache.
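To make the mechanism concrete, here is a toy model in Python. It is a sketch under stated assumptions, not IBM’s design: the saturation metric (fraction of a slice in use), the names `L2Slice` and `cast_out`, and the tiny capacities are all hypothetical. Only the ten 36 MB slices and the “prefer the least saturated cache, skip lines another cache already holds” behavior come from the description above.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Figures from the article: ten 36 MB L2 slices per Telum II chip.
NUM_SLICES = 10
SLICE_MB = 36
TOTAL_MB = NUM_SLICES * SLICE_MB  # 360 MB of on-chip cache


@dataclass
class L2Slice:
    name: str
    capacity: int                                 # toy capacity, in cache lines
    lines: set[int] = field(default_factory=set)

    def saturation(self) -> float:
        # Assumed metric: fraction of the slice in use (IBM's real metric may differ).
        return len(self.lines) / self.capacity


def cast_out(line: int, source: L2Slice, slices: list[L2Slice]) -> str | None:
    """Place a line just evicted from `source` into a peer slice (toy virtual L3)."""
    # If a peer already holds the same line, there is nothing to move.
    for s in slices:
        if s is not source and line in s.lines:
            return s.name
    # Otherwise pick the least saturated peer that still has room.
    peers = [s for s in slices if s is not source and len(s.lines) < s.capacity]
    if not peers:
        return None  # no room on chip: in this toy, the line simply leaves the chip
    target = min(peers, key=lambda s: s.saturation())
    target.lines.add(line)
    return target.name


# Toy setup: eight busy core slices, a lightly used I/O slice, and the empty spare.
cores = [L2Slice(f"core{i}", capacity=4, lines={10 * i, 10 * i + 1, 10 * i + 2}) for i in range(8)]
io = L2Slice("io", capacity=4, lines={900})
spare = L2Slice("spare", capacity=4)
slices = cores + [io, spare]

first = cast_out(999, cores[0], slices)   # a fresh line goes to the least saturated slice
second = cast_out(10, cores[0], slices)   # line 10 already lives in core1, so no copy is made
```

With the core slices mostly full and the spare empty, the fresh castout lands in the spare slice, while a line that another core already holds is left where it is.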

There’s more to it than that, so read the original post for more details. You’ll even find speculation about how IBM manages a virtual L4 cache across CPUs.

Cache has been a security bane lately on desktop CPUs. But done right, it is good for performance.

The TOP500 list does not exclusively define ‘supercomputer’ because, if nothing else, it’s a moving target. A Raspberry Pi 1A will outbenchmark much of Cray’s 1970s and 1980s catalogue, but that doesn’t retroactively “de-super” the Crays.

Many of the exotic architectures that make supercomputers distinctive also gradually trickle down into newer commodity systems. You can get reasonably priced x86-64 systems with multiprocessors, a NUMA memory model, and a bag full of special purpose hardware accelerators, for example.

The phrase could have been qualified a bit better (“By 1990 standards, most of us now have a supercomputer on our desks”), but it’s not that terrible.

Communication requires effort on both sides to determine underlying intent and meaning. Being deliberately obtuse might give you a thrill but it just makes you look bad to everyone else.

And you’ve clearly made a habit of this, given that you’re preempting the obvious rebuttal to your attack; however, that doesn’t invalidate it. There is clearly an expected level of common understanding in the HaD readership; it’s not aimed at the technologically unsophisticated.

Linus Tech Tips had a video about this chip (and the mainframe that goes along with it). It’s one of the rare videos on this channel that isn’t cringe, so check it out if you’re interested in this chip.

You think playing with cool tech is cringe? Okay, grandpa.

Linus is incompetent and mostly just an advertising tool. He had some knowledge and skill when he started, but he’s mostly just a corporate shill now: getting lots wrong, using bad metrics, and pushing crap products.

I’d rather watch a poorly dubbed Mexican tech show.

And his company treats employees like shit, from what I’ve heard.

Is that supposed to be some kind of insult?

I don’t get the implication that being old means not liking cool tech. Maybe you mean old people get out of touch with current trends.

At most, I would say older people have seen more and are less impressionable about new trends.

+42

New tech is cringe; it doesn’t matter what’s in the PC, what you do with it does.

Wow, everything has an AI sticker now. “AI accelerator,” wow. It’s just a matrix-calculation coprocessor.

Well the “done right” part is hard to test due to the exclusive rental nature of the chip.

Tilera used a virtual L3 trick years ago.

Nvidia owns them now.

Last week I reported that my hackaday.io password wasn’t working. No response yet.

This post sent me down a small rabbit hole reading about the z/Architecture CPUs in this beast. Compared with the micros I have experience with (even on the desktop), it’s an entirely different and insanely more complex universe. Here’s a z/Architecture document (at least what the ISA was in 2008) if you want some giggles.

https://www.ibm.com/docs/en/SSQ2R2_15.0.0/com.ibm.tpf.toolkit.hlasm.doc/dz9zr006.pdf

This is a 1960s CISC architecture that was allowed to grow without control. For example it has both binary floating point and hexadecimal floating point. If you thought x86-32 was arcane, this one goes much farther.
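For anyone curious what “hexadecimal floating point” means in practice, the sketch below encodes a Python float into the classic System/360-style short HFP word (1 sign bit, a 7-bit excess-64 exponent that counts powers of 16, and a 24-bit fraction) and shows the IEEE 754 binary32 pattern alongside it. The function names are made up for illustration, and truncating the fraction rather than rounding it is an assumption of this sketch.

```python
import math
import struct


def to_ibm_hfp32(x: float) -> int:
    """Encode x as a 32-bit IBM hexadecimal floating point (HFP) short word.

    value = (-1)**sign * 0.fraction * 16**(exponent - 64)
    """
    if not math.isfinite(x):
        raise ValueError("HFP has no encoding for infinities or NaN")
    if x == 0.0:
        return 0
    sign = 0x80000000 if x < 0 else 0
    x = abs(x)
    exponent = 0
    # Normalize so 1/16 <= x < 1, i.e. the leading hex digit of the fraction is non-zero.
    # Dividing or multiplying by 16 is exact in binary floating point.
    while x >= 1.0:
        x /= 16.0
        exponent += 1
    while x < 1.0 / 16.0:
        x *= 16.0
        exponent -= 1
    fraction = int(x * (1 << 24))  # keep 24 bits (6 hex digits), truncating the rest
    biased = exponent + 64
    if not 0 <= biased <= 127:
        raise OverflowError("value out of range for short HFP")
    return sign | (biased << 24) | fraction


def to_ieee_binary32(x: float) -> int:
    """The same value as an IEEE 754 binary32 bit pattern, for comparison."""
    return struct.unpack(">I", struct.pack(">f", x))[0]


for value in (1.0, 0.1, -118.625):
    print(f"{value:>9}: HFP {to_ibm_hfp32(value):08X}  IEEE {to_ieee_binary32(value):08X}")
```

For example, -118.625 comes out as C276A000 in HFP. The power-of-16 exponent also explains HFP’s “wobbling” precision: a normalized fraction only needs a non-zero leading hex digit, so it can start with up to three zero bits.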

The newer ones also support decimal floating point.
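Decimal floating point matters for things like currency, where values such as 0.10 need to be exact. Python’s standard `decimal` module is a software implementation of decimal arithmetic, so it can stand in for the idea here; this is an illustration of why the format exists, not a claim about how the mainframe hardware works internally.

```python
from decimal import Decimal, localcontext

# Binary floating point: 0.1 and 0.2 have no exact base-2 representation.
binary_sum = 0.1 + 0.2

# Decimal floating point: the same values are exact in base 10.
with localcontext() as ctx:
    ctx.prec = 16  # 16 significant digits, the precision of IEEE 754 decimal64
    decimal_sum = Decimal("0.1") + Decimal("0.2")

print(binary_sum == 0.3)              # False
print(decimal_sum == Decimal("0.3"))  # True
```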

↑ This. However modern this iron is, it would run the same System/370 stuff. I mean, kudos for the kickass design and the power; I’m sure it’s a boon for datacenters pressed for more oomph sans the additional real estate and electricity, but it’s all the same animal.

Can’t help but smile at Clamchowder’s obvious boner over it. Sounds like Dave’s Garage.

Don’t forget about running CPUs in lockstep to detect transient CPU faults and recover without disturbing the software at all. When reliability matters it’s not enough to just cover the RAM. Coverage must include every part of the system.
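A toy model of the compare-and-retry idea: the same step runs on two simulated cores, the results are compared after every step, and a mismatch (here a simulated transient bit flip) triggers a retry from the last agreed state. `run_lockstep` and `make_flaky_step` are hypothetical names; real mainframes do this checking in hardware, and this sketch makes no claim about IBM’s actual mechanism.

```python
from __future__ import annotations

from collections.abc import Callable


def run_lockstep(step: Callable[[int, int], int], state: int, inputs: list[int],
                 max_retries: int = 3) -> int:
    """Apply `step` to each input on two simulated cores, committing only agreed results."""
    for value in inputs:
        for _attempt in range(max_retries + 1):
            result_a = step(state, value)  # "core A"
            result_b = step(state, value)  # "core B", fed identical inputs
            if result_a == result_b:
                state = result_a           # cores agree: commit and move on
                break
            # Disagreement: assume a transient fault and retry from the last good state.
        else:
            # Every attempt disagreed: treat it as a hard fault rather than a transient one.
            raise RuntimeError("persistent mismatch between lockstepped cores")
    return state


def make_flaky_step(fault_on_call: int) -> Callable[[int, int], int]:
    """Hypothetical step function (addition) that flips one bit on a single chosen call."""
    calls = 0

    def step(state: int, value: int) -> int:
        nonlocal calls
        calls += 1
        result = state + value
        if calls == fault_on_call:
            result ^= 1 << 7  # simulated transient bit flip
        return result

    return step


total = run_lockstep(make_flaky_step(fault_on_call=3), 0, [1, 2, 3, 4])
print(total)  # 10: the flipped result was caught and recomputed
```

The program only ever sees committed state, which is the point the comment makes: the fault is detected and absorbed without the software noticing.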

3 years ago I bought a new PC. The old one was still mostly sufficient, but I wanted a bigger monitor and the (then) 12-year-old PC was not up to that. So I bought a Ryzen 5600G (pretty much the only choice during the virus thing; video cards were impossible to get back then). My new PC has a PassMark rating around 10x higher than my old PC, but it feels only about twice as fast. I rarely use more than one of its cores (6 cores, 12 threads) for more than 2 or 3 seconds.
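Part of the gap between a roughly 10x benchmark score and a roughly 2x felt speedup is consistent with Amdahl’s law: if most of a workload runs on one thread, extra cores barely move the total. The sketch below computes the textbook formula; the parallel fractions in the loop are illustrative guesses, not measurements of anyone’s workload.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Amdahl's law: speedup of a job when only `parallel_fraction` of it scales."""
    if not 0.0 <= parallel_fraction <= 1.0 or workers < 1:
        raise ValueError("parallel_fraction must be in [0, 1] and workers >= 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


# Illustrative fractions only; nobody measured these for the workloads above.
for p in (0.0, 0.5, 0.95):
    print(f"parallel fraction {p:.2f}: {amdahl_speedup(p, 12):.2f}x on 12 threads")
```

A fully serial task gets no benefit from the extra threads at all, which matches the “I rarely use more than one core” experience.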

In the old days, you started saving money for a new PC on the day you bought a new PC, because you knew it would be obsolete after two or three years. But now, the speed of a PC has become mostly uninteresting. I expect my current PC to also last more than 10 years. But I do agree that weather forecasts have become better in the last 20 years.

I am aware that there are still people who can benefit from faster processors and PCs (and better weather forecasts?), especially academia, AI stuff, and gamers. But for most people it really does not matter whether their Arduino sketch compiles in 100 ms or 5 s. Personally, I have only one big exception: FreeCAD is terribly slow with one of my drawings. Over the years I’ve drawn 8 or so different CNC machines in FreeCAD, and as a comparison I made a composite drawing that loads all of them into a single FreeCAD document. That drawing takes over 5 minutes to open, and all the while my CPU is chugging along at a measly 9% (i.e., a single thread). It’s one of the remaining big things for FreeCAD to fix before they are ready for a version 1.0 release.
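The 9% figure lines up with simple arithmetic: on a 6-core/12-thread CPU, one saturated hardware thread is about one-twelfth of total capacity, assuming the task monitor reports utilization across all hardware threads.

```latex
\frac{1\ \text{busy thread}}{12\ \text{hardware threads}} \times 100\% \approx 8.3\%
```

The small remainder up to the reported 9% is plausibly background activity.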

I agree. I upgraded to the Ryzen 1600 back when my R&D Athlon machine just wasn’t handling VMs well. I then upgraded only the processor on AM4 until I was running a 5900X, because I ‘could’… not because I needed to. The point is there is really no ‘need’ for most users to upgrade to the latest boards/processors (there are exceptions, I am sure). Those days are gone; I remember upgrading from Z80, 8086, 286, 386, 486, etc., and the ‘leap’ in performance each time. Those were heady days. But now my systems (laptops included) are very fast for all the things I do. Plenty of memory (64 GB on the R&D box, 16 GB minimum on the others) and fast 1 TB and 2 TB SSDs … running Linux … what’s missing?

I agree. I have an old Intel i3 HP laptop 💻 from 2015 with integrated graphics, 8 GB of RAM and a 1 TB HDD, and it’s still pretty fast for most things I wanna do besides AAA gaming, which it wasn’t good at back then either (it can’t play PS4/Xbox One era games well). Even when I use it for Android Studio and other programming software with a bunch of Google Chrome tabs in the background, it mostly runs fine. Only now, after almost 10 years, do I feel the laptop would be more usable with a RAM upgrade and a switch to an SSD.

Last year I got a new PC, because trying to play Cyberpunk 2077 on a ThinkPad T430 was torture. It ran at 640×480 at 5-8 fps, and that’s not great performance for a shooter… My new PC uses a Chinese motherboard built from recycled server parts, a Xeon E5-2667 from 2014, and a 3060 Ti. It’s so fast it’s hard to believe I am running a 10-year-old processor…

I have 16 cores, but mostly use one. Even gaming doesn’t push all cores to 100%. Running Cyberpunk on Ultra stresses the GPU, but the CPU has power to spare. Paired with a cheap NVMe drive, it boots quicker than my monitor can turn on.

I believe the increase in processing power is just for bragging rights, and only a small minority of users will really benefit from the fast CPUs hitting the market today, or even those from 5 years ago.

I work with those IBM mainframes. There, more power makes sense. You have the same amount of hardware, the same floor space, the same “air conditioning bill”, the same number of network cables and switches, and a lot of incremental power to run virtual machines or z/OS jobs. Without the increase, you would end up piling up hundreds or thousands of discrete servers, and that becomes an unmanageable mess quickly.

They are a beast, a different kind of beast, and they are great to work with.

From my own experience, some image processing algorithms take tens of minutes to run on recent, fairly high-end processors. Antenna optimization can also take a lot of time. High-resolution video can be challenging to play at full speed without graphics card acceleration.

Gamers want high performance new processors for best quality at high framerates.

Haha, yeah, OpenSCAD is the story I told myself as I upgraded from a 2010 chip back in 2019.

Same issue here with the comments.

My father in law was a developer for the old IBM mainframes. It’s fun to mention “newer” IT tech to him because 90% of the time his reply is “We were doing that back in the 70s!”.

Speaking of mainframes… Here I have a Raspberry Pi 5 running a DEC PDP-10 mainframe simulator with a front panel (from Obsolescence Guaranteed), with 2 GB of memory, a 256 GB SD card for the OS, and a 6 TB data HDD, all sitting on the corner of a shelf in my office with full 1 Gb Ethernet and Wi-Fi access. The total cost for this setup was $600 or so, and it consumes very little power. And it does ‘way way’ more than the original room-sized, expensive time-sharing PDP-10 could ever dream of doing.

We’ve come a long way.

I find it interesting that the $600 I spent today would have been around $64 in 1967… A PDP-10/50 back then was $342,000 and went up from there depending on ‘extras’. That is $3,220,739 in today’s dollars according to an inflation calculator… I ‘think’ I got a bargain :).
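The numbers in this comment can be checked against each other. The sketch only reuses the figures quoted above; the inflation factor is derived from them, not looked up independently.

```python
PDP10_1967_USD = 342_000     # PDP-10/50 base price from the 1967 price list
PDP10_TODAY_USD = 3_220_739  # the same price in today's dollars, per the commenter's calculator
SETUP_TODAY_USD = 600        # the Raspberry Pi 5 PDP-10 setup described above

# Cumulative inflation factor implied by the two PDP-10 figures (about 9.4x).
inflation_factor = PDP10_TODAY_USD / PDP10_1967_USD

# The $600 setup expressed in 1967 dollars (about $64, matching the comment).
setup_in_1967_usd = SETUP_TODAY_USD / inflation_factor

# How many $600 setups the base PDP-10/50 price would buy today (about 5,400).
setups_per_pdp10 = PDP10_TODAY_USD / SETUP_TODAY_USD

print(f"inflation factor: {inflation_factor:.2f}")
print(f"$600 today ~ ${setup_in_1967_usd:.0f} in 1967")
print(f"one PDP-10/50 ~ {setups_per_pdp10:,.0f} setups")
```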

https://ia801704.us.archive.org/33/items/TNM_PDP-10_computer_price_list_1967_20180205_0300/TNM_PDP-10_computer_price_list_1967_20180205_0300.pdf