For the last few years, the unquestioned story in artificial intelligence was that all of the big names in the field needed more compute, more resources, more energy, and more money to build better models. But simply throwing money and GPUs at these companies led to them getting complacent, and ripe to be upset by an underdog with a fraction of the computing resources and funding. Perhaps that should have been more obvious from the start, since people have long been building machine learning algorithms on extremely limited computing platforms, like this one built on the Atari 800 XL.

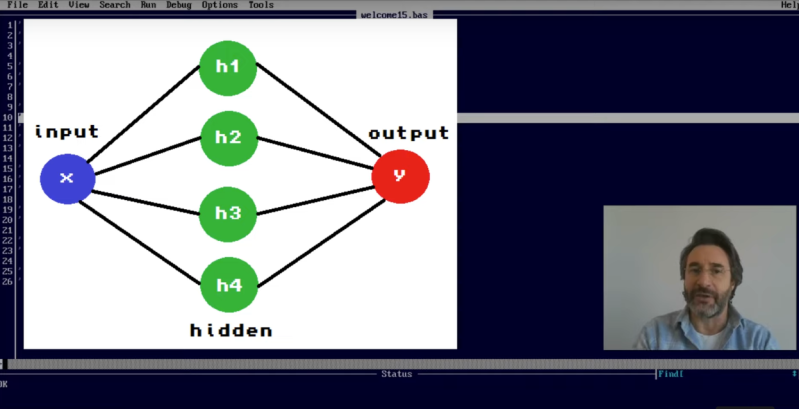

Unlike other models that use memory-intensive techniques like gradient descent to train their neural networks, [Jean Michel Sellier] is using a genetic algorithm to work within the confines of the platform. Genetic algorithms evaluate potential solutions by evolving them over many generations and keeping the ones which work best each time. The changes made to the survivors before they go through the next round of evolution can be made in many ways, but for a limited system like this a quick approach is to make small random changes. [Jean]’s program, written in BASIC, performs 32 generations of evolution to predict the points that will lie on a simple mathematical function.
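To make the idea concrete, here is a minimal Python sketch of a mutation-only genetic algorithm of the kind described above. It is not [Jean]’s BASIC program: the target function, the two-number genome, the population size, and the mutation step are all assumptions picked for illustration. Only the 32 generations and the "keep the best, apply small random changes" approach come from the description.

```python
import random


def target(x):
    # Hypothetical "simple mathematical function"; the article does not
    # say which function the original program fits.
    return 2.0 * x + 1.0


# Sample points at which candidates are scored (an assumption).
XS = [i / 4 for i in range(9)]


def fitness(genome):
    # Sum of squared errors between the candidate line a*x + b and the
    # target at the sample points. Lower is better.
    a, b = genome
    return sum((a * x + b - target(x)) ** 2 for x in XS)


def mutate(genome, rng, step=0.2):
    # Small random change to every parameter of a surviving candidate.
    return tuple(g + rng.uniform(-step, step) for g in genome)


def evolve(generations=32, pop_size=20, keep=5, seed=0):
    rng = random.Random(seed)
    population = [
        (rng.uniform(-5, 5), rng.uniform(-5, 5)) for _ in range(pop_size)
    ]
    history = []  # best error seen at the start of each generation
    for _ in range(generations):
        # Keep the candidates that work best this generation.
        population.sort(key=fitness)
        survivors = population[:keep]
        history.append(fitness(survivors[0]))
        # Refill the population with mutated copies of the survivors.
        children = [
            mutate(rng.choice(survivors), rng)
            for _ in range(pop_size - keep)
        ]
        population = survivors + children
    best = min(population, key=fitness)
    return best, history


if __name__ == "__main__":
    best, history = evolve()
    print("best parameters:", best)
    print("error, first generation:", history[0])
    print("error, last generation:", history[-1])
```

Because the survivors are carried over unchanged, the best error can never get worse from one generation to the next. The whole population is a handful of number pairs, which is why this style of training fits in the memory of an 8-bit machine.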

While it is true that the BASIC program relies on stochastic methods to train, it does work, and it shows that certain machine learning models can be built effectively on limited hardware, in this case an 8-bit Atari running BASIC. In previous projects he’s also been able to show how similar computers can be used for other complex mathematical tasks. Of course an 8-bit machine like this won’t challenge OpenAI or Anthropic anytime soon, but looking for more efficient ways of running complex computations is always a more challenging and rewarding problem to solve than buying more computing resources.

Genetic algorithms are so much fun. I was playing around with them on the Commodore 128 back in the day, as an experiment for enhancing the gameplay of a type-in game from RUN Magazine. It’s a shame they fell out of fashion some time ago, since I always felt like they had so much untapped potential.

I loved the story about a researcher who successfully ran a genetic algorithm tasked with creating a tone detector.

The algorithm successfully evolved code which worked on the target CPLD or FPGA but made absolutely no sense when analysed.

Turned out the “logic” was using things like the inter-gate capacitance.

It’s very important to monitor and study genetic algorithms though, because these unusual computation strategies can lead to hacks like rowhammer. I’m convinced there’s a lot more in the wild.

Tons of fun security research on this front. The TEMPEST specification came out of investigating ordered leakage, after all, and that’s downright primitive when looking at modern threats.

At one point in time, the Atari 400/800 computers had been used by AMSAT DL “ground control” for the OSCAR satellites (esp. AO-10, later AO-13).

https://www.youtube.com/watch?v=GDR4pqkmmxE

The Atari 400 can be seen at 32:14 in the background, for example.

https://en.wikipedia.org/wiki/AMSAT-OSCAR_10

Both OSCAR 10/13 had an IHU (integrated house-keeping unit) running IPS.

The processor was a COSMAC 1802.

https://www.om3ktr.sk/druzice/ao10.html

https://www.amsat.org/articles/g3ruh/118.html

https://amsat-dl.org/ips-high-level-programming-of-small-systems-for-the-amsat-space-projects/

How would you train a genetic algorithm to evaluate a chess board for the best move?

The thing that never sits right with me is that to write a genetic algorithm, we usually have a fitness calculation function at hand, which also implies we know what variables are present and how they relate to the fitness. Is it not just a matter of reverse calculating or brute-forcing the parameters to arrive at the fittest “organism”?