Holography is about capturing 3D data from a scene, and being able to reconstruct that scene — preferably in high fidelity. Holography is not a new idea, but engaging in it is not exactly a point-and-shoot affair. One needs coherent light for a start, and it generally only gets touchier from there. But now researchers describe a new kind of holographic camera that can capture a scene better and faster than ever. How much better? The camera goes from scene capture to reconstructed output in under 30 milliseconds, and does it using plain old incoherent light.

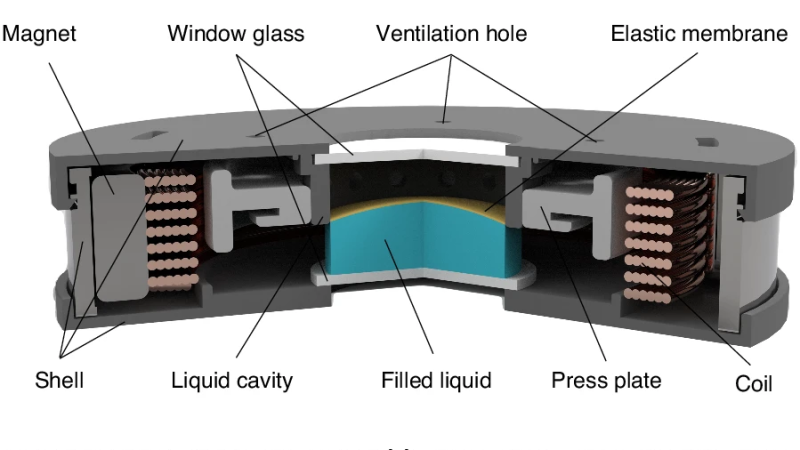

The new camera is a two-part affair: acquisition, and calculation. Acquisition consists of a camera with a custom electrically-driven liquid lens design that captures a focal stack of a scene within 15 ms. The back end is a deep learning neural network system (FS-Net) which accepts the camera data and computes a high-fidelity RGB hologram of the scene in about 13 ms. How good are the results? They beat other methods, and reconstruction of the scene using the data looks really, really good.
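For readers unfamiliar with the term, a focal stack is a set of images of the same scene taken at different focus settings. The sketch below shows a classical way to merge such a stack by picking, per pixel, the slice with the strongest local contrast. It is not the paper's FS-Net, which is a neural network that outputs a hologram; the function names `local_sharpness` and `merge_focal_stack` are hypothetical, and images are assumed to be same-sized grayscale lists of lists.

```python
def local_sharpness(img, y, x):
    """Absolute 4-neighbour Laplacian at (y, x); neighbours are clamped at the edges."""
    h, w = len(img), len(img[0])
    up = img[max(y - 1, 0)][x]
    down = img[min(y + 1, h - 1)][x]
    left = img[y][max(x - 1, 0)]
    right = img[y][min(x + 1, w - 1)]
    return abs(4 * img[y][x] - up - down - left - right)


def merge_focal_stack(stack):
    """Merge same-sized grayscale images taken at different focus settings.

    Returns (fused, index_map): fused takes each pixel from the slice that is
    locally sharpest, and index_map records which slice that was (a crude
    stand-in for depth).
    """
    h, w = len(stack[0]), len(stack[0][0])
    fused = [[0] * w for _ in range(h)]
    index_map = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            scores = [local_sharpness(img, y, x) for img in stack]
            best = scores.index(max(scores))  # first slice wins a tie
            index_map[y][x] = best
            fused[y][x] = stack[best][y][x]
    return fused, index_map
```

Calling `merge_focal_stack([near_image, far_image])` with two slices mirrors the two-slice case, but the result is an all-in-focus image plus a slice index per pixel, not wavefront data.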

One might wonder what makes this different from, say, a 3D scene captured by a stereoscopic camera, or with an RGB depth camera (like the now-discontinued Intel RealSense). Those methods capture 2D imagery from a single perspective, combined with depth data to give an understanding of a scene’s physical layout.

Holography by contrast captures a scene’s wavefront information, which is to say it captures not just where light is coming from, but how it bends and interferes. This information can be used to optically reconstruct a scene in a way data from other sources cannot; for example allowing one to shift perspective and focus.

Being able to capture holographic data in such a way significantly lowers the bar for development and experimentation in holography — something that’s traditionally been tricky to pull off for the home gamer.

All these camera posts are giving HaD a real Gernsbackian feel and I like it. I remember being amazed by holograms as a child. It’s cool seeing progress like this being made on the technology.

I can remember being absolutely blown away by the 1984 issue of National Geographic that had a hologram – of an eagle, if memory serves – on its cover. Seriously impressive to my young mind.

After some brief searching, I found this article – yes, it was an eagle. My memory gets a gold star.

https://americanhistory.si.edu/collections/object/nmah_713865

I still have that issue at home!

Me too! I was in 4th or 5th grade. Thought it was so awesome I brought it to school with me to look at on the bus, lunchtime, etc. Lol! It also started a fascination with lasers that exists to this day. The only lasers I could find back then were in the Edmund Scientific catalog: 5 mW HeNe lasers that were unobtainable no matter how many lawns I mowed.

Real-time acquisition and focus stacking is what enables fully sharp macro views at odd angles, as is typical for inspection during reflow. Really looking forward to that, 3D or not.

So it’s a video camera with a lens that does focus stacking in a fraction of a second. Stir in some AI, and ATAMO (And Then A Miracle Occurs)! It produces a “hologram”!

Aaaand… we’re back to the discussion of what a hologram really is and why this isn’t just called a light field camera.

Exactly. This doesn’t capture the interference pattern a typical hologram would capture. It will never be able to produce a view different from the one that was captured, simply because that information isn’t there anymore. Said differently, remove the AI magic, which is just a DNN for (m/f)aking the focus effect of a lens, and it’s a plain old camera. No fancy equipment is needed to reproduce the “hologram”; just show the picture on a computer. Their SLM is a plain old Texas Instruments DLP, sold by CAS Microstar Technology. In other words, it’s a 1080p video projector, which doesn’t have the resolution required to modulate the phase of the (incoherent) light reflecting in.

Does this mean that the 3D hologram is visible from a certain point of view and invisible from a slightly different perspective?

Not necessarily. The AI might be making up the data to fill in what the camera doesn’t capture.

Precisely. I’ve been a laser holographer for 50 years, and that may be another light field camera, but it has nothing to do with either holography or actual holograms. The language has morphed. Type in “hologram” on Google image search: the first 71 images are not holograms, the 72nd is, lol.

A while back I saw a video of a demo of a holographic display, not just a camera — this was a real hologram, producing a complete wavefront. It worked by having a big lump of transparent stuff, quartz maybe, surrounded by piezoelectric actuators. By producing the right sonic waveform within the glass it could induce it to bend laser light in just the right way to reproduce the interference patterns in a real hologram, except this could be changed in real time, so producing moving holograms.

It was connected to a very large computer which was just about capable of doing the real-time computations to render a spinning cube. I wonder what happened to this?

The spatial light modulator (https://en.wikipedia.org/wiki/Spatial_light_modulator) you describe is a commercial product and has been available for more than a decade. The authors of this paper use one to display their computed hologram.

Excellent!

I am, however, sad that that article contains no references to “Illudium Q”.

Any other 3D reconstruction technique mentioned here (stereoscopic, time of flight, even old-style Kinect structured light projection) can also provide shifted perspectives just as well as this can. This camera with a fancy lens also has just as much trouble with occlusions as they do, due to having a single viewpoint. (The exception is perhaps wide-baseline stereoscopic and multiscopic cameras, which literally have multiple viewpoints with a guaranteed distance between them.)

This isn’t even a very good implementation of this technique. There’s a temporal element as the camera effectively rotates through multiple different lenses one by one, meaning if the camera is moving or the scene is dynamic it’ll hurt reconstruction. I remember an alternative approach to this holographic reconstruction a while back which used simultaneous capture with multiple cameras with different focal lengths and generated the same wavefront information (and same ability to change focal lengths later) but without the motion problems.

15 ms is about 66 fps. Maybe not great for fast-moving objects, but perfectly suitable for slow-moving objects, such as an object on a rotating platform, to further generate a full 360-degree scene model, minus interior occlusions. Just because it isn’t perfect doesn’t mean it’s useless.
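The frame-rate arithmetic can be checked quickly. The sketch below uses the 15 ms capture and 13 ms compute times quoted in the article; whether capture and computation overlap (pipelined) or run back to back is an assumption, since the article does not say, and the function name `frames_per_second` is made up for illustration.

```python
def frames_per_second(frame_time_ms):
    """Convert a per-frame time in milliseconds to a frame rate."""
    return 1000.0 / frame_time_ms


capture_ms = 15.0  # focal stack capture time quoted in the article
compute_ms = 13.0  # hologram computation time quoted in the article

# Assumption: capture of the next stack overlaps with computing the current one,
# so capture is the bottleneck.
pipelined_fps = frames_per_second(capture_ms)

# Assumption: capture and computation run one after the other.
sequential_fps = frames_per_second(capture_ms + compute_ms)

print(f"pipelined:  {pipelined_fps:.1f} fps")
print(f"sequential: {sequential_fps:.1f} fps")
```

Either way, one capture plus one computation takes 28 ms, which matches the article's "under 30 milliseconds" figure.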

Who were the researchers? The article says the results are good; maybe it could show them? Some basic information seems to be missing: no links, names, or other information for me to research further.

Click straight through to the paper in the first link!

(There are twelve authors on the paper, hence “the researchers” for brevity.)

The capture time of 15ms got me wondering what sensor they were using. It’s noted as a Sony model in the description of the experimental setup. The exact model isn’t mentioned, but they all seem to top out at 60 FPS.

The paper seems to describe capturing only two depth levels to stack, so I think they may be capturing only two frames. They mention that adding more depth levels is possible but would slow things down. Not to say you couldn’t achieve it, but the more frames you capture, the more you either lose light available to each image or lower the overall frame rate.

Yes… Like any image sensor. The more time to integrate, the higher the number of photons converted to electrons, and the better the SNR. The issue is that pesky real-life scene that doesn’t stay still while you’re exposing.
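To put rough numbers on that trade-off, here is a minimal sketch assuming an ideal shot-noise-limited pixel, where a signal of N photoelectrons carries noise of sqrt(N). Read noise and dark current are ignored, the electron counts are made-up illustration values, and the function names are hypothetical.

```python
import math


def shot_noise_snr(photoelectrons):
    """SNR of an ideal shot-noise-limited pixel: signal N over noise sqrt(N)."""
    return photoelectrons / math.sqrt(photoelectrons)


def snr_per_slice(total_photoelectrons, num_slices):
    """SNR of each focal slice when a fixed light budget is split evenly."""
    return shot_noise_snr(total_photoelectrons / num_slices)


# Hypothetical budget: 10,000 photoelectrons per pixel over the whole capture window.
budget = 10_000
for slices in (1, 2, 4):
    print(slices, "slice(s):", round(snr_per_slice(budget, slices), 1))
```

Under these assumptions, quadrupling the number of slices within the same capture window halves the SNR of each slice, which is why adding depth levels costs either image quality or frame rate.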

So what could be done with a stereo pair of them?

I can imagine this to greatly simplify photogrammetric 3d reconstruction…

They keep saying ‘reconstruction’ and ‘real 3d scene’ but this is entirely synthetic from the NN. Am I missing something?

They are displaying a picture on the DLP and taking a picture of it. The picture that’s displayed on the DLP is generated/interpolated from 2 pictures of the same scene taken with different focus settings. There is almost no advance in this research; they are using the term holography to draw readers in, but this isn’t holography. At best, it’s a novel focus-stack interpolation algorithm.

That’s what I thought. Fast variable focus is cool. Some DSP can make that cooler. But holography it ain’t.

The death of RealSense has been greatly exaggerated, plenty of decent depth cameras available… https://www.intelrealsense.com/

Video based “3D” or at least variable focal field imaging has been available on high end phone cameras for some time, and using focal/plane information to provide 3D information has been part of some fairly intrusive surveillance camera systems for quite a while.

While this is a pretty cool implementation, the only novel feature (other than the software to make it all look good – no small feat in and of itself) seems to be the liquid lens.

The stuff about this being different from a Light Field Camera and actually a hologram is all completely false.

I’m not convinced this has anything to do with holography. It looks more like a single-viewpoint light-field capture mechanism that uses focus stacking by modulating the shape of the lens. Since the definition of holography includes capturing interference patterns to encode 3D information, saying this system doesn’t use interference disqualifies it from holography. Also, the author’s statement that stereographic cameras capture from a single viewpoint is false. Stereographic cameras capture from 2 or more viewpoints. That’s what makes them stereographic. This camera captures from a single viewpoint.

Embarrassing that this is in a Nature journal, given that (as several other commenters have pointed out), it’s just a focus stacking camera with no relation to actual holography or even light field photography.

However, there is a recent paper in another journal in which true incoherent holograms were captured in full color at 22fps by a palm-sized camera. That’s much more interesting, IMO:

https://spj.science.org/doi/10.34133/adi.0076

The authors used a polarization-sensitive color image sensor and several thin optical elements. Depth of field and spatial resolution are still limited, which is a known challenge with incoherent holograms.

Please show us the hologram. Video is the best way, not perfect, but helps if it is a real hologram.

Which liquid lens did they use? I would like to try one for another project