You’d think a paper from a research team at Carnegie Mellon would be short on fun. But the team behind LegoGPT would prove you wrong. The system lets you enter a text prompt and get back a physically stable LEGO model. They’ve done more than just publish a paper, too: there’s a GitHub repo and a running demo.

The authors note that automated generation of 3D shapes has been done before. What interests them is incorporating real physics constraints and planning the resulting shape in LEGO-sized chunks. At its core, the project is a set of training data used to teach a model to turn text into shapes. The heavy lifting is done by one of the LLaMA models. Training involved converting LEGO designs into tokens, just like a chatbot converts words into tokens.
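To make the tokenizing idea concrete, here is a minimal sketch, assuming a design is just a list of bricks and each brick becomes one line of text that a language model could read or emit. The `Brick` class, the `2x4 (x,y,z)` line format, and both function names are our own illustration and not necessarily what the LegoGPT repo uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Brick:
    """Hypothetical brick record: footprint h x w, placed at stud (x, y) on layer z."""
    h: int
    w: int
    x: int
    y: int
    z: int


def to_text(bricks):
    """Serialize bricks to one line each, e.g. '2x4 (0,0,0)'."""
    return "\n".join(f"{b.h}x{b.w} ({b.x},{b.y},{b.z})" for b in bricks)


def from_text(text):
    """Parse the format written by to_text back into Brick objects."""
    bricks = []
    for line in text.strip().splitlines():
        dims, pos = line.split(" ", 1)
        h, w = (int(v) for v in dims.split("x"))
        x, y, z = (int(v) for v in pos.strip("()").split(","))
        bricks.append(Brick(h, w, x, y, z))
    return bricks


if __name__ == "__main__":
    design = [Brick(2, 4, 0, 0, 0), Brick(1, 2, 1, 0, 1)]
    print(to_text(design))
```

Once a design is plain text like this, an ordinary tokenizer can chop it up the same way it would any other sentence.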

There are a lot of parts involved in the creation of the designs. They convert meshes to LEGO in one step using 1×1, 1×2, 1×4, 1×6, 1×8, 2×2, 2×4, and 2×6 bricks. Then they evaluate the stability of the design. Finally, they render an image and ask GPT-4o to produce captions to go with the image.
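The stability step is the interesting one. The sketch below is not the physics analysis from the paper. It swaps in a much cruder stand-in: a connectivity check that asks whether every brick is attached, through overlapping studs on adjacent layers, to something that eventually touches the ground. The tuple layout `(h, w, x, y, z)` and the function names are assumptions made for the example.

```python
from collections import deque


def footprint(brick):
    """Set of (x, y) stud cells covered by a brick given as (h, w, x, y, z)."""
    h, w, x, y, _z = brick
    return {(x + i, y + j) for i in range(h) for j in range(w)}


def connected_to_ground(bricks):
    """True if every brick links to a layer-0 brick via stud overlap.

    Two bricks are treated as attached when they sit on adjacent layers
    and their footprints share at least one cell. This ignores forces,
    torque, and brick collisions entirely.
    """
    cells = [footprint(b) for b in bricks]
    seen = {i for i, b in enumerate(bricks) if b[4] == 0}
    queue = deque(seen)
    while queue:
        i = queue.popleft()
        for j, other in enumerate(bricks):
            if j in seen:
                continue
            if abs(other[4] - bricks[i][4]) == 1 and cells[i] & cells[j]:
                seen.add(j)
                queue.append(j)
    return len(seen) == len(bricks)


if __name__ == "__main__":
    arch = [(1, 1, 0, 0, 0), (1, 1, 3, 0, 0), (4, 1, 0, 0, 1)]
    floating = [(1, 1, 0, 0, 0), (1, 1, 2, 0, 1)]
    print(connected_to_ground(arch), connected_to_ground(floating))
```

A check like this would catch bricks hanging in midair, but it would happily pass a tall, wobbly cantilever, which is presumably why a real physics analysis is needed.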

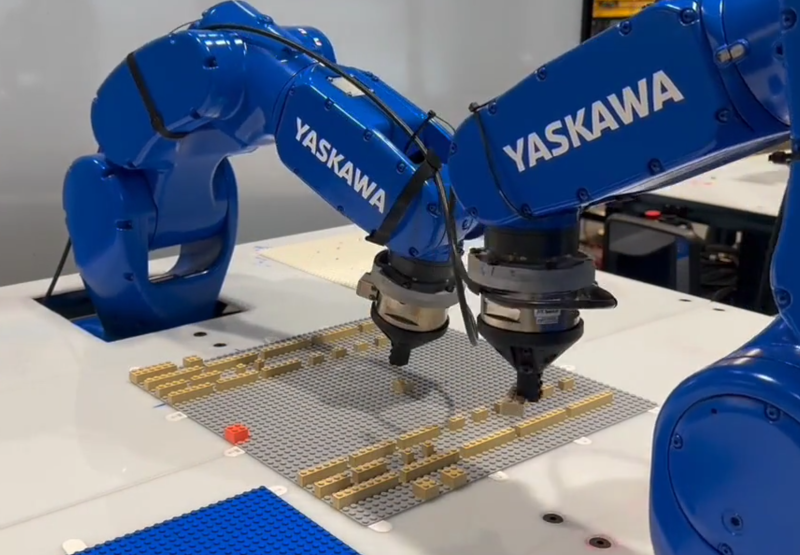

The most interesting example is when they feed the designs to robot arms and let them build the result. From text to LEGO with no human intervention! Sounds like something from a bad movie.

We wonder: if they added the more advanced LEGO sets, could we ask for our own Turing machine?

Millions of dollars, high-tech equipment, some of the world’s greatest minds, a top university, and yes, we have finally done it. Children’s toys can now be constructed by robots.

And the first webcam monitored a percolator

For a while there was the Amazing Cooler Cam webpage.

Children ‘and adults’ toys can now be constructed by robots. Taking the creativity out of the process. I still say we are heading toward Idiocracy as the final goal with this AI stuff. … Let the AI do it…

The end goal is not to have Skynet playing with building blocks so toddlers can be free from their labors to watch more Cocomelon. Toys and play are an effective way to develop more advanced capabilities. It’s why we play with toys when we are growing up, too.

Surely a missed opportunity to use the prompt “A double-decker couch”

👍

I tried “double decker couch” on the demo and didn’t succeed. The result is more of a lump than a couch. The queue is 30 minutes long, so I don’t feel like attempting it again.

Stability may also be a problem. It’s basically the same issue as overhangs and bridging in 3D printing. These models are designed to be stable and placed one brick at a time, so it may not be possible to make the span. If I were making a double-decker from LEGO, I’d make the bottom and frame first, then the top couch independently, then place the top couch on top. The AI generator would need to build an archway one brick at a time.

Robot arm types in “build a fully functioning, AI driven robot arm”. The end game is nigh

Building the processor out of legos might be a challenge. Maybe train it on minecraft redstone projects

I wondered how long it would be before someone wrote “legos”.

The plural of Lego is Lego. Or LEGO, if you prefer. Or LEGO® if you must.

I tried writing some software to generate LEGO sculptures that had different appearances from different angles, like the block characters on the front cover of Hofstadter’s “Gödel, Escher, Bach”, so each of the orthogonal perspective drawings had a meaningful outline. But the results ranged from not easy to impossible to build, because parts lacked support and it was hard to figure out how to assemble them. This might be a neat way to do something like that but with robust designs, and provide a build instruction set as well.
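For anyone curious what that looks like, here is a rough sketch of the three-silhouette idea, not the commenter’s actual software. It assumes three square binary masks of the same size (`front`, `side`, `top`, all hypothetical names), keeps a voxel only where all three outlines agree, and then flags voxels with nothing directly underneath, which is a crude proxy for the support problem described above. Rows of `front` and `side` are indexed by height `z` with `z = 0` at the bottom.

```python
def carve(front, side, top):
    """Keep voxel (x, y, z) only if it shows up in all three silhouettes.

    front[z][x]: outline seen along the y axis
    side[z][y]:  outline seen along the x axis
    top[y][x]:   outline seen from above
    """
    n = len(front)
    return {
        (x, y, z)
        for x in range(n)
        for y in range(n)
        for z in range(n)
        if front[z][x] and side[z][y] and top[y][x]
    }


def unsupported(voxels):
    """Voxels above the ground layer with no voxel directly beneath them."""
    return {
        (x, y, z)
        for (x, y, z) in voxels
        if z > 0 and (x, y, z - 1) not in voxels
    }


if __name__ == "__main__":
    full = [[1, 1], [1, 1]]
    raised = [[0, 0], [1, 1]]  # empty bottom row, filled top row
    shape = carve(raised, full, full)
    print(len(shape), len(unsupported(shape)))
```

Carving alone says nothing about buildability, which matches the complaint: the outlines come out right, but the bricks may have nothing to sit on.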

Next (logical to me) fork on the same road – introduce specially marked LEGO bricks that each stand for some kind of a command. Programming in the real world, sort of, kind of, but you get the idea. Say, each “command brick” is a FORTH word, so scanning the thing with some kind of cheap camera mated to simple sequencing software running on, say, an ESP32-CAM thingie automagically generates a FORTH program of sorts. Either that, or a humble headless Unix shell generates a piped command. Programming without a keyboard, so to speak.

Somehow I suspect this has been tried before.