To paraphrase an old joke: How do you know if someone is a Rust developer? Don’t worry, they’ll tell you. There is a move to put Rust everywhere, even in the Linux kernel. Not going fast enough for you? Then check out Asterinas — an effort to create a Linux-compatible kernel totally in Rust.

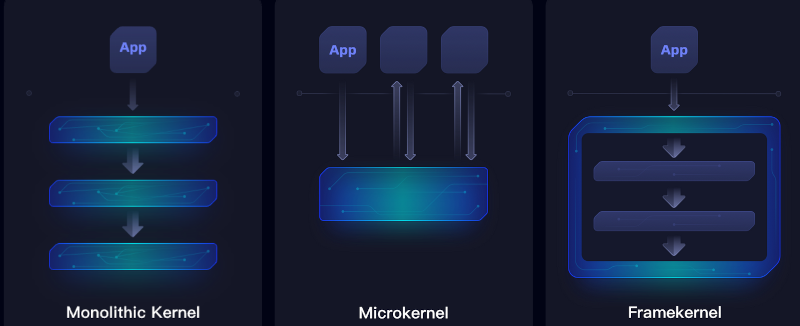

The goal is to improve memory safety and, to that end, the project describes what they call a “framekernel.” Historically, kernels have either been monolithic, all in one piece, or have employed a microkernel architecture where only bits and pieces load.

A framekernel is similar to a microkernel, but some services are not allowed to use “unsafe” Rust. This minimizes the amount of code that — in theory — could crash memory safety. If you want to know more, there is impressive documentation. You can find the code on GitHub.
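As a rough illustration of that split, here is a minimal sketch in Rust. It is not taken from the Asterinas source: the `frame` and `service` modules, the `Frame` type, and `store_and_sum` are all hypothetical names. It only shows the general idea of a small layer that may use `unsafe` behind a checked API, and a service layer where the compiler refuses `unsafe` entirely.

```rust
// Hypothetical two-layer layout; every name here is illustrative only.

/// The small privileged layer: the only place `unsafe` is permitted.
mod frame {
    /// A fixed-size buffer that exposes only bounds-checked access.
    pub struct Frame {
        bytes: [u8; 16],
    }

    impl Frame {
        pub fn new() -> Self {
            Frame { bytes: [0; 16] }
        }

        /// Safe API: validates the index before the unchecked write.
        pub fn write(&mut self, index: usize, value: u8) -> Result<(), &'static str> {
            if index >= self.bytes.len() {
                return Err("index out of bounds");
            }
            // SAFETY: `index` was checked against the buffer length above.
            unsafe { *self.bytes.get_unchecked_mut(index) = value };
            Ok(())
        }

        /// Safe read that returns `None` for an out-of-range index.
        pub fn read(&self, index: usize) -> Option<u8> {
            self.bytes.get(index).copied()
        }
    }
}

/// A service layer: any `unsafe` written in here is a compile error.
#[forbid(unsafe_code)]
mod service {
    use super::frame::Frame;

    /// Stores the values in a frame, then sums what was stored.
    pub fn store_and_sum(values: &[u8]) -> Result<u32, &'static str> {
        let mut frame = Frame::new();
        for (i, v) in values.iter().enumerate() {
            frame.write(i, *v)?;
        }
        Ok((0..values.len())
            .filter_map(|i| frame.read(i))
            .map(u32::from)
            .sum())
    }
}

fn main() {
    println!("{:?}", service::store_and_sum(&[1, 2, 3]));
    println!("{:?}", service::store_and_sum(&[0; 17]));
}
```

The service code cannot corrupt the buffer even when handed too much data; the worst it can do is get an error back from the checked API.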

Will it work? It is certainly possible. Is it worth it? Time will tell. Our experience is that no matter how many safeguards you put on code, there’s no cure-all that prevents bad programming. Of course, to take the contrary argument, seat belts don’t stop all traffic fatalities, but you could just choose not to have accidents. So we do have seat belts. If Rust can prevent some mistakes or malicious intent, maybe it’s worth it even if it isn’t perfect.

Want to understand Rust? Got ten minutes?

This is what Rust programmers should have done from the beginning. Rewrite it in Rust!

Why? Linux has thousands of man-years put into the development and a massive user base. Anything written from scratch will have a much smaller user base and a tiny fraction of the functionality.

Bringing Rust into Linux a bit at a time is a much more sensible approach.

Thousands of AAA developers (both hobbyists and corporate) would love to, but as long as Adolf Torvalds is in charge, there’s a zero chance of this happening. For Linux to move on we need to ditch the BDFL model of development and implement direct democratic process. Features could be discussed on a Reddit-like webpage and implemented (or rejected) based on amount of upvotes, instead of arbitrary “my way or the highway” mindset. I suppose some kind of blockchain voting should be used to prevent abuse and clone accounts.

What are you even talking about. Rust is already in the kernel, vetted by adolf torvalds himself.

Yes, a lot of drama is going on, sometimes valid, sometimes not…

Reddit driven development for the core operating system used for the most things today…. You think that’s a good idea?

He must be attending communist rallies too…

It’s not Linus who’s keeping Rust out of the kernel but various maintainers who are unhappy with Rust in the kernel, mainly because they don’t want to learn a new language, or maybe because they’re afraid they will lose their position if enough Rust enters the kernel.

Hmm, it’s always the others who are wrong, huh?

Maybe, just maybe, they are competent, studied the pros and cons of adding Rust, and logically deduced that it wasn’t a good idea.

Because, besides “muh memory safety”, what will Rust bring to the Linux kernel (or other programs in general)?

I mean, I get it, it’s another language, fairly new, but what makes it better at safety than, say, Ada?

some of us have production servers to maintain. we don’t want to deal with you rewriting the core utilities of linux and shipping untested implementations because you wanted to try a new language.

Linus Torvalds definitely votes to have safe code.

@cal5582 I fully agree with your “some of us have stuff to get done” stance… but that angle is a bit unfair.

Google has rewritten parts of Android in Rust as part of a larger “it doesn’t matter what language the rewrite is in as long as it’s memory-safe” and seen a corresponding decline in new memory-safety exploits. (Here’s a write-up from December 2022: https://security.googleblog.com/2022/12/memory-safe-languages-in-android-13.html )

Microsoft has rewritten parts of Windows in Rust. (Here’s a talk from 2023: https://www.youtube.com/watch?v=8T6ClX-y2AE&t=2611s )

It’s not as if Linux is jumping on some random toy moonshot.

Hell, with Rust4Linux, the whole point is to avoid rewriting the world by allowing new drivers to be written in Rust while keeping the mature C code.

No matter if written in C, Java or Rust, the fact remains that Linux is not UNIX, it’s something much worse.

Not gonna engage with that directly… But if someone really has the commitment to rewrite Linux from scratch, it does seem like a wasted opportunity not to treat it as a new OS. I’m sure even senior Linux devs have a list of things they’d do differently if they were starting over.

There are plenty of reasons, starting with the fact that there is no ecosystem and no immediate benefits for the majority of potential users.

Meanwhile a compatible kernel is not just an interesting research project, but a potential branch to test the viability of the idea. It’s not like the possible changes you mention can’t happen at all either.

But rust devs want to rewrite the ecosystem too hahaha

From what I’ve seen, it’s more that “the ecosystem” just happens to be made up of the kinds of tools that people like to rewrite as practice/hobby projects.

…sort of like how it seemed everyone’s learning project was to write yet another IRC client.

Linux is in fact not UNIX/POSIX because Linux is a kernel while UNIX/POSIX defines a userspace environment. Linux can host a UNIX/POSIX compliant environment but does not do so in almost all cases. Adelie Linux is a distribution that is working toward full compliance.

Why would anyone want to take zero lessons from the last 46 years?

45* (since the 80’s when unix work, and most OS work, generally stopped)

> there’s no cure-all that prevents bad programming

which these days seems to include AI-generated code being used without full understanding of what it does or needs to do. Every protection possible in the OS is needed more than ever.

That sounds more like fear of new tools than a measured critique. AI-generated code can be risky if misused—just like human-written code. The key is understanding and testing, not assuming humans do it better by default.

Your comment leans on appeal to fear, a hasty generalization, and an implied slippery slope—none of which make for a strong argument.

and you should learn to read :)

Vibe coding skips debugging. If a human codes, they test and debug the code, for example sending every possible value to an integer variable and seeing what breaks a function. In C/C++, syntax hides things which Rust makes sure you declare upfront.

Humans do the full work, whereas using AI code skips several key steps of development. Shortcutting vs full investment in the process. AI use can’t be a function of development if it is inherently a dysfunction. AI is for research, and is less reliable than Wikipedia.

Bad example, Wikipedia is much more reliable, and history, comments, and references are available.

Generative systems are fact auto completion that require a hand on the reins at all times.

Wikipedia is the worst source of knowledge. Most universities forbid its use.

To CCO18: then just use Wikipedia as an aggregation of other sources. Most articles have citations which can be used and verified as primary sources

You think human software developers test every possible value of every variable? You are terribly mistaken; software development needs to happen within the lifetime of THIS universe.

I keep hearing this argument – that slop code is fine as long as you understand every detail of what it’s doing – and, yeah, obviously, but isn’t that wilfully missing the point? Because understanding the details is precisely what you’re using an LLM to avoid.

If you tell me a piece of code was generated by an LLM, I don’t know whether that code is good, but I am confident the person who generated it doesn’t understand it as well as a human author would. Because there would be no reason for them to use an LLM in the first place if they were willing to do that work.

There are plenty of parts of most programs that are simple but repetitive and time consuming.

i have some anxiety about the way the world is going, rust in particular, so i wanted to take a look at this.

the first question on my mind was how they could have done enough work to consider that they have built their own kernel, when in my mind that would require an enormous amount of device driver labor. and the answer is that it only runs under qemu, which means they use the handy qemu ‘virtio’ class devices. which definitely is, as they say, a convenient way to prototype OS ideas without the real struggle of making a kernel that talks to hardware.

the next question is whether the rust object model always makes a bunch of repetitive boilerplate code and this does seem to be another project that has that attribute. Linux itself, of course, has a lot of wrappers…but this has a lot of wrappers too. it didn’t seem to reduce that. and the wrappers each seem to have a lot of verbosity, like i found pro forma accessor functions, and also wrappers that copy the same 4 variables from one struct definition to another. i was really hoping it might improve the code readability but it seems to have slightly done the opposite.

and my biggest rust fear is cargo, and this uses cargo a bunch. it pulls in a bunch of 3rd-party crates. it has a bunch of repetitive filenames: 59 directories named “src/”, 55 files named “lib.rs”, and 185 files named “mod.rs”. all of these things make me unhappy but maybe i’m being unfair. surely the fantastic set of problems i ran into the first time i used cargo years ago have mostly been cleaned up. but i really hope if linux uses rust that it doesn’t use cargo, and certainly not 3rd-party crates.

so those observations really are more about my anxieties than they are necessarily problems in this project.

but i do have one straight up criticism…if i understand correctly, the ‘framekernel’ idea’s only concrete manifestation is that they’ve scattered deny(unsafe_code) around their codebase. that’s just the regular rust practice of only using unsafe when you need to. kind of no duh, and i don’t think it means this is fundamentally a different kind of kernel. it seems to be monolithic with the separation between services only guaranteed by the language…all rust kernels would presumably be like this.

mod.rs and lib.rs are a “don’t repeat yourself” measure built into standard rust; looking at them without their directory name is essentially incorrect. mod.rs avoids putting the root source file of a module outside of the directory for that module, so instead of having both some_module.rs and a some_module/ directory next to it you just have some_module/ with mod.rs and any submodule files. Nothing in the directory needs to repeat the module’s name, which is good practice. lib.rs is just the entry point for a library crate, and if there’s 55 of them then they’ve split the project into 55 libraries and probably a few executables (I’d expect four, given that there are 59 src/ directories that also are part of the usual crate layout). Crates are the unit of code that the compiler takes as input, not just code modularity or a cargo thing. main.rs is probably there a couple times too, although there could also be some src/bin/some_binary_name.rs. All of this is built into the toolchain so this is the default layout and using a different one is less convenient.

Seems a bit like complaining how C programmers frequently have a function called “main” in their code for executables.

#![deny(unsafe_code)] actually isn’t quite the standard practice; at the top of the crate it makes compilation fail if there’s an unsafe block anywhere in the crate, and does so from a central location that is much less error-prone to validate than a search or just enforcing appropriate use of unsafe at code review. It probably would have ended up mostly that way anyway, and it’s not an unusual line to draw, but it’s not the default.
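For readers who have not used the lint, here is a small sketch of how it behaves. The `app` module and both function names are made up for illustration, and the attribute is applied to a module rather than the crate root only so the snippet stays self-contained; in a real crate the equivalent would be `#![deny(unsafe_code)]` at the top of the root source file. The point it tries to show is that `deny` turns `unsafe` into a compile error while still allowing an explicit, greppable `#[allow(unsafe_code)]` opt-out, whereas `forbid` would reject that opt-out as well.

```rust
// Sketch of the `unsafe_code` lint levels; all names are hypothetical.

#[deny(unsafe_code)]
mod app {
    /// Ordinary safe code: unaffected by the lint.
    pub fn first_byte(data: &[u8]) -> Option<u8> {
        data.first().copied()
    }

    /// An explicit opt-out. Under `deny` this compiles; if the module were
    /// marked `forbid(unsafe_code)` instead, this `allow` would be rejected.
    #[allow(unsafe_code)]
    pub fn first_byte_unchecked(data: &[u8]) -> Option<u8> {
        if data.is_empty() {
            return None;
        }
        // SAFETY: the slice was just checked to be non-empty.
        Some(unsafe { *data.get_unchecked(0) })
    }

    // Removing the `#[allow(unsafe_code)]` above would make the `unsafe`
    // block a hard compile error because of the module-level `deny`.
}

fn main() {
    println!("{:?}", app::first_byte(&[7, 8]));
    println!("{:?}", app::first_byte_unchecked(&[7, 8]));
}
```

Either way, a reviewer only has to search for `allow(unsafe_code)` to find every place that steps outside the safe subset.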

i’ll admit that it’s possibly harmless, or mostly harmless, or at least not very harmful. but it’s absolutely not comparable to main() in C. especially the “src/” directories really bum me out. the whole thing is a source tree…to make directories named src/ under the head of a source tree. yuck. android makes me do a bunch of that sort of garbage these days. it’s just clutter and it makes me feel bad about dominant rust development patterns.

my real question is just personal. i have an interest in new languages and i’ve run into rust a few times now and each time it gives me a big downer feeling. that’s my feeling.

and i couldn’t possibly disagree more strenuously about deny(unsafe_code). rust maintainers have resisted for years repeated requests by people to make deny(unsafe_code) the default, or a configurable default. it’s basically a way of saying you don’t trust rust, your contributors, or your process. given that it’s pulling in 3rd-party crates, i imagine the deny actually means something…and they really don’t / shouldn’t trust their 3rd-party contributors. safety doesn’t come from a keyword…it comes from a code audit, and deny is not a code audit. and 3rd party crates don’t make a code audit impossible but they sure do change the practice of it.

but you replied to a point i didn’t even make. i just said, it’s not a new kind of kernel. it’s just a regular monolithic kernel, but using rust. scattering deny around your source tree doesn’t differentiate it from a monolithic kernel.

“rust maintainers have resisted for years repeated requests by people to make deny(unsafe_code) the default”

Because it would be pointless.

There’s already a deny-by-default mechanism in the `unsafe` keyword itself and making `deny(unsafe_code)` the default would literally be requiring that you pair your `unsafe` with a `#[yes_really]` annotation.

“or a configurable default”

That’s what putting `#![deny(unsafe_code)]` at the top of your root source file is… and it’s a per-project default because you want to make sure everyone who clones your repo gets the same settings. (Personally, I think git’s ~/.gitignore is a terrible idea because anyone who checks out your repos without that out-of-band info will see different `git st` output.)

“safety doesn’t come from a keyword…it comes from a code audit”

I’ll agree there.

First, I think that they should have used `forbid(unsafe_code)` (you can’t re-`allow` if you `forbid`).

Second, `deny(unsafe_code)` or `forbid(unsafe_code)` is a PR reviewing aid. The idea is that you slap `#![forbid(unsafe_code)]` on the modules the junior devs get to work on and have the senior devs encapsulate all use of `unsafe` behind correct-by-construction APIs that the junior devs can’t use to violate memory safety.

Umm, RedoxOS seems to be coming along…

all RTOS use this way ;-)

kernel linux is a normal process, and small RTOS working on metal

RUST is NOT even finalised, there is NO ANSI/ISO spec..

Why build a skyscraper on sand?

What would an ANSI/ISO spec do?

Rust as implemented by rustc is already pinned down more tightly by the regression/conformance suite they run on every `push` than C is by ANSI/ISO with all the implementation-defined holes and undefined-behaviour twister they had to put in to get the various proprietary compiler authors to shake hands on a single spec that their existing compilers would require minimal modification to comply with.

…and, even if that weren’t the case, the Linux kernel isn’t written in ANSI/ISO C, it’s written in GNU C.

https://maskray.me/blog/2024-05-12-exploring-gnu-extensions-in-linux-kernel

An ANSI/ISO Rust wouldn’t prevent the rustc devs from continuing to build out a superset of what’s been pinned down, similar to how GCC races ahead of ANSI/ISO C with GNU C.

Hell, the LLVMLinux effort was a little bit of removing things the Linux devs had changed their mind on anyway (eg. use of VLAs) and mostly teaching LLVM Clang to understand GNU C.

An ISO/IEC standard provides a solid reference that covers everything from syntax to libraries. It’s a lifesaver in corporate life when shit hits the fan and one has to find a creative way to shift the blame so as not to get fired. A few times MISRA came in useful too, especially when other devs in the project were too lazy to look through the company’s online library and vacuum up whatever useful PDFs were there that could not be obtained from Libgen. And let’s be real. C is still, and will remain, the thing in the embedded systems industry. By learning C17 instead of K&R as done at the university, I’m not just picking up a skill but I’m fitting myself with knowledge that’s relevant and in demand. It’s like having a golden ticket to job opportunities that many other languages can’t compete with.

I spent so long dealing with Fortran and C compiler bugs in my HPC days that I can categorically say that all the ISO standards did was allow blame shifting by the vendor. Absolutely useless if you want to get real work done. At least for Rust I can see the tests that de facto define the behaviour, and contribute new ones if I find a corner case. Far more useful than a spec that makes so much ‘undefined’ that it’s useless.

rust seems like one of those things that people hype because it’s new and not because it’s better.