Look inside a science fiction computer, and you’ll probably see tubes and cubes that emit light. Of course, it’s for effect, but the truth is, people do think light computing may be the final frontier of classical computing power. Engineers at the University of Southern California Information Sciences Institute and the University of Wisconsin-Madison are showing off a workable photonic latch — a memory element that uses light.

The device is built on a commercial process, the GlobalFoundries (GF) Fotonix silicon photonics platform, and, like DRAM, it regenerates periodically to prevent loss of the memory contents.

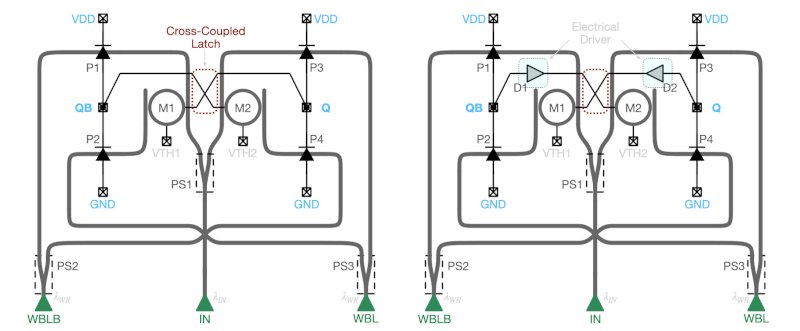

On the device, you’ll find a combination of tiny photodiodes, micro-ring resonators, and optical waveguides. Simulations show the memory element can operate at 20 GHz and might even be readable at 50 or 60 GHz.

If you want to dive deeper, the work is based on a paper from earlier in the year.

First steps to isolinear rods

Just make sure you’re wearing your Isotoners.

“Look inside a science fiction computer, and you’ll probably see tubes and cubes that emit light.”

https://ntrs.nasa.gov/citations/20190030489

For some reason, the title of the article made me think of using a light-based version of delay line memory.

The internet says the SFH 203 P photodiode has a switching time of 5 ns. Light covers roughly 1 foot in 1 ns, so 5 feet gets you one bit. That’s quite a lot of optical fibre to coil up. Still, there’s no reason why not. There’s always that mirror on the moon, weather permitting.

This may be wrong but some quick maths suggests 946969 miles per gigabit.
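For anyone who wants to check that figure, here is a minimal sketch of the arithmetic. It assumes the rule of thumb of 1 foot of travel per nanosecond, the 5 ns switching time quoted above, and a gigabit of 10^9 bits; the function and parameter names are made up for illustration.

```python
FEET_PER_MILE = 5280

def delay_line_length_miles(bits, switch_time_ns=5.0, feet_per_ns=1.0):
    """Length of an optical delay line that keeps `bits` in flight at once."""
    # Each bit occupies the distance light covers during one switching period.
    feet_per_bit = switch_time_ns * feet_per_ns
    return bits * feet_per_bit / FEET_PER_MILE

miles = delay_line_length_miles(1_000_000_000)  # one gigabit, taken as 10^9 bits
print(f"{miles:,.0f} miles per gigabit")
```

Under those assumptions the result lands just under 947,000 miles, so the quick maths holds up. A slower medium such as glass fibre would shorten the line, since each bit then occupies less distance.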

The “1 foot in 1 ns” figure is for light traveling at c, i.e. in a vacuum.

Here on Earth, light travels slower than c when we inconvenience the photons by sending them on an adventure through glass and plastics and such.

It’s about 7 inches per 1 ns in a 50/125 multimode glass fiber optic cable, for instance.
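To put rough numbers on that: the speed of light in a medium is c divided by the medium's refractive index. The sketch below assumes an index of about 1.48 for silica fiber, which is an illustrative value and not one taken from a particular datasheet; the function name is also just for this example.

```python
C_M_PER_S = 299_792_458      # speed of light in vacuum, exact by definition
INCHES_PER_M = 1 / 0.0254    # one inch is exactly 0.0254 m

def inches_per_ns(refractive_index=1.0):
    """Distance light covers in one nanosecond in a medium with the given index."""
    metres = C_M_PER_S / refractive_index * 1e-9
    return metres * INCHES_PER_M

print(f"vacuum: {inches_per_ns():.1f} in/ns")
print(f"fiber (n = 1.48, assumed): {inches_per_ns(1.48):.1f} in/ns")
```

With the assumed index this comes out a little under 12 inches per nanosecond in a vacuum and close to 8 inches in fiber, the same ballpark as the figure above. The exact value depends on the specific fiber and wavelength.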

Photonic computing is always going to be a solution looking for a problem.

Photons are expensive per bit, energetically speaking, and (as the article says) they take up a lot of space. Any optical computing device will be large and power-hungry, with no prospect of reducing either problem.

There are and will be niche applications where they perform really well (clocks, long-haul interconnects, some kinds of pattern matching and identification), but as a replacement for a general-purpose CPU or RAM? Nope.