Machine learning has come a long way in the last decade, as it turned out that throwing huge wads of computing power at piles of linear algebra makes creating artificial intelligence relatively easy. OpenAI have been working in the field for a while now, and recently observed some exciting behaviour in a hide-and-seek game they built.

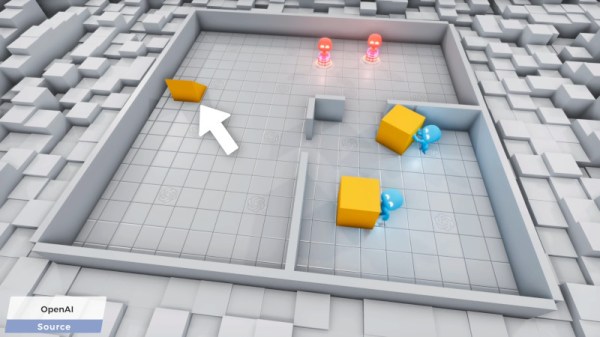

The game itself is simple: two teams of AI bots play hide-and-seek, with the red bots being rewarded for spotting the blue ones, and the blue ones being rewarded for avoiding their gaze. Initially, nothing of note happens, but as the bots randomly run around, they slowly learn. Over millions of trials, the seekers first learn to find the hiders, and the hiders respond by building barriers to hide behind. The seekers then learn to use ramps to vault over the barriers, while the blue bots learn to bend the game’s physics and throw the ramps out of the playfield. It ends with the seekers learning to skate around on blocks and the hiders building tight little barriers. It’s a continual arms race of techniques between the two sides, organically developed as the bots play against each other over time.
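To make the reward idea concrete, here is a minimal sketch of how such a scheme might look, assuming a zero-sum, team-level signal handed out once per timestep. The function name `team_rewards` and the +1/-1 values are illustrative assumptions, not taken from OpenAI's code.

```python
def team_rewards(any_hider_seen):
    """Return a zero-sum reward for each team for one timestep.

    Hypothetical sketch: the +1/-1 values are assumed for illustration.
    """
    # Seekers (red) gain when at least one hider is in view;
    # hiders (blue) receive the exact opposite.
    seeker_reward = 1.0 if any_hider_seen else -1.0
    return {"seekers": seeker_reward, "hiders": -seeker_reward}


# One timestep where a seeker has line of sight to a hider.
print(team_rewards(any_hider_seen=True))
```

Because one team's gain is always the other's loss in this sketch, any new trick that helps one side immediately creates pressure on the other to counter it, which is the arms-race dynamic the bots display.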

It’s a great study, and it’s particularly interesting to note how much longer behaviours take to develop when the team switches from a basic fixed scenario to a changeable world with more variables. We’ve seen other interesting gaming efforts with machine learning, too, like teaching an AI to play Trackmania. Video after the break.