You don’t speak the language of dogs, and yet you can tell when one is angry, excited, or down in the dumps. How does that work, and can it be replicated by a robot? That’s the question [Megha Sharma] set out to study as part of her graduate research at the University of Manitoba in Winnipeg.

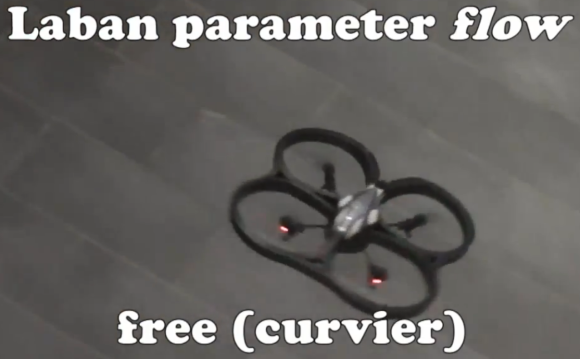

The experiment starts by teaching the robot, a quadcopter, a series of movement patterns meant to mimic emotion. How, you might ask? Apparently you hire an actor trained in Laban Movement Analysis, a method of describing and interpreting how the human body moves. It’s no surprise that some actors have the technique in their arsenal. The training phase uses stationary cameras (much like the motion-capture setups in those acrobatic quadcopter projects) to record the device as the actor moves it by hand.

Phase two of the experiment involves playing back the recorded motion with the quadcopter flying under its own power. A human test subject watches each performance and is asked to describe how the quadcopter feels. The surprising thing is that the subjects anthropomorphise the inanimate device even further than the question invites, making up small stories about what the thing actually wants.

It’s an interesting way to approach the problem of the uncanny valley in robotic projects.