We were initially skeptical of this article by [Aleksey Statsenko] as it read a bit conspiratorially. However, he proved the rule by citing his sources and we could easily check for ourselves and reach our own conclusions. There were fatal crashes in Toyota cars due to a sudden unexpected acceleration. The court thought that the code might be to blame, two engineers spent a long time looking at the code, and it did not meet common industry standards. Past that there’s not a definite public conclusion.

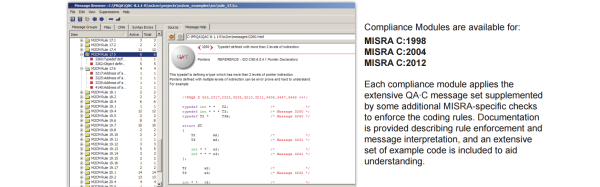

[Aleksey] has a tendency to imply that normal legal proceedings and recalls for design defects are a sign of a sinister and collaborative darker undercurrent in the world. However, this article does shine a light on an actual dark undercurrent. More and more things rely on software than ever before. Now, especially for safety critical code, there are some standards. NASA has one and in the pertinent case of cars, there is the Motor Industry Software Reliability Association C Standard (MISRA C). Are these standards any good? Are they realistic? If they are, can they even be met?

When two engineers sat down, rather dramatically in a secret hotel room, they looked through Toyota’s code and found that it didn’t even come close to meeting these standards. Toyota insisted that it met their internal standards, and further that the incidents were to be blamed on user error, not the car.

So the questions remain. If they didn’t meet the standard why didn’t Toyota get VW’d out of the market? Adherence to the MIRSA C standard entirely voluntary, but should common rules to ensure code quality be made mandatory? Is it a sign that people still don’t take software seriously? What does the future look like? Either way, browsing through [Aleksey]’s article and sources puts a fresh and very real perspective on the problem. When it’s NASA’s bajillion dollar firework exploding a satellite it’s one thing, when it’s a car any of us can own it becomes very real.