Sleep studies use a variety of instruments to measure bodily activity during sleep, and from that, the quality of the sleep itself. Many of them rely on techniques that perhaps aren’t so easy to replicate on the bench, but an EEG, or electroencephalograph, for measuring brain waves can be built around a readily-available module. [Ben Jabituya] shows us a sleep monitor using one of these modules, an EEG Mikroe Click.

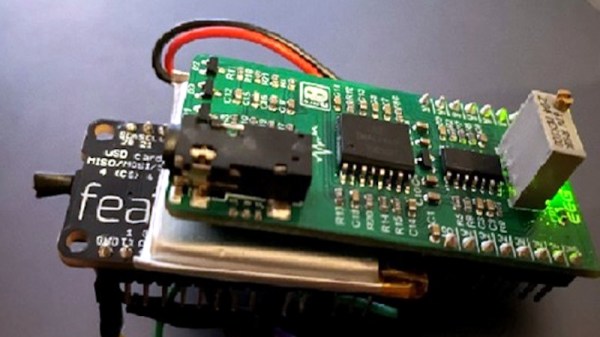

The brains of the operation are an Adafruit Adalogger Feather M0, which is hooked up to a headband containing the sensing electrodes. The write-up includes a round-up of the available boards, which should be handy for anyone experimenting in this field. The firmware, meanwhile, was written using the Arduino IDE and logs raw sample data to an SD card. One surprise is just how little space a whole night’s results take up.
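To see why a night of raw EEG data fits comfortably on an SD card, a back-of-envelope estimate helps. The write-up's actual sample rate and storage format aren't given here, so the figures below are assumptions for illustration only: one channel, 256 samples per second, each sample stored as a 16-bit binary integer, and eight hours of recording. `night_storage_bytes` is a hypothetical helper, not part of the project.

```python
# Back-of-envelope estimate of SD card space for one night of raw EEG samples.
# ASSUMPTIONS (not from the write-up): sample rate, sample width and duration
# are illustrative values; the real firmware may log differently.


def night_storage_bytes(sample_rate_hz: float, bytes_per_sample: int,
                        hours: float, channels: int = 1) -> int:
    """Size in bytes of a headerless binary log (hypothetical helper)."""
    samples_per_channel = int(sample_rate_hz * hours * 3600)
    return samples_per_channel * bytes_per_sample * channels


if __name__ == "__main__":
    # Assumed: 256 Hz, one channel, 16-bit (2-byte) samples, 8 hours asleep.
    size = night_storage_bytes(256, 2, 8)
    print(f"{size} bytes = {size / 1e6:.1f} MB")  # 14745600 bytes = 14.7 MB
```

Under these assumptions the whole night comes to roughly 15 MB, a tiny fraction of even a small SD card. Logging the same samples as human-readable text rather than packed binary would make the file several times larger, but still modest.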

Finally, a Python script processes the data into a spectrogram showing brain activity through the night. He envisages using the device to trigger lucid dreaming during REM sleep, but we can see it being rather useful for sleep disorder sufferers, too. Take a look at it in the video below the break.
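The project's own script isn't reproduced here, but the core of turning a long recording into a spectrogram is a short-time Fourier transform: chop the signal into overlapping windows, take the FFT of each, and stack the power spectra side by side. The sketch below is a minimal NumPy-only version, assuming the raw samples are already loaded into an array and the sample rate `fs` is known; the segment length and overlap defaults are arbitrary choices, not values from the write-up.

```python
import numpy as np


def spectrogram(x, fs, nperseg=512, noverlap=256):
    """Short-time Fourier power spectrogram with a Hann window.

    Returns (freqs_hz, times_s, power), where power has shape
    (len(freqs_hz), len(times_s)) in arbitrary units.
    """
    x = np.asarray(x, dtype=float)
    if len(x) < nperseg:
        raise ValueError("signal is shorter than one segment")
    step = nperseg - noverlap
    n_frames = 1 + (len(x) - nperseg) // step
    window = np.hanning(nperseg)

    # One row per overlapping segment; subtract each segment's mean to drop DC offset.
    frames = np.stack([x[i * step:i * step + nperseg] for i in range(n_frames)])
    frames = frames - frames.mean(axis=1, keepdims=True)

    # One-sided FFT of each windowed segment, then power normalised by window energy.
    spectrum = np.fft.rfft(frames * window, axis=1)
    power = (np.abs(spectrum) ** 2).T / np.sum(window ** 2)

    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    times = (np.arange(n_frames) * step + nperseg / 2) / fs  # segment centres
    return freqs, times, power


if __name__ == "__main__":
    # Synthetic test signal: one minute of a 10 Hz sine (alpha-band-like) at 256 Hz.
    fs = 256
    t = np.arange(60 * fs) / fs
    f, tt, p = spectrogram(np.sin(2 * np.pi * 10 * t), fs)
    print(f[np.argmax(p, axis=0)][:3])  # peak frequency of the first few segments
```

For a real recording, the result can be drawn with Matplotlib's `pcolormesh` (time on the x axis, frequency on the y axis), and `scipy.signal.spectrogram` offers the same computation with more windowing and scaling options.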